With over 35% of Cassandra workloads now running in the cloud, ensuring consistent performance across distributed nodes is a growing challenge. Many teams struggle with fragmented monitoring tools, costly data pipelines, and poor correlation between metrics and logs.

CubeAPM is the best solution for Cassandra monitoring, offering unified observability across metrics, logs, and error tracing in one OpenTelemetry-native platform. With built-in Cassandra exporters, smart sampling, and predictive dashboards, CubeAPM simplifies root-cause analysis and delivers real-time insights at a predictable cost.

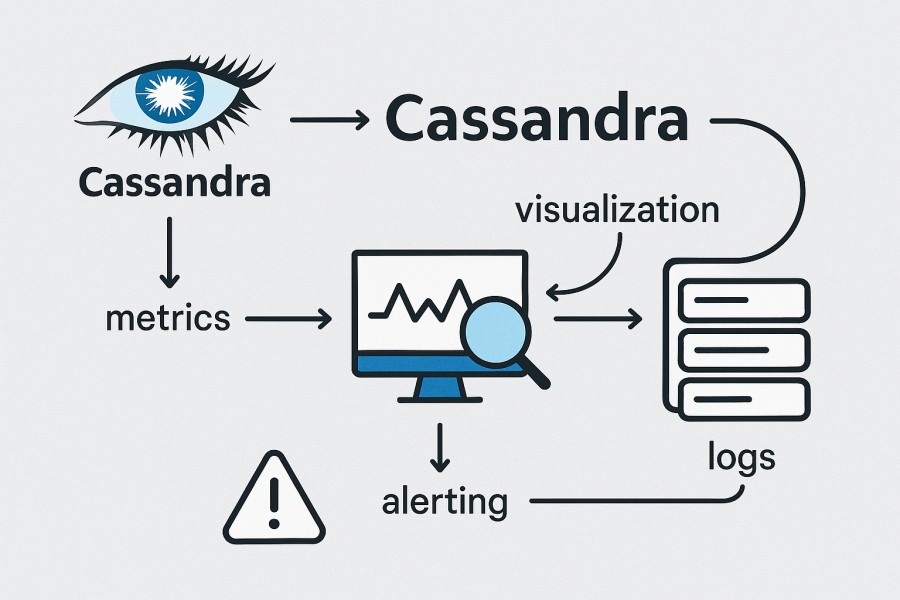

In this article, we’ll explore what Cassandra database monitoring is, why it matters, the key metrics to track, and how CubeAPM enables full-stack visibility for your Cassandra databases.

What is Cassandra Monitoring?

Apache Cassandra is a highly scalable, distributed NoSQL database designed to handle massive volumes of structured, semi-structured, and unstructured data across multiple nodes without a single point of failure. Cassandra is ideal for use cases that demand 24/7 uptime, fault tolerance, and low-latency performance—such as e-commerce transactions, IoT event processing, financial analytics, and real-time personalization at scale.

Monitoring Cassandra databases is critical for ensuring performance consistency, data integrity, and operational efficiency. When properly instrumented, Cassandra monitoring helps teams:

- Detect performance bottlenecks early: Identify slow queries, overloaded nodes, and GC pauses before they affect SLAs.

- Optimize resource usage: Track CPU, disk, and memory utilization to catch hot spots and uneven data distribution early.

- Maintain replication and consistency: Monitor read/write latencies and consistency-level failures to catch data drift between replicas.

- Ensure scalability and uptime: Analyze node health, compactions, and repair tasks for stable cluster growth.

Example: Using Cassandra for Real-Time Analytics

A global ride-sharing company uses Cassandra to process millions of GPS events per second across multiple regions. Without real-time monitoring, sudden spikes in write latency during peak hours once led to delayed ride-matching and user frustration.

By implementing continuous Cassandra monitoring with CubeAPM, the company correlated JVM heap usage with compaction tasks, optimized GC settings, and reduced request latency by over 40%, ensuring faster, more reliable analytics at scale.

Why Monitoring Cassandra Databases Is Crucial

Preserve replica consistency and prevent divergence

Cassandra’s distributed architecture relies on mechanisms like hinted handoff, anti-entropy repair, and read repair to synchronize data across replicas. Without monitoring metrics such as hint backlog, repair progress, or coordinator read/write timeouts, replicas may diverge or fail consistency checks during node failures or maintenance windows.

Catch compaction stalls, tombstone overhead, and I/O spikes

When compactions lag or tombstones pile up, reads must scan more SSTables and skip more stale data, driving p99 latency sharply upward. Monitoring PendingCompactions, CompletedCompactions, tombstone scan counts, and compaction throughput lets you detect these emergent issues well before user impact.

Track cache efficiency and memory/memtable behavior

Cassandra relies on caches (key cache, row cache) and memtables to speed reads and writes. A drop in cache hit rates or surging memtable flush frequency may indicate misconfiguration, workload change, or memory pressure. Observing cache hit ratios, flush counts, and heap/memory usage helps you fine-tune performance.

Understand thread pools, backpressure & internal latency

Internal thread pools (read, mutation, request, compaction) can become saturated under load. Monitoring their queue depths, blocked threads, and latency gives you early warning when pressure is building. Cassandra’s official metric docs emphasize that these internal bottlenecks often precede visible service degradation.

Correlate system signals with database symptoms

Many Cassandra performance issues begin at the OS or JVM layer (GC pauses, disk I/O saturation during compaction, network latency between nodes) and manifest downstream as coordinator timeouts or query failures. Only by correlating across layers can you distinguish “slow disk” from “bad query.” DataStax’s recommended metric set (including GC, thread pools, and file system stats) underscores this necessity.

Reduce business risk from downtime and latency impact

Although not Cassandra-specific, industry studies consistently show steep costs for downtime. For instance, the 2024 ITIC report notes that more than 90% of firms say hourly downtime costs exceed $300,000, and 41% place it between $1M and $5M per hour. Monitoring, alerting, and capacity planning are essential in high-stakes environments such as e-commerce, financial systems, and SaaS platforms.

Avoid observability overload in large clusters

When your Cassandra cluster scales to dozens or hundreds of nodes with many keyspaces and tables, naive metric collection can explode into tens of thousands of time series, adding processing overhead or telemetry cost overruns. Use metric cardinality control, selective sampling, and focused dashboards to stay efficient without losing sight of key signals.
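A rough way to see the problem: per-table series scale as nodes × tables × metrics per table × label variants (such as percentiles). The Python sketch below computes that estimate and shows allowlist filtering in plain code. The metric names, keyspace names, and allowlists are hypothetical, and in a real pipeline you would more likely drop series at scrape time (for example with Prometheus metric_relabel_configs in the collector's prometheus receiver).

```python
"""Cardinality estimate and allowlist filter for per-table Cassandra metrics (illustrative)."""


def estimate_series(nodes: int, tables: int, metrics_per_table: int, variants: int) -> int:
    """Approximate number of time series produced by per-table metrics.

    variants: label combinations per metric, e.g. 3 for p50/p95/p99.
    """
    return nodes * tables * metrics_per_table * variants


def filter_series(series: list[dict], keep_metrics: set[str], keep_keyspaces: set[str]) -> list[dict]:
    """Keep allowlisted metric names; per-table series also need an allowlisted keyspace.

    Node-level series (no 'keyspace' label) pass if their name is allowlisted.
    """
    kept = []
    for s in series:
        if s["name"] not in keep_metrics:
            continue
        keyspace = s.get("labels", {}).get("keyspace")
        if keyspace is not None and keyspace not in keep_keyspaces:
            continue
        kept.append(s)
    return kept


# Hypothetical cluster: 30 nodes, 200 tables, 20 per-table metrics, 3 percentiles each.
print(estimate_series(30, 200, 20, 3))  # 360000

# Hypothetical series; names are examples, not a specific exporter's output.
sample = [
    {"name": "cassandra_table_read_latency", "labels": {"keyspace": "payments", "table": "tx"}},
    {"name": "cassandra_table_read_latency", "labels": {"keyspace": "system", "table": "local"}},
    {"name": "cassandra_table_bloom_filter_false_ratio", "labels": {"keyspace": "payments", "table": "tx"}},
    {"name": "cassandra_pending_compactions", "labels": {}},
]
kept = filter_series(
    sample,
    keep_metrics={"cassandra_table_read_latency", "cassandra_pending_compactions"},
    keep_keyspaces={"payments"},
)
print([s["labels"].get("keyspace") for s in kept])  # ['payments', None]
```

With these assumed numbers the estimate reaches 360,000 series before filtering, which is why per-table metrics are usually the first place to apply an allowlist.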

Key Cassandra Metrics to Monitor

Monitoring Apache Cassandra effectively means tracking metrics across several layers — from nodes and compaction to cache and replication. These metrics help you understand performance bottlenecks, replication lag, and overall cluster health. Below are the most important categories and their key metrics, along with what they mean and practical thresholds to guide alerting.

Node Health and Availability

Your cluster’s stability depends on every node functioning properly. Node-level metrics help detect failures, restarts, or unbalanced loads early.

- LiveNodes: Tracks the number of active nodes in the cluster. A drop here may indicate a node crash, network partition, or failed startup sequence. Threshold: Should always match total configured nodes; alert if below 100% for more than 2 minutes.

- DownNodes: Measures nodes that are unresponsive to gossip or heartbeat signals. Persistent down nodes can cause consistency issues and replication lag. Threshold: >0 triggers immediate alert; critical if lasting over 5 minutes.

- Uptime: Tracks how long each node has been running without interruption. Frequent restarts often point to heap exhaustion or crash loops. Threshold: Investigate if uptime resets multiple times within 24 hours.
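For a quick cross-check of LiveNodes and DownNodes outside JMX, `nodetool status` prints one row per node that starts with a two-letter code: U or D for up/down, followed by N, L, J, or M for normal, leaving, joining, or moving. The Python sketch below parses that text and compares it with the nodes you expect. The sample output and addresses are made up, and real output has extra columns (load, tokens, ownership, host ID, rack) that the parser simply ignores.

```python
import re

# Node rows begin with U/D (up/down) followed by N/L/J/M (normal/leaving/joining/moving).
NODE_ROW = re.compile(r"^([UD])([NLJM])\s+(\S+)")


def parse_nodetool_status(output: str) -> dict[str, str]:
    """Map each node address to 'UP' or 'DOWN' based on `nodetool status` text."""
    nodes = {}
    for line in output.splitlines():
        match = NODE_ROW.match(line.strip())
        if match:
            nodes[match.group(3)] = "UP" if match.group(1) == "U" else "DOWN"
    return nodes


def down_nodes(expected: set[str], status: dict[str, str]) -> set[str]:
    """Expected nodes that are reported DOWN or are missing from the output entirely."""
    return {node for node in expected if status.get(node) != "UP"}


# Made-up output for a 3-node datacenter (columns trimmed for brevity).
SAMPLE = """\
Datacenter: dc1
===============
Status=Up/Down
|/ State=Normal/Leaving/Joining/Moving
--  Address    Load      Tokens  Owns (effective)  Rack
UN  10.0.0.11  1.2 GiB   16      33.3%             rack1
DN  10.0.0.12  1.1 GiB   16      33.3%             rack1
UN  10.0.0.13  1.3 GiB   16      33.3%             rack1
"""

status = parse_nodetool_status(SAMPLE)
print(down_nodes({"10.0.0.11", "10.0.0.12", "10.0.0.13", "10.0.0.14"}, status))
```

An empty result corresponds to LiveNodes matching the configured node count. A node absent from the output is treated as down, since it may never have joined the ring.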

Read and Write Latency

Latency metrics reveal query responsiveness and cluster health under load. High latency often indicates compaction pressure, poor data modeling, or GC pauses.

- ReadLatency: Read request latency, recorded by Cassandra in microseconds as a distribution; track percentiles such as p99 rather than the average. Increases may result from slow disks, tombstone scans, or cache misses. Threshold: p99 > 20 ms indicates emerging read issues.

- WriteLatency: Write request latency, also recorded in microseconds and best tracked as percentiles. A sudden spike can mean overloaded memtables or pending compactions. Threshold: p99 > 10 ms; critical beyond 25 ms.

- CoordinatorReadLatency: Measures read latency as seen by the coordinator node, including the time spent waiting for replicas to respond. Coordinator latency well above the table's local ReadLatency suggests inter-node communication delays or slow replicas. Threshold: p99 > 15 ms; investigate cross-AZ or network issues.
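The latency thresholds above can be encoded directly. This sketch assumes p99 values arrive in microseconds, which is how Cassandra records latency; if your exporter converts units (for example to seconds), adjust the conversion. The nearest-rank helper is one simple way to get a p99 from raw per-request timings such as client-side span durations; it is not how Cassandra itself computes its percentiles.

```python
import math

# (warning, critical) p99 thresholds in milliseconds, taken from the list above.
# None means the list gives no separate critical level.
P99_THRESHOLDS_MS = {
    "ReadLatency": (20.0, None),
    "WriteLatency": (10.0, 25.0),
    "CoordinatorReadLatency": (15.0, None),
}


def classify_p99(metric: str, p99_us: float) -> str:
    """Return 'ok', 'warning', or 'critical' for a p99 value given in microseconds."""
    warning_ms, critical_ms = P99_THRESHOLDS_MS[metric]
    p99_ms = p99_us / 1000.0
    if critical_ms is not None and p99_ms > critical_ms:
        return "critical"
    if p99_ms > warning_ms:
        return "warning"
    return "ok"


def nearest_rank_percentile(samples: list[float], pct: float) -> float:
    """Nearest-rank percentile of raw samples, with pct in (0, 100]."""
    if not samples:
        raise ValueError("no samples")
    ordered = sorted(samples)
    rank = math.ceil(pct * len(ordered) / 100)  # 1-based rank
    return ordered[max(rank, 1) - 1]


print(classify_p99("WriteLatency", 12_500))  # warning (12.5 ms)
print(classify_p99("WriteLatency", 31_000))  # critical (31 ms)
print(classify_p99("ReadLatency", 8_000))    # ok (8 ms)
print(nearest_rank_percentile(list(range(1, 101)), 99))  # 99
```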

Compaction and Storage

Compaction keeps read paths efficient and storage balanced. Monitoring these metrics prevents SSTable bloat and I/O bottlenecks.

- PendingCompactions: Shows how many compactions are waiting to run. A growing backlog indicates disk saturation or sustained heavy write throughput. Threshold: >100 pending tasks signals a backlog; critical if >500.

- CompletedCompactions: Tracks total completed compactions. A steady rise reflects healthy maintenance; a flat counter while compactions are pending suggests blocked compaction threads. Threshold: No increase for >10 min suggests compaction stalls.

- TombstoneScanned: Number of tombstones (deletion markers) scanned per read. Excessive tombstones slow reads dramatically. Threshold: >1000 tombstones per read is concerning (this matches Cassandra's default tombstone_warn_threshold); review delete patterns and TTL usage.

- SSTablesPerRead: Indicates how many SSTables are read per query. High counts degrade performance due to disk I/O. Threshold: >5 SSTables per read indicates need for compaction tuning.
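PendingCompactions and CompletedCompactions are most useful read together: a backlog is worrying, but a backlog with a flat completed counter points to a stall. The sketch below applies the thresholds from the list to a series of samples; the CompactionSample shape and the one-minute sampling in the example are assumptions for illustration.

```python
from dataclasses import dataclass


@dataclass
class CompactionSample:
    ts: float       # Unix timestamp in seconds
    pending: int    # PendingCompactions gauge
    completed: int  # CompletedCompactions counter (only increases between restarts)


def backlog_level(pending: int) -> str:
    """>100 pending is a backlog, >500 is critical (thresholds from the list above)."""
    if pending > 500:
        return "critical"
    if pending > 100:
        return "warning"
    return "ok"


def compaction_stalled(samples: list[CompactionSample], window_s: float = 600) -> bool:
    """True if work is pending and the completed counter has been flat for at least window_s.

    samples must be ordered oldest first.
    """
    if not samples or samples[-1].pending == 0:
        return False
    latest = samples[-1]
    run_start = latest
    # Walk backwards to the first sample of the trailing run with an unchanged counter.
    for s in reversed(samples):
        if s.completed != latest.completed:
            break
        run_start = s
    return latest.ts - run_start.ts >= window_s


# Eleven one-minute samples: 150 pending, counter stuck at 42 for 10 minutes.
flat = [CompactionSample(ts=60 * i, pending=150, completed=42) for i in range(11)]
# Same backlog, but the counter keeps moving.
moving = [CompactionSample(ts=60 * i, pending=150, completed=42 + i) for i in range(11)]

print(backlog_level(flat[-1].pending), compaction_stalled(flat), compaction_stalled(moving))
# warning True False
```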

Memory, GC, and JVM Health

Since Cassandra runs on the JVM, monitoring memory and garbage collection is vital for preventing pauses and throughput drops.

- HeapMemoryUsage: Total heap used by the JVM process. Steady growth without GC recovery indicates leaks or oversized memtables. Threshold: >85% heap usage for more than 5 min signals GC pressure and OOM risk.

- GCTime: Duration of garbage collection cycles. Long pauses block request handling and cause latency spikes. Threshold: A full GC longer than 2 s indicates that heap tuning is needed.

- GCCount: Tracks the number of GC events. A rising count without corresponding heap recovery means a misconfigured heap or pressure from compaction. Threshold: >10 full GCs/hour suggests tuning is required.
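The GC thresholds above translate into two checks over recent collections: any full GC pause longer than 2 s, and more than 10 full GCs in the past hour. This sketch assumes you already have GC events with pause durations (for example parsed from GC logs). The GcEvent type is hypothetical, and what counts as a "full" collection depends on the garbage collector you run.

```python
from dataclasses import dataclass


@dataclass
class GcEvent:
    ts: float       # Unix timestamp (seconds) when the pause ended
    pause_s: float  # stop-the-world pause duration in seconds
    full: bool      # True for a full collection


def gc_findings(events: list[GcEvent], now: float,
                max_full_pause_s: float = 2.0, max_full_per_hour: int = 10) -> list[str]:
    """Apply the full-GC pause and full-GC frequency thresholds to the last hour of events."""
    recent_full = [e for e in events if e.full and 0 <= now - e.ts <= 3600]
    findings = []
    long_pauses = [e for e in recent_full if e.pause_s > max_full_pause_s]
    if long_pauses:
        worst = max(e.pause_s for e in long_pauses)
        findings.append(f"{len(long_pauses)} full GC pause(s) above {max_full_pause_s}s (worst {worst}s)")
    if len(recent_full) > max_full_per_hour:
        findings.append(f"{len(recent_full)} full GCs in the last hour (limit {max_full_per_hour})")
    return findings


now = 100_000.0
events = [GcEvent(now - 60 * i, 0.4, True) for i in range(12)]  # 12 short full GCs
events.append(GcEvent(now - 90, 2.7, True))                     # one long full GC
events.append(GcEvent(now - 7_200, 5.0, True))                  # two hours old, ignored
for finding in gc_findings(events, now):
    print(finding)
```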

Caching and Memtable Efficiency

Caches and memtables reduce read latency and improve throughput. Monitoring their hit ratios helps evaluate query efficiency.

- KeyCacheHitRate: Ratio of successful key lookups served from cache. Low values imply frequent disk seeks. Threshold: <80% hit rate requires cache size adjustment.

- RowCacheHitRate: Tracks row-level cache utilization. The row cache is disabled by default and mainly helps hot, frequently read rows, so its hit rate is expected to be lower than the key cache's. Threshold: <30% hit rate under a consistent workload may need review.

- MemtableFlushCount: Number of memtables flushed to disk. Frequent flushes indicate high write activity or undersized memtables. Threshold: >10 flushes/minute suggests tuning memtable thresholds.
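Lifetime hit rates change slowly and can hide a recent drop, so it often helps to compute the hit rate per scrape interval from cumulative hit and request counters (Cassandra's cache metrics include Hits and Requests alongside HitRate). The sketch below does that and applies the 80% key cache and 30% row cache thresholds. The counter values are invented, and a counter that goes backwards is treated as a restart rather than as data.

```python
from typing import Optional

# Minimum acceptable hit rates from the list above.
MIN_HIT_RATE = {"KeyCache": 0.80, "RowCache": 0.30}


def interval_hit_rate(prev_hits: int, prev_requests: int,
                      cur_hits: int, cur_requests: int) -> Optional[float]:
    """Hit rate over one interval from cumulative counters.

    Returns None when there were no requests, or when a counter decreased
    (for example because the node restarted and its counters reset).
    """
    d_hits = cur_hits - prev_hits
    d_requests = cur_requests - prev_requests
    if d_requests <= 0 or d_hits < 0:
        return None
    return d_hits / d_requests


def cache_status(cache: str, rate: Optional[float]) -> str:
    """'review' below the cache's minimum hit rate, 'ok' otherwise, 'no-data' without a rate."""
    if rate is None:
        return "no-data"
    return "review" if rate < MIN_HIT_RATE[cache] else "ok"


# Key cache: 700 hits out of 1,000 requests in the last interval.
rate = interval_hit_rate(1_000, 1_200, 1_700, 2_200)
print(rate, cache_status("KeyCache", rate))  # 0.7 review
```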

Replication and Consistency

Cassandra ensures fault tolerance via replication. Monitoring these metrics confirms replica synchronization and read/write quorum reliability.

- HintsInProgress: Tracks the number of hinted handoff messages waiting to be delivered. A high backlog can delay recovery from node outages. Threshold: >10,000 hints sustained for 10 min suggests a down node or a network issue.

- RepairPending / RepairProgress: Shows ongoing anti-entropy repairs and their completion percentage. Stalled repairs risk replica divergence. Threshold: No progress in 30 min signals a stuck repair.

- Unavailables: Count of requests failing due to insufficient replicas. These directly affect SLAs. Threshold: >0 per minute warrants investigation.

- ConsistencyLevelFailures: Number of failed reads/writes due to unmet consistency requirements. Threshold: >1% of total requests signals that too few replicas are responding for the requested consistency level.
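Rules such as "more than 10,000 hints sustained for 10 minutes" require the condition to hold continuously, not just in a single sample, much like a `for:` duration in Prometheus-style alert rules. The sketch below implements that check over (timestamp, value) samples ordered oldest first. The sample data is invented, and in practice your alerting backend would evaluate this for you.

```python
from typing import Callable


def held_for(samples: list[tuple[float, float]],
             condition: Callable[[float], bool], duration_s: float) -> bool:
    """True if condition(value) held for every sample in a trailing run spanning >= duration_s.

    samples: (unix_ts_seconds, value) pairs, oldest first.
    """
    if not samples or not condition(samples[-1][1]):
        return False
    run_start_ts = samples[-1][0]
    for ts, value in reversed(samples):
        if not condition(value):
            break
        run_start_ts = ts
    return samples[-1][0] - run_start_ts >= duration_s


def hints_backlog_alert(samples: list[tuple[float, float]]) -> bool:
    """HintsInProgress above 10,000 for at least 10 minutes."""
    return held_for(samples, lambda v: v > 10_000, 600)


steady = [(60.0 * i, 15_000.0) for i in range(11)]  # high for 10 minutes
dipped = list(steady)
dipped[6] = (360.0, 4_000.0)                        # one low sample 4 minutes ago
print(hints_backlog_alert(steady), hints_backlog_alert(dipped))  # True False
```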

Disk and System Resource Metrics

Hardware resource metrics reveal whether Cassandra’s I/O capacity matches data volume and workload pattern.

- DiskSpaceUsed: Total disk space consumed by data, commit logs, and hints. Rapid growth can precede compaction stalls or disk exhaustion. Threshold: >80% utilization on any node requires cleanup or scaling.

- CommitLogSize: Tracks the size of commit log segments still waiting for their memtables to be flushed to SSTables. Excessive growth indicates slow flushing, usually from a slow disk or I/O bottleneck. Threshold: >2 GB sustained backlog signals flush delay.

- CPUUtilization: High CPU under compaction or repair can starve query threads. Threshold: Sustained >85% CPU for >5 min warrants investigation.
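For a host-side view of the disk thresholds, the sketch below checks filesystem usage for data directories and sums the commit log directory using only the Python standard library. The default paths /var/lib/cassandra/data and /var/lib/cassandra/commitlog are assumptions (typical for package installs); use the data_file_directories and commitlog_directory values from your cassandra.yaml. The usage ratio is used/total from shutil.disk_usage, which can differ slightly from df because of reserved blocks.

```python
import os
import shutil

GIB = 1024 ** 3


def disk_usage_ratio(path: str) -> float:
    """Fraction of the filesystem holding `path` that is in use."""
    usage = shutil.disk_usage(path)
    return usage.used / usage.total


def dir_size_bytes(path: str) -> int:
    """Total size of files under `path`; 0 if the path does not exist."""
    total = 0
    for root, _dirs, files in os.walk(path):
        for name in files:
            try:
                total += os.path.getsize(os.path.join(root, name))
            except OSError:
                pass  # a file (e.g. a recycled commit log segment) vanished mid-walk
    return total


def disk_findings(data_dirs: list[str], commitlog_dir: str,
                  max_usage: float = 0.80, max_commitlog_bytes: int = 2 * GIB) -> list[str]:
    """Flag data filesystems above max_usage and a commit log directory above ~2 GB."""
    findings = []
    for path in data_dirs:
        ratio = disk_usage_ratio(path)
        if ratio > max_usage:
            findings.append(f"{path}: {ratio:.0%} used (limit {max_usage:.0%})")
    commitlog = dir_size_bytes(commitlog_dir)
    if commitlog > max_commitlog_bytes:
        findings.append(f"{commitlog_dir}: {commitlog / GIB:.1f} GiB of commit logs")
    return findings


if __name__ == "__main__":
    # Assumed default locations; adjust to your cassandra.yaml. Missing data dirs are skipped.
    data_dirs = [p for p in ["/var/lib/cassandra/data"] if os.path.isdir(p)]
    print(disk_findings(data_dirs, "/var/lib/cassandra/commitlog"))
```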

Client Request and Error Rates

These metrics directly reflect the user experience. Spikes in errors or timeouts mean users are facing degraded service.

- RequestFailure: Total failed client requests. Common causes include overloaded nodes or GC pauses. Threshold: >1% of total requests warrants an incident-level response.

- RequestTimeouts: Number of requests timing out before coordinator completion. Threshold: >0.5% of requests per minute indicates cluster pressure.

- ErrorCount (Server-Side): Internal errors during query execution. Threshold: Any sustained nonzero rate requires debugging or tracing.
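The request-level thresholds are ratios, so they should be computed from counter deltas over the same window rather than from lifetime totals. The sketch below takes per-window deltas (for example one minute) and flags failure rates above 1%, timeout rates above 0.5%, and, from the replication list, any Unavailables. The RequestWindow type and its field names are hypothetical; map them to whatever your exporter calls these counters.

```python
from dataclasses import dataclass


@dataclass
class RequestWindow:
    """Counter deltas for one evaluation window (e.g. one minute)."""
    total: int
    failures: int
    timeouts: int
    unavailables: int


def request_findings(w: RequestWindow) -> list[str]:
    """Apply the Unavailables, failure-rate, and timeout-rate thresholds to one window."""
    findings = []
    if w.unavailables > 0:
        findings.append(f"{w.unavailables} unavailable error(s)")
    if w.total > 0:
        if w.failures / w.total > 0.01:
            findings.append(f"failure rate {w.failures / w.total:.2%} > 1%")
        if w.timeouts / w.total > 0.005:
            findings.append(f"timeout rate {w.timeouts / w.total:.2%} > 0.5%")
    return findings


print(request_findings(RequestWindow(total=10_000, failures=50, timeouts=80, unavailables=0)))
# ['timeout rate 0.80% > 0.5%']
```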

Together, these metrics provide a full picture of Cassandra cluster performance — from node reliability and query latency to compaction, replication, and JVM health. Monitoring them in CubeAPM enables teams to visualize latency patterns, correlate metrics with traces, and configure intelligent alerts that preempt costly downtime or data inconsistency.

Common Cassandra Performance Issues

Even well-optimized Cassandra clusters can face performance degradation due to growing data volume, uneven workloads, or configuration drift. Below are some of the most common issues to watch for when monitoring Cassandra in production.

Increased Read/Write Latency

Compaction backlogs or long GC pauses block read/write threads, causing spikes in query latency and timeouts. Overloaded disks or heap memory often trigger this slowdown. In one DataStax report, clusters with GC pauses over 2 seconds saw read latency rise by 35% under peak load.

Uneven Load Distribution

Improper token assignment or poor partition key design can overload specific nodes, creating hotspots that serve most of the traffic. A fintech team once found one node processing 3× more reads, leading to uneven CPU and I/O usage. Regular rebalancing and partition audits prevent this.

High Tombstone Ratio

Frequent deletes or heavy TTL usage accumulate tombstones (expired TTL data becomes tombstones too) that slow down read paths and compactions. According to DataStax benchmarks, a 20% tombstone ratio can reduce read speed by 40%. Monitoring tombstone scans helps maintain read performance and storage efficiency.

Disk Saturation

When disks fill up with accumulated SSTables (for example, during a compaction backlog) or oversized commit logs, compactions lose the free space they need, I/O contention rises, and writes slow down. One telecom operator saw a latency spike when disk usage exceeded 85%, which resolved only after compaction cleanup.

Unbalanced Replication

Incorrect replication factors or mismatched consistency levels lead to stale reads and “Unavailable” errors. A global e-commerce team observed 50 ms higher read latency after cross-region RF mismatches. Monitoring Unavailables and consistency metrics ensures cluster reliability.

How to Monitor Cassandra with OpenTelemetry and CubeAPM

Below is a practical, Cassandra-specific, step-by-step path to get metrics, logs, and traces into CubeAPM using OpenTelemetry. Refer to the CubeAPM documentation for each step for deeper reference.

Step 1: Deploy CubeAPM (Kubernetes Helm or standalone)

Run CubeAPM where it can receive OTLP telemetry. For Kubernetes, install it with the official Helm chart; the chart repository, values file, and install/upgrade flow are covered in the CubeAPM docs. For VMs or Docker, follow the general install guide.

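```bash
# Add the CubeAPM chart repository and refresh its index
helm repo add cubeapm https://charts.cubeapm.com
helm repo update cubeapm
# Export the default values, edit them (see Step 2), then install
helm show values cubeapm/cubeapm > values.yaml
helm install cubeapm cubeapm/cubeapm -f values.yaml
```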

Step 2: Configure CubeAPM core settings

Set the required parameters so that alerts, authentication, and URLs work correctly. At minimum, set token and auth.key.session; most deployments also set base-url, auth.sys-admins, cluster.peers, and time-zone. You can supply them as CLI arguments, environment variables (CUBE_*), or entries in config.properties.

Step 3: Expose Cassandra metrics via JMX → Prometheus

Enable a JMX Prometheus exporter on each Cassandra node so the collector can scrape database metrics (latencies, compaction, caches, thread pools). A common pattern is to start Cassandra with the jmx_prometheus_javaagent listening on a port such as 7070, for example by appending -javaagent:/path/to/jmx_prometheus_javaagent.jar=7070:/path/to/config.yaml to JVM_OPTS in cassandra-env.sh or your service launch command (the paths are placeholders, and the YAML file holds the exporter's metric-mapping rules). Each node then serves metrics at http://<node-ip>:7070/metrics, which you'll scrape in Step 4.
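Before wiring up the collector, it is worth confirming that each node's endpoint answers and exposes the metric families you expect. The sketch below fetches the Prometheus text format with urllib and checks for name prefixes. The prefixes cassandra_ and jvm_ are assumptions, since actual names depend on the exporter's rule file and version, and the port matches the 7070 example above.

```python
import re
import urllib.request


def metric_names(exposition: str) -> set[str]:
    """Metric names from Prometheus text exposition format (HELP/TYPE comments skipped)."""
    names = set()
    for line in exposition.splitlines():
        line = line.strip()
        if not line or line.startswith("#"):
            continue
        # Sample lines look like `name{labels} value` or `name value`.
        names.add(re.split(r"[{\s]", line, maxsplit=1)[0])
    return names


def missing_prefixes(exposition: str, prefixes: list[str]) -> list[str]:
    """Prefixes for which no exposed metric name matches."""
    names = metric_names(exposition)
    return [p for p in prefixes if not any(n.startswith(p) for n in names)]


def fetch_metrics(node_ip: str, port: int = 7070, timeout_s: float = 5.0) -> str:
    """GET http://<node_ip>:<port>/metrics; raises on connection errors or HTTP error statuses."""
    with urllib.request.urlopen(f"http://{node_ip}:{port}/metrics", timeout=timeout_s) as resp:
        return resp.read().decode("utf-8")


# Offline example with a hand-written payload; in practice call fetch_metrics("10.0.0.11").
SAMPLE = """\
# HELP cassandra_pending_compactions Pending compaction tasks
# TYPE cassandra_pending_compactions gauge
cassandra_pending_compactions 3.0
jvm_memory_used_bytes{area="heap"} 1.2e9
"""
print(missing_prefixes(SAMPLE, ["cassandra_", "jvm_", "process_"]))  # ['process_']
```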

Step 4: Deploy an OpenTelemetry Collector for Cassandra metrics

Run the OpenTelemetry Collector (as a DaemonSet on Kubernetes or a service on VMs) to scrape Cassandra’s Prometheus endpoint and forward to CubeAPM over OTLP. CubeAPM natively supports OTLP; point the exporter to your CubeAPM endpoint.

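```yaml
receivers:
  prometheus:
    config:
      scrape_configs:
        - job_name: cassandra
          scrape_interval: 15s
          static_configs:
            # One JMX exporter endpoint per Cassandra node (see Step 3)
            - targets: ['10.0.0.11:7070', '10.0.0.12:7070', '10.0.0.13:7070']

processors:
  # Batch metrics before export to reduce the number of outbound requests
  batch: {}

exporters:
  otlp:
    # CubeAPM's OTLP/gRPC endpoint
    endpoint: https://<your-cubeapm-host>:4317
    tls:
      insecure: false

service:
  pipelines:
    metrics:
      receivers: [prometheus]
      processors: [batch]
      exporters: [otlp]
```

Step 5: Capture infrastructure (host/container) metrics where Cassandra runs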

Cassandra symptoms (latency spikes, timeouts) often originate in OS/JVM/I/O. Enable node/host metrics (CPU, memory, disk, network) with the Infra Monitoring flow so you can correlate GC pauses, disk saturation, and network issues with database metrics. On K8s, run the collector as a DaemonSet; on VMs, enable hostmetrics in the collector.

Step 6: Ingest Cassandra logs for root-cause context

Ship system.log, debug.log, and GC logs to CubeAPM so you can pivot from a latency spike to the exact error or compaction event. Use OpenTelemetry log ingestion or your preferred shipper (e.g., file tail → OTLP). Keep fields structured (timestamp, node, keyspace, table, thread, level) to enable fast correlation.
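To keep log fields structured, each system.log line can be split into level, thread, timestamp, source location, and message before shipping. The sketch assumes Cassandra's default logback pattern for system.log (%-5level [%thread] %date{ISO8601} %F:%L - %msg%n); if you have customized logback.xml, the regex must change. Stack-trace continuation lines do not match and should be merged into the preceding event by your shipper. Keyspace and table fields have to be extracted from specific message types, which this sketch does not attempt.

```python
import re
from typing import Optional

# Matches lines like:
# INFO  [CompactionExecutor:2] 2024-05-01 10:15:30,123 CompactionTask.java:241 - Compacted ...
SYSTEM_LOG_LINE = re.compile(
    r"^(?P<level>[A-Z]+)\s+"
    r"\[(?P<thread>[^\]]+)\]\s+"
    r"(?P<timestamp>\d{4}-\d{2}-\d{2} \d{2}:\d{2}:\d{2},\d{3})\s+"
    r"(?P<source>[^\s:]+):(?P<line>\d+)\s+-\s+"
    r"(?P<message>.*)$"
)


def parse_system_log_line(line: str, node: str) -> Optional[dict]:
    """Turn one system.log line into a structured record, or None for continuation lines."""
    match = SYSTEM_LOG_LINE.match(line)
    if match is None:
        return None
    record = match.groupdict()
    record["line"] = int(record["line"])
    record["node"] = node  # the log line itself does not carry the node address
    return record


example = ("INFO  [CompactionExecutor:2] 2024-05-01 10:15:30,123 "
           "CompactionTask.java:241 - Compacted 4 sstables")
print(parse_system_log_line(example, node="10.0.0.11"))
print(parse_system_log_line("\tat org.apache.cassandra.db.ReadCommand.execute", node="10.0.0.11"))  # None
```

The timestamp in this pattern carries no zone offset, so treat it as the node's local time when correlating with metrics.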

Step 7: Instrument application services for Cassandra query traces

Most query-level spans come from the client applications (services using the Cassandra driver). Enable OpenTelemetry in those services so you get DB spans (operation, keyspace, consistency level, duration) that line up with cluster metrics and logs in CubeAPM. Start with the instrumentation overview and OTLP setup, then enable the language-specific auto/agent instrumentation you use.

After completing these steps, CubeAPM begins collecting Cassandra metrics, logs, and traces in real time—displaying cluster health, latency trends, and compaction activity across nodes.

Once data flow is verified, the next steps involve validating dashboards, setting alert thresholds, and building a verification checklist, which we’ll cover later in the article.

Real-World Example: Cassandra Cluster Monitoring

Challenge

A global fintech company relied on a 12-node Apache Cassandra cluster to handle thousands of real-time payment transactions per second across multiple regions. During high-traffic windows, their systems experienced unpredictable read and write latency spikes, leading to delayed transaction confirmations and failed API calls. Traditional dashboards offered limited visibility—operators could see slow queries but couldn’t pinpoint whether the issue originated from the JVM, compaction backlog, or uneven node load.

Solution

The DevOps team integrated CubeAPM with their Cassandra environment using the OpenTelemetry Collector. They enabled JMX Prometheus exporters on all nodes and configured CubeAPM to collect compaction metrics, GC activity, and thread pool utilization. The Cassandra latency dashboard in CubeAPM provided a unified view of coordinator, replica, and system-level latency, allowing engineers to correlate application slowdowns with internal database behavior in real time.

Fixes Implemented

Through CubeAPM’s correlation analysis, the team discovered that two nodes were handling 3× more compaction load due to an uneven distribution of SSTables. They adjusted the compaction_throughput_mb_per_sec parameter to balance disk I/O and tuned JVM heap allocation to reduce garbage collection frequency. They also set up automated alerts in CubeAPM to trigger whenever PendingCompactions > 500 or GC pauses exceeded 2 seconds.

Result

After tuning compaction throughput and JVM memory settings, read latency dropped by 45% and write latency stabilized under 10 ms at peak load. The improved observability also helped the SRE team proactively detect node imbalances before they affected SLAs. With CubeAPM, the company moved from reactive troubleshooting to predictive performance management—keeping their Cassandra-backed payment pipeline consistently fast and reliable.

Verification Checklist & Example Alert Rules for Cassandra Monitoring with CubeAPM

Before going live with Cassandra observability, it’s important to confirm that metrics, logs, and traces are correctly flowing into CubeAPM and that your alert thresholds are aligned with operational SLAs. Below is a verification checklist followed by sample alert rules you can adapt for production clusters.

Verification Checklist for Cassandra Monitoring

- Exporter connected: Verify that the JMX Prometheus exporter on each Cassandra node is exposing metrics at /metrics and responding successfully when queried.

- OTEL collector running: Ensure the OpenTelemetry Collector service (DaemonSet or VM agent) is running and forwarding data to CubeAPM’s OTLP endpoint without dropped batches.

- Metrics ingestion confirmed: In CubeAPM, check the Cassandra dashboard for active metrics such as ReadLatency, PendingCompactions, and TombstoneScanned.

- Logs pipeline active: Confirm that Cassandra’s system.log and GC logs appear under the Logs tab and can be filtered by node, keyspace, and severity.

- Traces visible: Validate that application-level Cassandra spans (SELECT, INSERT, UPDATE) are visible and linked with cluster-level metrics in CubeAPM.

- Infra metrics aligned: Cross-check host-level CPU, disk, and memory metrics with Cassandra’s JVM metrics to ensure correct node-to-host correlation.

- Alerts tested: Simulate a small failure (e.g., stop one node or slow compaction) to confirm that alert notifications trigger in CubeAPM via email or webhook.

Example Alert Rules for Cassandra Monitoring

1. High Write Latency Alert

Triggers when write latency exceeds 25 ms for over 5 minutes, indicating possible compaction or I/O saturation.

```yaml
# Metric names depend on your JMX exporter rules; rename them to match what your exporter emits.
alert: CassandraHighWriteLatency
expr: cassandra_write_latency_seconds > 0.025
for: 5m
labels:
  severity: warning
annotations:
  summary: "High write latency detected on {{ $labels.instance }}"
  description: "Write latency exceeded 25 ms for 5 minutes; check compaction and disk I/O."
```

2. Node Down Alert

Alerts when any node in the cluster stops responding to gossip heartbeats for more than 2 minutes.

```yaml
alert: CassandraNodeDown
expr: cassandra_node_up == 0
for: 2m
labels:
  severity: critical
annotations:
  summary: "Cassandra node {{ $labels.instance }} is down"
  description: "The node has not responded for over 2 minutes; investigate connectivity or resource exhaustion."
```

3. Compaction Backlog Alert

Notifies when compaction tasks queue beyond safe thresholds, signaling disk congestion or aggressive writes.

```yaml
alert: CassandraCompactionBacklog
expr: cassandra_pending_compactions > 500
for: 10m
labels:
  severity: warning
annotations:
  summary: "Compaction backlog rising on {{ $labels.instance }}"
  description: "Pending compactions exceed 500; consider tuning compaction throughput or expanding disk capacity."
```

With these checks and alerts, CubeAPM provides proactive coverage for critical Cassandra conditions, ensuring teams detect performance degradation, node failures, and compaction bottlenecks before they escalate into production outages.

Conclusion

Monitoring Cassandra databases is essential for ensuring consistent performance, data integrity, and reliability across distributed clusters. Without real-time insights, even small configuration issues—like compaction lag or uneven token distribution—can lead to severe query latency and replication failures.

CubeAPM simplifies Cassandra monitoring by unifying metrics, logs, and traces into one OpenTelemetry-native platform. It visualizes node health, GC activity, compaction status, and client latency while offering intelligent alerting and seamless correlation across all layers—from JVM to query-level diagnostics.

With CubeAPM, teams can proactively detect performance regressions, prevent outages, and scale Cassandra confidently. Start monitoring your Cassandra clusters with CubeAPM today for complete observability, faster troubleshooting, and predictable cost.

Disclaimer: The information in this article reflects the latest details available at the time of publication and may change as technologies and products evolve.

FAQs

1. What are the key metrics to monitor in Cassandra?

Essential metrics include read/write latency, pending compactions, tombstone counts, GC time, cache hit ratios, and replication health (hints and repairs). These indicators help detect early performance degradation.

2. How do I monitor Cassandra’s compaction and GC performance?

Enable JMX exporters to collect compaction and GC metrics, and use CubeAPM dashboards to visualize trends. Persistent GC pauses or large compaction queues signal disk or memory pressure.

3. Can CubeAPM monitor self-hosted Cassandra clusters?

Yes, CubeAPM supports both self-hosted and Kubernetes-based Cassandra deployments via the OpenTelemetry Collector. You can install CubeAPM on-premises or use its managed SaaS version for centralized observability.

4. How can I correlate Cassandra query latency with node performance?

CubeAPM links metrics, traces, and logs automatically. For instance, a slow SELECT query trace can be correlated with GC spikes or compaction delays on the corresponding node.

5. Does CubeAPM support alerting and anomaly detection for Cassandra?

Yes. CubeAPM’s alerting engine lets you define rules for latency, compactions, and node health. You can send real-time alerts via email, Slack, or webhook integrations.