MongoDB monitoring is the process of tracking performance metrics, logs, and traces to maintain database health and optimize query execution. Today, over 70% of Fortune 500 companies rely on MongoDB for mission-critical workloads.

Effective monitoring reveals query latency, replication lag, cache usage, and system resource trends before they impact applications. Yet many teams still find MongoDB hard to monitor: replication lag, unindexed queries, and lock contention often go unnoticed until user-facing latency spikes.

CubeAPM is one of the best MongoDB monitoring tools, combining metrics, logs, and error tracing in one OpenTelemetry-native platform. It offers pre-built dashboards, anomaly detection, and smart sampling to simplify observability across complex clusters.

In this article, we’ll explore what MongoDB monitoring is, why it matters, key metrics to track, and how CubeAPM delivers full-stack MongoDB monitoring and alerting.

What is MongoDB Monitoring?

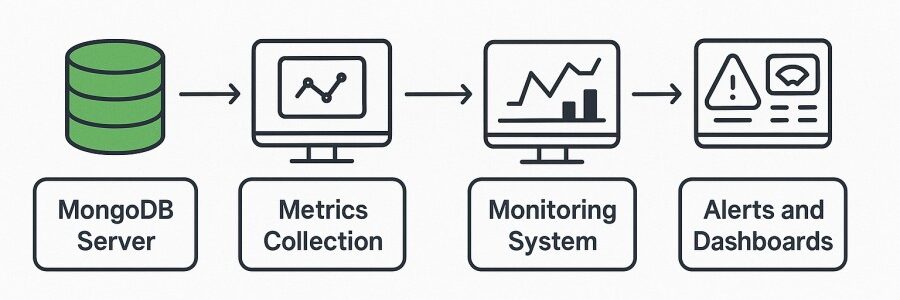

MongoDB monitoring is the process of collecting, analyzing, and visualizing performance data from MongoDB databases to ensure optimal reliability, scalability, and responsiveness. It tracks metrics such as query latency, replication lag, cache usage, and connection pool utilization, helping teams maintain consistent performance across production workloads.

For modern organizations, MongoDB database monitoring is essential because it turns database management into a proactive, insight-driven process. It enables teams to:

- Detect slow queries, replication delays, or lock contention before service degradation.

- Optimize resource usage across distributed or sharded clusters.

- Correlate application behavior with database performance for faster root-cause analysis.

- Ensure uptime, data consistency, and compliance across hybrid or cloud-native deployments.

By continuously observing these metrics, businesses minimize downtime, improve application speed, and maintain predictable scaling even under dynamic workloads.

Example: Monitoring MongoDB for a Healthcare Analytics Platform

Consider a healthcare analytics company that processes millions of patient records using MongoDB. During peak data ingestion hours, the system begins showing inconsistent query latency. With MongoDB monitoring enabled, engineers spot rising WiredTiger cache usage and replication lag within CubeAPM dashboards.

By correlating traces, they identify a poorly optimized aggregation pipeline causing the slowdown. The issue is fixed proactively—preventing delayed analytics and maintaining compliance with healthcare data SLAs.

Why Monitoring MongoDB Databases Is Important

Prevent replication lag and oplog “fall-off”

Replication lag measures the delay between a write on the primary and its application on the secondary. If lag exceeds your oplog window (i.e., the time span covered by the primary’s oplog), a secondary can no longer catch up via replication and must do a full resync. Atlas tracks Replication Lag, Replication Headroom, and Oplog Window at ~85-second intervals to help you spot this risk early.
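To make the lag definition concrete, here is a minimal Python sketch that computes per-secondary lag from a replSetGetStatus result (the document rs.status() returns; with PyMongo you could fetch it via client.admin.command("replSetGetStatus")). It assumes each member's optimeDate has already been decoded to a datetime, as PyMongo does, and treats lag as the primary's optimeDate minus the secondary's. The function name and sample hosts are hypothetical.

```python
from datetime import datetime, timedelta


def replication_lag_seconds(status: dict) -> dict:
    """Per-secondary replication lag, in seconds, from a replSetGetStatus document."""
    members = status["members"]
    primary = next((m for m in members if m["stateStr"] == "PRIMARY"), None)
    if primary is None:
        # No primary (e.g. during an election): lag is undefined.
        return {}
    return {
        m["name"]: (primary["optimeDate"] - m["optimeDate"]).total_seconds()
        for m in members
        if m["stateStr"] == "SECONDARY"  # arbiters and recovering members are skipped
    }


# Hypothetical three-member replica set where db1 is 3 seconds behind.
t0 = datetime(2024, 1, 1, 12, 0, 0)
sample = {
    "members": [
        {"name": "db0:27017", "stateStr": "PRIMARY", "optimeDate": t0},
        {"name": "db1:27017", "stateStr": "SECONDARY", "optimeDate": t0 - timedelta(seconds=3)},
        {"name": "db2:27017", "stateStr": "SECONDARY", "optimeDate": t0},
    ]
}
print(replication_lag_seconds(sample))  # {'db1:27017': 3.0, 'db2:27017': 0.0}
```

Comparing each value against the oplog window shows how close a member is to needing a full resync.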

Expose slow queries and index regressions

Every schema or usage change can introduce performance pitfalls. MongoDB’s query profiler and Atlas’s Performance Advisor analyze slow operations (e.g., queries whose docsExamined or keysExamined far exceed nreturned) and suggest index optimizations, letting you fix regressions before latency climbs.

Control cache pressure and eviction latency

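WiredTiger manages its cache with eviction thresholds. By default, background eviction threads start working when cache usage reaches eviction_target (80%), and application threads are pulled into eviction once usage hits eviction_trigger (95%). Dirty data has its own pair of limits: eviction_dirty_target (5%) and eviction_dirty_trigger (20%). When eviction threads can’t keep pace, application threads end up evicting pages themselves, which directly increases query latency.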
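These thresholds can be turned into a simple classifier. The Python sketch below assumes you pass in the serverStatus()["wiredTiger"]["cache"] sub-document (field names as MongoDB reports them) and hard-codes the default WiredTiger eviction_target (80%), eviction_trigger (95%), eviction_dirty_target (5%), and eviction_dirty_trigger (20%). If you have tuned them via wiredTigerEngineRuntimeConfig, adjust the constants. The function name is illustrative.

```python
# Default WiredTiger eviction thresholds, as fractions of the configured cache size.
EVICTION_TARGET = 0.80   # background eviction threads start
EVICTION_TRIGGER = 0.95  # application threads are drafted into eviction
DIRTY_TARGET = 0.05      # background eviction of dirty pages starts
DIRTY_TRIGGER = 0.20     # application threads evict dirty pages


def cache_pressure(cache: dict) -> dict:
    """Classify cache pressure from serverStatus()['wiredTiger']['cache']."""
    max_bytes = cache["maximum bytes configured"]
    used = cache["bytes currently in the cache"] / max_bytes
    dirty = cache["tracked dirty bytes in the cache"] / max_bytes
    if used >= EVICTION_TRIGGER or dirty >= DIRTY_TRIGGER:
        state = "application-thread eviction (latency impact likely)"
    elif used >= EVICTION_TARGET or dirty >= DIRTY_TARGET:
        state = "background eviction"
    else:
        state = "normal"
    return {"used": used, "dirty": dirty, "state": state}


GiB = 1024 ** 3
print(cache_pressure({
    "maximum bytes configured": 10 * GiB,
    "bytes currently in the cache": 8.5 * GiB,
    "tracked dirty bytes in the cache": 0.3 * GiB,
})["state"])  # background eviction
```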

Avoid connection bottlenecks and lock queues

During workload surges, connection pools can saturate, and many operations may queue waiting for locks. Atlas surfaces lock queue metrics via GLOBAL_LOCK_CURRENT_QUEUE_TOTAL, *_READERS, *_WRITERS — key signals that you need to scale or tune concurrency.
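The same signals can be read directly from serverStatus output. This Python sketch assumes the connections and globalLock.currentQueue sub-documents are present (queueing behavior has changed across MongoDB versions, so confirm what yours reports) and uses an 80% connection alert ratio as a tunable default. Because connections.available counts the remaining unused incoming connections, current + available approximates the configured ceiling. The function name is hypothetical.

```python
def connection_and_queue_health(server_status: dict, alert_ratio: float = 0.80) -> dict:
    """Summarize connection saturation and lock queues from serverStatus output."""
    conns = server_status["connections"]
    # "available" is the number of unused incoming connections left,
    # so current + available is the effective connection ceiling.
    ceiling = conns["current"] + conns["available"]
    utilization = conns["current"] / ceiling
    queue = server_status["globalLock"]["currentQueue"]
    return {
        "connection_utilization": utilization,
        "connections_near_limit": utilization >= alert_ratio,
        "queued_readers": queue["readers"],
        "queued_writers": queue["writers"],
        "queued_total": queue["total"],
    }


sample = {
    "connections": {"current": 850, "available": 150},
    "globalLock": {"currentQueue": {"total": 12, "readers": 2, "writers": 10}},
}
print(connection_and_queue_health(sample))
```

A growing writer queue alongside high connection utilization usually points at write contention rather than a client-side pool leak.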

Monitor shard balance and skew in distributed environments

In sharded deployments, uneven data growth or query patterns can create hot shards. Monitoring per-shard metrics (document count, storage size) helps you detect imbalance, unusual load, or routing anomalies in your cluster.
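One way to turn per-shard size data into an imbalance signal is to compare each shard against the mean. The Python sketch below assumes you have already collected per-shard data sizes (for example from the per-shard breakdown that collStats or $collStats returns for a sharded collection). The 1.5× ratio is an arbitrary starting point, not a MongoDB recommendation, and the shard names are hypothetical.

```python
from statistics import mean


def hot_shards(size_by_shard: dict, max_ratio: float = 1.5) -> list:
    """Return shards whose size exceeds max_ratio times the mean shard size."""
    avg = mean(size_by_shard.values())
    return sorted(
        shard for shard, size in size_by_shard.items() if size > max_ratio * avg
    )


# Hypothetical sizes in bytes: shard-02 holds twice the average.
sizes = {"shard-01": 100, "shard-02": 400, "shard-03": 100, "shard-04": 200}
print(hot_shards(sizes))  # ['shard-02']
```

The same comparison works for per-shard document counts or operation rates.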

Align with real-world operator priorities

Database engineers frequently cite memory pressure, disk I/O latency, replication lag, and connection/lock saturation as top signals to watch in MongoDB systems. Native MongoDB metrics and Atlas dashboards support these signals.

Key MongoDB Metrics to Monitor

Effective MongoDB monitoring depends on understanding what to measure and why it matters. Each category below focuses on a different layer of MongoDB performance—from system-level resource utilization to query execution and replication health. Tracking these metrics continuously ensures smooth scaling, stable response times, and predictable performance under heavy workloads.

System Metrics

System-level metrics reflect how the underlying infrastructure (CPU, memory, disk, and network) affects MongoDB performance. Bottlenecks here can cascade into higher query latency and replication lag.

- CPU Utilization: Measures how much processing power MongoDB processes consume. Sustained CPU usage above 85% usually indicates inefficient queries, missing indexes, or unbalanced workloads. Threshold: keep average CPU usage below 75–80% for consistent query performance.

- Memory Usage: Indicates how much memory MongoDB and the WiredTiger cache use for active data and indexes. High memory consumption combined with frequent paging indicates that the working set doesn’t fit in RAM. Threshold: maintain at least 20% free RAM for OS-level caching.

- Disk IOPS and Latency: Tracks read/write throughput and latency to disk. High IOPS but increasing latency indicates I/O saturation or slow storage. Threshold: write latency ideally below 5 ms, read latency below 2 ms.

- Network Throughput: Monitors data transfer across nodes and client connections. Network congestion can amplify replication lag or timeouts. Threshold: maintain network utilization below 70% of interface capacity.

Database Operations Metrics

These metrics reveal how actively MongoDB is handling CRUD operations and can highlight unbalanced workloads or inefficient access patterns.

- opcounters: Counts inserts, queries, updates, and deletes. The counters are cumulative since startup, so per-second rates come from the difference between two samples. A sudden surge in update or query counts may indicate a runaway process or new workload pattern. Threshold: monitor relative deltas; rapid 3×–5× spikes require investigation.

- opLatencies: Reports cumulative latency (in microseconds) and operation counts for reads, writes, and commands; dividing the latency delta by the ops delta gives the average latency per operation. Persistent increases usually point to inefficient queries or locks. Threshold: maintain average read/write latency under 100 ms for production systems.

- connections: Displays the number of current and available connections. Rapid growth can signal unbounded connection pools or high concurrency pressure. Threshold: alert if connections exceed 80–85% of the configured maximum.

- locks: Reflects contention for database or collection-level resources. High lock time or queued operations indicate concurrency bottlenecks. Threshold: lock time ratio should remain below 10% of total operations.
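Because opcounters and opLatencies are cumulative counters, rates and averages have to be computed from the difference between two serverStatus samples. A minimal Python sketch, assuming both samples are plain dicts taken interval_s seconds apart; the function names are illustrative, and the 3× spike factor is the low end of the threshold above.

```python
def rates_between(prev: dict, curr: dict, interval_s: float) -> dict:
    """Per-second op rates and average latencies (ms) between two serverStatus samples."""
    ops_per_sec = {
        op: (curr["opcounters"][op] - prev["opcounters"][op]) / interval_s
        for op in ("insert", "query", "update", "delete")
    }
    avg_latency_ms = {}
    for kind in ("reads", "writes", "commands"):
        # opLatencies.<kind>.latency is cumulative microseconds; .ops is a cumulative count.
        d_latency_us = curr["opLatencies"][kind]["latency"] - prev["opLatencies"][kind]["latency"]
        d_ops = curr["opLatencies"][kind]["ops"] - prev["opLatencies"][kind]["ops"]
        avg_latency_ms[kind] = d_latency_us / d_ops / 1000 if d_ops else 0.0
    return {"ops_per_sec": ops_per_sec, "avg_latency_ms": avg_latency_ms}


def is_spike(baseline_rate: float, current_rate: float, factor: float = 3.0) -> bool:
    """Flag a rate that jumped by at least `factor` over its baseline."""
    return baseline_rate > 0 and current_rate >= factor * baseline_rate


prev = {
    "opcounters": {"insert": 0, "query": 1000, "update": 0, "delete": 0},
    "opLatencies": {k: {"latency": 0, "ops": 0} for k in ("reads", "writes", "commands")},
}
curr = {
    "opcounters": {"insert": 120, "query": 1600, "update": 60, "delete": 0},
    "opLatencies": {
        "reads": {"latency": 2_000_000, "ops": 100},  # 20 ms average
        "writes": {"latency": 900_000, "ops": 60},    # 15 ms average
        "commands": {"latency": 0, "ops": 0},
    },
}
result = rates_between(prev, curr, interval_s=60)
print(result["ops_per_sec"]["query"], result["avg_latency_ms"]["reads"])  # 10.0 20.0
```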

WiredTiger Cache Metrics

MongoDB’s WiredTiger storage engine relies heavily on cache to accelerate reads and writes. Monitoring cache utilization prevents eviction storms and throughput collapse.

- cacheUsedPercent: Percentage of the allocated WiredTiger cache currently in use. If consistently above 90%, queries may stall as the system evicts pages more aggressively. Threshold: keep cache utilization below 85–90%.

- cacheDirtyPercent: Portion of cache occupied by dirty (unwritten) pages. A rapid rise indicates write pressure and possible I/O backlog. Threshold: maintain dirty cache below 5–10% of total cache.

- cacheEvictedPages: Counts the number of pages evicted from cache per second. Frequent spikes reflect cache churn and can slow queries. Threshold: evaluate if evictions exceed 10% of read volume.
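The eviction rule of thumb above can be checked from two serverStatus samples. This Python sketch assumes the WiredTiger cache counters named "pages read into cache", "modified pages evicted", and "unmodified pages evicted" (as they appear in serverStatus()["wiredTiger"]["cache"] on recent versions; confirm the names on yours) and applies the 10% threshold from this section. The function name is hypothetical.

```python
EVICTED_KEYS = ("modified pages evicted", "unmodified pages evicted")
READ_KEY = "pages read into cache"


def eviction_to_read_ratio(prev: dict, curr: dict) -> float:
    """Pages evicted per page read into cache between two cache-stat samples."""
    evicted = sum(curr[k] - prev[k] for k in EVICTED_KEYS)
    read = curr[READ_KEY] - prev[READ_KEY]
    if read == 0:
        # No reads in the interval: any eviction at all is worth a look.
        return float("inf") if evicted else 0.0
    return evicted / read


prev = {"modified pages evicted": 100, "unmodified pages evicted": 900, "pages read into cache": 10_000}
curr = {"modified pages evicted": 150, "unmodified pages evicted": 1_150, "pages read into cache": 12_000}
ratio = eviction_to_read_ratio(prev, curr)
print(f"{ratio:.0%} of read volume evicted; investigate: {ratio > 0.10}")
```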

Replication Metrics

Replication ensures data redundancy and fault tolerance, but performance issues here can cause stale reads or failovers. Monitoring lag and sync behavior keeps clusters reliable.

- replicationLag: The delay (in seconds) between the primary and secondary applying the same operation. Sustained lag affects read consistency and failover readiness. Threshold: maintain lag below 100 ms in low-latency clusters, under 1s for global deployments.

- oplogWindow: Represents the time range of operations available in the primary’s oplog. If replication lag approaches this window, secondaries risk full resync. Threshold: lag should not exceed 30–40% of the oplog window duration.

- replicationHeadroom: Indicates how much “space” remains before secondaries fall out of sync. Declining headroom is an early warning of replication stress. Threshold: alert if headroom drops below 25% of normal values.
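The oplog window is the time span between the oldest and newest entries in local.oplog.rs, the figure rs.printReplicationInfo() reports. A minimal Python sketch, assuming you already have those two timestamps as datetimes (with PyMongo, a bson Timestamp converts via its as_datetime() method) and a lag value in seconds. The 30% default mirrors the low end of the threshold above, and the 25% headroom floor follows the headroom rule; the function names are hypothetical.

```python
from datetime import datetime, timedelta


def oplog_health(first_entry: datetime, last_entry: datetime, lag_s: float,
                 max_lag_fraction: float = 0.30) -> dict:
    """Oplog window, replication headroom, and a risk flag for one secondary."""
    window_s = (last_entry - first_entry).total_seconds()
    headroom_s = window_s - lag_s  # time left before the secondary falls off the oplog
    return {
        "window_s": window_s,
        "headroom_s": headroom_s,
        "lag_fraction": lag_s / window_s,
        "at_risk": lag_s > max_lag_fraction * window_s,
    }


def headroom_dropped(current_s: float, normal_s: float, floor: float = 0.25) -> bool:
    """True when headroom falls below `floor` of its normal value."""
    return current_s < floor * normal_s


start = datetime(2024, 1, 1)
h = oplog_health(start, start + timedelta(hours=24), lag_s=3600)
print(h["window_s"], h["headroom_s"], h["at_risk"])  # 86400.0 82800.0 False
```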

Index and Query Performance Metrics

Indexes and query patterns directly affect throughput and latency. Tracking these metrics highlights slow queries, collection scans, and missing indexes.

- indexMissRatio: The ratio of queries that don’t use indexes. A high ratio increases CPU and I/O usage. Threshold: aim for index utilization > 95% for OLTP workloads.

- scannedObjects vs. returnedObjects: Compares the number of documents scanned versus returned in query results. A large gap signals inefficient query filters. Threshold: maintain a scan/return ratio below 10:1.

- queryLatency: Measures the time taken for query execution at the database level. A consistent rise implies poor indexes or cache contention. Threshold: average latency should stay under 100 ms for typical workloads.
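To compute these ratios from real data, you can enable the database profiler for slow operations (for example, db.setProfilingLevel(1, { slowms: 100 }) in mongosh) and read documents from the system.profile collection. The Python sketch below assumes a list of such documents using the profiler’s op, ns, planSummary, docsExamined, and nreturned fields, and applies the 95% index-utilization and 10:1 scan/return thresholds above. Treating any plan containing COLLSCAN as an unindexed query is a simplification, and the sample namespaces are hypothetical.

```python
def triage_profile(entries: list, max_scan_ratio: float = 10.0) -> dict:
    """Index utilization and inefficient namespaces from system.profile documents."""
    queries = [e for e in entries if e.get("op") == "query"]
    if not queries:
        return {"index_utilization": 1.0, "inefficient_namespaces": []}
    collscans = [e for e in queries if "COLLSCAN" in e.get("planSummary", "")]
    inefficient = sorted({
        e["ns"]
        for e in queries
        # Guard against nreturned == 0 so queries returning nothing still get flagged.
        if e.get("docsExamined", 0) > max_scan_ratio * max(e.get("nreturned", 0), 1)
    })
    return {
        "index_utilization": 1 - len(collscans) / len(queries),
        "inefficient_namespaces": inefficient,
    }


profile = [
    {"op": "query", "ns": "app.transactions", "planSummary": "COLLSCAN",
     "docsExamined": 50_000, "nreturned": 20},
    {"op": "query", "ns": "app.users", "planSummary": "IXSCAN { email: 1 }",
     "docsExamined": 1, "nreturned": 1},
]
report = triage_profile(profile)
print(report)  # {'index_utilization': 0.5, 'inefficient_namespaces': ['app.transactions']}
```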

Error and Cursor Metrics

These metrics help detect failed operations, timeouts, and resource exhaustion during query execution.

- asserts: Counts internal database assertions or errors (e.g., regular, warning, user, rollovers). Increasing user asserts often means client-side issues or malformed queries. Threshold: alert on any sustained increase beyond baseline +20%.

- cursorTimedOut: Tracks the number of cursors that expired before completion. Frequent timeouts point to unoptimized queries or slow clients. Threshold: keep timeouts under 1–2% of total active cursors.
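Both signals come from cumulative serverStatus counters (asserts.user and metrics.cursor.timedOut), so the checks below work on deltas between two samples. This Python sketch assumes you track a baseline of user asserts per sampling interval yourself and, as a simplification, divides the cursors that timed out during the interval by the cursors currently open (metrics.cursor.open.total). The function names are hypothetical.

```python
def user_asserts_alert(prev: dict, curr: dict, baseline_per_interval: float,
                       tolerance: float = 0.20) -> bool:
    """True when new user asserts exceed the baseline by more than `tolerance`."""
    new_asserts = curr["asserts"]["user"] - prev["asserts"]["user"]
    return new_asserts > baseline_per_interval * (1 + tolerance)


def cursor_timeout_ratio(prev: dict, curr: dict) -> float:
    """Cursors that timed out this interval, relative to currently open cursors."""
    timed_out = curr["metrics"]["cursor"]["timedOut"] - prev["metrics"]["cursor"]["timedOut"]
    open_total = curr["metrics"]["cursor"]["open"]["total"]
    return timed_out / open_total if open_total else 0.0


prev = {"asserts": {"user": 1_000}, "metrics": {"cursor": {"timedOut": 10, "open": {"total": 180}}}}
curr = {"asserts": {"user": 1_130}, "metrics": {"cursor": {"timedOut": 12, "open": {"total": 200}}}}
print(user_asserts_alert(prev, curr, baseline_per_interval=100))  # True (130 > 120)
print(cursor_timeout_ratio(prev, curr))  # 0.01
```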

By consistently monitoring these MongoDB metrics through CubeAPM, teams can correlate database behavior with application traces and infrastructure events. This unified visibility enables proactive tuning, capacity planning, and rapid troubleshooting before performance issues impact end users.

How to Monitor MongoDB Databases with CubeAPM Step-by-Step

Step 1: Deploy CubeAPM server

First, install the CubeAPM backend (single binary, container, or Kubernetes). Use the Install CubeAPM docs to set up the control plane that will receive your MongoDB data. This gives you the central UI, metrics API, alert engine, and data storage.

Refer: Install CubeAPM docs

Step 2: Configure authentication & core settings

Next, configure CubeAPM’s config.properties (or environment variables) with your token, auth.key.session, and optional SMTP / webhook info. This is required before any telemetry (including MongoDB) can flow in.

Refer: CubeAPM Configure docs

Step 3: Set up OpenTelemetry Collector to scrape MongoDB

Use the OTel Collector as your ingestion pipeline.

- Add a mongodb receiver block in the collector config (the mongodb receiver ships in the OpenTelemetry Collector Contrib distribution) to scrape metrics from your MongoDB instance(s).

- Specify endpoints (e.g., localhost:27017 or a cluster host), auth (if needed), and TLS settings.

- Add the Prometheus receiver or scraper if you’re using a MongoDB exporter that exposes /metrics.

Once the pipeline’s exporter points at CubeAPM’s metrics endpoint, the scraped data flows into CubeAPM.

Refer: Infra Monitoring / Prometheus Metrics in CubeAPM

Step 4: Ship MongoDB logs (slow queries, replication events)

Make sure MongoDB’s log output (including slow query and replication events) is forwarded into CubeAPM’s log ingestion pipeline. Use OpenTelemetry logs, Fluentd, or another log forwarder. Once logs arrive, they link with metrics and traces for context.

Refer: CubeAPM Logs documentation

Step 5: Instrument application layers (traces) that call MongoDB

In your services (Java, Python, Node.js, etc.), instrument the database client with the OpenTelemetry SDK (or use auto-instrumentation). The resulting traces include spans around MongoDB calls, so you can see which query, issued by which API, caused latency.

Refer: CubeAPM Instrumentation / OpenTelemetry docs

Step 6: Enable alerts for MongoDB metrics

Once metrics and logs are flowing, configure alert rules for key MongoDB signals (replication lag, cache saturation, query latency). In CubeAPM, set up SMTP or webhook integrations so alerts reach your on-call team.

Refer: CubeAPM Alerting → connect with email/webhook

Step 7: Validate MongoDB dashboards & metrics

Finally, open CubeAPM UI and check whether you see MongoDB-specific dashboards—metrics like mongodb_connections_current, mongodb_op_latencies, replication lag, cache usage, etc. Confirm log entries from MongoDB appear. If everything is visible, your MongoDB monitoring is live.

Real-World Example: Monitoring MongoDB Databases with CubeAPM

Challenge: Diagnosing Latency in a FinTech Transaction System

A global FinTech platform managing real-time wallet transactions noticed intermittent latency spikes during payment settlements. The backend relied on a sharded MongoDB cluster for transaction records, but response times occasionally exceeded 800 ms—triggering failed API calls and reconciliation mismatches. Traditional logs showed only query slowdowns, without pinpointing which shard, service, or query pattern caused the lag. Engineers lacked full-stack visibility across MongoDB metrics, slow query logs, and application traces.

Solution: Unified MongoDB Observability with CubeAPM

The team deployed CubeAPM to achieve complete MongoDB observability. Using the OpenTelemetry Collector, they configured a MongoDB exporter to send live metrics such as mongodb_op_latencies_total, mongodb_repl_lag_seconds, and mongodb_wiredtiger_cache_bytes_used to CubeAPM’s ingestion endpoint.

MongoDB slow query logs were forwarded through the log pipeline, while backend services (written in Go and Node.js) were instrumented with OpenTelemetry SDKs. This created a single view connecting API spans → MongoDB queries → underlying cluster metrics. Alerts were set up for replication lag (> 1s), cache saturation (> 90 %), and slow query latency (> 500 ms).

Fixes: Insights and Optimization Steps

Within the first hour of deployment, CubeAPM dashboards revealed:

- A single shard (shard-txn-02) showing replication lag of up to 4s during peak load.

- High WiredTiger cache eviction rates correlating with latency spikes.

- Several unindexed queries in the transactions collection performing full collection scans.

By correlating traces with metrics, the engineers identified that missing compound indexes (user_id, txn_date) were the primary culprit. After adding the indexes and tuning cache allocation from 6 GB to 10 GB, replication lag stabilized below 200 ms. CubeAPM’s anomaly detection confirmed latency reduction and cache efficiency improvement in real time.

Results: Faster Transactions and Predictable Scaling

Post-optimization, average MongoDB query latency dropped from 780 ms to 120 ms, and replication lag remained consistently below 250 ms during peak traffic.

The company reported a 65% reduction in payment processing time and eliminated timeout-related errors. With CubeAPM’s unified dashboards, DevOps teams now receive automated alerts before performance degradation occurs, ensuring compliance with strict SLAs across all global regions.

Verification Checklist and Alert Rules for MongoDB Monitoring with CubeAPM

Before diving into performance analysis, it’s essential to verify that your MongoDB monitoring pipeline is collecting accurate and complete data. A proper verification ensures that CubeAPM is ingesting all relevant metrics, logs, and traces, giving you end-to-end visibility before alerts go live.

Verification Checklist for MongoDB Monitoring

- MongoDB Metrics Visibility: Confirm that key metrics such as mongodb_connections_current, mongodb_op_latencies_total, and mongodb_repl_lag_seconds are visible in CubeAPM dashboards.

- OTel Collector Connection: Ensure the OpenTelemetry Collector is running and successfully forwarding MongoDB metrics to CubeAPM’s ingestion endpoint.

- Exporter Status: Check that the Prometheus or MongoDB exporter endpoint (/metrics) is active and accessible from the collector node.

- Log Ingestion: Verify MongoDB slow query and error logs are appearing in CubeAPM’s Log view under the correct cluster or namespace.

- Trace Correlation: Validate that MongoDB query spans are linked with the correct application traces within CubeAPM’s MELT view.

- Alerting Integration: Confirm that email or Slack integrations are active by sending a test notification through CubeAPM’s alert connector.

- Dashboard Accuracy: Review that metrics refresh at regular intervals and units (milliseconds, bytes, operations) are consistent across all widgets.
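Part of this checklist can be scripted. The Python sketch below parses Prometheus text exposition output (what an exporter serves on /metrics; you could fetch it with urllib.request.urlopen against your exporter’s URL) and reports which expected metric names are missing. The names used are the ones referenced in this article; exporters name their metrics differently, so substitute whatever yours actually exposes.

```python
EXPECTED = (
    "mongodb_connections_current",
    "mongodb_op_latencies_total",
    "mongodb_repl_lag_seconds",
)


def metric_names(exposition: str) -> set:
    """Metric names present in Prometheus text exposition format."""
    names = set()
    for line in exposition.splitlines():
        line = line.strip()
        if not line or line.startswith("#"):  # skip blanks and HELP/TYPE comments
            continue
        # The name ends at the first '{' (labels) or whitespace (value).
        names.add(line.split("{", 1)[0].split()[0])
    return names


def missing_metrics(exposition: str, expected=EXPECTED) -> list:
    present = metric_names(exposition)
    return [name for name in expected if name not in present]


sample = """\
# HELP mongodb_connections_current Open connections
# TYPE mongodb_connections_current gauge
mongodb_connections_current{state="current"} 42
mongodb_repl_lag_seconds{member="db1:27017"} 0.4
"""
print(missing_metrics(sample))  # ['mongodb_op_latencies_total']
```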

Alert Rules for MongoDB Monitoring

1. Replication and Cluster Health Alerts

Replication lag and cluster synchronization issues can lead to stale reads or secondary node desyncs. These alert rules ensure early detection and cluster consistency.

Alert Rule 1: Replication Lag Threshold

Trigger an alert when MongoDB secondaries lag more than 2 seconds behind the primary.

```yaml
- alert: MongoDBReplicationLagHigh
  expr: mongodb_repl_lag_seconds > 2
  for: 1m
  labels:
    severity: critical
  annotations:
    summary: "High MongoDB Replication Lag"
    description: "Replication lag has exceeded 2 seconds. Check network or secondary load."
```

Alert Rule 2: Primary Election Frequency

Trigger when multiple elections occur within a short period, indicating cluster instability.

```yaml
- alert: MongoDBPrimaryElectionsFrequent
  # Assumes the exporter exposes elections as a cumulative counter,
  # so count the new elections within the 5-minute window.
  expr: increase(mongodb_repl_election_count[5m]) > 3
  labels:
    severity: warning
  annotations:
    summary: "Frequent MongoDB Primary Elections Detected"
    description: "More than 3 elections in 5 minutes. Review replica set configuration and node health."
```

2. Query Performance and Cache Alerts

These alerts focus on identifying latency, inefficient queries, and cache saturation that degrade database throughput.

Alert Rule 1: High Query Latency

Trigger an alert when the average query latency exceeds 500 ms for sustained periods.

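```yaml
- alert: MongoDBHighQueryLatency
  # Latency and op totals are cumulative, so compare their rates over a window.
  # opLatencies are reported in microseconds: 500 ms = 500000 µs.
  # Adjust the threshold if your exporter converts units.
  expr: rate(mongodb_op_latencies_latency_total[5m]) / rate(mongodb_op_latencies_ops_total[5m]) > 500000
  for: 2m
  labels:
    severity: high
  annotations:
    summary: "MongoDB Query Latency Spike Detected"
    description: "Average query latency above 500 ms. Possible missing index or slow I/O subsystem."
```

Alert Rule 2: WiredTiger Cache Utilization High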

Trigger when cache utilization crosses 90%, indicating risk of eviction storms.

```yaml
- alert: MongoDBCacheUsageHigh
  expr: mongodb_wiredtiger_cache_bytes_used / mongodb_wiredtiger_cache_bytes_total > 0.9
  for: 2m
  labels:
    severity: warning
  annotations:
    summary: "WiredTiger Cache Utilization Above 90%"
    description: "High cache usage detected. Consider resizing the cache or reviewing the write workload."
```

These verification and alerting configurations ensure CubeAPM provides accurate, actionable insights into MongoDB’s health. Once verified, teams can confidently rely on real-time alerts, dashboards, and trace correlation to detect anomalies long before they impact end users.

Conclusion

Monitoring MongoDB databases is vital for ensuring high availability, consistent performance, and predictable scalability, especially in production environments handling mission-critical workloads. Without proper observability, issues like replication lag, cache saturation, or unindexed queries can silently degrade user experience.

CubeAPM simplifies MongoDB monitoring by unifying metrics, logs, and traces into one OpenTelemetry-native platform. Its real-time dashboards, anomaly detection, and smart sampling help DevOps teams detect and fix problems before they impact performance.

By adopting CubeAPM for MongoDB monitoring, businesses gain proactive visibility, faster root-cause analysis, and predictable cost at just $0.15/GB ingestion. Start monitoring MongoDB with CubeAPM today and achieve complete observability for your database infrastructure.

Disclaimer: The information in this article reflects the latest details available at the time of publication and may change as technologies and products evolve.

FAQs

1. What is MongoDB monitoring used for?

MongoDB monitoring tracks metrics like query latency, replication lag, and cache usage to ensure the database remains healthy, performant, and resilient under load.

2. How do I monitor MongoDB performance?

You can monitor MongoDB using OpenTelemetry and Prometheus exporters integrated with CubeAPM to visualize metrics, logs, and traces in unified dashboards.

3. What are the key MongoDB metrics to monitor?

Critical metrics include opLatencies, replicationLag, connectionsCurrent, cacheUsedPercent, and locksQueuedTotal, as they directly reflect database health and performance.

4. How can I detect slow queries in MongoDB?

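MongoDB logs operations slower than the slowms threshold (100 ms by default) to its diagnostic log, so check that the threshold suits your workload and forward the log to CubeAPM. CubeAPM correlates slow queries with traces to show which API endpoints or workloads are causing delays.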

5. Why is CubeAPM ideal for MongoDB monitoring?

CubeAPM offers native OpenTelemetry support, prebuilt MongoDB dashboards, and cost-effective pricing. It captures every metric and trace across MongoDB clusters for faster diagnostics and efficient scaling.