Exit Code 137 in Kubernetes means a container’s process was terminated with SIGKILL (128 + 9), most often by the Linux Out-of-Memory (OOM) killer after the container exceeded its memory limit. These failures cause pods to restart abruptly and can ripple into wider outages. In the Sysdig 2023 Cloud-Native Report, 49% of containers were found running without memory limits, making OOM events a common reliability risk. For businesses running microservices, frequent OOM kills translate into dropped requests and restart loops across dependent services.

CubeAPM helps teams stay ahead of Exit Code 137 failures by correlating memory usage trends, pod lifecycle events, OOMKilled terminations, and node pressure metrics. With OpenTelemetry-native ingestion, it links spikes in memory demand to application code or misconfigured limits. This real-time visibility reduces both detection and resolution time for out-of-memory events.

In this guide, we’ll cover what Exit Code 137 means, why it happens, how to fix it, and how CubeAPM enables proactive monitoring and alerting to prevent repeat failures.

What is Exit Code 137 (Out of Memory) in Kubernetes

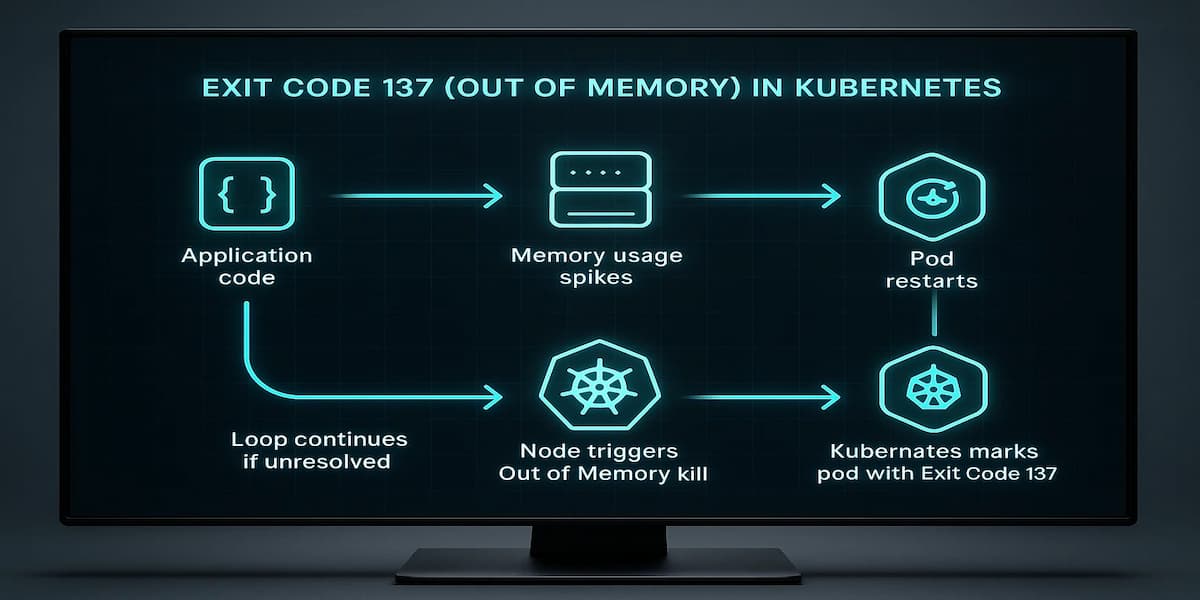

Exit Code 137 means the container’s main process received SIGKILL. In the out-of-memory case, the Linux OOM killer terminated it after the container exceeded the memory limit enforced by its cgroup. In Kubernetes, this shows up as a terminated container with the reason OOMKilled. It is not an application crash by itself but a forced termination by the kernel when the container tries to allocate more memory than allowed.
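The number itself encodes the signal: a process killed by signal N is reported with exit code 128 + N, so 137 corresponds to signal 9 (SIGKILL). The sketch below only illustrates that arithmetic; the function name `exit_code_signal` is made up for this example.

```shell
# Hypothetical helper: map a container exit code to the signal behind it.
exit_code_signal() {
  code="$1"
  if [ "$code" -gt 128 ]; then
    sig=$((code - 128))
    # kill -l <number> prints the signal name without the SIG prefix
    echo "signal $sig (SIG$(kill -l "$sig"))"
  else
    echo "exit code $code is not a signal exit"
  fi
}

exit_code_signal 137   # signal 9 (SIGKILL)
```

Anything that sends SIGKILL produces 137, so the exit code alone does not prove an OOM kill. The termination reason reported by Kubernetes is what confirms it.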

You can confirm this by describing the pod:

kubectl describe pod <pod-name>

The output shows the container’s last state as Terminated with Reason: OOMKilled and Exit Code: 137. Logs often stop abruptly before the point of failure, which makes these errors harder to debug without correlated metrics.
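When the describe output is long, the same fields can be pulled directly from the pod status. This is a minimal sketch that assumes `kubectl` is already pointed at the right cluster; the function name `last_termination` is hypothetical.

```shell
# Hypothetical helper: print name, last termination reason, and exit code
# for every container in a pod, tab-separated.
last_termination() {
  pod="$1"
  ns="${2:-default}"
  kubectl get pod "$pod" -n "$ns" -o jsonpath='{range .status.containerStatuses[*]}{.name}{"\t"}{.lastState.terminated.reason}{"\t"}{.lastState.terminated.exitCode}{"\n"}{end}'
}

# Usage: last_termination <pod-name> <namespace>
```

A container that was OOM-killed on its previous run should show OOMKilled and 137 in the second and third columns; containers that have never been terminated leave those columns empty.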

The impact on workloads can be significant. Kubernetes will restart the container automatically, but if the memory limits or code-level inefficiencies aren’t fixed, the pod will continue to loop through OOMKills. This can cause lost requests, failed background jobs, or cascading failures in microservice-based applications. For stateful services, repeated OOM events may even lead to data loss or corruption if writes are interrupted mid-operation.

Why Exit Code 137 (Out of Memory) in Kubernetes Happens

Exit Code 137 doesn’t come from a single root cause. It’s Kubernetes’ way of saying the container crossed a memory boundary, but the reasons behind it vary widely. Some stem from cluster configuration, while others trace back to application code or runtime behavior. Understanding these patterns, and knowing how to confirm them, is key to preventing repeated OOMKilled loops.

1. Improper resource limits

When memory requests and limits are set too low, even normal workload spikes can push usage beyond the defined cap. Kubernetes enforces these limits strictly, and the Linux OOM killer terminates the process once it breaches. For example, a service capped at 256Mi may briefly surge during traffic spikes, triggering repeated OOMKills until its configuration is tuned.

Quick check:

kubectl top pod <pod-name> -n <namespace>

Compare memory usage against the configured requests and limits in the pod spec. Note that kubectl top requires the metrics-server add-on.
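To make the comparison concrete, the reading from `kubectl top` can be expressed as a percentage of the limit. The helpers below are a rough sketch with made-up names (`to_mib`, `usage_pct`); they assume whole-number quantities with Ki, Mi, or Gi suffixes and do not cover every Kubernetes quantity format.

```shell
# Hypothetical helpers; only integer Ki, Mi, and Gi quantities are handled.
to_mib() {
  case "$1" in
    *Gi) echo $(( ${1%Gi} * 1024 )) ;;
    *Mi) echo "${1%Mi}" ;;
    *Ki) echo $(( ${1%Ki} / 1024 )) ;;
    *)   echo "unsupported quantity: $1" >&2; return 1 ;;
  esac
}

# Integer percentage of the limit currently in use (rounded down).
usage_pct() {
  used="$(to_mib "$1")" || return 1
  limit="$(to_mib "$2")" || return 1
  echo $(( used * 100 / limit ))
}

usage_pct 240Mi 256Mi   # 93
```

A service that sits at 93% of a 256Mi cap under normal load has almost no room for a traffic spike, which matches the pattern described above.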

2. Application-level memory leaks

Some applications gradually consume more memory over time due to inefficient code, unbounded caches, or missing cleanup routines. In long-running pods like web APIs or background workers, memory can steadily climb until the container maxes out its allocation and is killed.

Quick check:

kubectl logs <pod-name> -c <container> --previous

Look for patterns of increasing memory usage or out-of-memory messages before termination.
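Logs only show memory growth if the application prints it, so it often helps to sample usage directly over time. The loop below is a minimal sketch: `sample_memory` is a hypothetical name, the count and interval defaults are arbitrary, and it assumes metrics-server is installed so that `kubectl top` works.

```shell
# Hypothetical helper: print a timestamped memory reading every <interval> seconds.
sample_memory() {
  pod="$1"; ns="$2"; count="${3:-10}"; interval="${4:-30}"
  i=0
  while [ "$i" -lt "$count" ]; do
    # Column 3 of `kubectl top pod --no-headers` is the memory reading
    mem="$(kubectl top pod "$pod" -n "$ns" --no-headers | awk '{print $3}')"
    echo "$(date +%H:%M:%S) $mem"
    i=$((i + 1))
    if [ "$i" -lt "$count" ]; then sleep "$interval"; fi
  done
}

# Usage: sample_memory <pod-name> <namespace> 20 60
```

Readings that climb steadily under constant load, without ever dropping back, point to a leak rather than a limit that is simply too low.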

3. Node memory pressure

Exit Code 137 can also occur when multiple pods on the same node collectively use too much memory. The kubelet first tries to evict pods to reclaim memory; if the node runs out faster than the kubelet can react, the Linux OOM killer chooses a process to terminate based on its OOM score, which Kubernetes derives from the pod’s Quality of Service (QoS) class. BestEffort pods are the first candidates, followed by Burstable pods that are using more than they requested.

Quick check:

kubectl describe node <node-name>

Check for “MemoryPressure” conditions or eviction events in the node status.
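To scan every node at once instead of describing them one by one, the MemoryPressure condition can be read with a jsonpath filter. This is a sketch under the assumption that the current kubeconfig has permission to list nodes; the function name `node_memory_pressure` is hypothetical.

```shell
# Hypothetical helper: list each node with the status of its MemoryPressure condition.
node_memory_pressure() {
  kubectl get nodes -o jsonpath='{range .items[*]}{.metadata.name}{"\t"}{.status.conditions[?(@.type=="MemoryPressure")].status}{"\n"}{end}'
}

# Usage: node_memory_pressure
```

Nodes that report True in the second column are the ones to inspect for evictions and kernel OOM kills.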

4. Data-heavy workloads

Pods handling image processing, analytics queries, or machine learning jobs may require far more memory than anticipated. If the memory cap doesn’t match the workload’s peak usage, the container will hit the limit and be terminated. This is especially common in batch jobs that expand datasets in memory.

Quick check:

kubectl top pod <pod-name> --containers

Observe memory usage during job execution to confirm peak requirements exceed configured limits.

5. Misconfigured runtimes

Languages and runtimes like Java, Python, or Node.js may request more memory than the pod’s defined limit if not configured carefully. A common case is the JVM: versions that predate container support (added in JDK 10 and backported to 8u191) size the heap relative to the node’s memory, not the container’s cgroup limit. As a result, the process overshoots its allowance and is OOMKilled.

Quick check:

Inspect runtime configuration (e.g., -Xmx for Java, NODE_OPTIONS=--max-old-space-size=<MiB> for Node.js) and ensure it fits within the pod’s memory limit.

How to Fix Exit Code 137 (Out of Memory) in Kubernetes

Exit Code 137 won’t go away with restarts—you need to remove the memory pressure. Use the steps below (mapped to the causes) and verify after each change.

1) Right-size resource requests and limits

If usage regularly hits the cap, increase the limit and raise the request so the scheduler reserves enough memory.

Quick check:

kubectl top pod <pod-name> -n <namespace>

Fix:

kubectl set resources deployment <deploy-name> -n <namespace> --containers=<container> --requests=memory=512Mi --limits=memory=1Gi

2) Eliminate unbounded growth (leaks, caches, buffers)

Unbounded objects, in-memory queues, or caches that don’t evict will climb until the container is killed.

Quick check:

kubectl logs <pod-name> -c <container> --previous

Look for increasing allocations or out-of-memory messages prior to termination.

Fix:

- Add bounded caching/eviction policies and cap queue sizes

- Release buffers promptly; stream large payloads instead of fully loading into memory

- Load-test locally and in staging to confirm flat memory profiles over time

3) Relieve node-level memory pressure

Crowded nodes trigger the kernel OOM killer across pods—even if your limit seems fine.

Quick check:

kubectl describe node <node-name>

Check for MemoryPressure and recent eviction/OOM events.

Fix:

- Scale the node pool or move to larger nodes

- Increase pod requests so the scheduler packs less aggressively

- Prefer Guaranteed QoS for critical pods by setting requests equal to limits (for both CPU and memory, on every container)
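After changing requests and limits, it is worth checking which QoS class Kubernetes actually assigned, since one container without matching values is enough to drop the pod to Burstable. A minimal sketch, with `pod_qos` as a hypothetical function name:

```shell
# Hypothetical helper: print the QoS class Kubernetes assigned to a pod.
pod_qos() {
  kubectl get pod "$1" -n "${2:-default}" -o jsonpath='{.status.qosClass}'
}

# Usage: pod_qos <pod-name> <namespace>
```

The value is one of Guaranteed, Burstable, or BestEffort.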

4) Match limits to peak usage for data-heavy work

ETL, analytics, image/video, and ML jobs have bursty peaks that exceed “average” sizing.

Quick check:

kubectl top pod <pod-name> --containers

Observe peak usage during the heaviest phase.

Fix:

- Raise memory limit to observed peak with a safe buffer

- Split jobs into smaller batches or use chunked/streaming processing

- Consider Vertical Pod Autoscaler (VPA) for adaptive sizing
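As a worked example of "observed peak with a safe buffer", the arithmetic can be sketched as follows. The function name `limit_with_buffer` and the 25% default are assumptions for illustration, not a recommendation from the Kubernetes project; pick a buffer that reflects how variable the job's input is.

```shell
# Hypothetical helper: turn an observed peak (MiB) into a limit with headroom.
limit_with_buffer() {
  peak_mib="$1"
  buffer_pct="${2:-25}"   # assumed default buffer
  echo "$(( peak_mib * (100 + buffer_pct) / 100 ))Mi"
}

limit_with_buffer 800 25   # 1000Mi
```

The result can be passed straight to `kubectl set resources ... --limits=memory=<value>`.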

5) Make the application container-aware

Many runtimes default to machine-level memory, not the container cgroup. Ensure the app honors the pod limit.

Quick check:

- Inspect startup args/env for any “max memory”/“heap”/“buffer”/“pool” settings

- Confirm the configured maximum is below the pod memory limit with headroom for runtime/GC/native allocations

Fix:

- Set a cap for the process’s total memory footprint that fits inside the pod limit (leave ~25–30% headroom)

- Avoid unbounded thread pools and per-request buffers; bound concurrency

- Fail fast on oversize inputs instead of buffering everything in memory
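One way to keep a runtime inside the pod limit is to derive its heap cap from the cgroup limit at startup instead of hard-coding it. The entrypoint fragment below is a sketch for Node.js; the function names, the `CGROUP_ROOT` override, and the 70% default are assumptions made for this example.

```shell
# Read the container memory limit in bytes (cgroup v2 path first, then v1).
# Prints nothing when no limit is set. CGROUP_ROOT is overridable for testing.
read_limit_bytes() {
  root="${CGROUP_ROOT:-/sys/fs/cgroup}"
  if [ -r "$root/memory.max" ]; then
    limit="$(cat "$root/memory.max")"
  elif [ -r "$root/memory/memory.limit_in_bytes" ]; then
    limit="$(cat "$root/memory/memory.limit_in_bytes")"
  else
    limit=""
  fi
  # cgroup v2 reports the literal string "max" when unlimited
  if [ "$limit" != "max" ]; then echo "$limit"; fi
}

# Heap cap in MiB as a percentage of the limit (70 leaves about 30% headroom).
heap_mib() {
  echo $(( $1 / 1024 / 1024 * ${2:-70} / 100 ))
}

limit="$(read_limit_bytes)"
if [ -n "$limit" ]; then
  export NODE_OPTIONS="--max-old-space-size=$(heap_mib "$limit")"
fi
```

With a 1Gi limit this yields a 716 MiB old-space cap. On JVMs with container support, a similar effect is available without scripting by passing `-XX:MaxRAMPercentage=70.0`.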

6) Reduce concurrency and memory amplification

High parallelism multiplies per-request memory. Even well-sized limits can OOM under spikes.

Quick check:

- Compare current pod concurrency (workers, threads, async tasks) with per-request memory profile

- Correlate traffic spikes with OOMKills

Fix:

- Lower workers/parallelism or adopt adaptive concurrency

- Use backpressure and request queue limits at the ingress or job controller
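A rough way to pick a worker count is to divide the memory left after the process baseline by the per-request footprint. The sketch below uses a made-up function name and illustrative numbers; the baseline and per-request figures have to come from profiling your own service.

```shell
# Hypothetical helper: upper bound on concurrent workers for a given limit.
# All arguments are in MiB: limit, idle baseline, peak memory per request.
max_workers() {
  limit_mib="$1"; baseline_mib="$2"; per_request_mib="$3"
  echo $(( (limit_mib - baseline_mib) / per_request_mib ))
}

max_workers 1024 256 64   # 12
```

Treat the result as a ceiling and configure somewhat fewer workers, since per-request memory is rarely uniform.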

7) Use memory-efficient patterns

Sometimes the fix is architectural rather than numeric.

Quick check:

- Identify steps that fully materialize large datasets, create big copies, or compress/decompress in memory

Fix:

- Stream I/O, paginate queries, process in windows/chunks

- Prefer zero-copy or in-place transforms where possible

- Externalize large intermediate state to object storage or temp files
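The difference between materializing and streaming can be illustrated with a small file-processing sketch. The function name `sum_column` and the CSV layout are assumptions for this example.

```shell
# Hypothetical helper: total the second comma-separated column of a file.
# awk reads one line at a time, so memory use stays flat regardless of file size.
sum_column() {
  awk -F',' '{ total += $2 } END { print total + 0 }' "$1"
}

# Anti-pattern for comparison: data="$(cat big.csv)" holds the whole file in memory.
# Usage: sum_column big.csv
# Compressed input can be streamed the same way: gunzip -c big.csv.gz | awk ...
```

The same idea applies in application code: iterate over records, pages, or chunks rather than loading a full dataset before processing it.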

8) Verify the fix and prevent regressions

Each change should flatten memory curves and stop OOM loops.

Quick check:

kubectl get pods -n <namespace> -w

Watch for a RESTARTS count that stays flat and no new OOMKilled events.

Fix:

- Add SLOs and alerts on pod memory utilization vs. limit (e.g., >85% for 5m)

- Bake a load test into CI to catch memory regressions before release
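For a quick namespace-wide check after a fix, the last termination reason of every pod can be filtered for OOMKilled. This is a sketch that assumes list access to pods in the namespace; `oom_killed_pods` is a hypothetical name.

```shell
# Hypothetical helper: list pods whose containers were last terminated by an OOM kill.
oom_killed_pods() {
  ns="${1:-default}"
  kubectl get pods -n "$ns" -o jsonpath='{range .items[*]}{.metadata.name}{"\t"}{.status.containerStatuses[*].lastState.terminated.reason}{"\t"}{.status.containerStatuses[*].restartCount}{"\n"}{end}' \
    | awk -F'\t' '$2 ~ /OOMKilled/ { print $1 " restarts=" $3 }'
}

# Usage: oom_killed_pods <namespace>
```

Because the last-terminated state persists until the next restart, a pod can still appear here after a successful fix; what matters is that its restart count stops increasing.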

Monitoring Exit Code 137 (Out of Memory) in Kubernetes with CubeAPM

When a pod exits with Exit Code 137, the fastest route to the root cause is to correlate four signal streams: Kubernetes events and container status (e.g., OOMKilled, Evicted), pod and node memory metrics (requests, limits, usage), logs (including kernel messages such as “Killed process <pid> due to out-of-memory”), and deployment rollouts or scaling actions. CubeAPM ingests all of these via the OpenTelemetry Collector and stitches them into timelines, so you can see what pushed the container over its memory limit: a spike, a leak, or node resource exhaustion.

Step 1 — Install CubeAPM (Helm)

Install (or upgrade) CubeAPM with your values file (endpoint, auth, retention, etc.):

helm install cubeapm cubeapm/cubeapm -f values.yaml

Upgrade if already installed:

helm upgrade cubeapm cubeapm/cubeapm -f values.yaml

Step 2 — Deploy the OpenTelemetry Collector (DaemonSet + Deployment)

Run the Collector both as a DaemonSet (for node-level stats, kubelet scraping, and events) and as a Deployment (for central pipelines).

helm install otel-collector-daemonset open-telemetry/opentelemetry-collector -f otel-collector-daemonset.yaml

helm install otel-collector-deployment open-telemetry/opentelemetry-collector -f otel-collector-deployment.yaml

For complete Kubernetes monitoring, the Collector needs to run in both modes.

Step 3 — Collector Configs Focused on Exit Code 137

Keep the configurations distinct for the DaemonSet and central Deployment to capture the right signals.

3a) DaemonSet config (otel-collector-daemonset.yaml)

Key idea: collect kubelet stats (memory), Kubernetes events (OOMKilled, Evicted), and kube-state-metrics if present.

receivers:
  k8s_events: {}
  kubeletstats:
    collection_interval: 30s
    auth_type: serviceAccount
    endpoint: https://${NODE_NAME}:10250
    insecure_skip_verify: true
  prometheus:
    config:
      scrape_configs:
        - job_name: "kube-state-metrics"
          scrape_interval: 30s
          static_configs:
            - targets: ["kube-state-metrics.kube-system.svc.cluster.local:8080"]
processors:
  batch: {}
exporters:
  otlp:
    endpoint: ${CUBEAPM_OTLP_ENDPOINT}
    headers:
      x-api-key: ${CUBEAPM_API_KEY}
service:
  pipelines:
    metrics:
      receivers: [kubeletstats, prometheus]
      processors: [batch]
      exporters: [otlp]
    logs:
      receivers: [k8s_events]
      processors: [batch]
      exporters: [otlp]

- k8s_events captures OOM-related and Evicted events.

- kubeletstats surfaces per-pod memory usage vs limits.

- prometheus (using kube-state-metrics) provides restart counts and pod phase.

Note that k8s_events and the kube-state-metrics scrape are cluster-wide sources. If this exact config runs on every DaemonSet pod, each node reports the same events and metrics; moving those two receivers to the single-replica Deployment avoids the duplication.

These components are aligned with the config examples in the Infra Monitoring → Kubernetes section.

3b) Central Deployment config (otel-collector-deployment.yaml)

Key idea: receive OTLP telemetry, enrich with metadata, and export to CubeAPM.

receivers:
  otlp:
    protocols:
      grpc:
      http:
processors:
  resource:
    attributes:
      - key: cube.env
        value: production
        action: upsert
  batch: {}
exporters:
  otlp:
    endpoint: ${CUBEAPM_OTLP_ENDPOINT}
    headers:
      x-api-key: ${CUBEAPM_API_KEY}
service:
  pipelines:
    metrics:
      receivers: [otlp]
      processors: [resource, batch]
      exporters: [otlp]
    logs:
      receivers: [otlp]
      processors: [resource, batch]
      exporters: [otlp]
    traces:
      receivers: [otlp]
      processors: [resource, batch]
      exporters: [otlp]

Make sure CUBEAPM_OTLP_ENDPOINT and CUBEAPM_API_KEY are set via Helm values or Secrets. The resource processor shown here tags all telemetry with cube.env. Filtering by namespace or pod relies on Kubernetes attributes that are already present on the incoming data or added by a processor such as k8sattributes.

Step 4 — One-Line Helm Installs for kube-state-metrics (if missing)

helm repo add prometheus-community https://prometheus-community.github.io/helm-charts && helm repo update && helm install kube-state-metrics prometheus-community/kube-state-metrics -n kube-system --create-namespace

Step 5 — Verification (What You Should See in CubeAPM)

After a few minutes of data ingestion, you should confirm:

- Events timeline: OOMKilled and Evicted events aligned with memory usage spikes or node pressure.

- Memory graphs: Pods showing usage approaching or reaching their configured memory limits.

- Restart counts: From kube-state-metrics, pods restarting with a last terminated reason of OOMKilled.

- Logs: Kernel messages like Killed process <pid> due to out-of-memory.

- Rollout or scaling context: If triggered by a deployment, see ReplicaSet changes or scale-up/down in the same timeline.

Example Alert Rules for Detecting Exit Code 137 Out of Memory Errors in Kubernetes

1. High Pod Memory Usage

It’s best to catch pods before they cross the memory limit. This alert fires when a container is using more than 90% of its assigned limit for 5 minutes.

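- alert: PodHighMemoryUsage
  # Working set excludes reclaimable page cache; "> 0" skips containers without a limit,
  # which would otherwise divide by zero and always fire.
  expr: (container_memory_working_set_bytes{container!=""} / (container_spec_memory_limit_bytes{container!=""} > 0)) > 0.9
  for: 5m
  labels:
    severity: warning
  annotations:
    summary: "Pod {{ $labels.pod }} in namespace {{ $labels.namespace }} is close to memory limit"

2. OOMKilled Pod Restarts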

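This alert triggers when a container restarts more than 3 times in 10 minutes and its last termination reason is OOMKilled, helping you detect loops early. The restart counter from kube-state-metrics carries no reason label, so the rule joins it with kube_pod_container_status_last_terminated_reason.

- alert: PodOOMKilledRestarts
  expr: |
    (increase(kube_pod_container_status_restarts_total[10m]) > 3)
    and on(namespace, pod, container)
    (kube_pod_container_status_last_terminated_reason{reason="OOMKilled"} == 1)
  for: 1m
  labels:
    severity: critical
  annotations:
    summary: "Pod {{ $labels.pod }} in namespace {{ $labels.namespace }} restarted repeatedly due to OOMKilled"

3. Node Memory Pressure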

Sometimes the container itself isn’t misconfigured—the node runs out of memory. This alert warns when the kubelet marks a node as under memory pressure.

- alert: NodeMemoryPressure
  expr: kube_node_status_condition{condition="MemoryPressure",status="true"} == 1
  for: 2m
  labels:
    severity: critical
  annotations:
    summary: "Node {{ $labels.node }} is under MemoryPressure"

4. Correlated Memory Saturation + OOMKilled

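This composite alert reduces noise by only firing when high memory usage in the last 5 minutes coincides with a restart whose termination reason is OOMKilled. The cAdvisor and kube-state-metrics series carry different label sets, so the rule aggregates and matches on namespace, pod, and container.

- alert: PodMemorySaturationFollowedByOOM
  expr: |
    (
      max by (namespace, pod, container) (max_over_time(container_memory_working_set_bytes{container!=""}[5m]))
      / max by (namespace, pod, container) (container_spec_memory_limit_bytes{container!=""} > 0)
      > 0.9
    )
    and on(namespace, pod, container) (increase(kube_pod_container_status_restarts_total[5m]) > 0)
    and on(namespace, pod, container) (kube_pod_container_status_last_terminated_reason{reason="OOMKilled"} == 1)
  for: 1m
  labels:
    severity: critical
  annotations:
    summary: "Pod {{ $labels.pod }} in namespace {{ $labels.namespace }} hit memory saturation and was OOMKilled"

Conclusion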

Exit Code 137 in Kubernetes is more than just a numeric code — it’s a signal that a container exhausted its memory allowance and was forcefully killed. Left unresolved, these failures create restart loops, wasted compute, and degraded reliability across workloads.

The good news is that most causes, from misconfigured limits to node pressure, can be prevented with proactive monitoring and tuning. By setting realistic memory boundaries, optimizing workloads, and putting alerting in place, teams can avoid recurring out-of-memory issues.

CubeAPM simplifies this entire process. With Kubernetes events, container memory metrics, pod restarts, and node conditions all stitched into one view, it delivers real-time visibility into Exit Code 137. Teams can spot the warning signs, act before users are impacted, and keep production clusters running smoothly.

FAQs

1. What does Exit Code 137 mean in Kubernetes?

It means a container was terminated by the Linux Out-of-Memory (OOM) killer after breaching its memory limit. With CubeAPM, you can detect these events instantly and correlate them with memory usage trends and pod restarts.

2. How do I confirm if my pod was OOMKilled?

Kubernetes reports the reason as OOMKilled when this happens. CubeAPM makes it easier by centralizing events, logs, and metrics so you can see the termination reason in one place without manual digging.

3. Can Exit Code 137 occur even with memory limits set?

Yes. If the limits are too low or if the node itself is under memory pressure, pods can still be killed. CubeAPM helps by tracking both pod-level memory saturation and node-level pressure, giving you full context.

4. How do I prevent Exit Code 137 errors in production?

You need realistic resource limits, optimized workloads, and proactive monitoring. CubeAPM provides dashboards and alerts that highlight pods nearing their limits so you can act before users are impacted.

5. How is Exit Code 137 different from other Kubernetes errors?

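Exit Code 137 signals that the container was killed with SIGKILL, which in most cases means an OOM kill, while errors like CrashLoopBackOff or ErrImagePull point to other failure types (although repeated OOM kills can themselves put a pod into CrashLoopBackOff). CubeAPM distinguishes between these errors automatically and shows them on timelines, helping teams resolve the right problem faster.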