The main difference between Prometheus and Grafana is that Prometheus is a metrics collection and alerting system, while Grafana is a visualization and analytics layer that sits on top of data sources like Prometheus. Prometheus stores and queries time-series metrics. Grafana turns that data into dashboards, charts, and exploratory views.

This comparison exists because Prometheus and Grafana are often deployed together, yet they serve different roles. As environments grow beyond a few services, teams start questioning whether their current setup still delivers fast answers during incidents or simply produces more dashboards.

This guide examines how Prometheus and Grafana differ in deployment effort, scalability, data retention, and operational ownership, to help teams understand where each tool fits today and where trade-offs typically emerge at scale.

Prometheus vs Grafana Comparison

The table below compares Prometheus and Grafana across core capabilities such as deployment, pricing, retention, and support. It highlights how each tool is designed to operate and where their responsibilities differ in real-world environments.

| Feature | Prometheus | Grafana |
|---|---|---|
| Known for | Open-source metrics collection, time-series storage, and alerting | Open-source dashboards and a composable observability stack |
| Multi-Agent Support | No agents; configurable exporters and scrape targets only | Yes (OTel, Prometheus exporters, Loki, Tempo) |
| MELT Support | Metrics only | Full MELT coverage via connected backends |
| Deployment | Self-hosted, self-managed | SaaS, self-hosted, and self-managed |
| Pricing | Open source and free (Apache 2.0); infra, storage, and ops costs apply | OSS: free; Cloud Pro: from $19/month; Enterprise: ~$25,000/yr |
| Sampling Strategy | None | Head-based and tail-based via OTel |
| Data Retention | 15 days by default; configurable (e.g., 30d, 60d, 90d, 1y) | Cloud Free: 14 days; Pro: 30 days for logs/traces, 13 months for metrics |
| Support Channel & TAT | Community-based | Community-based; Pro: 8×5 email; Enterprise: 24×7, custom SLAs |

Prometheus vs Grafana: Feature Breakdown

This section breaks down how Prometheus and Grafana differ across core capabilities, based strictly on their official documentation and project design. Each subsection explains what the tools are built for, how they behave in real deployments, and where their responsibilities start and end.

Known for

Prometheus is known for metrics collection, time-series storage, and alerting. Its primary role is to scrape numeric metrics from configured targets at regular intervals, store them in a local time-series database, and evaluate alerting rules using PromQL. Prometheus is intentionally focused on metrics and alerting, not on dashboards, logs, or traces. This design is documented clearly in its project overview and data model documentation.

Grafana is known for data visualisation, dashboards, and exploratory analysis across multiple data sources. Grafana does not act as a primary data store by default. Instead, it connects to backends such as Prometheus, Loki, and Tempo to query, visualise, and correlate data. Its core strength lies in building dashboards, sharing insights across teams, and enabling ad-hoc exploration of metrics, logs, and traces from different systems.

Verdict: Prometheus answers “What is the metric value, and should we alert?” Grafana answers “How do we visualise, explore, and understand this data across systems?” They are often deployed together, but they are built for distinct responsibilities.
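To make this division of labour concrete: Grafana does not hold the metrics. Its Prometheus data source reads them from Prometheus over Prometheus's HTTP query API. The sketch below is a minimal illustration, not Grafana's actual code. It builds a URL for the documented instant-query endpoint `/api/v1/query` and parses the JSON vector response. The base URL `http://localhost:9090` (Prometheus's default port) and the helper names are assumptions, and no network call is made.

```python
import json
from urllib.parse import urlencode


def build_instant_query_url(base_url: str, promql: str) -> str:
    """Build a URL for Prometheus's instant-query endpoint (/api/v1/query)."""
    return f"{base_url.rstrip('/')}/api/v1/query?{urlencode({'query': promql})}"


def parse_vector_result(body: str) -> list[tuple[dict, float]]:
    """Turn an instant-query JSON response into (labels, value) pairs."""
    payload = json.loads(body)
    if payload.get("status") != "success":
        raise RuntimeError(payload.get("error", "query failed"))
    data = payload["data"]
    if data["resultType"] != "vector":
        raise ValueError(f"expected a vector result, got {data['resultType']!r}")
    # Each result carries its label set and a [unix_timestamp, "value-as-string"] pair.
    return [(item["metric"], float(item["value"][1])) for item in data["result"]]


# Example response, modelled on the shape shown in the Prometheus HTTP API docs.
SAMPLE_BODY = """
{"status": "success",
 "data": {"resultType": "vector",
          "result": [{"metric": {"__name__": "up", "job": "prometheus",
                                 "instance": "localhost:9090"},
                      "value": [1435781451.781, "1"]}]}}
"""

url = build_instant_query_url("http://localhost:9090", "up")
series = parse_vector_result(SAMPLE_BODY)
```

A visualisation layer repeats this pattern (query, parse, render) for every panel. This is why Grafana's behaviour depends so heavily on the backend it points at.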

Multi-Agent Support

Prometheus does not use agents in the traditional observability sense. Instead, it operates on a pull-based model, where a central Prometheus server scrapes metrics from configured endpoints and exporters over HTTP. Exporters expose metrics, but they do not manage telemetry pipelines, buffering, or routing. This architecture is intentional and is documented as a core design principle of Prometheus.
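The pull model is easiest to see from the scrape side. An exporter serves plain text in the Prometheus exposition format at an HTTP path (conventionally `/metrics`), and the server fetches and parses that text on every scrape interval. The parser below is a simplified illustration only. It assumes well-formed input, ignores optional timestamps, and does not unescape label values, so it is not a substitute for the parsers in the official client libraries. The sample metrics are made up.

```python
import re

# "name{labels} value [timestamp]" or "name value [timestamp]"; timestamp is ignored.
_SAMPLE = re.compile(r'^([a-zA-Z_:][a-zA-Z0-9_:]*)(?:\{(.*)\})?\s+(\S+)(?:\s+\S+)?$')
# label="value" pairs inside the braces (escaped characters are kept as-is).
_LABEL = re.compile(r'([a-zA-Z_][a-zA-Z0-9_]*)="((?:[^"\\]|\\.)*)"')


def parse_exposition(text: str) -> list[tuple[str, dict, float]]:
    """Parse exposition-format text into (metric_name, labels, value) tuples."""
    samples = []
    for line in text.splitlines():
        line = line.strip()
        # Skip blank lines and # HELP / # TYPE metadata comments.
        if not line or line.startswith("#"):
            continue
        match = _SAMPLE.match(line)
        if match is None:
            raise ValueError(f"unparseable line: {line!r}")
        name, raw_labels, value = match.groups()
        labels = dict(_LABEL.findall(raw_labels or ""))
        samples.append((name, labels, float(value)))
    return samples


SAMPLE = """\
# HELP http_requests_total Total HTTP requests handled.
# TYPE http_requests_total counter
http_requests_total{method="get",code="200"} 1027
# TYPE process_open_fds gauge
process_open_fds 12
"""

scraped = parse_exposition(SAMPLE)
```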

Grafana supports multiple agents and telemetry sources through its data source model. Grafana can query metrics, logs, and traces coming from OpenTelemetry collectors, Prometheus exporters, Loki agents, and Tempo pipelines at the same time. Grafana itself does not run agents, but it is designed to sit on top of environments where multiple collectors and backends coexist, which is explicitly described in its data source and architecture documentation.

Verdict: Prometheus centralises metrics collection through exporters and scrape targets, while Grafana assumes telemetry may already be coming from multiple independent agents and systems and focuses on querying and correlating that data.

MELT Support

Prometheus supports metrics only. Its data model, storage engine, and query language (PromQL) are designed exclusively for numeric time-series data. Prometheus does not natively ingest, store, or query logs or traces, and this separation is explicitly stated in its project overview and architecture documentation. Logs and traces are intentionally considered out of scope for the Prometheus server itself.

Grafana supports full MELT visibility through integrations rather than a single unified backend. Grafana queries metrics from systems like Prometheus, logs from Loki, and traces from Tempo, and presents them in a shared interface. While Grafana does not store all MELT signals itself, its architecture is explicitly designed to correlate metrics, logs, and traces across these systems, as documented in its official observability and data source guides.
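In practice, much of this correlation rests on shared identifiers. If a log line carries a trace ID, a Loki data source in Grafana can be configured with a derived field. The derived field extracts the ID with a regular expression and links it to a tracing backend such as Tempo. The sketch below imitates that idea in plain Python. The log format, the `traceID=` pattern, and the in-memory trace store are hypothetical, and this is not how Grafana implements the feature.

```python
import re

# Hypothetical log format: the trace ID appears as "traceID=<hex>".
TRACE_ID_PATTERN = re.compile(r"traceID=(\w+)")


def link_logs_to_traces(log_lines, traces_by_id):
    """Pair each log line that mentions a known trace ID with that trace."""
    linked = []
    for line in log_lines:
        match = TRACE_ID_PATTERN.search(line)
        if match and match.group(1) in traces_by_id:
            linked.append((line, traces_by_id[match.group(1)]))
    return linked


logs = [
    'level=error msg="payment failed" traceID=4bf92f3577b34da6',
    'level=info msg="healthcheck ok"',
]
# Hypothetical trace store keyed by trace ID (a stand-in for a tracing backend lookup).
traces = {"4bf92f3577b34da6": {"root_span": "POST /checkout", "duration_ms": 1840}}

links = link_logs_to_traces(logs, traces)
```

Only the error log line is linked, because it is the only one carrying a trace ID. Without such a shared key, correlation falls back to matching time ranges and labels.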

Verdict: Prometheus focuses narrowly on metrics reliability and alerting, while Grafana acts as the layer that brings MELT signals together for exploration and investigation, relying on dedicated backends for each signal type.

Deployment

Prometheus is deployed as a self-hosted, self-managed service. The Prometheus project itself does not offer a hosted or managed SaaS option. Teams are responsible for installing the server, configuring scrape targets, managing storage, handling upgrades, and designing for availability and scale. This deployment model is a deliberate design choice and is documented as part of Prometheus’ core architecture.

Grafana supports multiple deployment options. Grafana can be run as a self-hosted, self-managed instance, or consumed as a managed SaaS through Grafana Cloud. Both options are officially supported and documented, allowing teams to choose between operational control and managed convenience depending on their needs.

Verdict: Prometheus assumes teams want full control and are willing to operate the system themselves. Grafana offers flexibility for teams that prefer either a self-hosted, self-managed deployment or a managed SaaS service, depending on scale, compliance, and operational maturity.

Pricing

Prometheus is fully open source and free under the Apache 2.0 license. There are no licensing or usage fees for the Prometheus software itself. Costs arise from the infrastructure it runs on, including compute, storage, networking, and the operational effort required to manage scaling, upgrades, and long-term retention. This pricing model is explicitly stated in the Prometheus project documentation and licensing terms.

Grafana offers a tiered pricing model. Grafana’s open-source edition is free to self-host. Grafana Cloud pricing starts at $19 per month, while Enterprise plans are listed at approximately $25,000 per year, based on Grafana’s official pricing page.

- Metrics: Free tier includes 10,000 active series per month with 14-day retention. Beyond that, the Pro plan (starting at $19/month) charges $6.50 per 1,000 active series with 13-month retention. The Enterprise plan is priced at $3 per 1,000 series (minimum spend of $25,000 per year) with custom retention.

- Logs: Free tier includes 50 GB ingested per month with 14-day retention. On the Pro plan, ingestion beyond 50 GB costs $0.50 per GB with 30-day retention.

- Traces: Free tier includes 50 GB ingested per month with 14-day retention, and Pro overages cost $0.50 per GB with 30-day retention.
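The list prices above can be combined into a rough monthly estimate. For illustration, the sketch makes three assumptions. First, the $19 Pro fee is charged on top of usage. Second, the free-tier allowances (10,000 series, 50 GB of logs, 50 GB of traces) still apply on Pro. Third, overages are prorated rather than billed in whole units. Check Grafana's current pricing page before relying on any of these assumptions.

```python
# Prices taken from the list above; how they combine is an assumption.
FREE_SERIES = 10_000
FREE_LOGS_GB = 50
FREE_TRACES_GB = 50
PRO_BASE_USD = 19.0
USD_PER_1K_SERIES = 6.50
USD_PER_GB_LOGS = 0.50
USD_PER_GB_TRACES = 0.50


def estimate_pro_monthly_cost(active_series: int, logs_gb: float, traces_gb: float) -> float:
    """Rough Grafana Cloud Pro bill: base fee plus prorated overages."""
    series_over = max(0, active_series - FREE_SERIES)
    logs_over = max(0.0, logs_gb - FREE_LOGS_GB)
    traces_over = max(0.0, traces_gb - FREE_TRACES_GB)
    return (PRO_BASE_USD
            + series_over / 1000 * USD_PER_1K_SERIES
            + logs_over * USD_PER_GB_LOGS
            + traces_over * USD_PER_GB_TRACES)


# 50,000 series, 100 GB of logs, 20 GB of traces:
# 19 + 40 x 6.50 + 50 x 0.50 + 0 = 304
monthly = estimate_pro_monthly_cost(50_000, logs_gb=100, traces_gb=20)
```

Under these assumptions, metrics cardinality dominates the bill well before log or trace volume does. This is one reason cost becomes harder to forecast as services multiply.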

Verdict: Prometheus has no direct software cost but requires teams to budget for infrastructure and operations. Grafana provides both free and paid options, with pricing tied to the hosting model, scale, and support level.

Sampling Strategy

Prometheus does not support native sampling. Prometheus is designed to scrape and store all configured metrics at full resolution on every scrape interval. Volume control is handled indirectly by adjusting scrape intervals, limiting which metrics are exposed by exporters, or aggregating data after ingestion using recording rules. This behavior is part of Prometheus’ core design and is documented in its data model and storage architecture.
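Recording rules reduce volume by precomputing an aggregation on a schedule and storing the result as a new, smaller series. The sketch below mimics what an instant-vector expression such as `sum by (job) (...)` does to cardinality: many per-instance series collapse into one series per `job`. The labels and values are made up. Real recording rules are defined in rule files and evaluated by Prometheus itself, so this models only the aggregation step.

```python
from collections import defaultdict


def sum_by(series, by):
    """Aggregate (labels, value) samples, keeping only the labels in `by`.

    Mirrors PromQL's `sum by (...)` on an instant vector: samples whose
    kept labels match are added together; all other labels are dropped.
    """
    totals = defaultdict(float)
    for labels, value in series:
        key = tuple((name, labels.get(name, "")) for name in by)
        totals[key] += value
    return dict(totals)


# Hypothetical per-instance samples (e.g. in-flight requests).
samples = [
    ({"job": "api", "instance": "10.0.0.1:8080"}, 10.0),
    ({"job": "api", "instance": "10.0.0.2:8080"}, 20.0),
    ({"job": "db", "instance": "10.0.1.1:9187"}, 5.0),
]

# Three input series become two output series, one per job.
aggregated = sum_by(samples, by=("job",))
```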

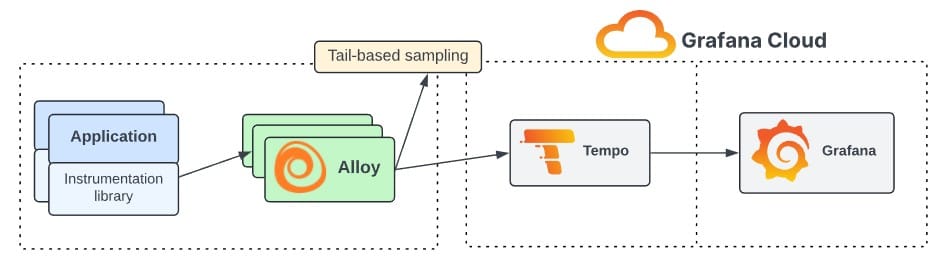

Grafana supports head-based and tail-based sampling, depending on the telemetry backend it connects to. Grafana itself does not implement sampling logic, but it integrates with systems like Tempo and OpenTelemetry pipelines, where sampling strategies are applied to traces before storage. Grafana’s role is to query and visualise the resulting sampled data, as described in its tracing and data source documentation.
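The difference between the two strategies is when the keep-or-drop decision is made. Head-based sampling decides at the start of a trace from the trace ID alone, similar in spirit to a trace-ID ratio sampler. Tail-based sampling waits until the trace's spans have been collected, so it can keep every trace that errored or was slow. The sketch below is a conceptual illustration of both, not OpenTelemetry or Tempo code. The span dictionaries and the 1,000 ms latency threshold are assumptions.

```python
def head_sample(trace_id_hex: str, ratio: float) -> bool:
    """Head-based: decide up front using only the trace ID.

    Treats the low 64 bits of the trace ID as a uniform random number, so
    every service that sees the same trace ID makes the same decision.
    """
    if not 0.0 <= ratio <= 1.0:
        raise ValueError("ratio must be between 0 and 1")
    return int(trace_id_hex[-16:], 16) < ratio * 2**64


def tail_sample(spans: list[dict], latency_threshold_ms: float = 1000.0) -> bool:
    """Tail-based: decide after the whole trace is available."""
    if not spans:
        return False
    has_error = any(span.get("error", False) for span in spans)
    too_slow = max(span["duration_ms"] for span in spans) >= latency_threshold_ms
    return has_error or too_slow


trace_id = "4bf92f3577b34da6a3ce929d0e0e4736"
kept_up_front = head_sample(trace_id, ratio=0.1)
kept_after = tail_sample([{"duration_ms": 40.0}, {"duration_ms": 12.0, "error": True}])
```

Head-based sampling is cheap because nothing is buffered, but it can drop the one failing request. Tail-based sampling keeps it at the cost of holding spans until the decision is made.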

Verdict: Prometheus prioritises completeness and simplicity for metrics collection, while Grafana assumes sampling decisions may already have been made upstream and focuses on exploring and correlating the data that is retained.

Data Retention

Prometheus stores metrics locally in its built-in TSDB and applies time- or size-based retention. By default, Prometheus retains data for 15 days if neither retention flag is set. Retention can be configured explicitly with the `--storage.tsdb.retention.time` flag (for example, `--storage.tsdb.retention.time=90d`), so teams can set 30 days, 60 days, 90 days, 1 year, or any other duration. A size cap can be set with `--storage.tsdb.retention.size`. Once the configured limit is reached, older data is deleted automatically. Long-term retention beyond this window typically requires external systems, which sit outside core Prometheus.
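Because local retention is bounded by disk, a back-of-the-envelope size estimate helps when choosing a retention value. The Prometheus storage documentation gives the rule of thumb `needed_disk_space = retention_time_seconds * ingested_samples_per_second * bytes_per_sample`, with roughly 1 to 2 bytes per sample after compression. The sketch applies it with assumed inputs: 100,000 active series scraped every 15 seconds, 90-day retention, and 2 bytes per sample. It does not account for the WAL or compaction headroom, so real usage will be somewhat higher.

```python
SECONDS_PER_DAY = 86_400


def estimate_tsdb_bytes(active_series: int, scrape_interval_s: float,
                        retention_days: float, bytes_per_sample: float = 2.0) -> float:
    """Rough Prometheus TSDB size: retention x ingestion rate x bytes/sample."""
    retention_s = retention_days * SECONDS_PER_DAY
    # Each active series produces one sample per scrape interval.
    return active_series * retention_s * bytes_per_sample / scrape_interval_s


size_bytes = estimate_tsdb_bytes(100_000, scrape_interval_s=15, retention_days=90)
print(f"{size_bytes / 1e9:.1f} GB")  # prints 103.7 GB (decimal gigabytes)
```

Doubling the scrape interval to 30 seconds halves the estimate, which is the same volume lever described in the sampling section.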

Grafana does not enforce a single retention policy itself; retention depends on the plan and the connected backend. Based on Grafana’s official documentation and pricing pages, Grafana Cloud Free retains data for 14 days. Paid plans extend retention to 30 days for logs and traces, while metrics retention is listed as 13 months. Retention policies are defined by plan tier and backend configuration rather than the Grafana UI alone.

Verdict: Prometheus retention is controlled locally and constrained by disk and performance, while Grafana’s retention is plan- and backend-dependent, with longer retention available through paid tiers.

Support Channel & TAT

Prometheus is supported entirely through community channels. The project does not offer commercial support, paid plans, or SLAs. Users rely on public mailing lists, GitHub issues, documentation, and community chat channels. There are no guaranteed response times, and support depends on community availability and maintainer capacity, as outlined in the project’s official documentation and governance pages.

Grafana provides tiered support options depending on the plan. Community support is available to all users. Pro plans include 8×5 email support, while Enterprise plans offer 24×7 support with custom SLAs, as listed on Grafana’s official pricing and support documentation. Response times and escalation paths vary by subscription level.

Verdict: Prometheus assumes teams are self-sufficient or supported internally, while Grafana offers structured support paths for organisations that require defined response times and enterprise-grade assistance.

How Teams Typically Decide Between Prometheus and Grafana

This decision is rarely about choosing one tool over the other in isolation. In practice, teams evaluate Prometheus and Grafana together, based on how responsibility, cost, and operational risk evolve as systems scale.

Who is involved (engineering, finance, security)

Engineering teams usually lead the evaluation. They look at how metrics are collected, how alerts behave under load, and how easily data can be queried during incidents. For them, Prometheus is about reliability and signal quality, while Grafana is about visibility and collaboration.

Finance teams get involved once observability moves beyond experimentation. They focus on infrastructure spend, storage growth, and whether costs scale predictably as telemetry volume increases. Even with open-source tooling, questions around ongoing operational cost and staffing effort become relevant.

Security and compliance teams weigh in when data sensitivity, access control, or deployment model matters. Self-hosted Prometheus appeals where data residency is strict, while Grafana’s deployment and access options are reviewed for auditability, user permissions, and external exposure.

What questions block decisions

Several recurring questions tend to slow decisions:

- Who owns operating and scaling the system long term?

- How much historical data do we actually need, and where will it live?

- What happens to performance and cost during incidents or traffic spikes?

- Can we correlate metrics with logs and traces quickly enough to reduce MTTR?

These questions often cannot be answered by feature lists alone. They depend on workload patterns, team maturity, and how much operational responsibility the organisation is willing to take on.

Why comparisons alone aren’t enough

Static comparisons describe what Prometheus and Grafana can do, but not how they behave under pressure. Real trade-offs appear during outages, high-cardinality growth, or rapid service expansion. At that point, teams discover whether their setup prioritises simplicity, flexibility, or long-term operability.

As a result, many teams move from asking “Which tool is better?” to “Which responsibilities do we want to own ourselves, and which do we want abstracted away?” That shift, more than individual features, is what ultimately shapes the choice among Prometheus, Grafana, and a broader observability approach.

Prometheus vs Grafana: Use Cases

These use cases reflect how teams apply Prometheus and Grafana in real environments, based on official documentation, common deployment patterns, and what typically shows up in demos, evaluations, and production usage.

Choose Prometheus if:

Prometheus is a strong fit when metrics are the primary signal and teams are comfortable operating the monitoring stack themselves.

- You are a startup or early-stage team that needs reliable metrics and alerting without licensing costs. Based on Prometheus’ official documentation, the software is fully open source and free, making it attractive when budgets are tight and infrastructure is still manageable.

- You want lightweight, metrics-first monitoring for Kubernetes, infrastructure, or backend services. Prometheus is commonly used for scraping system, container, and application metrics where logs and traces are handled separately.

- You have strict data residency or compliance requirements that require everything to run on your own infrastructure. Prometheus’ self-hosted, self-managed model keeps all metric data within your environment.

- You prefer full control over retention, scrape intervals, and alerting logic, even if that means handling storage sizing and scaling yourself. Based on real-world usage, this appeals to teams with strong platform or SRE ownership.

- You are focused on known failure modes and threshold-based alerting rather than exploratory analysis or cross-signal correlation.

Choose Grafana if:

Grafana is a better fit when teams need visibility across multiple signals and data sources, not just metrics.

- You need dashboards and shared views across engineering, product, and operations teams. Based on Grafana’s official site, its core strength is visualising and exploring data from many backends in one place.

- You are working toward full-stack observability, combining metrics, logs, and traces from systems like Prometheus, Loki, and Tempo. Grafana is often used as the unifying layer for MELT signals.

- You want a faster time to value with less operational overhead. Based on Grafana Cloud documentation and sales positioning, managed deployment reduces the burden of running and upgrading the visualisation layer yourself.

- You are troubleshooting distributed systems or microservices and need to reduce mean time to resolution (MTTR) by correlating metrics with logs and traces during incidents.

- You need flexibility to support multiple telemetry sources, including OpenTelemetry, Prometheus exporters, and tracing pipelines, as systems and teams grow.

In practice, many teams start with Prometheus for metrics and adopt Grafana as visibility needs expand. Over time, the decision becomes less about individual features and more about how much operational responsibility, cost variability, and observability depth the organisation is prepared to manage.

Why Some Teams Look Beyond Prometheus and Grafana

As environments grow, teams often run into practical limits with a Prometheus + Grafana setup.

Common friction points include:

- Multiple tools for metrics, logs, and traces, each with separate storage and lifecycle management

- Higher operational overhead to maintain exporters, backends, and integrations

- Cost becoming harder to forecast as data volume and retention increase

- Fragmented MELT signals that slow investigation during incidents

What begins as a flexible, low-cost stack can become harder to operate and reason about once telemetry volume and system complexity increase.

This is where some teams start evaluating unified observability platforms like CubeAPM. CubeAPM brings metrics, logs, traces, errors, and user experience data into a single platform. It is OpenTelemetry-native, runs in a self-hosted environment for data control, and is vendor-managed to reduce operational effort. Based on demo data and sales conversations, its ingestion-based pricing model is designed to remain predictable as telemetry scales.

CubeAPM is typically considered when:

- Teams outgrow metrics-only monitoring and need full MELT visibility

- Compliance or data residency rules mandate self-hosted observability

- Microservices architectures need consistent end-to-end tracing

- Reducing MTTR becomes a priority through faster signal correlation

Conclusion

Prometheus and Grafana serve different but complementary roles in modern observability stacks. Prometheus focuses on collecting and alerting on metrics, while Grafana provides the visibility and exploration layer that helps teams understand the data across systems.

For many teams, this combination works well in early and mid-stage environments. As systems grow, trade-offs around operational overhead, retention, and signal correlation become more visible and shape how the stack evolves.

Ultimately, the right choice depends on team maturity, workload complexity, and how much operational responsibility an organisation is prepared to own as observability needs scale.

Disclaimer: The information in this article reflects the latest details available at the time of publication and may change as technologies and products evolve.

FAQs

1. Is Grafana required to use Prometheus?

No. Prometheus can run on its own and includes a built-in interface for querying metrics. Grafana is commonly added for richer dashboards and visualisation, but it is not required for Prometheus to function.

2. Can Grafana work without Prometheus?

Yes. Grafana is data-source agnostic and can connect to many backends, including cloud monitoring services, SQL databases, log systems, and tracing platforms. Prometheus is just one of many supported data sources.

3. Which is better for alerting: Prometheus or Grafana?

Prometheus is generally better suited for alerting on metrics. It evaluates alerting rules natively and sends firing alerts to Alertmanager for routing and notifications. Grafana can also evaluate alert rules against its data-source queries, but it depends on those data sources for the underlying data rather than collecting metrics itself.
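Prometheus alerting rules can include a `for` duration so that a brief spike does not page anyone. When the condition becomes true, the alert first enters a pending state. It fires only once the condition has held for the full duration. The sketch below models that transition for a single alert. The evaluation timestamps and the 5-minute `for` value are assumptions, and the sketch leaves out Alertmanager's grouping, inhibition, and routing.

```python
def alert_state(evaluations: list[tuple[float, bool]], for_seconds: float) -> str:
    """Return 'inactive', 'pending', or 'firing' after the last evaluation.

    `evaluations` holds (unix_timestamp, condition_is_true) pairs in order.
    """
    active_since = None
    state = "inactive"
    for timestamp, condition_is_true in evaluations:
        if not condition_is_true:
            # Condition cleared: reset so the pending timer starts over next time.
            active_since, state = None, "inactive"
            continue
        if active_since is None:
            active_since = timestamp
        held_for = timestamp - active_since
        state = "firing" if held_for >= for_seconds else "pending"
    return state


# Condition true at every 60 s evaluation for 5 minutes, with `for: 5m`.
steady = [(t, True) for t in range(0, 301, 60)]
print(alert_state(steady, for_seconds=300))        # firing
print(alert_state(steady[:3], for_seconds=300))    # pending
print(alert_state([(0, True), (60, False)], 300))  # inactive
```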

4. Do Prometheus and Grafana store the same data?

No. Prometheus stores time-series metrics data locally. Grafana does not store metrics by default; it queries data from external systems and presents it through dashboards and visualisations.

5. Is Prometheus or Grafana easier to scale?

They scale differently. Prometheus scaling requires architectural decisions, such as federation or remote storage, and increases operational effort. Grafana scales more easily from a user and dashboard perspective, especially when using managed offerings, but still depends on the scalability of its connected data sources.