Monitoring Postgres slow queries is now essential for modern applications, where milliseconds define user experience. Over 40% of production slowdowns stem from inefficient queries.

Without real-time monitoring, these bottlenecks cascade across APIs and user-facing services, crippling performance. Yet most teams struggle when monitoring Postgres slow queries, as logs are noisy, dashboards lack query-level context, and tracing often stops at the application layer.

CubeAPM is the best solution for Postgres slow query monitoring. It combines metrics, logs, and error tracing into one OpenTelemetry-native platform, linking slow SQL to specific services, endpoints, and latency spikes. With smart sampling, real-time dashboards, and unified MELT data, CubeAPM delivers deep visibility at 60% lower cost than legacy APMs.

In this article, we’ll cover what defines a Postgres slow query, why monitoring slow queries matters, which metrics to track, and how to set up slow query monitoring with CubeAPM.

What Constitutes a “Slow Query”?

In PostgreSQL, a slow query is any SQL statement that takes longer than an acceptable threshold, typically 500 milliseconds to 1 second, depending on the workload. Even small delays at the query level can cascade into significant response-time spikes for user-facing applications. In high-concurrency environments, thousands of slightly delayed queries can overwhelm CPU and memory, degrading system performance and user experience.

PostgreSQL offers several built-in mechanisms to detect and analyze such slow queries:

- pg_stat_statements extension: aggregates execution statistics for every normalized statement, helping you identify the most time-consuming queries by mean or total execution time.

- EXPLAIN ANALYZE: goes deeper on a single query by executing it and reporting the chosen plan with actual timings and row counts, showing whether the planner used indexes or performed full table scans.

- auto_explain: automatically logs execution plans for slow-running queries in production environments, invaluable for long-term trend analysis.

Common root causes of slow queries include missing indexes, sequential scans on large datasets, inefficient joins, outdated statistics, bloat from frequent updates/deletes, and I/O contention due to disk latency. These issues often compound under high transaction volume, making continuous monitoring essential.

Example: A Slow Query in Action

Imagine a SaaS analytics platform that stores millions of transactions in three related tables: orders, customers, and payments. A developer writes a query to fetch recent customer purchase summaries:

```sql
SELECT c.name, SUM(p.amount)
FROM customers c
JOIN orders o ON c.id = o.customer_id
JOIN payments p ON o.id = p.order_id
WHERE o.created_at > NOW() - INTERVAL '30 days'
GROUP BY c.id, c.name;  -- group by the key too, so customers who share a name are not merged
```

Because there’s no index on orders.created_at or on the join columns orders.customer_id and payments.order_id (PostgreSQL does not index foreign-key columns automatically), PostgreSQL falls back to sequential scans across all three tables, evaluating millions of rows. On a production database with 10 million orders, this query could easily exceed 2–3 seconds of execution time, especially during peak usage. The result? Slower API responses, delayed dashboards, and potential timeouts in client-facing services.

By enabling pg_stat_statements and monitoring with CubeAPM’s Postgres integration, teams can catch such high-latency queries in real time, visualize their impact, and use EXPLAIN ANALYZE insights to optimize them before they disrupt performance.
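To check whether a query like this one really falls back to sequential scans, you can run it under EXPLAIN (ANALYZE, FORMAT JSON) and walk the resulting plan tree. The sketch below assumes you already have that JSON output as a string (EXPLAIN ANALYZE actually executes the statement, so run it with care in production). The keys it reads ("Plan", "Plans", "Node Type", "Relation Name", "Actual Total Time") follow PostgreSQL's JSON explain format; the sample plan itself is invented.

```python
import json
from typing import Any, Iterator


def walk_plan(node: dict[str, Any]) -> Iterator[dict[str, Any]]:
    """Yield a plan node and all of its descendants, depth first."""
    yield node
    for child in node.get("Plans", []):
        yield from walk_plan(child)


def seq_scans(explain_json: str) -> list[tuple[str, float]]:
    """Return (table, Actual Total Time in ms) for every Seq Scan node in the plan."""
    # EXPLAIN (ANALYZE, FORMAT JSON) returns a one-element list; "Plan" holds the root node.
    root = json.loads(explain_json)[0]["Plan"]
    return [
        # "Actual Total Time" is reported per loop, in milliseconds.
        (node.get("Relation Name", "?"), node.get("Actual Total Time", 0.0))
        for node in walk_plan(root)
        if node["Node Type"] == "Seq Scan"
    ]


# Invented plan fragment, trimmed to the keys used above.
sample = json.dumps([{"Plan": {
    "Node Type": "HashAggregate", "Actual Total Time": 2810.4,
    "Plans": [{
        "Node Type": "Hash Join", "Actual Total Time": 2650.0,
        "Plans": [
            {"Node Type": "Seq Scan", "Relation Name": "payments", "Actual Total Time": 1200.5},
            {"Node Type": "Hash", "Actual Total Time": 900.0, "Plans": [
                {"Node Type": "Seq Scan", "Relation Name": "orders", "Actual Total Time": 880.2},
            ]},
        ],
    }],
}}])

print(seq_scans(sample))  # [('payments', 1200.5), ('orders', 880.2)]
```

Seq Scan nodes on large tables such as orders and payments are the natural candidates for new indexes.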

Why Monitor Postgres Slow Queries?

Protect Application Performance and User Experience

Slow SQL queries are among the top causes of performance degradation in PostgreSQL-based systems. Inefficient queries and missing indexes are consistently identified as the primary culprits behind database slowdowns. Even a 500 ms query can trigger visible lag in API responses or dashboards when it runs hundreds of times per second. Monitoring Postgres slow queries ensures you detect these delays before they ripple through user sessions and revenue streams.

Catch Query Bottlenecks Before They Cascade

In distributed architectures, one slow SQL statement can block dozens of upstream microservices. PostgreSQL exposes wait events and lock statistics that reveal when transactions are stalled behind a long-running query. Monitoring slow queries in real time helps you spot these long locks early and prevent them from affecting dependent services.
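PostgreSQL's pg_blocking_pids(pid) function returns the process IDs that block a given backend. As a minimal sketch, assuming you have fetched the rows of SELECT pid, pg_blocking_pids(pid) FROM pg_stat_activity into a Python dict, the function below finds the root blockers (sessions that block others but are not waiting themselves) and every session stuck behind each one. The PIDs in the sample are made up.

```python
def root_blockers(blocked_by: dict[int, list[int]]) -> dict[int, set[int]]:
    """Map each root blocker PID to every PID waiting on it, directly or transitively.

    blocked_by maps a backend PID to the PIDs blocking it, i.e. the array returned
    by pg_blocking_pids(pid); an empty list means the backend is not waiting.
    """
    # Invert the edges: blocker PID -> PIDs it blocks directly.
    blocks: dict[int, set[int]] = {}
    for pid, blockers in blocked_by.items():
        for blocker in blockers:
            blocks.setdefault(blocker, set()).add(pid)

    result: dict[int, set[int]] = {}
    for blocker, direct in blocks.items():
        if blocked_by.get(blocker):
            continue  # this blocker is itself waiting, so it is not the root of the chain
        # Iterative depth-first walk to collect every session stuck behind this root.
        victims: set[int] = set()
        stack = list(direct)
        while stack:
            pid = stack.pop()
            if pid not in victims:
                victims.add(pid)
                stack.extend(blocks.get(pid, ()))
        result[blocker] = victims
    return result


# Hypothetical snapshot: 101 holds a lock, 102 waits on 101, and 103/104 wait on 102.
snapshot = {101: [], 102: [101], 103: [102], 104: [102], 105: []}
print({pid: sorted(v) for pid, v in root_blockers(snapshot).items()})  # {101: [102, 103, 104]}
```

Sessions caught in a true deadlock cycle all wait on each other, so none of them is reported as a root; PostgreSQL's own deadlock detector resolves those.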

Reduce I/O Pressure and Cloud Costs

Most slow queries aren’t purely logical errors; they’re resource inefficiencies. Sequential scans and unoptimized joins cause unnecessary reads, inflating I/O costs and reducing cache efficiency. Optimizing query access patterns can cut read query times by up to 50% and reduce IOPS utilization dramatically. Continuous slow query monitoring helps teams identify such heavy statements before they bloat compute costs or throttle disk throughput.

Shorten Mean Time to Resolution (MTTR)

When production slows down, pinpointing which query caused it can take hours without proper instrumentation. PostgreSQL’s built-ins—pg_stat_statements, EXPLAIN ANALYZE, and auto_explain—allow you to log and rank queries by total or mean execution time. Combining these with query-level metrics in an observability platform drastically reduces MTTR. Teams can jump from “API is slow” to “this JOIN on orders table ran 900 ms too long” in seconds, enabling faster recovery and sustained uptime.
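As a rough illustration of ranking by total execution time, here is a sketch over rows already fetched from pg_stat_statements, assuming PostgreSQL 13+ column names with times in milliseconds; the rows and numbers are invented. Ranking by total rather than mean surfaces cheap statements that run so often they dominate database time.

```python
def top_by_total_time(rows: list[dict], n: int = 3) -> list[tuple[str, float, float]]:
    """Return (query, total_exec_time in ms, share of all execution time) for the top n rows."""
    grand_total = sum(r["total_exec_time"] for r in rows)
    ranked = sorted(rows, key=lambda r: r["total_exec_time"], reverse=True)[:n]
    return [
        (r["query"], r["total_exec_time"], r["total_exec_time"] / grand_total if grand_total else 0.0)
        for r in ranked
    ]


# Invented sample: a fast but very frequent lookup outweighs a rare slow report.
rows = [
    {"query": "SELECT * FROM orders WHERE id = $1", "calls": 900_000, "total_exec_time": 450_000.0},
    {"query": "SELECT c.name, SUM(p.amount) FROM customers c JOIN orders o ON c.id = o.customer_id",
     "calls": 120, "total_exec_time": 336_000.0},
    {"query": "UPDATE payments SET status = $1 WHERE id = $2", "calls": 50_000, "total_exec_time": 14_000.0},
]
for query, total_ms, share in top_by_total_time(rows, n=2):
    print(f"{share:6.1%}  {total_ms / 1000:8.1f} s  {query}")
```

In this invented data the single-row lookup averages only 0.5 ms per call yet accounts for more than half of total execution time, while the report query averages 2.8 s per call.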

Maintain Index and Vacuum Health

Slow queries often signal deeper maintenance issues—table bloat, outdated statistics, or inefficient indexes. Over time, this affects the planner’s ability to choose optimal paths. Percona’s 2025 tuning guide highlights that routine monitoring of slow queries often exposes tables needing reindexing or vacuum tuning. Tracking these trends keeps the database lean, query plans selective, and cache hit ratios above 99%.

Connect Database Metrics to Business KPIs

Monitoring Postgres slow queries is not just a DBA task—it’s a business reliability measure. Slow queries delay order placements, report generation, or payment processing. Mapping query latency to service-level objectives (SLOs) and business KPIs gives engineering and product teams shared visibility. When a checkout API’s P95 latency spikes, tying it to a specific SQL query empowers teams to fix the root cause before users notice.
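A minimal sketch of such an SLO check, using the nearest-rank percentile method over one window of per-request latencies. The 300 ms target and the sample latencies are illustrative assumptions, not values from this article.

```python
import math


def percentile_nearest_rank(samples: list[float], p: float) -> float:
    """Nearest-rank percentile: the smallest sample at or above p percent of the data."""
    if not samples:
        raise ValueError("no samples")
    ordered = sorted(samples)
    rank = max(1, math.ceil(p * len(ordered) / 100))  # 1-based rank
    return ordered[rank - 1]


def breaches_slo(latencies_ms: list[float], slo_ms: float, p: float = 95) -> bool:
    """True when the p-th percentile latency exceeds the SLO target."""
    return percentile_nearest_rank(latencies_ms, p) > slo_ms


# Hypothetical checkout latencies for one window: mostly fast, with a slow-query tail.
checkout_ms = [120.0] * 90 + [1800.0] * 10
print(sum(checkout_ms) / len(checkout_ms))       # 288.0
print(percentile_nearest_rank(checkout_ms, 95))  # 1800.0
print(breaches_slo(checkout_ms, slo_ms=300))     # True
```

The mean of this window (288 ms) sits under the 300 ms target while P95 is 1,800 ms, which is why SLOs on tail percentiles catch slow-query regressions that averages hide.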

Key Metrics to Track for Postgres Slow Queries

Monitoring slow queries in PostgreSQL requires tracking both query-level metrics and system-level signals that affect performance. These metrics help identify inefficient SQL statements, suboptimal plans, or underlying I/O and resource bottlenecks. CubeAPM unifies these indicators from PostgreSQL exporters and OpenTelemetry pipelines, giving teams complete visibility across metrics, traces, and logs.

Query Performance Metrics

These metrics reveal which queries are consuming the most time or resources. They are critical for identifying slow statements, inefficient joins, or high-latency patterns.

- Average Query Duration: Measures the mean execution time per SQL statement. A high value indicates inefficient query design or a lack of proper indexing. Threshold: Keep under 200–500 ms for OLTP workloads; beyond 1s signals a slow query.

- Maximum Query Duration: Captures the longest observed runtime of any query during a specific window. Helps identify occasional outliers that may not affect averages but degrade user experience. Threshold: Investigate any queries exceeding 1s in production environments.

- Calls per Query: Tracks how often each query executes within a given interval. High-frequency queries with moderate latency can cumulatively stress the system. Threshold: Watch for queries executed >1,000 times/minute with noticeable latency.

- Rows Processed per Query: Indicates how many rows are scanned or returned during execution. High counts often imply sequential scans or missing WHERE filters. Threshold: Queries scanning >100k rows should be reviewed for indexing opportunities.

- Query Plans with Sequential Scans: Shows how many queries rely on full table scans instead of index scans. Sequential scans on large tables severely impact performance. Threshold: Sequential scans should account for <5% of total query executions.
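The query-level thresholds above can be checked directly against pg_stat_statements rows. This is a sketch under stated assumptions: rows use PostgreSQL 13+ column names (calls, mean_exec_time, max_exec_time, rows, with times in milliseconds), and calls_per_min is computed separately from two snapshots. Note that pg_stat_statements.rows counts rows returned or affected, not rows scanned, so it only approximates the "rows processed" signal, and sequential scan counts come from pg_stat_user_tables or EXPLAIN rather than this view. The 50 ms "noticeable latency" cut-off is a hypothetical choice.

```python
from dataclasses import dataclass


@dataclass
class StatementStats:
    query: str
    calls: int
    mean_exec_time: float  # ms, cumulative since the last stats reset
    max_exec_time: float   # ms, cumulative since the last stats reset
    rows: int              # rows returned or affected, summed over all calls


NOTICEABLE_MS = 50  # assumption: what counts as "noticeable latency" for frequent queries


def flag_statement(s: StatementStats, calls_per_min: float) -> list[str]:
    """Return the query-level thresholds from the list above that this statement violates."""
    findings = []
    if s.mean_exec_time > 500:
        findings.append("mean > 500 ms")
    if s.max_exec_time > 1000:
        findings.append("max > 1 s")
    if calls_per_min > 1000 and s.mean_exec_time > NOTICEABLE_MS:
        findings.append("high-frequency with noticeable latency")
    if s.calls and s.rows / s.calls > 100_000:
        findings.append("> 100k rows per call")
    return findings


report = StatementStats("SELECT c.name, SUM(p.amount) FROM customers c JOIN orders o ON c.id = o.customer_id",
                        calls=120, mean_exec_time=2800.0, max_exec_time=4100.0, rows=120 * 250_000)
print(flag_statement(report, calls_per_min=0.1))
```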

Resource Utilization Metrics

These metrics reflect how slow queries consume database resources like CPU, memory, and disk I/O. Tracking them ensures that problematic statements are identified before they impact system stability.

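- CPU Time per Query: Measures the CPU consumed by query execution. High CPU usage typically indicates expensive joins, sorts, or functions within queries. Core PostgreSQL does not report per-statement CPU time; the pg_stat_kcache extension or host-level profiling can fill that gap. Threshold: Keep overall database CPU utilization below 70% under sustained load, and investigate statements that account for a large share of CPU time.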

- I/O Wait Time: Reflects delays in disk access during query processing. High values often result from unindexed joins or overloaded storage systems. Threshold: Keep average I/O wait below 10–15 ms per operation for SSD-backed systems.

- Temporary Disk Writes: Tracks how often queries write intermediate results to temporary files due to insufficient work_mem. Threshold: Aim for zero temp writes in typical workloads; recurring writes indicate low memory settings.

- Shared Buffer Hit Ratio: Measures how effectively queries use PostgreSQL’s caching layer. Lower ratios signal frequent disk reads or poor caching of hot data. Threshold: Maintain a cache hit ratio above 99% for optimal performance.
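Both the cache hit ratio and temp-file activity can be derived from pg_stat_database counters (blks_hit, blks_read, temp_files, temp_bytes). Because these counters are cumulative, the sketch below compares two snapshots; fetching them from the database is left out, and the snapshot values are invented. blks_read counts blocks not found in shared_buffers, which may still come from the OS page cache, so the ratio is a lower bound on real cache effectiveness.

```python
from typing import NamedTuple


class DbCounters(NamedTuple):
    blks_hit: int    # block requests served from shared_buffers
    blks_read: int   # blocks read from outside shared_buffers (OS cache or disk)
    temp_files: int  # temporary files created by queries
    temp_bytes: int  # bytes written to temporary files


def interval_health(before: DbCounters, after: DbCounters) -> dict[str, float]:
    """Cache hit ratio and temp-file activity between two pg_stat_database snapshots."""
    hit = after.blks_hit - before.blks_hit
    read = after.blks_read - before.blks_read
    total = hit + read
    return {
        "cache_hit_ratio": hit / total if total else 1.0,
        "temp_files": after.temp_files - before.temp_files,
        "temp_mb": (after.temp_bytes - before.temp_bytes) / 1024**2,
    }


t0 = DbCounters(blks_hit=9_000_000, blks_read=50_000, temp_files=10, temp_bytes=0)
t1 = DbCounters(blks_hit=9_980_000, blks_read=70_000, temp_files=14, temp_bytes=256 * 1024**2)
stats = interval_health(t0, t1)
print(stats)
```

Here the interval hit ratio is 0.98, below the 99% target, and four temp files totaling 256 MB were written; both are hints to revisit shared_buffers, work_mem, or the queries involved.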

Locking and Contention Metrics

Lock contention can escalate into cascading query delays. These metrics help detect blocking queries and transaction bottlenecks that contribute to overall slowness.

- Lock Wait Time: Measures the average time queries spend waiting for locks on tables or rows. Excessive lock waits often stem from uncommitted long-running transactions. Threshold: Investigate any wait time exceeding 100–200 ms per transaction.

- Blocked Queries Count: Counts the number of queries currently waiting on locks. Continuous spikes suggest concurrency issues or missing transaction isolation handling. Threshold: Aim for 0 blocked queries during steady-state operation.

- Deadlocks Detected: Tracks the number of deadlock events, in which PostgreSQL aborts one of the waiting transactions to break the cycle. Threshold: Deadlocks should be rare (ideally zero); recurring events warrant schema or transaction redesign.
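pg_stat_database.deadlocks is a cumulative counter, so "deadlocks detected" in practice means the increase between samples. A minimal sketch, assuming periodic samples of that column; it treats any decrease as a statistics reset (for example after pg_stat_reset()), much like Prometheus handles counter resets. The sample values are invented.

```python
def counter_increase(samples: list[int]) -> int:
    """Total increase of a cumulative counter, treating any drop as a reset to zero."""
    total = 0
    for prev, curr in zip(samples, samples[1:]):
        # After a reset the counter restarts at zero, so the new value is all increase.
        total += curr - prev if curr >= prev else curr
    return total


# Hypothetical deadlocks column sampled once a minute; stats were reset before the 5th sample.
deadlocks = [40, 40, 41, 43, 1, 1]
print(counter_increase(deadlocks))  # 4
```

Under the "ideally zero" threshold above, any non-zero increase in a window is worth an alert.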

System Health and Storage Metrics

Slow queries can emerge from system-level inefficiencies. These metrics provide insight into storage throughput, bloat, and memory health—all factors that influence query latency.

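- Table Bloat Ratio: Indicates how much wasted space exists in frequently updated tables. High bloat degrades performance by increasing scan times. Threshold: Keep bloat under 20%; higher levels call for remediation such as VACUUM FULL, pg_repack, or REINDEX. VACUUM FULL takes an exclusive lock on the table, so schedule it during a maintenance window.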

- Index Usage Ratio: Shows the proportion of queries leveraging indexes versus full scans. Low ratios signal missing or unused indexes. Threshold: Maintain >90% index usage for healthy workloads.

- Disk Read Throughput: Monitors the rate at which data is read from disk during query execution. Low throughput with high latency often points to storage bottlenecks. Threshold: Consistently low throughput (<100 MB/s on SSD) should prompt I/O layer analysis.

- Memory Usage per Connection: Tracks how much memory each backend process consumes. Queries consuming excessive memory can trigger swap usage and latency spikes. Threshold: Keep per-connection memory under 50–100 MB, depending on workload size.
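Two of these ratios can be approximated from pg_stat_user_tables. The sketch below assumes rows fetched with the columns relname, seq_scan, idx_scan, n_live_tup, and n_dead_tup. The dead-tuple share is only a proxy for bloat (a precise estimate needs a tool such as the pgstattuple extension), and idx_scan is NULL for tables without indexes, treated here as zero. The 20% and 90% cut-offs come from the thresholds above; the sample rows are invented.

```python
def table_findings(row: dict) -> list[str]:
    """Flag tables whose dead-tuple share or index-scan share crosses the listed thresholds."""
    findings = []
    live, dead = row["n_live_tup"], row["n_dead_tup"]
    if live + dead and dead / (live + dead) > 0.20:
        findings.append("dead tuples > 20% (check autovacuum / bloat)")
    idx = row["idx_scan"] or 0  # NULL (None) when the table has no indexes
    scans = row["seq_scan"] + idx
    if scans and idx / scans < 0.90:
        findings.append("index scans < 90% of scans")
    return findings


tables = [
    {"relname": "orders", "seq_scan": 4_200, "idx_scan": 15_800,
     "n_live_tup": 10_000_000, "n_dead_tup": 3_100_000},
    {"relname": "customers", "seq_scan": 10, "idx_scan": 990_000,
     "n_live_tup": 500_000, "n_dead_tup": 2_000},
]
for t in tables:
    print(t["relname"], table_findings(t))
```

Small tables are often scanned sequentially by design, so filter by table size before acting on the index ratio.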

How to Enable and Collect Slow Query Data in PostgreSQL with CubeAPM

Monitoring slow queries in PostgreSQL through CubeAPM involves combining Postgres native telemetry (like pg_stat_statements and slow query logs) with OpenTelemetry instrumentation so that CubeAPM can ingest, visualize, and alert on query latency in real time.

Step 1: Enable PostgreSQL Slow Query Logging

To begin, configure PostgreSQL to log any query that exceeds a specific duration threshold.

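1. Edit your postgresql.conf file:

```ini
log_min_duration_statement = 500          # log statements that run for 500 ms or longer
log_statement = 'none'                    # don't also log statements by type, regardless of duration
logging_collector = on                    # takes effect only after a server restart
log_directory = 'pg_log'                  # relative to the data directory
log_filename = 'postgresql-%Y-%m-%d.log'
```

This ensures all queries running 500 ms or longer are captured in the logs.

2. Reload the configuration so that log_min_duration_statement takes effect (a change to logging_collector still requires a restart):

```sql
SELECT pg_reload_conf();
```

For advanced visibility, enable the auto_explain module to log execution plans automatically. auto_explain is a loadable module rather than an extension, so CREATE EXTENSION does not apply; preload it in postgresql.conf and restart the server:

```ini
shared_preload_libraries = 'auto_explain'  # list other modules too, e.g. 'pg_stat_statements,auto_explain'
auto_explain.log_min_duration = 500        # log plans for statements running 500 ms or longer
```

```sql
-- Session-only alternative for testing (requires superuser)
LOAD 'auto_explain';
SET auto_explain.log_min_duration = 500;
```

These logs become the foundation for slow query analysis.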
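With log_min_duration_statement set, each slow statement produces a log line containing "duration: <ms> ms" followed by the statement text. A minimal parsing sketch: it matches both the "statement:" form and the "execute <name>:" form used for extended-protocol queries, and it ignores whatever comes before "duration:", so it does not depend on your log_line_prefix. The sample lines are invented, and multi-line statements that continue on following log lines are not handled.

```python
import re
from typing import NamedTuple, Optional


class SlowStatement(NamedTuple):
    duration_ms: float
    sql: str


# Matches "duration: 1503.221 ms  statement: ..." and "duration: ... ms  execute <name>: ...".
SLOW_LINE = re.compile(
    r"duration: (?P<ms>\d+(?:\.\d+)?) ms\s+(?:statement|execute [^:]+): (?P<sql>.*)$"
)


def parse_slow_line(line: str) -> Optional[SlowStatement]:
    """Return the duration and SQL text of a slow-statement log line, or None."""
    m = SLOW_LINE.search(line)
    return SlowStatement(float(m["ms"]), m["sql"]) if m else None


log_lines = [
    "2025-01-15 10:02:11.482 UTC [4711] LOG:  duration: 2810.412 ms  statement: "
    "SELECT c.name, SUM(p.amount) FROM customers c JOIN orders o ON c.id = o.customer_id "
    "JOIN payments p ON o.id = p.order_id WHERE o.created_at > NOW() - INTERVAL '30 days' GROUP BY c.name;",
    "2025-01-15 10:02:12.001 UTC [4712] LOG:  duration: 612.030 ms  execute <unnamed>: "
    "SELECT * FROM orders WHERE customer_id = $1",
    "2025-01-15 10:02:12.350 UTC [4713] LOG:  checkpoint starting: time",
]
parsed = [p for p in map(parse_slow_line, log_lines) if p]
for p in sorted(parsed, reverse=True):  # slowest first
    print(f"{p.duration_ms:9.1f} ms  {p.sql[:60]}")
```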

Step 2: Enable the pg_stat_statements Extension

pg_stat_statements is PostgreSQL’s most powerful module for analyzing query performance.

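1. Add the module to shared_preload_libraries in postgresql.conf and restart the server; without this, CREATE EXTENSION succeeds but querying the view fails:

```ini
shared_preload_libraries = 'pg_stat_statements'  # e.g. 'pg_stat_statements,auto_explain' if you also use auto_explain
```

2. Activate the extension in the database you want to inspect:

```sql
CREATE EXTENSION IF NOT EXISTS pg_stat_statements;
```

3. Verify its availability (installed_version is non-null once the extension is created):

```sql
SELECT * FROM pg_available_extensions WHERE name = 'pg_stat_statements';
```

4. Query top slow statements (mean_exec_time and total_exec_time are the PostgreSQL 13+ column names; earlier versions use mean_time and total_time):

```sql
SELECT query, calls, mean_exec_time, total_exec_time
FROM pg_stat_statements
ORDER BY mean_exec_time DESC
LIMIT 10;
```

These statistics feed directly into CubeAPM dashboards once instrumentation is active.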
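pg_stat_statements counters are cumulative since the last reset, so mean_exec_time reflects a statement's whole history. To see the latency of a recent window, take two snapshots and divide the change in total_exec_time by the change in calls. A sketch assuming snapshots keyed by queryid; the numbers are invented. A fuller implementation would also key by userid and dbid, since the same queryid can appear once per user and database.

```python
def window_means(before: dict[int, tuple[int, float]],
                 after: dict[int, tuple[int, float]]) -> dict[int, float]:
    """Mean execution time (ms) per queryid between two snapshots.

    Each snapshot maps queryid -> (calls, total_exec_time in ms).
    Statements with no new calls in the window are skipped.
    """
    means = {}
    for qid, (calls_after, total_after) in after.items():
        calls_before, total_before = before.get(qid, (0, 0.0))
        if calls_after < calls_before:  # stats were reset; the new values cover the whole window
            calls_before, total_before = 0, 0.0
        d_calls = calls_after - calls_before
        if d_calls > 0:
            means[qid] = (total_after - total_before) / d_calls
    return means


snap_0 = {1001: (10_000, 2_000_000.0), 2002: (500, 50_000.0)}
snap_1 = {1001: (10_100, 2_120_000.0), 2002: (500, 50_000.0), 3003: (4, 8_000.0)}
print(window_means(snap_0, snap_1))  # {1001: 1200.0, 3003: 2000.0}
```

Query 1001 has a lifetime mean of 200 ms, yet it averaged 1.2 s over the latest window, a regression that the cumulative mean_exec_time column alone would hide.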

Step 3: Configure OpenTelemetry for PostgreSQL Metrics and Traces

Next, use the OpenTelemetry Collector to export query metrics and spans to CubeAPM.

1. Follow the OpenTelemetry instrumentation guide to configure the Collector service.

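2. In the Collector’s configuration YAML, add a Prometheus receiver that scrapes a PostgreSQL exporter (which reads pg_stat_statements) and an OTLP exporter that forwards the data to CubeAPM. If you use the prometheus-community postgres_exporter, pg_stat_statements metrics are only exported when its stat_statements collector is enabled. The otlp receiver and traces pipeline route application spans through the same Collector:

```yaml
receivers:
  prometheus:
    config:
      scrape_configs:
        - job_name: 'postgresql'
          static_configs:
            - targets: ['localhost:9187']  # default postgres_exporter port
  otlp:  # accepts spans from your instrumented application
    protocols:
      grpc:
      http:

exporters:
  otlp:
    endpoint: "https://<your-cubeapm-endpoint>:4317"
    tls:
      insecure: false

service:
  pipelines:
    metrics:
      receivers: [prometheus]
      exporters: [otlp]
    traces:
      receivers: [otlp]
      exporters: [otlp]
```

3. Ensure your application also emits database client spans using the OpenTelemetry SDK or agent, with attributes such as db.system: postgresql and db.statement. Query latency is captured by the span’s own start and end timestamps rather than a separate attribute, and newer versions of the OpenTelemetry semantic conventions rename some attributes (for example, db.statement became db.query.text).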

This configuration allows CubeAPM to receive both query metrics and traces for detailed correlation.

Step 4: Connect OpenTelemetry Collector to CubeAPM

CubeAPM provides native support for OpenTelemetry ingestion via OTLP.

- Point your Collector to the CubeAPM endpoint shown in your dashboard after setup.

- Verify endpoint details in the CubeAPM Configuration Guide.

- If you’re self-hosting, ensure the OTLP port (default: 4317 for gRPC or 4318 for HTTP) is open.

- Once connected, CubeAPM will start receiving Postgres query spans and metrics in real time.

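You can confirm ingestion by navigating to the Metrics section in CubeAPM and searching for pg_stat_statements-based metrics. Exact names depend on your exporter; the prometheus-community postgres_exporter, for example, publishes pg_stat_statements_calls_total and pg_stat_statements_seconds_total.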

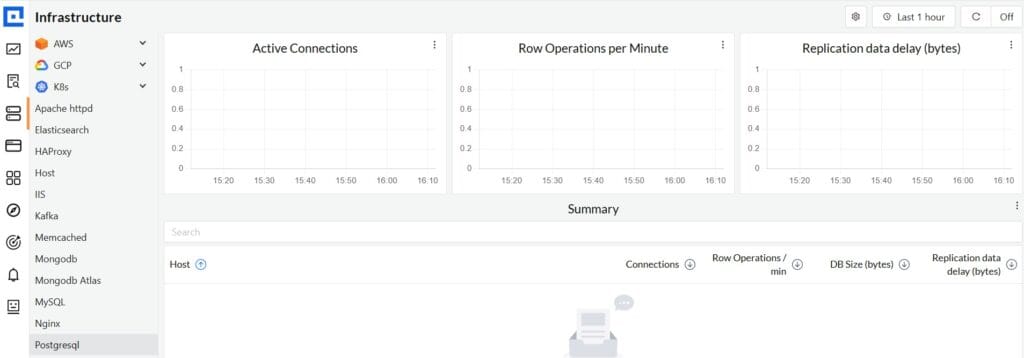

Step 5: Visualize and Correlate Slow Queries in CubeAPM

After data ingestion, CubeAPM automatically visualizes slow queries in your database performance dashboards.

- Navigate to Metrics → PostgreSQL to view latency distributions, query frequency, and top slow statements.

- Open Traces to correlate high-latency SQL queries with the corresponding API or service span.

- Use the Logs section to inspect slow-query log entries with full query text and timestamps (CubeAPM Logs Docs).

This correlation helps teams trace a slow /orders API call down to the exact SQL statement causing the delay.

Step 6: Set Up Alerts for Slow Query Thresholds

Once visibility is established, configure alerting rules to notify you when query latency crosses defined thresholds.

Follow the CubeAPM Alerting Setup Guide to connect notification channels such as email, Slack, PagerDuty, or custom webhooks for instant visibility.

Correlating Slow Queries with Traces and Logs in CubeAPM

In PostgreSQL, a slow query is rarely an isolated problem — it’s often tied to application latency, API bottlenecks, or I/O pressure. CubeAPM helps you connect these dots by correlating metrics, traces, and logs into one unified view.

Here’s how CubeAPM makes this correlation powerful and actionable:

- Unified Context: Every query your instrumented application sends to PostgreSQL produces a client span enriched with attributes like db.system: postgresql and db.statement, while the span’s duration records how long the query took. CubeAPM captures these spans and links them to their upstream API requests and downstream logs.

- Trace-Level Visibility: In the Traces view, you can filter by db.system=postgresql or high-latency thresholds to instantly see the slowest SQL calls. Each trace reveals:

  - Execution duration and query text

  - Application endpoint or service where the query originated

  - Correlated metrics from pg_stat_statements, such as mean and total execution time

- Log Correlation: The Logs view displays PostgreSQL slow-query logs alongside trace data. Each log line is automatically linked to its corresponding span, showing:

  - Exact query timestamp and user session

  - Error messages, lock waits, or sequential scans visible in auto_explain plans

- Root-Cause Clarity: Within a few clicks, you can follow a latency spike in /checkout or /orders to the specific SQL query (for example, one hitting a missing index or an unoptimized join) that caused the delay.

- MELT Integration: CubeAPM’s Metrics, Events, Logs, and Traces (MELT) model ensures all data points connect in one place, allowing teams to move from symptom → query → execution plan → fix seamlessly.
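Conceptually, filtering for slow PostgreSQL calls means selecting client spans whose db.system is postgresql and whose duration exceeds a threshold, then grouping them by the endpoint that issued them. A minimal sketch over exported span records; the flat dict shape, including an "endpoint" field standing in for the root span's route, is a hypothetical simplification for illustration, not CubeAPM's or OpenTelemetry's actual data model.

```python
from collections import defaultdict


def slow_db_spans_by_endpoint(spans: list[dict], threshold_ms: float) -> dict[str, list[tuple[float, str]]]:
    """Group PostgreSQL spans slower than threshold_ms by originating endpoint, slowest first."""
    groups: dict[str, list[tuple[float, str]]] = defaultdict(list)
    for s in spans:
        attrs = s["attributes"]
        duration_ms = (s["end_ns"] - s["start_ns"]) / 1e6  # latency comes from span timing
        if attrs.get("db.system") == "postgresql" and duration_ms > threshold_ms:
            groups[s["endpoint"]].append((duration_ms, attrs.get("db.statement", "")))
    return {ep: sorted(items, reverse=True) for ep, items in groups.items()}


spans = [
    {"endpoint": "/orders", "start_ns": 0, "end_ns": 2_400_000_000,
     "attributes": {"db.system": "postgresql",
                    "db.statement": "SELECT o.id, SUM(p.amount) FROM orders o JOIN payments p ON o.id = p.order_id GROUP BY o.id"}},
    {"endpoint": "/orders", "start_ns": 0, "end_ns": 40_000_000,
     "attributes": {"db.system": "postgresql", "db.statement": "SELECT * FROM customers WHERE id = $1"}},
    {"endpoint": "/checkout", "start_ns": 0, "end_ns": 900_000_000,
     "attributes": {"db.system": "redis", "db.statement": "GET cart:42"}},
]
print(slow_db_spans_by_endpoint(spans, threshold_ms=500))
```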

By correlating Postgres slow queries with traces and logs, CubeAPM replaces guesswork with visibility — turning hours of investigation into minutes of insight.

Real-Life Case Study: How a SaaS Platform Reduced Postgres Query Latency by 70% Using CubeAPM

A fast-growing SaaS analytics company operating multi-tenant PostgreSQL clusters noticed recurring query latency spikes during peak traffic. Despite using pg_stat_statements and manual log parsing, the team lacked cross-layer visibility — slow queries surfaced in APIs like /reports and /usage, but engineers couldn’t connect them to specific code paths or services.

Challenge

- Queries exceeding 2–3 seconds appeared intermittently across services.

- No unified view linking Postgres slow queries with application traces.

- Repeated unindexed JOINs were driving up CPU and I/O utilization.

- Debugging required hours of manual log analysis after incidents.

Solution with CubeAPM

- Deployed CubeAPM’s OpenTelemetry Collector and enabled pg_stat_statements across all Postgres instances.

- Used the Traces view to filter db.system=postgresql spans and identify which endpoints triggered the slowest SQL queries.

- Leveraged the Logs integration to correlate PostgreSQL’s slow-query logs and auto_explain output directly within CubeAPM.

- Added query-level alert rules in CubeAPM to notify engineers when the mean execution time exceeded 1 second.

Results

- 70% faster queries: Average latency dropped from 2.8s to 850ms after indexing key joins.

- 35% lower CPU usage: Optimized queries reduced resource load across clusters.

- 45% higher throughput: The database handled more transactions with the existing infrastructure.

- 60% cost savings: An affordable $0.15/GB pricing model eliminated the host-based overages charged by legacy APMs.

By correlating PostgreSQL metrics, traces, and logs through CubeAPM, the company transformed slow-query troubleshooting from hours to minutes — achieving both better performance and lower observability costs.

Verification Checklist & Alert Rules for PostgreSQL Slow Query Monitoring with CubeAPM

Verification Checklist

Before you trust dashboards, verify that Postgres is emitting the right signals and CubeAPM is receiving, correlating, and alerting on them. Use this checklist to validate end-to-end coverage—metrics, traces, and logs—for slow queries.

- pg_stat_statements enabled: Confirm the extension is created and returns rows (e.g., top queries by mean_exec_time). Validate after deploy and on every Postgres upgrade or failover.

- OTel Collector connected to CubeAPM: Check that the OpenTelemetry Collector is scraping Postgres metrics (Prometheus exporter) and exporting to CubeAPM’s OTLP endpoint without drops.

- Query dashboards live: Ensure latency, calls, rows scanned, and sequential scan panels populate; top-N slow queries should be visible for your current window (e.g., last 15–60 minutes).

- Alert rules active: Verify alerts exist for latency thresholds, tail-latency spikes (P95/P99), and anomaly bursts in slow-query counts—and that notifications reach your channels.

- Traces linked to database spans: Open a slow API trace and confirm it contains db.system=postgresql spans with db.statement, and that related slow-query logs are searchable—this validates MELT correlation.
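The pg_stat_statements view only returns data when the module is listed in shared_preload_libraries, which is easy to miss after an upgrade or a failover to a differently configured server. A small helper for that check, assuming you pass in the string returned by SHOW shared_preload_libraries; the function names are illustrative.

```python
def preloaded_modules(setting: str) -> set[str]:
    """Parse a shared_preload_libraries value such as "pg_stat_statements, auto_explain"."""
    modules = set()
    for part in setting.split(","):
        name = part.strip().strip("'\"")
        if name:
            # "$libdir/pg_stat_statements" and "pg_stat_statements" name the same module
            modules.add(name.rsplit("/", 1)[-1])
    return modules


def missing_modules(setting: str, required=("pg_stat_statements",)) -> list[str]:
    """Return the required modules that the setting does not preload."""
    loaded = preloaded_modules(setting)
    return [m for m in required if m not in loaded]


print(missing_modules("pg_stat_statements, auto_explain"))                                # []
print(missing_modules("auto_explain", required=("pg_stat_statements", "auto_explain")))  # ['pg_stat_statements']
```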

Example Alert Rules for Postgres Slow Query Monitoring

1. Average Query Duration Alert

This rule fires if the call-weighted mean execution time across tracked statements stays above 1 second for 10 minutes, signaling a system-wide slowdown. Because pg_stat_statements counters are cumulative, the mean over the last 5 minutes is the rate of total execution time divided by the rate of calls. The metric names assume the prometheus-community postgres_exporter with its stat_statements collector enabled; adjust them to match your exporter.

```yaml
- alert: PostgresMeanQueryLatency
  # Execution seconds per call over the last 5m (call-weighted mean latency)
  expr: |
    sum(rate(pg_stat_statements_seconds_total[5m]))
      / sum(rate(pg_stat_statements_calls_total[5m])) > 1
  for: 10m
  labels:
    severity: critical
  annotations:
    summary: "PostgreSQL query latency >1s"
    description: "Sustained mean query latency detected across multiple queries."
```

2. Tail Latency (P95) Alert

This detects spikes in 95th percentile latency, often the first sign of degraded query performance. pg_stat_statements records only aggregates (min, max, mean, and standard deviation), not latency distributions, so a percentile needs a histogram from another source, such as database client instrumentation. The metric name below is a placeholder.

```yaml
- alert: PostgresP95LatencySpike
  # db_query_duration_seconds_bucket is a hypothetical histogram name;
  # substitute the PostgreSQL query-latency histogram your instrumentation exports
  expr: histogram_quantile(0.95, sum(rate(db_query_duration_seconds_bucket[5m])) by (le)) > 1.5
  for: 5m
  labels:
    severity: warning
  annotations:
    summary: "P95 query latency >1.5s"
    description: "High tail latency observed in PostgreSQL queries. Investigate slow joins or missing indexes."
```
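The for: clause is what separates a sustained slowdown from a momentary blip: the condition must hold at every evaluation for the whole duration before the alert fires, and any false evaluation resets it. A simplified simulation of that pending-to-firing behaviour, assuming evenly spaced evaluations and invented sample values; it ignores details such as evaluation jitter and resolve delays.

```python
from typing import Optional


def first_firing_time(samples: list[tuple[int, float]], threshold: float,
                      for_seconds: int) -> Optional[int]:
    """Return the timestamp at which an alert with a `for` duration would first fire.

    samples are (unix_seconds, value) pairs in evaluation order.
    """
    active_since = None
    for ts, value in samples:
        if value > threshold:
            if active_since is None:
                active_since = ts  # condition just became true: alert is pending
            if ts - active_since >= for_seconds:
                return ts  # condition held for the whole `for` window: alert fires
        else:
            active_since = None  # any false evaluation resets the pending alert
    return None


# Mean latency (seconds) evaluated every minute: a 2-minute blip, then a sustained slowdown.
series = [(0, 0.4), (60, 1.3), (120, 1.2), (180, 0.5)] + [(240 + 60 * i, 1.4) for i in range(12)]
print(first_firing_time(series, threshold=1.0, for_seconds=600))  # 840
```

The blip at 60 to 120 seconds never fires because it clears before 10 minutes pass; the slowdown starting at 240 seconds fires at 840, exactly 10 minutes later.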

Conclusion

Monitoring Postgres slow queries is critical for maintaining database health, application responsiveness, and user satisfaction. Even well-tuned systems can suffer from hidden inefficiencies that gradually erode performance and reliability. Without continuous monitoring, these issues often surface too late—when dashboards stall or customer requests start timing out.

CubeAPM simplifies this challenge with full-stack observability for PostgreSQL. Unifying metrics, traces, and logs allows teams to pinpoint which queries, endpoints, or services contribute to latency. Its OpenTelemetry-native pipelines and real-time dashboards make detecting and resolving slow queries effortless and cost-efficient.

Start monitoring your PostgreSQL slow queries today with CubeAPM and turn reactive debugging into proactive optimization. Experience unified visibility, transparent pricing, and faster troubleshooting—all in one observability platform.

Disclaimer: The information in this article reflects the latest details available at the time of publication and may change as technologies and products evolve.

FAQs

1. How do I identify slow queries in PostgreSQL?

You can add pg_stat_statements to shared_preload_libraries (then restart the server and run CREATE EXTENSION) and set log_min_duration_statement in postgresql.conf to capture queries that exceed your desired threshold. CubeAPM then aggregates these metrics and displays them in latency dashboards for easy visibility.

2. What is a good threshold for slow queries?

For most OLTP systems, queries running longer than 500–1000 milliseconds are considered slow. Analytical or OLAP systems may tolerate longer times, but consistent monitoring helps define realistic baselines for your workload.

3. Can I monitor PostgreSQL slow queries in real time?

Yes. With CubeAPM’s OpenTelemetry integration, slow queries are collected and visualized in real time, allowing engineers to detect latency spikes before they affect users or SLAs.

4. How does CubeAPM help reduce Postgres slow queries?

CubeAPM correlates each slow query with its application trace, logs, and metrics—helping you identify whether the delay comes from missing indexes, inefficient joins, or I/O contention. This deep context makes optimization faster and more accurate.

5. What alerts should I configure for slow queries?

Set up alerts for average query duration and P95 latency using CubeAPM’s alerting engine. For example, trigger a critical alert when the mean query duration exceeds 1s and a warning when P95 latency crosses 1.5s. This ensures early detection of performance regressions.