Monitoring Nginx is critical because it currently powers 33.3% of all websites. Because it serves as a web server, reverse proxy, and load balancer, even small issues in latency or error rates can disrupt user experience and revenue.

Many teams face challenges with Nginx monitoring: hidden 5xx errors, overwhelming log volumes without smart sampling, and fragmented tools that slow down troubleshooting. At scale, costs rise and vital signals get buried in noise.

CubeAPM is the best solution for monitoring Nginx. It unifies metrics, structured logs, and error tracing in an OpenTelemetry-native platform, with smart sampling and flat $0.15/GB pricing for predictable costs and full-stack visibility.

In this article, we’ll explore what Nginx is, why monitoring it matters, key metrics, and how CubeAPM makes Nginx monitoring reliable and cost-efficient.

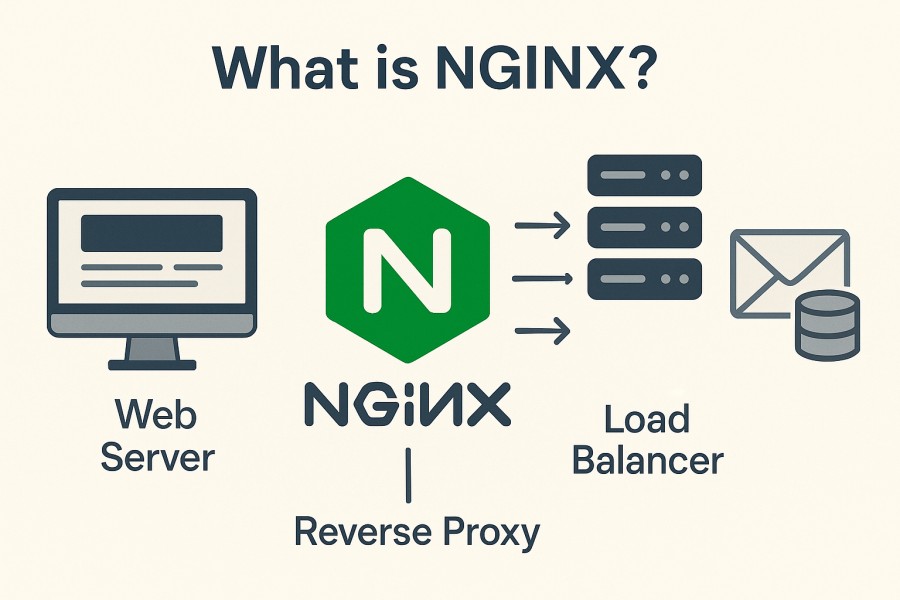

What is Nginx?

Nginx is a high-performance, open-source web server that also functions as a reverse proxy, load balancer, and caching engine. Known for its event-driven, asynchronous architecture, it can handle thousands of concurrent connections with minimal resource usage, making it one of the most widely adopted servers on the internet today.

For businesses, Nginx provides the foundation for fast, reliable, and secure digital experiences:

- Scalability: Handles high volumes of concurrent requests without significant overhead.

- Flexibility: Serves static content, proxies traffic to backend apps, and integrates with modern microservices.

- Resilience: Balances traffic across multiple servers and caches responses to reduce latency.

- Security: Filters malicious traffic, manages TLS/SSL certificates, and helps prevent common attacks.

Together, these capabilities enable enterprises, SaaS providers, and e-commerce platforms to deliver consistent uptime, fast performance, and cost-efficient infrastructure at scale.

Example: Using Nginx for a SaaS Application API Gateway

A SaaS company can use Nginx as an API gateway to manage thousands of client requests to its microservices. With built-in load balancing, Nginx distributes traffic evenly across service instances, avoiding downtime during traffic surges. Rate limiting protects APIs from abuse, caching speeds up repeated queries, and SSL termination secures data exchanges — resulting in reliable performance and a smoother customer experience.

Why Monitoring Nginx Deployments is Important

Catch upstream and gateway failures before users do

Nginx often fronts application backends; when upstreams stall or time out, users see 502/504 errors and abandoned sessions. Proactive monitoring of $upstream_response_time, $upstream_connect_time, 504 gateway timeouts, and proxy_next_upstream retry behavior lets you spot regressions early and tie spikes in 5xx to specific backends or routes. Troubleshooting guides consistently trace 502/504 to slow or crashing upstreams, which makes per-route latency and error telemetry non-negotiable.

Defend against modern L7 attack patterns that look like “legit” traffic

Application-layer DDoS is accelerating, and Nginx sits on the front line. Cloudflare reports 21.3 million DDoS attacks blocked in 2024 (a 53% YoY increase), with hundreds of “hyper-volumetric” events crossing 1 Tbps—attacks that saturate request handling and connection queues if you’re blind to surge patterns. Pair this with the HTTP/2 Rapid Reset technique that pushed attacks to 201 million requests/sec, and you need request-rate, per-IP anomaly, and connection state visibility directly on your Nginx tier.

Avoid certificate-related outages as TLS lifecycles shrink

Shorter certificate lifetimes are becoming the norm: industry watchers expect most SSL/TLS certs to drop to 6-month validity by 2026, and Let’s Encrypt has announced short-lived 6-day certs and the end of expiration reminder emails—meaning automation and monitoring of TLS expiry on Nginx endpoints is now essential. Track certificate expiration, handshake failures, and protocol errors to prevent sudden outages.
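As a rough illustration of tracking certificate expiration, the sketch below uses only the Python standard library. The 14-day warning window and the function names are assumptions made for this example, not CubeAPM settings; with very short-lived certificates the window would need to be much smaller.

```python
import socket
import ssl
from datetime import datetime, timezone


def days_until_expiry(not_after: str, now: datetime) -> float:
    """Days between `now` and a certificate's notAfter string.

    `not_after` uses the format returned by SSLSocket.getpeercert(),
    for example "Jan 11 00:00:00 2030 GMT".
    """
    expires_at = ssl.cert_time_to_seconds(not_after)  # seconds since the epoch (UTC)
    return (expires_at - now.timestamp()) / 86400


def needs_renewal(not_after: str, now: datetime, warn_days: int = 14) -> bool:
    """True when the certificate expires within `warn_days` (assumed window)."""
    return days_until_expiry(not_after, now) < warn_days


def fetch_not_after(host: str, port: int = 443, timeout: float = 5.0) -> str:
    """Open a TLS connection and return the peer certificate's notAfter field."""
    context = ssl.create_default_context()
    with socket.create_connection((host, port), timeout=timeout) as sock:
        with context.wrap_socket(sock, server_hostname=host) as tls:
            return tls.getpeercert()["notAfter"]
```

In practice you would call `fetch_not_after` for each Nginx endpoint on a schedule and alert when `needs_renewal` returns true.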

Plan capacity with connection and worker metrics, not guesswork

Nginx’s event-driven workers are efficient, but saturation shows up as rising active connections, accept failures, backlog growth, or a wave of 499 client-closed requests. Monitoring connections { active, reading, writing, waiting }, worker utilization, and queue depth—plus per-location request rates—lets you right-size keepalives, buffers, and worker_connections before throughput flattens. NGINX’s own monitoring guidance emphasizes live activity views to watch these load characteristics in real time.

Control noise and cost with structured logs and targeted retention

Raw access/error logs balloon fast; without structure, they’re expensive and hard to query. Adopting JSON log formats with consistent fields (route, status, upstream timing, request ID) and life-cycle retention makes detection of anomalies—like route-specific latency or bot spikes—reliable and cost-efficient. Recent operational guides stress that Nginx logging done right is foundational for performance and incident response.

Tie the technical risk to business impact

When Nginx blips cascade into downtime, the financial hit is real. Independent survey data shows 90% of enterprises put the hourly cost of downtime above $300,000, with 41% estimating $1M–$5M per hour—a direct incentive to surface Nginx-side errors and latency regressions before they escalate. Use SLO-aligned alerts on p95/p99 latency and 5xx rates to protect KPIs that matter to the business.

Prepare for traffic spikes and promotions without firefighting

Whether a launch, marketing campaign, or seasonal peak, Nginx is the first layer to feel the surge. Monitoring request throughput, cache hit ratio, upstream queue time, and per-backend error rates allows proactive scaling and cache/TTL tuning, so your origin survives the spike—and your users never notice. Cloud-scale reports show quarter-over-quarter growth in large DDoS and high-traffic events, reinforcing the need to watch these signals at the edge.

Keep security posture strong without interrupting performance

Nginx often enforces rate limits, IP reputation filters, and mTLS. Observability into blocked/allowed rates, 401/403 patterns, and TLS version/cipher use lets you tighten controls without breaking legitimate traffic. As record-sized attacks keep appearing, having these security-adjacent metrics on your Nginx tier is a practical safeguard.

Key Metrics to Track in Nginx Monitoring

Monitoring Nginx requires keeping a close eye on several categories of metrics that reflect its performance, stability, and security. Each category provides unique insights into how your web server and proxy layer are behaving under real-world load.

Traffic & Request Metrics

These metrics highlight the overall volume of traffic flowing through Nginx and how well it is being served.

- Requests per second (RPS): Tracks the number of HTTP requests Nginx handles each second. A sudden spike can signal a traffic surge, while a drop may indicate service degradation. Threshold: sustained >2x baseline over 5 minutes should be investigated.

- Request distribution by status code: Monitors the proportion of 2xx, 3xx, 4xx, and 5xx responses. A rise in 4xx may point to client errors, while 5xx indicates server-side issues. Threshold: 5xx above 1% of total requests need immediate attention.

- Request size and response size: Measures average payload sizes to identify anomalies such as abnormally large uploads or downloads that may strain bandwidth. Threshold: Request sizes exceeding 10 MB regularly could require tuning.
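To make the status-code distribution concrete, here is a minimal sketch that computes the share of each status class from a list of response codes and checks the 1% threshold for 5xx mentioned above. The function names are illustrative, and the input is assumed to be status codes already extracted from access logs.

```python
from collections import Counter


def status_class_share(statuses):
    """Return the share of responses per status class, e.g. {"2xx": 0.97}."""
    if not statuses:
        return {}
    counts = Counter(f"{code // 100}xx" for code in statuses)
    total = len(statuses)
    return {status_class: n / total for status_class, n in counts.items()}


def breaches_5xx_threshold(statuses, threshold=0.01):
    """True when the 5xx share of all requests exceeds the threshold."""
    return status_class_share(statuses).get("5xx", 0.0) > threshold
```

For example, 2 responses with status 502 out of 100 requests give a 5xx share of 2%, which is above the 1% threshold.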

Connection Metrics

Connections show how Nginx is managing client sessions and whether resources are being saturated.

- Active connections: Indicates how many concurrent connections Nginx is handling. A rapid increase can signal DDoS or traffic spikes. Threshold: 80–90% of worker_connections limit should trigger alerts.

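- Reading, writing, and waiting connections: Breaks down how many connections are currently reading client requests, writing responses, or sitting idle on keep-alive. High waiting counts usually reflect idle keep-alive connections rather than overloaded workers, so read them alongside keepalive_timeout. Threshold: waiting >70% of total active connections is worth reviewing, since idle connections still count against worker_connections.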

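- Dropped connections: Tracks connections that Nginx accepted but did not handle (accepts minus handled in stub_status), usually because a resource limit such as worker_connections was reached. Frequent drops may point to queue saturation or TCP-level issues. Threshold: >1% of total connections dropped consistently requires tuning.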
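The connection metrics above map onto the plain-text output of the open-source stub_status module. The sketch below parses that output and derives dropped connections and a rough utilization figure. The sample numbers, and the worker_processes and worker_connections values passed in the usage note, are assumptions for illustration.

```python
import re

# Sample stub_status output; the numbers are illustrative.
SAMPLE = """Active connections: 291
server accepts handled requests
 16630948 16630948 31070465
Reading: 6 Writing: 179 Waiting: 106
"""


def parse_stub_status(text):
    """Parse stub_status text into a dict of integer counters."""
    active = re.search(r"Active connections:\s*(\d+)", text)
    totals = re.search(
        r"^[ \t]*(\d+)[ \t]+(\d+)[ \t]+(\d+)[ \t]*$", text, re.MULTILINE
    )
    states = re.search(
        r"Reading:\s*(\d+)\s+Writing:\s*(\d+)\s+Waiting:\s*(\d+)", text
    )
    if not (active and totals and states):
        raise ValueError("unexpected stub_status format")
    accepts, handled, requests = map(int, totals.groups())
    reading, writing, waiting = map(int, states.groups())
    return {
        "active": int(active.group(1)),
        "accepts": accepts,
        "handled": handled,
        "requests": requests,
        "reading": reading,
        "writing": writing,
        "waiting": waiting,
        # Connections accepted but never handled.
        "dropped": accepts - handled,
    }


def connection_utilization(active, worker_processes, worker_connections):
    """Active connections as a fraction of the configured connection ceiling."""
    return active / (worker_processes * worker_connections)
```

With 4 workers and worker_connections set to 1024, `connection_utilization(291, 4, 1024)` is about 7%, well under the 80–90% alerting range. Treat the ceiling as approximate, because proxied requests also consume connections to upstreams.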

Latency & Performance Metrics

These metrics uncover how efficiently Nginx handles traffic and communicates with upstreams.

- Request processing time: Measures how long Nginx spends serving requests. Spikes often indicate backend slowness. Threshold: p95 above 500ms should be investigated.

- Upstream response time: Captures delays introduced by backend servers that Nginx proxies to. Persistent high values may reveal API or database issues. Threshold: upstream latency >300ms for >5 minutes is concerning.

- Cache hit ratio: Shows how effectively Nginx is serving requests from cache instead of fetching upstream. Low ratios increase load on backends. Threshold: a hit ratio below 70% in caching-heavy workloads signals misconfiguration.
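A small sketch of how the p95 latency and cache hit ratio above could be computed from logged values. It assumes request times in seconds (as in $request_time) and cache statuses as logged by $upstream_cache_status; the nearest-rank percentile method is one common choice, not necessarily what any particular platform uses.

```python
import math


def percentile(values, pct):
    """Nearest-rank percentile: smallest value with at least pct% of data at or below it."""
    if not values:
        raise ValueError("values must not be empty")
    ordered = sorted(values)
    rank = math.ceil(pct * len(ordered) / 100)
    return ordered[max(rank, 1) - 1]


def cache_hit_ratio(cache_statuses):
    """Share of cache-eligible requests served as HIT.

    Requests logged as "-" or empty did not go through the cache and are ignored.
    """
    considered = [s for s in cache_statuses if s and s != "-"]
    if not considered:
        return None
    hits = sum(1 for s in considered if s == "HIT")
    return hits / len(considered)
```

Comparing `percentile(request_times, 95)` against 0.5 and `cache_hit_ratio(...)` against 0.7 mirrors the thresholds listed above.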

Error Metrics

Errors provide direct insight into both client behavior and server stability.

- 5xx error rate: Identifies server-side failures like 502, 503, or 504. These often point to upstream crashes or misconfigured reverse proxies. Threshold: above 1% over baseline requires immediate action.

- 4xx error rate: Reflects client-side issues such as bad requests or unauthorized access. A sharp increase may also signal bot traffic or misrouted requests. Threshold: sustained rise >10% compared to baseline is unusual.

- Handshake failures: Tracks TLS/SSL negotiation issues such as expired or misconfigured certificates. A rise here often leads to downtime and loss of secure connections. Threshold: any increase above normal baseline warrants urgent review.

Resource & System Metrics

These measure how much of the host system Nginx consumes, ensuring the server itself doesn’t become the bottleneck.

- CPU usage by workers: High CPU load may indicate inefficient configuration or a flood of expensive requests. Threshold: workers using >85% CPU consistently suggest scaling or tuning.

- Memory usage: Monitors how much RAM Nginx processes consume. Memory leaks or poor caching configuration can cause spikes. Threshold: memory usage above 80% of host capacity should be flagged.

- Disk I/O from logs: Continuous heavy logging can saturate disk I/O, slowing down overall performance. Threshold: logging consuming >70% disk bandwidth requires rotation or offloading.

Collecting Nginx Logs for Monitoring with CubeAPM

Logs are the backbone of Nginx observability, offering detailed insights into traffic behavior, errors, and performance bottlenecks. With CubeAPM, you can collect, structure, and analyze these logs seamlessly through its OpenTelemetry-native pipeline, transforming raw data into actionable dashboards and alerts.

Access Logs

Access logs capture every request handled by Nginx, including request path, response status, user agent, and bytes transferred. These are vital for tracking user traffic, identifying hot endpoints, and spotting anomalies such as unusual request patterns or bot traffic. In CubeAPM, access logs are ingested in structured JSON, enabling easy filtering, aggregation, and visualization on dashboards. Threshold: a sustained increase in 4xx/5xx responses >5% in access logs should trigger alerts.

Error Logs

Error logs record issues such as misconfigurations, failed upstream connections, permission denials, or resource exhaustion. These logs often provide the root cause of Nginx failures that status codes alone cannot explain. CubeAPM correlates error logs with metrics and traces, so you can pinpoint which upstream or location block caused the issue. Threshold: spikes in error log entries above baseline (>50 errors/min) should be investigated immediately.

Structured JSON Logging

Switching to JSON log format simplifies parsing and analysis, avoiding brittle regex pipelines. In nginx.conf, you can define a log_format named json (with the escape=json parameter so values are escaped safely) that captures fields like $request_time, $upstream_response_time, $status, and $request_id. CubeAPM natively supports JSON logs, so these fields appear as structured attributes you can query and chart. Threshold: ensure >95% of logs are structured consistently; otherwise, ingestion pipelines may drop fields.
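To show what structured logs buy you downstream, the sketch below parses JSON access-log lines and measures how many are well formed. The key names (request_id, uri, status, request_time, upstream_response_time) are assumptions about how the log_format is defined. Note that $upstream_response_time can be "-" or hold several values separated by commas and colons when Nginx contacts more than one upstream; summing them is a choice made for this example.

```python
import json

# Hypothetical lines as a JSON log_format might emit them.
SAMPLE_LINES = [
    '{"request_id":"a1","uri":"/checkout","status":"502",'
    '"request_time":"1.204","upstream_response_time":"0.600, 0.604"}',
    '{"request_id":"a2","uri":"/","status":"200",'
    '"request_time":"0.012","upstream_response_time":"-"}',
    "not json at all",
]


def parse_upstream_time(raw):
    """Sum the per-attempt upstream times; return None when no upstream was used."""
    parts = [p.strip() for p in str(raw).replace(":", ",").split(",")]
    values = [float(p) for p in parts if p and p != "-"]
    return sum(values) if values else None


def parse_access_line(line):
    """Return a normalized record, or None if the line is not usable JSON."""
    try:
        record = json.loads(line)
        return {
            "request_id": record.get("request_id"),
            "uri": record.get("uri"),
            "status": int(record["status"]),
            "request_time": float(record["request_time"]),
            "upstream_time": parse_upstream_time(
                record.get("upstream_response_time", "-")
            ),
        }
    except (ValueError, KeyError, TypeError, AttributeError):
        return None


def structured_share(lines):
    """Fraction of lines that parse into a complete record."""
    if not lines:
        return 0.0
    ok = sum(1 for line in lines if parse_access_line(line) is not None)
    return ok / len(lines)
```

A `structured_share` below 0.95 on a sample of production logs would indicate that the consistency target above is not being met.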

Ingesting Logs into CubeAPM

Once logs are structured, CubeAPM’s OpenTelemetry Collector ships them into the platform with minimal setup. From there, you can:

- Build dashboards to visualize error rates, latency per route, or TLS handshake failures.

- Define alerts using PromQL-like rules (e.g., 5xx error rate >1% for 5 minutes).

- Apply smart sampling to retain statistically significant data while cutting log volume by 70–80%, reducing costs without losing visibility.

How to Monitor Nginx with CubeAPM

Monitoring Nginx in CubeAPM involves setting up ingestion pipelines, configuring logs, and enabling dashboards that give you visibility into latency, errors, and throughput. Here’s a step-by-step process:

Step 1: Deploy OpenTelemetry Collector

Start by installing CubeAPM in your environment. You can deploy it on bare metal or VMs, Docker, or Kubernetes with Helm. Once installed, set up the OpenTelemetry Collector to receive Nginx telemetry. The Collector acts as the bridge between your Nginx logs/metrics and CubeAPM, ensuring data is parsed and exported reliably.

Step 2: Configure Access and Error Logs with JSON Format

Enable structured logging for Nginx by defining a JSON log_format in your nginx.conf and referencing it from the access_log directive. This ensures fields like $status, $request_time, and $upstream_response_time are captured in JSON, making them machine-readable. Note that log_format applies to access logs only; the error_log format is fixed by Nginx, so error logs need to be parsed at collection time. Forward both logs using the CubeAPM Logs pipeline to centralize analysis. Properly structured logs allow CubeAPM to index status codes, response times, and upstream metrics with minimal parsing overhead.

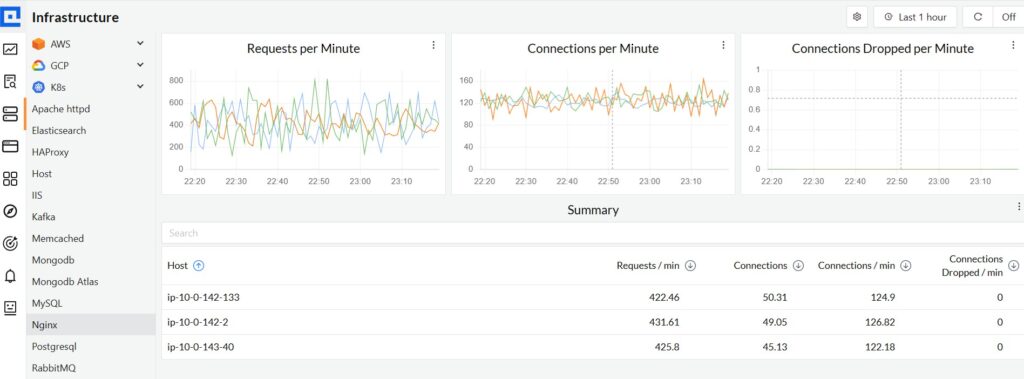

Step 3: Enable Nginx Dashboards for Latency, Throughput, and TLS Errors

Once logs and metrics are ingested, CubeAPM automatically populates dashboards tailored to Nginx workloads. Using the Infra Monitoring module, you can visualize key metrics such as request throughput, active connections, error rates, and TLS handshake failures. These dashboards provide drill-down views that let you connect high-level performance issues with granular log details in seconds.

Step 4: Apply Smart Sampling for Cost-Efficient Observability

Large-scale Nginx deployments generate terabytes of logs monthly. CubeAPM’s Smart Sampling reduces data volume by up to 70–80% while retaining statistical fidelity. For example, 10 TB of raw monthly Nginx logs sampled down by 75% leaves ~2.5 TB of billed volume, which costs about $375 at CubeAPM’s flat $0.15/GB pricing (2,500 GB × $0.15) versus $4,000+ on legacy APM tools. This makes high-volume observability predictable and affordable.
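The cost figure above can be reproduced with a few lines of arithmetic. This sketch assumes 1 TB = 1,000 GB and treats the sampling reduction as a simple fraction of raw volume; the function name is illustrative.

```python
def billed_cost_usd(raw_tb, reduction, price_per_gb=0.15, gb_per_tb=1000):
    """Monthly cost after sampling removes `reduction` (0..1) of the raw volume."""
    if not 0 <= reduction <= 1:
        raise ValueError("reduction must be between 0 and 1")
    billed_gb = raw_tb * gb_per_tb * (1 - reduction)
    return billed_gb * price_per_gb


# 10 TB raw at a 75% reduction leaves 2,500 GB billed.
example_cost = billed_cost_usd(10, 0.75)
```

Across the quoted 70–80% range, the same 10 TB works out to roughly $450 down to $300 per month.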

Real-World Example: Monitoring an E-commerce Platform with CubeAPM

Challenge: Checkout failures with 502 errors

Peak traffic campaigns were triggering intermittent 502 Bad Gateway responses at the edge. Nginx error logs showed upstream timeouts and upstream prematurely closed connection messages, while access logs revealed spikes in POST /checkout and elevated p95 latency. The team needed to prove whether the bottleneck was Nginx itself or the payment provider upstream.

Solution: Correlated Nginx logs with upstream latency in CubeAPM

Using structured JSON access/error logs, CubeAPM linked each failing request to its upstream timings ($upstream_connect_time, $upstream_header_time, $upstream_response_time) and traced them to payment API calls. Dashboards overlaid 5xx rate, upstream latency, and connection pool saturation, making it obvious that timeouts clustered around the payment gateway during TLS renegotiation bursts.

Fixes: Optimized keepalive + payment API integration

Engineers tuned proxy_connect_timeout, proxy_read_timeout, and keepalive_requests, and enabled keepalive on upstream pools to reduce handshake churn. They also increased proxy_buffers and aligned retry/backoff via proxy_next_upstream to avoid thundering herds, while the payment client was updated to reuse connections and respect server-side rate limits.

Result: 40% fewer checkout errors, higher conversions

Post-change, CubeAPM showed a 40% drop in 502s on /checkout, p95 latency fell from ~780ms to ~420ms, and abandoned sessions declined measurably. With alerts on 5xx ratio and p95 latency, the team now catches regressions within minutes and sails through promotions without firefighting.

Verification Checklist & Example Alert Rules for Nginx Monitoring with CubeAPM

Before putting Nginx into production, it’s essential to validate that your observability setup is complete. CubeAPM makes it easier to verify key dimensions of health, performance, and security while giving you the flexibility to define alert rules that catch problems before they reach users.

Verification Checklist

- Uptime and availability: Confirm that Nginx endpoints are covered by synthetic checks to detect downtime instantly.

- Error tracking: Ensure access and error logs are structured in JSON and ingested into CubeAPM for visibility into 4xx/5xx spikes.

- Latency monitoring: Validate that p95/p99 request latency and upstream response times are captured and displayed in dashboards.

- TLS health: Check that CubeAPM tracks certificate expiration dates and handshake failures.

- Capacity metrics: Confirm that active connections, worker utilization, and dropped connections are being ingested.

Example Alert Rules in CubeAPM

Here are a few practical alert rules (PromQL-style) you can configure in CubeAPM. Metric names vary by exporter, so adjust them to match what your setup emits:

1. Alert for high 5xx error rate

    nginx_http_requests_total{status=~"5.."} > 5

    groups:
      - name: nginx-core
        rules:
          - alert: NginxHigh5xxErrorRate
            # Aggregate both sides per instance so the label sets match in the division.
            expr: |
              sum by (instance) (increase(nginx_http_requests_total{status=~"5.."}[5m]))
              /
              sum by (instance) (increase(nginx_http_requests_total[5m])) > 0.01
            for: 5m
            labels:
              severity: critical
              service: nginx
            annotations:
              summary: "High 5xx rate on Nginx"
              description: "5xx >1% over 5m on {{$labels.instance}}. Check upstream health, timeouts, and proxy buffers."
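The `for: 5m` clause means the condition must keep holding before the alert fires. The sketch below is a simplified model of that behavior, assuming one rule evaluation per minute. It is an illustration of Prometheus-style semantics under that assumption, not CubeAPM's actual evaluator.

```python
def alert_states(ratios, threshold=0.01, for_evaluations=5):
    """Return 'inactive', 'pending', or 'firing' for each evaluation.

    `ratios` holds the 5xx ratio seen at each evaluation. With a one-minute
    interval, `for_evaluations=5` models `for: 5m`: the alert is pending from
    the first breach and fires once the breach has lasted five intervals.
    """
    states = []
    streak = 0  # consecutive evaluations above the threshold
    for ratio in ratios:
        if ratio > threshold:
            streak += 1
            # streak - 1 intervals have elapsed since the first breach.
            states.append("firing" if streak - 1 >= for_evaluations else "pending")
        else:
            streak = 0
            states.append("inactive")
    return states
```

A short burst of errors that clears within a few minutes stays in the pending state and never pages anyone, which is the point of the `for` clause.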

2. Alert for TLS handshake failures

    nginx_ssl_handshake_errors_total > 1

      - name: nginx-tls
        rules:
          - alert: NginxTLSHandshakeFailures
            expr: rate(nginx_ssl_handshake_errors_total[5m]) > 0
            for: 3m
            labels:
              severity: warning
              service: nginx
            annotations:
              summary: "TLS handshake failures detected"
              description: "Handshake errors rising on {{$labels.instance}}. Verify cert expiry, ciphers, SNI, and chain."

3. Alert for high p95 request latency

    histogram_quantile(0.95, rate(nginx_request_duration_seconds_bucket[5m])) > 0.5

      - name: nginx-latency
        rules:
          - alert: NginxP95LatencyHigh
            # Keep the instance label in the aggregation so the description can reference it.
            expr: |
              histogram_quantile(
                0.95,
                sum by (le, instance) (rate(nginx_request_duration_seconds_bucket[5m]))
              ) > 0.5
            for: 10m
            labels:
              severity: critical
              service: nginx
            annotations:
              summary: "High p95 request latency"
              description: "p95 >500ms on {{$labels.instance}}. Check upstream response times, cache hit ratio, and I/O."

Conclusion

Monitoring Nginx is essential for ensuring reliable performance, maintaining security, and keeping user experiences seamless. From handling massive amounts of traffic to balancing upstream services, visibility into metrics, logs, and errors can make the difference between smooth operations and costly outages.

CubeAPM delivers everything you need for Nginx observability — structured log ingestion, latency tracking, error correlation, and real-time dashboards — all powered by an OpenTelemetry-native pipeline. Its flat $0.15/GB pricing and smart sampling make it possible to achieve enterprise-grade monitoring without unpredictable costs.

Start monitoring Nginx with CubeAPM today and gain full-stack visibility, faster troubleshooting, and cost-efficient observability that scales with your business. Take a free demo.

Disclaimer: The information in this article reflects the latest details available at the time of publication and may change as technologies and products evolve.

FAQs

Q1. Can Nginx monitoring help detect security threats like brute-force or bot attacks?

Yes, monitoring Nginx logs for repeated 401/403 responses, unusual request patterns, or abnormal connection spikes can help detect brute-force attempts and bot traffic early, enabling proactive defenses.

Q2. How often should I rotate or archive Nginx logs?

Best practice is to rotate logs daily, keep about 30 days readily searchable for hot analysis, and archive older logs for roughly 180 days of long-term storage, balancing cost control with compliance readiness.

Q3. Does Nginx monitoring cover HTTP/2 and HTTP/3 traffic?

Yes. Nginx supports HTTP/2 and, in recent releases, QUIC/HTTP/3, and the $server_protocol variable records the protocol of each request in access logs. Those logs can be ingested into observability platforms for visibility into protocol-level issues.

Q4. Can I monitor Nginx running inside containers or Kubernetes?

Absolutely. By exporting logs and metrics from containerized Nginx instances and routing them through OpenTelemetry, you can integrate Nginx monitoring into Kubernetes observability workflows.

Q5. What are the key differences between monitoring Nginx open source and Nginx Plus?

Nginx Plus adds capabilities such as active upstream health checks, session persistence, and live activity monitoring, along with the richer metrics that come with them. Open-source Nginx exposes only the basic stub_status counters and relies on external exporters for the rest. Both can be integrated into observability tools like CubeAPM for full visibility.