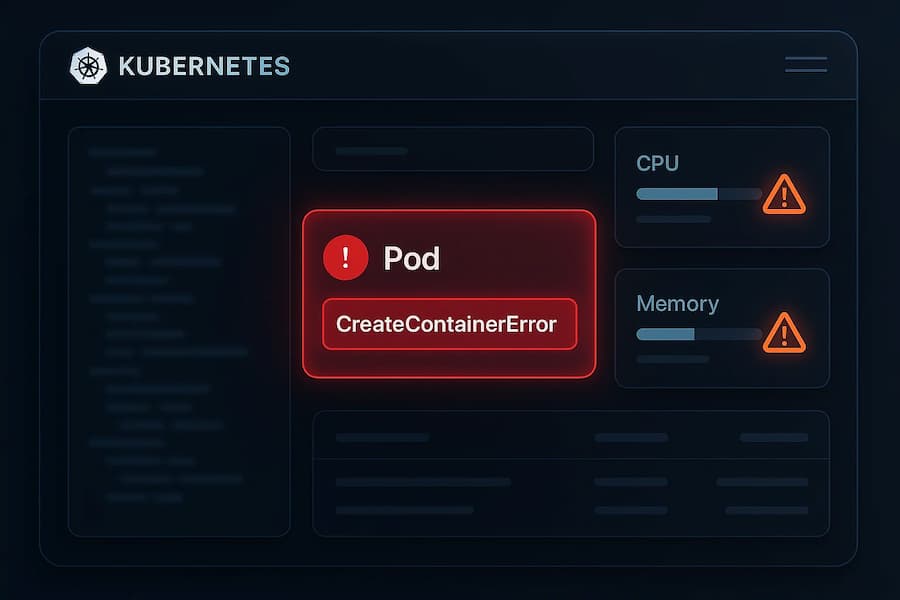

Kubernetes CreateContainerError is a common issue that occurs when the container runtime fails to launch a Pod’s container. Left unresolved, it can stall deployments, disrupt CI/CD pipelines, and trigger production downtime. With Kubernetes now powering workloads in 66% of organizations worldwide, even small configuration errors can ripple into major business impact.

Tools like CubeAPM simplify how teams diagnose and monitor these issues. CubeAPM collects logs, metrics, and Kubernetes events in real time, giving engineers full visibility into why a Pod failed to start. With built-in OpenTelemetry support, predictive alerting, and clear dashboards, CubeAPM accelerates root-cause analysis and reduces MTTR.

In this guide, we’ll explore what CreateContainerError means, why it happens, how to fix it, and how to monitor it effectively using CubeAPM.

What is CreateContainerError in Kubernetes

Before a Pod runs, it moves through several stages: scheduling onto a node, pulling the container images, creating the containers, and starting them. A CreateContainerError means the process failed specifically at the container creation step. The Pod never reaches the Running state, so the workload stays unavailable.

The points below show how Kubernetes interprets this error internally and the common scenarios where teams encounter it:

1. Pod initialization failure

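From Kubernetes’ perspective, CreateContainerError means the kubelet asked the container runtime (such as containerd or CRI-O) to create the container and the runtime returned an error. The kubelet records CreateContainerError as the container’s waiting reason in the Pod status and keeps retrying, so the Pod stays stuck until the underlying problem is fixed.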

2. Typical scenarios

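From a user’s point of view, CreateContainerError usually stems from practical issues such as a wrong image name or tag, missing pull credentials for private registries, an invalid entrypoint, or insufficient node resources. Each of these prevents the Pod from completing startup and leaves it stuck in a waiting state. Some of them usually surface first under a related reason (image problems as ErrImagePull or ImagePullBackOff, unschedulable resource requests as a Pending Pod with a FailedScheduling event), so always read the exact reason reported for the container.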
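Both views meet in the Pod status, where the kubelet stores the waiting reason for each container. As a minimal sketch, assuming you have saved the output of `kubectl get pods -A -o json`, the hypothetical helper below lists every container (init containers included) whose waiting reason is CreateContainerError, together with the runtime's message.

```python
import json
from typing import List, Tuple


# Hypothetical helper: a local sketch over `kubectl get pods -A -o json` output,
# not part of kubectl or CubeAPM.
def containers_in_create_error(pod_list_json: str) -> List[Tuple[str, str, str, str]]:
    """Return (namespace, pod, container, message) for each container whose
    waiting reason is CreateContainerError."""
    pod_list = json.loads(pod_list_json)
    hits = []
    for pod in pod_list.get("items", []):
        meta = pod.get("metadata", {})
        status = pod.get("status", {})
        # Init containers can hit CreateContainerError too, so check both lists.
        statuses = status.get("initContainerStatuses", []) + status.get("containerStatuses", [])
        for cs in statuses:
            waiting = cs.get("state", {}).get("waiting") or {}
            if waiting.get("reason") == "CreateContainerError":
                hits.append((
                    meta.get("namespace", ""),
                    meta.get("name", ""),
                    cs.get("name", ""),
                    waiting.get("message", ""),
                ))
    return hits
```

For example, pipe `kubectl get pods -A -o json` into a small script that calls `containers_in_create_error(sys.stdin.read())` and prints each tuple.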

Why CreateContainerError in Kubernetes Happens

A CreateContainerError can stem from several root causes. Some are simple config mistakes, others come from deeper runtime or cluster restrictions.

Here’s a detailed breakdown:

1. Wrong image name or tag

A mistyped image name or tag is one of the most common reasons for CreateContainerError. Kubernetes tries to pull the image, but if the tag doesn’t exist in the registry, the container can’t be created.

Example: nginx:latestt instead of nginx:latest.

Quick check:

kubectl describe pod <pod-name>

If the Events section says manifest for <image> not found, it’s almost always due to a bad tag.
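Kubernetes pulls exactly the reference written in the Pod spec, and a reference without a tag or digest is pulled as `:latest`. The hypothetical helper below is a simplified sketch (it does not validate the full image reference grammar) that splits a reference into repository, tag, and digest, which makes typos such as `latestt` easy to spot when you compare them with the tags listed in your registry.

```python
from typing import Optional, Tuple


# Hypothetical helper: simplified parsing for eyeballing image references.
def split_image_ref(ref: str) -> Tuple[str, Optional[str], Optional[str]]:
    """Split an image reference into (repository, tag, digest)."""
    digest = None
    if "@" in ref:
        ref, digest = ref.split("@", 1)
    # A ':' after the last '/' starts a tag; a ':' before it is a registry port.
    last_slash = ref.rfind("/")
    last_colon = ref.rfind(":")
    tag = None
    if last_colon > last_slash:
        ref, tag = ref[:last_colon], ref[last_colon + 1:]
    # With neither a tag nor a digest, the image is pulled as ':latest'.
    if tag is None and digest is None:
        tag = "latest"
    return ref, tag, digest
```

For instance, `split_image_ref("nginx:latestt")` returns `("nginx", "latestt", None)`, and the tag can then be checked against the registry.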

2. Missing image pull credentials

If the image lives in a private registry, Kubernetes needs a valid imagePullSecret. Without it, the runtime fails at container creation.

Quick check:

kubectl describe pod <pod-name>

If you see Failed to pull image: unauthorized, then the Pod doesn’t have the right credentials.

3. Invalid entrypoint or command

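When the Pod spec includes a wrong command or args, the runtime cannot launch the container process. Depending on the runtime and the exact failure, this shows up as CreateContainerError, as RunContainerError, or as a container that exits immediately and ends in CrashLoopBackOff.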

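Example: running a script path that doesn’t exist inside the image, or using an image with no ENTRYPOINT or CMD and no command in the Pod spec, which the runtime rejects with no command specified.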

Quick check:

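kubectl logs <pod-name> --previous

Look for errors like exec: not found in the logs. If the container never started, there are no logs to show, so check the container state and Events in kubectl describe pod as well.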

4. Insufficient node resources

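If the Pod requests more CPU or memory than the node can provide, the container cannot be started. In practice, the scheduler normally refuses to place such a Pod and leaves it Pending with a FailedScheduling event, so look for that event alongside the node’s capacity.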

Quick check:

kubectl describe node <node-name>

If allocatable CPU/Memory is lower than what the Pod requests, Kubernetes won’t start the container.
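Requests and allocatable capacity are written as Kubernetes quantities (`250m` CPU, `512Mi` memory), which are awkward to compare by eye. The sketch below, with hypothetical helper names, converts the common suffixes to plain numbers and checks a request against free capacity, meaning allocatable minus what the Allocated resources section of `kubectl describe node` already reports as requested. It assumes you copy those values by hand and does not validate its input.

```python
from decimal import Decimal

# Multipliers for common Kubernetes quantity suffixes (binary and decimal).
_SUFFIXES = {
    "Ki": Decimal(2) ** 10, "Mi": Decimal(2) ** 20, "Gi": Decimal(2) ** 30,
    "Ti": Decimal(2) ** 40, "Pi": Decimal(2) ** 50, "Ei": Decimal(2) ** 60,
    "n": Decimal("1e-9"), "u": Decimal("1e-6"), "m": Decimal("1e-3"),
    "k": Decimal(10) ** 3, "M": Decimal(10) ** 6, "G": Decimal(10) ** 9,
    "T": Decimal(10) ** 12, "P": Decimal(10) ** 15, "E": Decimal(10) ** 18,
}


# Hypothetical helper: converts '250m', '512Mi', '2' and similar to a number.
def parse_quantity(q: str) -> Decimal:
    q = q.strip()
    # Check two-letter suffixes first so 'Mi' is not mistaken for 'M'.
    for suffix in sorted(_SUFFIXES, key=len, reverse=True):
        if q.endswith(suffix):
            return Decimal(q[: -len(suffix)]) * _SUFFIXES[suffix]
    return Decimal(q)


# Hypothetical helper: per resource, does the request fit in the free capacity?
def fits(requests: dict, free: dict) -> dict:
    return {
        name: parse_quantity(amount) <= parse_quantity(free.get(name, "0"))
        for name, amount in requests.items()
    }
```

For example, a request of `{"cpu": "250m", "memory": "512Mi"}` against `{"cpu": "200m", "memory": "1Gi"}` of free capacity fits for memory but not for CPU.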

5. Security or permission restrictions

Pod Security admission (or the older, now-removed PodSecurityPolicy), SELinux, and AppArmor can all block container startup. In these cases, the Pod is rejected or the runtime is denied the operations it needs to create the container.

Quick check:

kubectl describe pod <pod-name>

If you see events like permission denied or operation not permitted, the issue is tied to security contexts.
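Each quick check above comes down to spotting a characteristic string in the Events or logs. The hypothetical helper below sketches that triage as an ordered list of regular expressions built from the messages quoted in this guide, plus `no command specified` and the scheduler's `Insufficient cpu/memory` wording. Exact messages differ between container runtimes and versions, so treat the patterns as a starting point, not a complete catalog.

```python
import re

# Hypothetical triage table: substrings quoted in this guide mapped to a likely cause.
# Real messages vary by container runtime and version; extend as needed.
_PATTERNS = [
    (re.compile(r"manifest for .* not found|manifest unknown", re.I), "wrong image name or tag"),
    (re.compile(r"unauthorized|authentication required", re.I), "missing or invalid image pull credentials"),
    (re.compile(r"executable file not found|exec: .*not found|no command specified", re.I), "invalid entrypoint or command"),
    (re.compile(r"insufficient (cpu|memory)", re.I), "insufficient node resources"),
    (re.compile(r"permission denied|operation not permitted", re.I), "security or permission restriction"),
]


def likely_cause(message: str) -> str:
    """Return the first matching cause for an event or status message."""
    for pattern, cause in _PATTERNS:
        if pattern.search(message):
            return cause
    return "unknown: inspect kubectl describe pod output manually"
```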

How to Fix CreateContainerError in Kubernetes

Fixing CreateContainerError requires validating common failure points and applying the right correction.

Here’s a step-by-step approach with both checks and fixes:

1. Fix invalid entrypoint or command

If command or args point to a non-existent binary, the container exits immediately.

Check:

kubectl logs <pod> -c <container> --previous

Look for errors like exec: not found.

Fix:

Update the Pod spec to use valid commands. Verify against the image’s Dockerfile or run docker run <image> locally to test the entrypoint.
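When checking against the Dockerfile, remember that Kubernetes merges the Pod's command and args with the image's ENTRYPOINT and CMD: command replaces ENTRYPOINT and discards the image's CMD, while args alone replaces only CMD. The hypothetical function below sketches that rule so you can see the argv the runtime will try to execute; the image values can be read from `docker image inspect <image>` (Config.Entrypoint and Config.Cmd). An empty result corresponds to the no command specified case.

```python
from typing import List, Optional


# Hypothetical helper: mirrors the documented command/args override rules.
def effective_argv(
    image_entrypoint: Optional[List[str]],
    image_cmd: Optional[List[str]],
    command: Optional[List[str]] = None,
    args: Optional[List[str]] = None,
) -> List[str]:
    if command:
        # command replaces ENTRYPOINT, and the image CMD is ignored entirely.
        return list(command) + list(args or [])
    entrypoint = list(image_entrypoint or [])
    if args:
        # args replaces CMD but keeps the image ENTRYPOINT.
        return entrypoint + list(args)
    # Neither is set: the image defaults are used as-is.
    return entrypoint + list(image_cmd or [])
```

The first element of the result must exist and be executable inside the image; otherwise expect errors like exec: not found.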

2. Correct faulty volume mounts (ConfigMap/Secret/hostPath)

Containers fail to start if they mount missing keys, wrong paths, or invalid volumes.

Check:

kubectl describe pod <pod>

Look for Events about missing keys or invalid mount paths.
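You can also catch many mount mistakes before applying the manifest. The sketch below, a hypothetical helper operating on plain dicts (for example a manifest loaded with a YAML parser and ConfigMap data from `kubectl get configmap -o json`), reports volumeMounts that reference undeclared volumes, ConfigMap volumes whose ConfigMap you did not supply, and items keys the ConfigMap does not contain. Pass in every ConfigMap the Pod references; it does not contact the API server and does not check Secret volumes.

```python
# Hypothetical helper: a local sketch, not a Kubernetes API.
def mount_problems(pod_spec: dict, configmaps: dict) -> list:
    """Report volume-mount problems in a Pod spec.

    pod_spec:   the Pod's .spec as a dict.
    configmaps: ConfigMap name -> its .data dict, for every ConfigMap the Pod uses.
    """
    problems = []
    volumes = {v["name"]: v for v in pod_spec.get("volumes", [])}
    # Every volumeMounts entry must name a volume declared in spec.volumes.
    for c in pod_spec.get("containers", []) + pod_spec.get("initContainers", []):
        for m in c.get("volumeMounts", []):
            if m["name"] not in volumes:
                problems.append(f"{c['name']}: mount '{m['name']}' has no matching volume")
    # ConfigMap volumes must point at an existing ConfigMap and existing keys.
    for v in volumes.values():
        cm = v.get("configMap")
        if cm is None:
            continue
        data = configmaps.get(cm["name"])
        if data is None:
            if not cm.get("optional", False):
                problems.append(f"volume '{v['name']}': ConfigMap '{cm['name']}' not found")
            continue
        for item in cm.get("items", []):
            if item["key"] not in data:
                problems.append(
                    f"volume '{v['name']}': key '{item['key']}' missing from ConfigMap '{cm['name']}'"
                )
    return problems
```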

Fix:

Ensure the referenced ConfigMap/Secret exists, contains the expected keys, and the mountPath is valid. Example correction:

volumeMounts:
  - name: app-config
    mountPath: /etc/config

3. Align security context and policies

If SELinux, AppArmor, or PodSecurity restricts startup, you’ll see operation not permitted.

Check:

kubectl describe pod <pod>

Look for permission denied messages.

Fix:

Adjust the Pod’s securityContext to comply. Example:

securityContext:
  runAsUser: 1000
  runAsGroup: 1000
  fsGroup: 2000

Or update the cluster’s Pod Security admission settings or OPA policies if broader access is required.
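If the namespace enforces the restricted Pod Security Standard, the example securityContext above is not enough on its own. As a sketch covering only part of that profile (runAsNonRoot, runAsUser, allowPrivilegeEscalation, dropped capabilities, and seccompProfile), the hypothetical checker below lists which containers would fail. The admission controller remains the source of truth, and a Pod rejected at admission is reported as an admission error rather than CreateContainerError.

```python
# Hypothetical checker: covers only a subset of the 'restricted' profile.
def restricted_violations(pod_spec: dict) -> list:
    pod_sc = pod_spec.get("securityContext", {})
    out = []
    for c in pod_spec.get("initContainers", []) + pod_spec.get("containers", []):
        sc = c.get("securityContext", {})
        name = c["name"]
        # Container-level settings take precedence over Pod-level ones.
        non_root = sc.get("runAsNonRoot", pod_sc.get("runAsNonRoot"))
        user = sc.get("runAsUser", pod_sc.get("runAsUser"))
        seccomp = sc.get("seccompProfile", pod_sc.get("seccompProfile", {})).get("type")
        if non_root is not True:
            out.append(f"{name}: runAsNonRoot must be true")
        if user == 0:
            out.append(f"{name}: runAsUser must not be 0")
        if sc.get("allowPrivilegeEscalation") is not False:
            out.append(f"{name}: allowPrivilegeEscalation must be false")
        if "ALL" not in sc.get("capabilities", {}).get("drop", []):
            out.append(f"{name}: capabilities.drop must include ALL")
        if seccomp not in ("RuntimeDefault", "Localhost"):
            out.append(f"{name}: seccompProfile.type must be RuntimeDefault or Localhost")
    return out
```

Run against the example securityContext above, it reports four gaps for each container, starting with the missing runAsNonRoot: true.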

4. Adjust resource requests and limits

Requesting more CPU/memory than available prevents container creation.

Check:

kubectl describe node <node>

Compare allocatable resources with the Pod spec.

Fix:

Lower the Pod’s requests/limits in the YAML or scale cluster nodes. Example:

resources:
  requests:
    cpu: "250m"
    memory: "512Mi"
  limits:
    cpu: "500m"
    memory: "1Gi"

5. Validate image reference and registry access

Image problems are most often reported as ErrImagePull or ImagePullBackOff, but a bad image can also end in CreateContainerError, so rule it out early.

Check:

kubectl describe pod <pod>

If Events show manifest not found or unauthorized, it’s an image issue.

Fix:

Correct the tag, or create a pull secret:

kubectl create secret docker-registry regcred \
  --docker-server=<registry> \
  --docker-username=<user> \
  --docker-password=<pass> \
  --docker-email=<email>

Then reference it in the Pod spec under imagePullSecrets (for example, name: regcred).
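`kubectl create secret docker-registry` stores the credentials as a `.dockerconfigjson` key in a Secret of type `kubernetes.io/dockerconfigjson`. To check what an existing pull secret actually covers, the sketch below (hypothetical helper names) builds the same JSON shape and lists the registry hosts found in a Secret fetched with `kubectl get secret <name> -o json`. A host that does not match the registry in the image reference is a common cause of unauthorized pulls. Use placeholder credentials when experimenting.

```python
import base64
import json


# Hypothetical helper: builds the payload shape an image pull secret carries.
def dockerconfigjson(server: str, username: str, password: str, email: str = "") -> str:
    entry = {
        "username": username,
        "password": password,
        # 'auth' is the base64 encoding of "username:password".
        "auth": base64.b64encode(f"{username}:{password}".encode()).decode(),
    }
    if email:
        entry["email"] = email
    return json.dumps({"auths": {server: entry}})


# Hypothetical helper: registry hosts in a Secret from `kubectl get secret -o json`.
def registries_in_secret(secret: dict) -> list:
    raw = base64.b64decode(secret["data"][".dockerconfigjson"])
    return sorted(json.loads(raw)["auths"])
```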

Monitoring CreateContainerError in Kubernetes with CubeAPM

Unlike kubectl describe, which only shows you a snapshot of one Pod, CubeAPM continuously collects Kubernetes events, container logs, and resource metrics using the OpenTelemetry Collector. The Collector is deployed both as a DaemonSet (to capture node- and pod-level data) and as a Deployment (to collect cluster-wide events and metrics).

This means every failure reason — including CreateContainerError — is ingested, enriched with metadata like pod, namespace, and container ID, and made available in CubeAPM dashboards.

- Events show what happened (a Pod entered CreateContainerError).

- Logs show why it happened (invalid command, permission denied, missing mount).

- Metrics show the context (CPU/memory pressure or node exhaustion).

- Metadata ties everything back to the right Pod, namespace, and workload.

This turns CreateContainerError from a one-off kubectl message into a fully observable signal you can query, visualize, and alert on across your cluster.

1. Install the OpenTelemetry Collector (Helm)

Deploy the Collector in two modes — as a DaemonSet and as a Deployment — for complete Kubernetes monitoring.

helm repo add open-telemetry https://open-telemetry.github.io/opentelemetry-helm-charts

helm repo update open-telemetry

helm install otel-collector-daemonset open-telemetry/opentelemetry-collector -f otel-collector-daemonset.yaml

helm install otel-collector-deployment open-telemetry/opentelemetry-collector -f otel-collector-deployment.yaml

This sets up pipelines for metrics, logs, and events.

2. Capture pod status and container metrics (DaemonSet)

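The DaemonSet configuration enables the kubeletstats and hostmetrics receivers, which collect node, pod, and container resource metrics (CPU, memory, filesystem, network). These metrics do not carry the waiting reason itself; that comes from the Kubernetes events collected by the Deployment, so together they give you both the failure and its resource context.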

presets:
  kubernetesAttributes: { enabled: true }
  hostMetrics: { enabled: true }
  kubeletMetrics: { enabled: true }
  logsCollection:
    enabled: true
    storeCheckpoints: true
config:
  receivers:
    kubeletstats:
      collection_interval: 60s
      metric_groups: [container, node, pod, volume]
    hostmetrics:
      collection_interval: 60s
      scrapers: { cpu: {}, filesystem: {}, memory: {}, network: {} }

With this, CubeAPM records the resource state of nodes, Pods, and containers, so you can see what the node looked like when a Pod failed.

3. Stream Kubernetes events (Deployment)

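The Deployment configuration enables the kubernetesEvents preset, which watches Kubernetes Event objects through the k8sobjects receiver and streams Pod lifecycle events into CubeAPM. Note that the kubelet usually records a container creation failure as a Warning event with reason Failed and the runtime’s error in the message; CreateContainerError itself is the waiting reason in the Pod’s container status.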

presets:
  kubernetesEvents: { enabled: true }
  clusterMetrics: { enabled: true }
config:
  receivers:
    k8s_cluster:
      collection_interval: 60s
  service:
    pipelines:
      logs:
        receivers: [k8sobjects]
        exporters: [otlphttp/k8s-events]

These events let you search, filter, and alert directly on CreateContainerError occurrences.
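The kubelet usually reports a container creation failure as a Warning event on the Pod with reason `Failed` and the runtime's error in the message. A minimal sketch, assuming you export events with `kubectl get events -A -o json`, that counts such events per Pod. The function is hypothetical, and `Failed` is also used for other failures such as image pulls, so read the message before concluding.

```python
import json
from collections import Counter


# Hypothetical helper: counts Warning events with a given reason per (namespace, pod).
def pod_warning_counts(events_json: str, reason: str = "Failed") -> Counter:
    counts = Counter()
    for ev in json.loads(events_json).get("items", []):
        obj = ev.get("involvedObject", {})
        if ev.get("type") == "Warning" and ev.get("reason") == reason and obj.get("kind") == "Pod":
            # 'count' aggregates repeats of the same event; default to 1 when absent.
            counts[(obj.get("namespace", ""), obj.get("name", ""))] += ev.get("count") or 1
    return counts
```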

4. Ingest container startup logs

By enabling logsCollection in the DaemonSet, the Collector tails the container log files on each node and streams them to CubeAPM with metadata like pod name, namespace, and container ID. If the container started at least once, you’ll see the exact startup failure (exec: not found, permission denied) alongside the event; if it was never created, the runtime’s error message is carried by the Kubernetes event instead.

presets:
  logsCollection:
    enabled: true
    storeCheckpoints: true
config:
  exporters:
    otlphttp/logs:
      logs_endpoint: http://<cubeapm_endpoint>:3130/api/logs/insert/opentelemetry/v1/logs

Logs are searchable in CubeAPM’s Explorer using labels such as { kubernetes_reason="CreateContainerError" }.

5. Enrich with metadata for correlation

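It is advisable to enable the kubernetesAttributes preset, which adds the k8sattributes processor and attaches metadata such as k8s.namespace.name, k8s.pod.name, and k8s.deployment.name. For metrics, the kubeletstats receiver can also attach container.id: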

config:
  receivers:
    kubeletstats:
      extra_metadata_labels:
        - container.id

With this metadata attached, every CreateContainerError event and related metric is tied to the right workload, making it easier to diagnose whether the issue is isolated or systemic.
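Once namespace and workload attributes are attached, telling an isolated failure from a systemic one is a grouping problem. The sketch below assumes failure records exported as dicts of resource attributes (the export format is an assumption, not a documented CubeAPM API) and labels a workload systemic when at least two of its Pods are affected; the function is hypothetical and the threshold is arbitrary.

```python
from collections import defaultdict


# Hypothetical helper: groups failure records by (namespace, deployment).
def classify_failures(records: list, systemic_threshold: int = 2) -> dict:
    pods_by_workload = defaultdict(set)
    for r in records:
        workload = (r.get("k8s.namespace.name", "?"), r.get("k8s.deployment.name", "?"))
        # Use a set so repeated records for the same Pod count once.
        pods_by_workload[workload].add(r.get("k8s.pod.name", "?"))
    return {
        workload: "systemic" if len(pods) >= systemic_threshold else "isolated"
        for workload, pods in pods_by_workload.items()
    }
```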

6. Build dashboards and alerts

Finally, CubeAPM dashboards can be configured to visualize waiting reasons over time. CubeAPM’s documentation shows how to query metrics and build alerts; for CreateContainerError, you can base rules on:

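kube_pod_container_status_waiting_reason{reason="CreateContainerError"}

This series comes from kube-state-metrics rather than from the Collector presets above, so make sure kube-state-metrics is deployed and its metrics are scraped before relying on it. It lets you create panels that show error trends and alerts that notify you when Pods repeatedly fail to start.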
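To check the expression outside a dashboard, you can send it to any Prometheus-compatible HTTP API at `/api/v1/query`. Whether and where your CubeAPM deployment exposes such an endpoint is not covered here, so the base URL is a placeholder assumption. The sketch, with hypothetical helper names, builds the instant-query URL and turns the vector result into (namespace, pod, container) tuples.

```python
import json
import urllib.parse
import urllib.request

QUERY = 'kube_pod_container_status_waiting_reason{reason="CreateContainerError"} > 0'


# Hypothetical helper: instant-query URL for a Prometheus-compatible API.
def query_url(base_url: str, promql: str) -> str:
    return f"{base_url.rstrip('/')}/api/v1/query?" + urllib.parse.urlencode({"query": promql})


# Hypothetical helper: extracts (namespace, pod, container) from a vector result.
def failing_containers(response: dict) -> list:
    if response.get("status") != "success":
        raise RuntimeError(f"query failed: {response.get('error')}")
    return [
        (s["metric"].get("namespace"), s["metric"].get("pod"), s["metric"].get("container"))
        for s in response["data"]["result"]
    ]


def fetch(base_url: str) -> list:
    # base_url is a placeholder, e.g. "http://<prometheus-compatible-endpoint>".
    with urllib.request.urlopen(query_url(base_url, QUERY), timeout=10) as resp:
        return failing_containers(json.load(resp))
```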

Example Alert Rules

1. Alert when any Pod enters CreateContainerError

This alert is designed to catch single-Pod startup failures as soon as they occur. In many cases, a CreateContainerError starts with just one Pod — perhaps due to a bad command or a missing mount. Detecting it early means developers can fix the manifest before more Pods are rolled out with the same problem.

- alert: PodCreateContainerError
  expr: kube_pod_container_status_waiting_reason{reason="CreateContainerError"} > 0
  for: 2m
  labels:
    severity: warning
  annotations:
    summary: "CreateContainerError on {{ $labels.namespace }}/{{ $labels.pod }}"
    description: "Container {{ $labels.container }} in pod {{ $labels.pod }} failed to start with CreateContainerError."

2. Alert on a burst of errors in the same namespace

When multiple Pods in the same namespace fail to start with CreateContainerError, it usually points to a systemic issue — for example, a bad deployment spec, a broken image reference, or a missing secret. This alert helps you spot those widespread issues before they affect production environments.

- alert: NamespaceCreateContainerErrors
  expr: sum by (namespace) (kube_pod_container_status_waiting_reason{reason="CreateContainerError"}) > 3
  for: 5m
  labels:
    severity: critical
  annotations:
    summary: "Multiple CreateContainerErrors in {{ $labels.namespace }}"
    description: "More than 3 containers are failing to start in namespace {{ $labels.namespace }}."

3. Alert when a workload keeps failing to start

Sometimes a single Deployment or StatefulSet will continuously fail because all replicas are stuck in CreateContainerError. This alert focuses on persistent errors, not short spikes, to highlight workloads that need developer intervention. It’s especially useful in CI/CD pipelines where failed workloads block new releases.

- alert: WorkloadCreateContainerError
  expr: sum by (namespace, pod, container) (kube_pod_container_status_waiting_reason{reason="CreateContainerError"}) > 0
  for: 10m
  labels:
    severity: critical
  annotations:
    summary: "Persistent CreateContainerError in {{ $labels.namespace }}"
    description: "Container {{ $labels.container }} in pod {{ $labels.pod }} has been in CreateContainerError for over 10 minutes."

4. Alert when a node has many CreateContainerErrors

If many Pods on the same node fail to start, the problem might not be the workload but the node itself — perhaps a runtime misconfiguration, permission issue, or resource bottleneck. This alert surfaces node-local issues quickly so you can cordon or replace the node before the problem spreads.

- alert: NodeCreateContainerErrorSpike
  # The waiting-reason series has no node label, so join it with kube_pod_info to get one.
  expr: |
    sum by (node) (
      kube_pod_container_status_waiting_reason{reason="CreateContainerError"}
      * on (namespace, pod) group_left (node) kube_pod_info
    ) > 5
  for: 5m
  labels:
    severity: warning
  annotations:
    summary: "CreateContainerError spike on node {{ $labels.node }}"
    description: "More than 5 containers on node {{ $labels.node }} are failing to start."

5. Alert when errors coincide with low available memory

Not every CreateContainerError comes from misconfigurations — sometimes they happen because the cluster is simply out of resources. This rule combines container error checks with cluster memory availability, helping you confirm resource starvation as the likely cause. It provides better context so you don’t waste time chasing false leads.

- alert: CreateContainerErrorWithLowMemory
  # node_memory_* series come from node_exporter; the waiting-reason series from kube-state-metrics.
  expr: |
    (sum by (namespace) (kube_pod_container_status_waiting_reason{reason="CreateContainerError"}) > 0)
    and on() (avg(node_memory_MemAvailable_bytes) / avg(node_memory_MemTotal_bytes) < 0.1)
  for: 5m
  labels:
    severity: warning
  annotations:
    summary: "CreateContainerError with cluster low memory"
    description: "Pods are failing to start while cluster available memory is below 10%."

Conclusion

CreateContainerError signals that Kubernetes was unable to start a container, often blocking deployments and workloads. While kubectl can help with ad-hoc checks, the CubeAPM docs make it clear that scalable monitoring requires continuous ingestion of Kubernetes events, logs, and resource metrics through the OpenTelemetry Collector.

By enabling kubeletstats, hostmetrics, logsCollection, and kubernetesEvents as recommended in the documentation, CubeAPM captures not just the error reason but also the surrounding context. With dashboards and Prometheus-style alerts, teams can track when pods fail, see why they failed, and respond before issues cascade across the cluster.

In short: CubeAPM turns CreateContainerError from a transient event into a fully observable signal, helping reduce downtime and accelerate troubleshooting.

FAQs

1. What is CreateContainerError in Kubernetes?

CreateContainerError occurs when Kubernetes schedules a Pod but the container runtime fails to start the container. Common causes include invalid entrypoints, missing volumes, insufficient resources, or security restrictions.

2. How do I debug CreateContainerError?

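The first step is to check the Pod’s events and container state, then the logs of the previous run if the container started at all:

kubectl describe pod <pod-name>
kubectl logs <pod-name> --previous

These commands reveal whether the issue is due to a wrong command, missing mount, or policy block.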

3. Can resource limits cause CreateContainerError?

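Indirectly. A Pod whose requests exceed what any node can allocate is normally left Pending by the scheduler with a FailedScheduling event rather than reaching container creation, but resource pressure on a node can still contribute to startup failures. Checking node resources with kubectl describe node <node-name> helps confirm either case.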

4. How can I prevent CreateContainerError in production?

Adopt best practices like using tested images, validating commands/args, setting realistic resource requests, and ensuring correct security contexts. For production environments, monitoring tools like CubeAPM help by automatically collecting events, logs, and metrics tied to Pod failures.

5. What’s the difference between CreateContainerError and CrashLoopBackOff?

CreateContainerError means the container never started successfully. CrashLoopBackOff happens when a container starts but repeatedly crashes after initialization. CubeAPM captures both conditions in Kubernetes events, making it easier to tell them apart and act quickly.
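The difference is visible in the container status itself. As a rough sketch, the hypothetical helper below classifies one entry of `status.containerStatuses`: a CreateContainerError container typically has no earlier run (restartCount 0, no lastState.terminated), while CrashLoopBackOff is reported for a container that has already started and exited.

```python
# Hypothetical helper: rough classification of one containerStatuses entry.
def startup_state(container_status: dict) -> str:
    state = container_status.get("state", {})
    waiting = state.get("waiting") or {}
    reason = waiting.get("reason", "")
    # A terminated lastState or a non-zero restartCount means the container ran before.
    ran_before = (
        "terminated" in container_status.get("lastState", {})
        or container_status.get("restartCount", 0) > 0
    )
    if reason == "CreateContainerError":
        return "never created" if not ran_before else "re-creation failed after earlier runs"
    if reason == "CrashLoopBackOff":
        return "starts, then crashes repeatedly"
    if "running" in state:
        return "running"
    return reason or "other"
```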