Nginx powers 33.3% of all websites whose web server is known, making it one of the most widely deployed web and reverse-proxy servers in production. But when upstream services fail, users see 502 Bad Gateway or 504 Gateway Timeout errors that disrupt transactions and hurt customer trust.

While the two errors look similar, their causes differ: a 502 occurs when Nginx gets an invalid response from an upstream server, while a 504 happens when the upstream fails to respond in time. Either way, the business impact is significant: Google has reported that 53% of mobile site visits are abandoned when a page takes longer than 3 seconds to load, and recurring gateway errors add to bounce rates and lost revenue.

Diagnosing these issues isn’t simple. A 502 might stem from misconfigured upstream health checks, while a 504 can result from slow database queries or container resource exhaustion. Traditional tools often surface error counts but lack the context to tie failures back to latency, infrastructure bottlenecks, or app performance.

This article explains what Nginx 502 and 504 errors mean, why monitoring them is critical, and how CubeAPM provides real-time correlation, metrics, and alerts to help catch them before they impact production.

What Are Nginx 502 and 504 Errors?

A 502 Bad Gateway error occurs when Nginx, acting as a reverse proxy, receives an invalid response from the upstream server it is trying to communicate with. This typically happens when the backend service (such as PHP-FPM, Node.js, Python, or a microservice) crashes, restarts unexpectedly, or returns malformed responses that Nginx cannot interpret.

A 504 Gateway Timeout error means the upstream server did not respond within the configured timeout window (for example, proxy_read_timeout, or proxy_connect_timeout if the connection itself stalls). Most often Nginx has already connected to the upstream and is waiting on a slow response, which points to performance bottlenecks such as long-running database queries, slow application logic, or overloaded infrastructure unable to keep up with incoming requests.

While these errors look similar to end users, they represent different failure modes in the request lifecycle. And in today’s digital environment, they come with a heavy business cost:

- Lost transactions: checkout or payment flows fail mid-request, leading directly to abandoned carts.

- Damaged brand trust: recurring 502/504s frustrate customers who may switch to competitors.

- Revenue leakage: according to Forrester, companies lose 10%–30% of revenue due to poor web performance, with gateway errors being among the most visible.

- Operational firefighting: engineers spend hours triaging logs instead of focusing on feature delivery.

Example: 502s During a Banking Login Surge

Imagine a banking application where thousands of users try to log in simultaneously after salary credits. If the authentication microservice crashes under load, Nginx starts serving 502 Bad Gateway errors to end-users. Customers perceive the bank as “down,” triggering support calls and damaging trust—even though the root cause was just an overwhelmed backend service.

Difference Between Nginx 502 and 504 Errors

Although a 502 Bad Gateway and a 504 Gateway Timeout may appear similar to end users, they indicate distinct issues in the request lifecycle. Recognizing the difference is essential for both faster troubleshooting and applying the right fix.

- 502 Bad Gateway

  - What it means: Nginx attempted to forward a request but received an invalid or no response from the upstream server.

  - Typical scenarios:

    - FastCGI or PHP-FPM workers crash before sending back a reply.

    - The upstream returns a malformed response that Nginx cannot parse.

    - An application container restarts mid-request, breaking the connection.

- 504 Gateway Timeout

  - What it means: The upstream server was reachable, but it failed to respond within the configured timeout period.

  - Typical scenarios:

    - Long-running database queries delaying responses

    - Backend APIs overloaded with traffic and responding too slowly

    - Resource starvation in a Kubernetes pod (CPU or memory) slowing application logic

Put simply, a 502 error signals a bad or invalid response, while a 504 indicates the response did not arrive in time. Both disrupt user experience, but they demand different monitoring and remediation strategies.
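As a rough illustration of that split, the sketch below maps a few messages that Nginx commonly writes to its error log onto the status the client most likely saw. The pattern list is an assumption for illustration and is far from exhaustive; the function name classify_error_line is hypothetical.

```python
import re
from typing import Optional

# Message fragments commonly seen in the Nginx error log, paired with the
# gateway status they usually produce. Illustrative only, not exhaustive.
PATTERNS = [
    (re.compile(r"upstream timed out"), 504),
    (re.compile(r"connect\(\) failed \(111: Connection refused\)"), 502),
    (re.compile(r"upstream prematurely closed connection"), 502),
    (re.compile(r"upstream sent too big header"), 502),
]


def classify_error_line(line: str) -> Optional[int]:
    """Return the likely gateway status for an error-log line, or None."""
    for pattern, status in PATTERNS:
        if pattern.search(line):
            return status
    return None


if __name__ == "__main__":
    sample = (
        "2024/01/01 12:00:00 [error] 123#123: *45 upstream timed out "
        "(110: Connection timed out) while reading response header from upstream"
    )
    print(classify_error_line(sample))
```

Counting lines per class over a short window gives a quick first hint of whether an incident looks like a crash (502) or a slowdown (504).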

Why Monitoring Nginx 502 / 504 Errors Is Critical

Lost Conversions on Critical Flows

A sudden wave of Nginx 502/504 errors on login, checkout, or API endpoints translates directly into lost revenue and abandoned sessions. In e-commerce, users expect reliability above all—if payment or checkout fails even momentarily, they often leave. One study finds that around 15% of cart abandonments are due to site errors or crashes, which include server-side gateway failures.

Soaring Outage Costs with Delayed Detection

When gateway errors linger unnoticed, every extra minute is costly. Large enterprises often report downtime costs in the thousands per minute; some recent analyses suggest $9,000 per minute for major outages. But more importantly, the difference between catching a blip and letting it escalate can multiply the total impact by 10× or more, as teams scramble to trace root causes.

Avoid Misdiagnosis by Distinguishing 502 vs. 504

One of the biggest traps is treating all 5xx errors alike. A 502 usually means the upstream returned something invalid (crashed process, malformed payload), while a 504 means the upstream was reached but did not respond in time. Misinterpreting the signal can lead to wasted effort chasing infrastructure when the problem lies in a function, container, or slow query.

Latency Trends Precede Error Spikes

In many cases, 504 floods are preceded by creeping upstream latencies or socket timeouts. If your monitoring only watches error counts, you miss the gradual slope before the crash. Good alerting should pick up p95/p99 latency jumps, rising connect and read times, or growing worker queues before they turn into a wall of errors. In Kubernetes setups, misconfigured readiness probes or CPU throttling often show up as latency blips first.

Visibility at the Proxy Layer Is Key

Because Nginx sits between users and backend systems, it can act as the “observability lens” if you instrument it properly. You want metadata like $upstream_addr, $upstream_status, and timing breakdowns ($upstream_connect_time, $upstream_header_time, $upstream_response_time) in each log line or trace. Without this context, alerts stay generic (“502 spike at Nginx”) instead of pointing to something actionable (“502s from upstream-X at 10.1.2.5, 2s response, crash likely downstream”).
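To make this concrete, here is a minimal sketch that turns one enriched access-log line into a triage-friendly summary. It assumes a hypothetical key=value log format (the keys status, upstream_addr, upstream_status, connect, header, and response are chosen for illustration) and a single upstream attempt per request; when Nginx retries across several upstreams, these fields hold comma-separated lists that this sketch does not handle.

```python
def parse_kv_line(line: str) -> dict:
    """Parse a 'key=value key=value' access-log line into a dict."""
    fields = {}
    for token in line.split():
        key, sep, value = token.partition("=")
        if sep:
            fields[key] = value
    return fields


def to_seconds(value: str):
    """Convert a timing field to float; Nginx logs '-' when no value exists."""
    return None if value in ("-", "") else float(value)


def describe(line: str) -> str:
    """Summarize a log line as 'status from upstream addr (timing)'."""
    fields = parse_kv_line(line)
    response_time = to_seconds(fields.get("response", "-"))
    timing = "no timing" if response_time is None else f"{response_time:.3f}s response"
    return f"{fields.get('status')} from upstream {fields.get('upstream_addr')} ({timing})"


if __name__ == "__main__":
    # Hypothetical log line in the assumed key=value format.
    sample = (
        "status=502 upstream_addr=10.1.2.5:8080 upstream_status=502 "
        "connect=0.001 header=- response=2.004"
    )
    print(describe(sample))
```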

Reputation & SEO Risk

If a site regularly responds with 5xx errors, web crawlers may start penalizing it. Persistent 502s or 504s can reduce crawl rates or lead to dropped indexation on critical pages, eroding organic traffic over time. While comprehensive studies on this specific behavior are rare, SEO best practices repeatedly caution against sustained 5xx errors.

Common Causes of Nginx 502 / 504 Errors

Nginx 502 and 504 errors usually aren’t caused by the proxy itself but by conditions in the upstream services or infrastructure. Understanding the most common triggers helps narrow down root causes faster:

Application Crashes or Overload

When upstream application servers like PHP-FPM, Node.js, or Python services crash or get overloaded, they stop sending valid responses. Nginx, waiting as a proxy, returns a 502 Bad Gateway because it has nothing valid to pass along. This is especially common when worker limits are too low to handle surges in concurrent requests.

Backend Latency or Database Query Slowness

If the backend application responds too slowly—often due to heavy database queries, long-running transactions, or blocking I/O—Nginx will hit its timeout and return a 504 Gateway Timeout. Even optimized apps can experience latency spikes during traffic bursts, exposing bottlenecks in query execution plans or poorly indexed databases.

Connection Pool Exhaustion

Each Nginx worker relies on connections to upstream servers. When keepalive or FastCGI connection pools run out, new requests cannot be serviced. Nginx either drops connections or times out waiting, leading to 502s or 504s. This issue becomes visible in high-throughput environments without tuned connection limits.

Upstream Misconfigurations

Incorrect proxy settings, DNS resolution failures, or timeout mismatches can cause requests to fail before they even reach the upstream app. For example, if proxy_read_timeout is set too low while a backend process legitimately takes longer, Nginx will prematurely return a 504. Similarly, when upstream hostnames are resolved at request time (a variable in proxy_pass together with a resolver directive), a failed DNS lookup results in a 502.

Resource Starvation in Containers

In Kubernetes or Dockerized environments, CPU throttling or memory pressure can starve application pods. When a container is OOM-killed or throttled, the upstream becomes unresponsive, causing Nginx to surface 502s or 504s. These issues are particularly prevalent during traffic spikes or when limits are misconfigured.

Network Routing or Load Balancer Issues

Sometimes the problem lies outside the app stack. Packet drops, routing instability, or overloaded load balancers can prevent requests from reaching upstream servers on time. In these cases, Nginx dutifully returns 504 errors even though the backend might be healthy.

Example: High-Traffic E-commerce Site on Black Friday

During Black Friday, an e-commerce platform experienced a flood of 502 errors across checkout pages. Investigation revealed that PHP-FPM workers maxed out under peak load, leaving Nginx unable to obtain valid responses. By scaling worker processes, optimizing keepalive connections, and auto-scaling upstream pods in Kubernetes, the team reduced 502s by more than 70% during the next campaign, ensuring smoother customer checkouts.

Key Metrics to Track for Nginx 502/504 Monitoring

Error Metrics

These show you when Nginx is surfacing gateway failures and how severe they are.

- 502/504 error rate: Track the volume of bad gateway and timeout errors per minute. Spikes indicate upstream servers are unstable or overwhelmed. Threshold: more than 20 errors/minute should trigger investigation.

- Error percentage of total requests: Instead of raw counts, watch what percentage of traffic results in 502/504s. Even a 2–3% error rate on checkout or login can cripple conversions. Threshold: sustained error rates >1% on key endpoints.
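Both error metrics can be derived from the same parsed log records. The sketch below assumes records have already been reduced to (minute, status) pairs; the function names and default thresholds are illustrative and simply mirror the numbers suggested above.

```python
from collections import Counter

GATEWAY_ERRORS = {502, 504}


def gateway_error_stats(records):
    """records: iterable of (minute_label, status) tuples.

    Returns {minute: (gateway_error_count, error_percentage)}.
    """
    totals = Counter()
    errors = Counter()
    for minute, status in records:
        totals[minute] += 1
        if status in GATEWAY_ERRORS:
            errors[minute] += 1
    return {
        minute: (errors[minute], 100.0 * errors[minute] / totals[minute])
        for minute in totals
    }


def minutes_over_threshold(stats, max_count=20, max_percent=1.0):
    """Return the minutes that breach either the count or the percentage limit."""
    return sorted(
        minute
        for minute, (count, percent) in stats.items()
        if count > max_count or percent > max_percent
    )


if __name__ == "__main__":
    records = [("12:00", 200)] * 98 + [("12:00", 502)] * 2 + [("12:01", 200)] * 100
    stats = gateway_error_stats(records)
    print(stats)
    print(minutes_over_threshold(stats))
```

Tracking the percentage alongside the raw count keeps low-traffic endpoints from hiding a high failure ratio behind a small absolute number.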

Latency Metrics

Latency signals often spike before full-blown 504 floods, giving you a chance to react.

- Upstream response time ($upstream_response_time): Measures how long upstream servers take to respond. Rising values signal bottlenecks in databases or APIs. Threshold: p95 above 2 seconds for more than 3 minutes.

- Nginx request processing time ($request_time): Captures end-to-end duration inside Nginx, from the first bytes read from the client until the response has been sent, including time spent waiting for upstreams. Growth here hints at either proxy saturation or upstream slowness. Threshold: average >1.5 seconds across workloads.
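A sustained-latency check like the one described for upstream response time can be sketched as follows. It assumes response times are already grouped into one-minute buckets, uses a nearest-rank p95, and treats three consecutive breaching buckets as "sustained"; all names and defaults here are illustrative.

```python
def p95(values):
    """Nearest-rank 95th percentile of a non-empty list of numbers."""
    ordered = sorted(values)
    # Integer ceiling of 0.95 * n, avoiding floating-point rounding.
    rank = (95 * len(ordered) + 99) // 100
    return ordered[rank - 1]


def sustained_breach(per_minute_samples, threshold_s=2.0, minutes=3):
    """per_minute_samples: list of lists of response times (seconds),
    one inner list per minute, oldest first.

    Returns True if p95 exceeds threshold_s for `minutes` consecutive minutes.
    """
    streak = 0
    for samples in per_minute_samples:
        if samples and p95(samples) > threshold_s:
            streak += 1
            if streak >= minutes:
                return True
        else:
            streak = 0
    return False


if __name__ == "__main__":
    window = [[0.5] * 10, [3.0] * 10, [3.0] * 10, [3.0] * 10]
    print(sustained_breach(window))
```

Requiring consecutive breaches filters out single slow minutes, at the cost of reacting a few minutes later.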

Upstream Health Metrics

These metrics focus on the stability and availability of backend servers connected to Nginx.

- Upstream status ($upstream_status): Shows if responses from upstreams were valid (200) or failed (502/504). A concentration of failures from one server highlights a localized issue. Threshold: more than 10% failed responses from a single upstream.

- Failed connections to upstreams: Indicates if sockets to backend servers are being refused or reset. High values point to pool exhaustion or backend crashes. Threshold: more than 5% of connections failing within 5 minutes.
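To spot a single misbehaving backend, failures can be grouped by upstream address. This sketch assumes each record is an (upstream_addr, upstream_status) pair taken from parsed logs; the 10% default mirrors the threshold above, and the function name is hypothetical.

```python
from collections import defaultdict


def failing_upstreams(records, max_failure_share=0.10):
    """records: iterable of (upstream_addr, upstream_status) tuples.

    Returns {upstream_addr: failure_share} for upstreams whose share of
    502/504 responses exceeds max_failure_share.
    """
    totals = defaultdict(int)
    failures = defaultdict(int)
    for addr, status in records:
        totals[addr] += 1
        if status in (502, 504):
            failures[addr] += 1
    return {
        addr: failures[addr] / totals[addr]
        for addr in totals
        if failures[addr] / totals[addr] > max_failure_share
    }


if __name__ == "__main__":
    records = (
        [("10.0.0.1:8080", 200)] * 8
        + [("10.0.0.1:8080", 502)] * 2
        + [("10.0.0.2:8080", 200)] * 10
    )
    print(failing_upstreams(records))
```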

Traffic and Load Metrics

Monitoring load alongside errors tells you whether failures are traffic-driven or configuration-related.

- Requests per second (RPS/QPS): Helps correlate traffic surges with spikes in gateway errors. A sudden jump in RPS with matching 502/504s indicates capacity issues. Threshold: 20%+ increase in RPS without corresponding scaling.

- Worker process utilization: Shows how busy Nginx workers are handling incoming requests. High utilization leads to queuing and timeouts. Threshold: sustained >80% worker utilization.

Infrastructure & Resource Metrics

Errors often trace back to infrastructure pressure, especially in containerized setups.

- CPU usage of upstream pods/VMs: Spikes in CPU can throttle upstream response times, leading to 504 errors. Sustained high CPU often means services need scaling. Threshold: >85% CPU utilization over 5 minutes.

- Memory consumption of upstreams: Memory exhaustion can cause OOM kills and trigger 502s when Nginx receives no valid response. Threshold: >90% memory usage or frequent pod restarts.

Monitoring Nginx 502 / 504 Errors Step by Step with CubeAPM

Step 1. Install CubeAPM in your environment

Deploy CubeAPM where you run Nginx—VM, Docker, or Kubernetes. For production in Kubernetes, you can install via Helm with values.yaml customization to scale ingestion and storage. This ensures logs, traces, and infra metrics from Nginx flow into a unified backend.

Install CubeAPM | Kubernetes Install Guide

Step 2. Enable Nginx log collection

Most 502/504 errors are first visible in Nginx logs. Make sure your log format includes $status, $upstream_addr, $upstream_status, and $upstream_response_time. Send these logs to CubeAPM for parsing and real-time queries, so you can filter specifically on 502/504 and correlate with upstream servers.

Logs Setup

Step 3. Monitor infrastructure signals around incidents

502/504s often stem from backend pods/VMs under stress. CubeAPM’s infra monitoring shows CPU, memory, restarts, and disk/network usage, so you can tie error spikes to resource bottlenecks in the upstream services behind Nginx.

Infrastructure Monitoring

Step 4. Instrument upstream services with OpenTelemetry

To know why Nginx times out or sees bad responses, instrument your apps and APIs with OpenTelemetry. CubeAPM then links Nginx requests to downstream spans—like DB queries or API calls—so you can spot the exact bottleneck that led to a 504 or crash that triggered a 502.

Instrumentation Overview | OpenTelemetry Guide

Step 5. Build a 502/504-focused dashboard

Create a dashboard overlaying:

- Error counts for 502/504

- $upstream_response_time p95/p99 latency

- Top upstream servers by error count

- Infra metrics (CPU, memory, restarts)

This helps quickly distinguish “bad/invalid responses” (502) from “delayed/no responses” (504).

Logs | Infra Monitoring

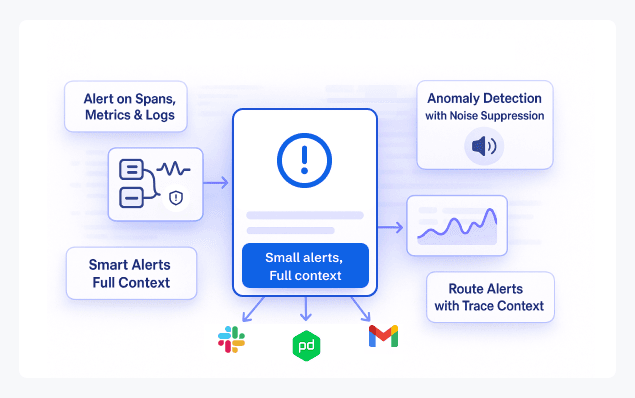

Step 6. Configure proactive alerting via email

Don’t wait for customers to report outages. Configure CubeAPM alerts to fire on:

- Spikes in 502 errors per minute

- 504 rates above a safe threshold

- Rising upstream latency (e.g., p95 > 2s)

Connect alerts to SMTP so your on-call team is notified instantly.

Alerting via Email

Step 7. Handle Kubernetes-specific causes

In Kubernetes, 502/504s often occur due to pod restarts, OOMKills, or readiness probe failures. Deploy CubeAPM via Helm and monitor pod health to catch resource starvation or scaling issues before they cascade into Nginx gateway failures.

Kubernetes Install Guide

Step 8. Enrich Nginx logs for faster triage

Add request IDs ($request_id) and timing breakdowns ($upstream_connect_time, $upstream_header_time, $upstream_response_time) to your Nginx logs. When these are ingested into CubeAPM alongside traces, you can jump from a single 504 log line to the slow DB query or failing API call in one click, which can cut MTTR considerably.
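The value of a shared request ID is easiest to see with a small join. The sketch below is a generic illustration of the idea, not a description of how CubeAPM implements it; the field names request_id, name, and duration_ms are hypothetical.

```python
def slowest_span_for(log_entry, spans):
    """Return the slowest span sharing the log entry's request ID, or None."""
    matching = [s for s in spans if s["request_id"] == log_entry["request_id"]]
    return max(matching, key=lambda s: s["duration_ms"], default=None)


if __name__ == "__main__":
    # Hypothetical data: one 504 log line and spans from an instrumented service.
    log_entry = {"request_id": "abc123", "status": 504}
    spans = [
        {"request_id": "abc123", "name": "GET /checkout", "duration_ms": 61000},
        {"request_id": "abc123", "name": "SELECT orders", "duration_ms": 60500},
        {"request_id": "zzz999", "name": "GET /health", "duration_ms": 3},
    ]
    print(slowest_span_for(log_entry, spans))
```

In practice the ID has to be forwarded to the upstream (for example as a request header) and recorded by the application, otherwise there is nothing to join on.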

Logs | OpenTelemetry Instrumentation

Real-World Example: Monitoring Nginx 502/504 in Production

Challenge

A high-traffic e-commerce site running on Kubernetes faced a surge of 502 Bad Gateway errors during a flash sale. Shoppers hit checkout pages only to see errors, leading to abandoned carts and angry support calls. The team suspected upstream overload but had no clear view of whether the issue was in Nginx, PHP-FPM workers, or the database.

Solution

The engineers ingested Nginx access and error logs into CubeAPM with fields like $status, $upstream_status, and $upstream_response_time. They also enabled infrastructure monitoring for worker pods and instrumented the checkout service with OpenTelemetry traces. Within minutes, CubeAPM dashboards correlated the 502 spikes with PHP-FPM worker crashes and rising memory usage in the application pods.

Fix

Armed with this insight, the team scaled PHP-FPM worker pools, increased Kubernetes pod limits to prevent OOM kills, and tuned Nginx keepalive settings to reduce connection churn. They also added CubeAPM alert rules for upstream response time >2s and 502 spikes >50/min, so future issues would trigger an instant notification to the on-call engineer.

Result

The next big campaign ran smoothly: 502 errors dropped by 65%, checkout conversion improved by 18%, and the support team reported a sharp decline in user complaints. By monitoring Nginx 502/504 errors with CubeAPM, the company moved from reactive firefighting to proactive reliability, ensuring revenue-critical paths stayed resilient under load.

Verification Checklist & Example Alert Rules for Nginx 502/504 Monitoring with CubeAPM

Verification Checklist

Before you roll out fixes for 502/504 spikes, it’s important to verify the core layers where these errors originate. This checklist ensures you cover logs, upstreams, infra, and configs systematically.

- Nginx error/access logs: Verify that logs capture $status, $upstream_addr, $upstream_status, and $upstream_response_time. Without these fields, triage becomes guesswork.

- Upstream server health: Check whether backend services (PHP-FPM, Node.js, APIs) are alive and reachable. Run health checks or curl to confirm response validity.

- Timeout configurations: Inspect values for proxy_read_timeout, fastcgi_read_timeout, and related directives. Too low, and legitimate requests get cut off; too high, and you hide latency issues.

- Connection pools: Ensure keepalive or FastCGI pools have enough capacity to handle traffic surges. Exhausted pools often manifest as 502s.

- Infrastructure resources: Look at CPU, memory, and pod restarts. OOM-killed containers or throttled CPUs are a leading cause of upstream timeouts.
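For the timeout item in this checklist, a quick audit script can list the values actually set in a configuration file. This is a minimal sketch, assuming simple single-unit values such as 30, 5s, 500ms, or 2m; it ignores include files, combined values like "1h 30m", and block scoping (the last occurrence of a directive simply wins).

```python
import re

# Matches proxy_/fastcgi_ connect, send, and read timeout directives.
TIMEOUT_DIRECTIVE = re.compile(
    r"^\s*((?:proxy|fastcgi)_(?:connect|send|read)_timeout)\s+(\d+)(ms|s|m|h)?\s*;",
    re.MULTILINE,
)
# A bare number means seconds; findall() yields "" for a missing unit.
UNIT_SECONDS = {"": 1, "s": 1, "ms": 0.001, "m": 60, "h": 3600}


def find_timeouts(config_text):
    """Return {directive: seconds} for timeout directives found in the text."""
    return {
        name: int(value) * UNIT_SECONDS[unit]
        for name, value, unit in TIMEOUT_DIRECTIVE.findall(config_text)
    }


if __name__ == "__main__":
    # Hypothetical configuration fragment.
    sample = """
    location /api/ {
        proxy_pass http://backend;
        proxy_connect_timeout 5s;
        proxy_read_timeout 30;
        # proxy_read_timeout 10s;
        fastcgi_read_timeout 2m;
    }
    """
    print(find_timeouts(sample))
```

Directives that do not appear fall back to the Nginx defaults (60 seconds for the proxy and FastCGI read timeouts), so an empty result does not mean no timeout applies.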

Example Alert Rules in CubeAPM

Alerting is where you move from detection to prevention. The following alert rules are tailored to 502/504 patterns and can be created in CubeAPM once logs and metrics are ingested.

1. Spike in 502 errors

    alert: Nginx502Spike
    expr: increase(nginx_http_requests_total{status="502"}[5m]) > 50
    for: 2m
    labels:
      severity: critical
    annotations:
      summary: "High rate of 502 Bad Gateway errors detected"
      description: "More than 50 502 errors in 5 minutes; upstream crash or misconfig likely."

2. Elevated 504 error rate

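    alert: Nginx504Rate
    # Ratio of 504s to all requests, so the threshold matches the "5% of traffic" description.
    expr: sum(rate(nginx_http_requests_total{status="504"}[1m])) / sum(rate(nginx_http_requests_total[1m])) > 0.05
    for: 1m
    labels:
      severity: warning
    annotations:
      summary: "504 Gateway Timeouts exceeding threshold"
      description: "504 error rate >5% of traffic; upstream response too slow."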

3. Slow upstream response times

    alert: UpstreamLatencyHigh
    expr: histogram_quantile(0.95, rate(nginx_upstream_response_ms_bucket[5m])) > 2000
    for: 2m
    labels:
      severity: critical
    annotations:
      summary: "Upstream latency exceeding 2s at p95"
      description: "504 risk detected; backend service latency rising above normal."

These alert rules ensure you don’t just detect errors after the fact but get proactive signals when upstreams are close to failing.

Conclusion

Nginx 502 and 504 errors aren’t just technical glitches; they’re red flags that critical upstream services are failing to respond properly or on time. Left unchecked, they disrupt logins, payments, and APIs that users depend on every day.

The challenge is that these errors look similar to end users but have very different root causes. Monitoring logs, upstream timings, infra pressure, and traces in CubeAPM ensures you can distinguish between a crash (502) and a timeout (504) in seconds, not hours.

By combining real-time error tracking, dashboards, and proactive alerts, CubeAPM gives you full control over Nginx reliability. Set up monitoring today and prevent 502/504 errors from costing you customers, revenue, and reputation.

Disclaimer: The information in this article reflects the latest details available at the time of publication and may change as technologies and products evolve.

FAQs

1. Can SSL/TLS misconfigurations cause Nginx 502 errors?

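Yes. If Nginx proxies to an HTTPS upstream and the TLS handshake fails, for example because of a protocol or cipher mismatch, or because proxy_ssl_verify is enabled and the upstream certificate is invalid, expired, or mismatched, the request ends in a 502 Bad Gateway since the proxy can’t establish a valid session. Upstream certificate verification is off by default, so certificate problems only surface this way once verification has been turned on.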

2. Do CDN or reverse proxy layers affect 504 errors?

Absolutely. If a CDN or external reverse proxy sits in front of Nginx, timeouts can happen at multiple layers. Monitoring both Nginx and the upstream edge service ensures you know if the delay occurred inside your infra or outside.

3. How can rate limiting in Nginx trigger 502/504 issues?

Aggressive rate limiting or connection throttling can reject legitimate requests during traffic spikes. Nginx answers those rejections with a 503 by default (configurable through limit_req_status and limit_conn_status) rather than a 502 or 504, but users often experience them as backend failures. Monitoring request rejections alongside 502/504s helps separate policy-driven denials from genuine upstream issues.

4. Can DNS resolution issues be a hidden cause of 502 errors?

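Yes. When upstream hostnames are resolved at request time (for example, a variable in proxy_pass together with a resolver directive) and the lookup fails or times out, Nginx returns a 502. Hostnames written statically in the configuration are resolved when Nginx starts or reloads, so a DNS failure there shows up as a failed start or reload instead. Adding DNS metrics and alerts to your monitoring stack helps catch this often-overlooked problem.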

5. How do keepalive misconfigurations influence Nginx gateway errors?

Improperly tuned keepalive settings can exhaust connection pools or keep stale connections open. This leads to intermittent 502s or 504s during bursts of traffic. Tracking active connection counts and tuning keepalive thresholds prevents these errors.