In Kubernetes, Pods usually terminate gracefully, but sometimes one gets stuck: it lingers in the Terminating state, holding on to resources and blocking workloads. With nearly 90% of organizations running Kubernetes in production, Pod lifecycle issues like this can waste capacity and disrupt critical services.

CubeAPM stands out as a solid solution for monitoring Kubernetes clusters. CubeAPM provides deep observability into Kubernetes workloads, automatically collecting metrics, events, and logs tied to Pod lifecycle states. With smart alerting and root-cause analysis, it helps teams detect and fix “stuck terminating” Pods before they cascade into bigger outages.

In this guide, we’ll explain what a Pod stuck terminating means, explore the common causes, walk through step-by-step fixes, and show how CubeAPM helps teams monitor and prevent this issue at scale.

What is Pod Stuck Terminating in Kubernetes?

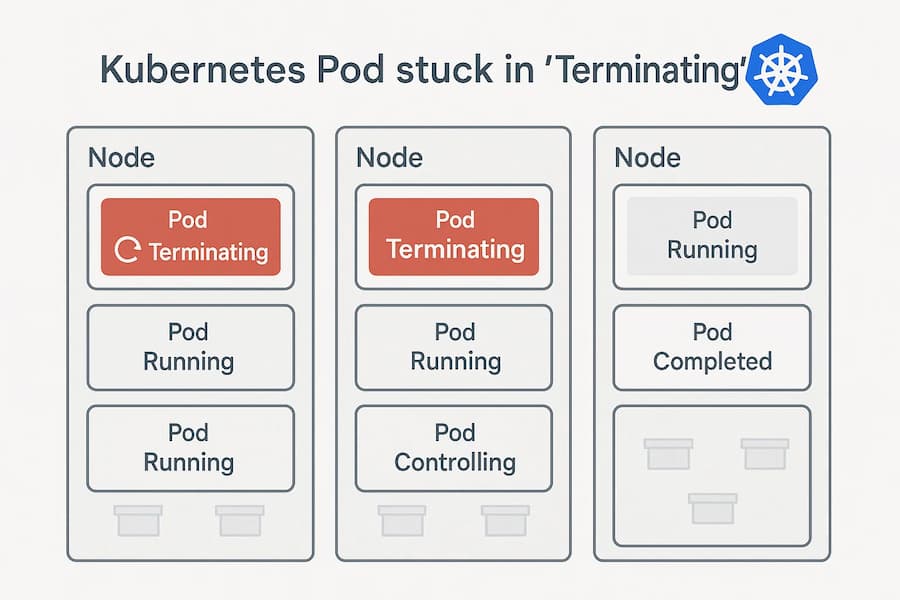

Pod Stuck Terminating is a condition where a Pod stays in the Terminating state far longer than expected, unable to shut down or be deleted from the cluster. Instead of exiting cleanly, the Pod lingers because resources, processes, or cleanup steps aren’t finishing as Kubernetes expects. This can leave workloads hanging, waste cluster capacity, and even block new deployments.

To understand this better, we need to look at two things: the normal Pod lifecycle during termination, and what it actually means when a Pod gets stuck in this state.

Pod Lifecycle Context

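A Pod enters the Terminating state when the API server accepts a delete request and sets the Pod’s metadata.deletionTimestamp. (Terminating is not a real Pod phase; it is what kubectl displays while that timestamp is set.) The kubelet then runs any preStop hooks, sends SIGTERM to the containers, waits for the grace period (terminationGracePeriodSeconds, 30s by default), and finally sends SIGKILL to any container that still hasn’t exited.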
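
To see how far past its deadline a Terminating Pod is, you can compare its metadata.deletionTimestamp with the current time: for a graceful delete, the API server sets that timestamp to the request time plus the grace period, so a positive difference means the grace period has already expired. A minimal Python sketch, assuming the Pod is available as the parsed JSON of `kubectl get pod <pod-name> -o json`; the function names are illustrative, not part of any Kubernetes client library:

```python
from datetime import datetime, timezone
from typing import Optional


def parse_k8s_time(value: str) -> datetime:
    """Parse a Kubernetes metadata timestamp such as '2024-05-01T12:00:30Z'."""
    # Metadata timestamps are serialized in UTC, second precision, with a 'Z' suffix.
    return datetime.strptime(value, "%Y-%m-%dT%H:%M:%SZ").replace(tzinfo=timezone.utc)


def seconds_past_deadline(pod: dict, now: datetime) -> Optional[float]:
    """Seconds a deleted Pod has outlived its termination deadline.

    Returns None when the Pod has no deletionTimestamp (it is not Terminating).
    For a graceful delete, deletionTimestamp = request time + grace period, so a
    positive value means the grace period has already run out.
    """
    deletion_ts = pod.get("metadata", {}).get("deletionTimestamp")
    if deletion_ts is None:
        return None
    return (now - parse_k8s_time(deletion_ts)).total_seconds()


# Example: deletion deadline 12:00:30 UTC, checked at 12:06:30 UTC
pod = {"metadata": {"name": "web-0", "deletionTimestamp": "2024-05-01T12:00:30Z"}}
now = datetime(2024, 5, 1, 12, 6, 30, tzinfo=timezone.utc)
print(seconds_past_deadline(pod, now))  # 360.0
```

In practice you would pass `json.loads(...)` of the kubectl output and `datetime.now(timezone.utc)` as `now`.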

What Stuck Terminating Means

If containers ignore signals, finalizers hang, or volumes don’t detach, the Pod remains marked as Terminating. In practice, it looks frozen—still visible in the cluster but unable to run workloads, consuming resources, and blocking smooth scheduling.

Why Pod Stuck Terminating in Kubernetes Happens

1. Finalizers Blocking Pod Deletion

Finalizers tell Kubernetes to wait until a controller finishes cleanup work before an object is removed. If a finalizer on the Pod is never cleared, for example because the controller that owns it is down or its cleanup logic fails, the Pod remains in Terminating indefinitely, waiting for the finalizer to finish.

Quick check:

kubectl get pod <pod-name> -o yaml | grep finalizers -A 2

If you see finalizers listed under metadata that don’t get cleared, they’re blocking the Pod from terminating.
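
The same check can be done on the JSON form of the Pod, which avoids relying on grep context lines. A small sketch, assuming input parsed from `kubectl get pod <pod-name> -o json`; `pending_finalizers` and the finalizer name `example.com/cleanup` are hypothetical:

```python
def pending_finalizers(pod: dict) -> list:
    """Finalizers that are holding a deleted Pod in Terminating.

    A finalizer only blocks removal once deletion has been requested, so a Pod
    without a deletionTimestamp reports nothing.
    """
    metadata = pod.get("metadata", {})
    if "deletionTimestamp" not in metadata:
        return []
    return list(metadata.get("finalizers", []))


# 'example.com/cleanup' is a hypothetical finalizer name
pod = {
    "metadata": {
        "name": "web-0",
        "deletionTimestamp": "2024-05-01T12:00:30Z",
        "finalizers": ["example.com/cleanup"],
    }
}
print(pending_finalizers(pod))  # ['example.com/cleanup']
```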

2. Stuck Processes Inside the Container

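Some processes ignore SIGTERM, or spawn child processes that never receive it because the main process doesn’t forward signals. The kubelet then waits out the full grace period before sending SIGKILL. If a process can’t be killed even then (for example, it is blocked in uninterruptible I/O on a hung mount) or the container runtime fails to stop the container, the Pod can stay in Terminating well beyond the grace period.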

Quick check:

kubectl exec -it <pod-name> -- ps aux

If you see lingering processes even after termination signals, they’re preventing the Pod from shutting down.
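
A common root cause is an application that simply doesn’t handle SIGTERM. When the process runs as PID 1 in its container, the kernel doesn’t apply the default "terminate" action for SIGTERM, so without an explicit handler the signal is ignored and the kubelet has to wait out the full grace period. A minimal Python sketch of an entrypoint that handles SIGTERM and exits cleanly; the function names and the polling loop are illustrative assumptions, not a prescribed pattern:

```python
import os
import signal
import threading

# Set by the SIGTERM handler; the main loop polls it and exits cleanly.
shutdown_requested = threading.Event()


def handle_sigterm(signum, frame):
    # Keep the handler tiny: just record that shutdown was requested.
    shutdown_requested.set()


def install_handlers() -> None:
    # An explicit handler matters when this process is PID 1 in the container,
    # because PID 1 does not get the default "terminate" action for SIGTERM.
    signal.signal(signal.SIGTERM, handle_sigterm)


def run_worker(poll_seconds: float = 1.0) -> str:
    install_handlers()
    while not shutdown_requested.wait(poll_seconds):
        pass  # placeholder for one unit of real work
    # Cleanup (flush buffers, close connections) goes here, within the grace period.
    return "clean shutdown"


def send_sigterm_to_self() -> None:
    """Local test helper: send this process the SIGTERM a Pod deletion delivers."""
    os.kill(os.getpid(), signal.SIGTERM)
```

To try it locally, call `install_handlers()`, then `send_sigterm_to_self()`, then `run_worker(0.1)`, which returns "clean shutdown". Polling an Event keeps the signal handler trivial and leaves all cleanup in ordinary code.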

3. Volume Detach or Storage Cleanup Issues

If PersistentVolumes fail to unmount, the Pod lingers while Kubernetes waits for the storage driver or CSI plugin to release it. This is common with NFS mounts or CSI misconfigurations.

Quick check:

kubectl describe pod <pod-name>

If you see events like FailedUnmount or VolumeDetach, the storage layer is blocking the Pod.
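
In a busy namespace, volume-related events can also be filtered programmatically. A sketch, assuming the parsed JSON of `kubectl get events -n <namespace> -o json`; matching "mount", "attach" or "detach" in the event reason is a heuristic, and `volume_warnings` is a hypothetical helper:

```python
def volume_warnings(events: dict, pod_name: str) -> list:
    """Volume-related Warning events for one Pod, as 'Reason: message' strings.

    `events` is the parsed JSON of `kubectl get events -n <namespace> -o json`.
    Matching 'mount', 'attach' or 'detach' in the reason is a heuristic.
    """
    keywords = ("mount", "attach", "detach")
    found = []
    for event in events.get("items", []):
        target = event.get("involvedObject", {})
        if target.get("kind") != "Pod" or target.get("name") != pod_name:
            continue  # event is about some other object
        reason = event.get("reason", "")
        # Case-insensitive so that e.g. 'FailedUnmount' matches 'mount'.
        if event.get("type") == "Warning" and any(k in reason.lower() for k in keywords):
            found.append(f"{reason}: {event.get('message', '')}")
    return found
```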

4. Network or Node Unavailability

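When the node hosting the Pod goes NotReady, the control plane cannot confirm that the Pod’s containers have stopped. The Pod object stays in Terminating until the node recovers and its kubelet completes the shutdown, or until the Node object is removed from the cluster.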

Quick check:

kubectl get nodes

If you see the node hosting the Pod marked NotReady, the Pod’s termination is stalled due to node issues.
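
Because `kubectl get nodes` shows both `Ready=False` and `Ready=Unknown` as NotReady, a scripted check should treat any Ready status other than `True` as unhealthy. A sketch assuming Pod and Node objects parsed from `kubectl get pod <pod-name> -o json` and `kubectl get nodes -o json`; the helper names are hypothetical:

```python
def node_ready_status(node: dict) -> str:
    """Return the node's Ready condition status: 'True', 'False' or 'Unknown'."""
    for condition in node.get("status", {}).get("conditions", []):
        if condition.get("type") == "Ready":
            return condition.get("status", "Unknown")
    return "Unknown"


def pod_blocked_by_node(pod: dict, nodes_by_name: dict) -> bool:
    """True if a deleted Pod sits on a node whose Ready status is not 'True'."""
    if "deletionTimestamp" not in pod.get("metadata", {}):
        return False  # not Terminating
    node_name = pod.get("spec", {}).get("nodeName")
    if node_name is None:
        return False  # never scheduled, so no kubelet has to confirm anything
    node = nodes_by_name.get(node_name)
    if node is None:
        return True  # Node object missing: treat as unhealthy and investigate
    return node_ready_status(node) != "True"
```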

5. Grace Period Misconfiguration

Pods respect the terminationGracePeriodSeconds setting: the kubelet waits up to that many seconds for containers to exit before sending SIGKILL. If it’s set too high, Pods may look stuck even though Kubernetes is simply waiting.

Quick check:

kubectl get pod <pod-name> -o yaml | grep terminationGracePeriodSeconds

If you see a very high value (e.g., hundreds or thousands of seconds), the Pod will remain terminating much longer than expected.
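
When reading the grace period programmatically, note that an unset field means the 30-second default, and that once deletion starts the API server records the grace period actually in effect in metadata.deletionGracePeriodSeconds (which can be shorter if someone deleted with --grace-period). A sketch under those assumptions; the helper names are hypothetical and the 300-second limit is an arbitrary example threshold:

```python
DEFAULT_GRACE_SECONDS = 30  # Kubernetes default when the field is unset


def effective_grace_seconds(pod: dict) -> int:
    """Grace period that applies (or will apply) to this Pod's termination."""
    metadata = pod.get("metadata", {})
    # Once deletion has started, this field holds the grace period in use.
    if "deletionGracePeriodSeconds" in metadata:
        return int(metadata["deletionGracePeriodSeconds"])
    spec_value = pod.get("spec", {}).get("terminationGracePeriodSeconds")
    return DEFAULT_GRACE_SECONDS if spec_value is None else int(spec_value)


def grace_period_looks_excessive(pod: dict, limit_seconds: int = 300) -> bool:
    """Flag Pods whose grace period alone could make them look stuck."""
    return effective_grace_seconds(pod) > limit_seconds
```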

How to Fix Pod Stuck Terminating in Kubernetes

1. Remove Problematic Finalizers

Finalizers are meant to ensure cleanup before resources are deleted, but if they fail or hang, they can trap a Pod in Terminating indefinitely. This often happens when the controller responsible for a finalizer (for example, an operator managing custom resources) is down or doesn’t respond as expected, leaving Kubernetes waiting forever.

Quick check:

kubectl get pod <pod-name> -o yaml | grep finalizers -A 2
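
If only one of several finalizers is stuck, a narrower option than clearing the whole list is a JSON Patch that removes just that entry. A sketch, assuming the Pod JSON from `kubectl get pod <pod-name> -o json`; the helper names and finalizer names are hypothetical, and the leading `test` operation makes the patch fail instead of removing the wrong entry if the list changed in the meantime:

```python
import json


def remove_finalizer_patch(pod: dict, finalizer: str) -> str:
    """JSON Patch (RFC 6902) that removes a single named finalizer.

    The leading "test" op makes the API server reject the patch if the entry at
    that index changed since the Pod was read, so the wrong finalizer is never removed.
    """
    finalizers = pod.get("metadata", {}).get("finalizers", [])
    index = finalizers.index(finalizer)  # ValueError if the finalizer is not present
    path = f"/metadata/finalizers/{index}"
    return json.dumps([
        {"op": "test", "path": path, "value": finalizer},
        {"op": "remove", "path": path},
    ])


def kubectl_patch_args(pod_name: str, namespace: str, patch: str) -> list:
    """argv for `kubectl patch` with a JSON Patch body (e.g. for subprocess.run)."""
    return ["kubectl", "patch", "pod", pod_name, "-n", namespace, "--type=json", "-p", patch]


# Hypothetical Pod with two finalizers; only the second one is stuck.
pod = {"metadata": {"finalizers": ["example.com/a", "example.com/b"]}}
print(kubectl_patch_args("web-0", "default", remove_finalizer_patch(pod, "example.com/b")))
```

Pass the resulting list to `subprocess.run(..., check=True)` only once you’ve confirmed that skipping that finalizer’s cleanup is safe.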

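Fix: Once you’ve confirmed that the cleanup a finalizer guards is done or no longer needed, remove the finalizers manually (this skips that cleanup):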

kubectl patch pod <pod-name> -p '{"metadata":{"finalizers":null}}'

2. Force Delete the Pod

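When a Pod refuses to exit gracefully, Kubernetes holds onto it for the entire grace period, which can be hours if misconfigured. Force deletion removes the Pod object from the API server immediately, without waiting for the kubelet to confirm that the containers have stopped, so use it only when cleanup isn’t critical: the processes may keep running on the node for a while, and force deletion does not bypass finalizers.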

Quick check:

kubectl get pod <pod-name>

If it’s been Terminating for several minutes without progress, proceed.
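
The decision to proceed can be made explicit before reaching for `--force`. A sketch of a guard, assuming Pod JSON from `kubectl get pod <pod-name> -o json`; the 300-second threshold and the helper names are hypothetical. It declines when the Pod is still close to its deadline, when it belongs to a StatefulSet (force-deleting those can leave two Pods with the same identity running), and when finalizers remain, since `--force` does not bypass them:

```python
from datetime import datetime, timezone
from typing import Optional


def parse_k8s_time(value: str) -> datetime:
    """Parse a Kubernetes metadata timestamp such as '2024-05-01T12:00:00Z'."""
    return datetime.strptime(value, "%Y-%m-%dT%H:%M:%SZ").replace(tzinfo=timezone.utc)


def force_delete_args(pod: dict, now: datetime, min_overdue_seconds: int = 300) -> Optional[list]:
    """kubectl argv for a force delete, or None if it looks premature or unsafe."""
    metadata = pod.get("metadata", {})
    deletion_ts = metadata.get("deletionTimestamp")
    if deletion_ts is None:
        return None  # not Terminating at all
    if (now - parse_k8s_time(deletion_ts)).total_seconds() < min_overdue_seconds:
        return None  # still within, or only just past, its grace period
    owner_kinds = {ref.get("kind") for ref in metadata.get("ownerReferences", [])}
    if "StatefulSet" in owner_kinds:
        return None  # risk of two Pods with the same identity
    if metadata.get("finalizers"):
        return None  # --force does not remove finalizers; handle them first
    return ["kubectl", "delete", "pod", metadata["name"],
            "-n", metadata.get("namespace", "default"), "--grace-period=0", "--force"]
```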

Fix:

kubectl delete pod <pod-name> --grace-period=0 --force

3. Kill Stuck Processes

A common reason for stuck Pods is processes inside containers that don’t exit on SIGTERM, such as child processes that never receive the signal because the main process doesn’t forward it. These lingering processes keep the Pod alive even after everything else has shut down, forcing manual intervention.

Quick check:

kubectl exec -it <pod-name> -- ps aux

If you see lingering processes even after SIGTERM, they’re blocking termination.
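
Before reaching for `kill -9`, check the STAT column of the `ps aux` output: a process in state `D` (uninterruptible sleep, usually stuck I/O such as a hung NFS mount) won’t die until that I/O returns, and a zombie (`Z`) has already exited and only disappears when its parent reaps it, so `kill` can’t clear either. A sketch that flags both, assuming the procps `ps aux` column layout (BusyBox-based images print different columns); the function name is hypothetical:

```python
def suspicious_processes(ps_aux_output: str) -> list:
    """Return (pid, state, command) for processes in D or Z state.

    Assumes the procps `ps aux` layout:
    USER PID %CPU %MEM VSZ RSS TTY STAT START TIME COMMAND
    """
    results = []
    for line in ps_aux_output.strip().splitlines()[1:]:  # skip the header row
        fields = line.split(None, 10)  # COMMAND (last column) may contain spaces
        if len(fields) < 11:
            continue
        pid, stat, command = int(fields[1]), fields[7], fields[10]
        # D = uninterruptible sleep: even SIGKILL waits until the I/O returns.
        # Z = zombie: already exited, waiting for its parent to reap it.
        if stat[0] in ("D", "Z"):
            results.append((pid, stat[0], command))
    return results
```

If it flags `D` processes, look at the storage layer rather than at signals.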

Fix:

kubectl exec -it <pod-name> -- kill -9 <pid>

4. Restart the Kubelet on the Node

Sometimes the kubelet itself gets into a bad state, unable to reconcile termination requests. This leaves Pods in limbo until the kubelet is reset. Restarting it forces Kubernetes to reevaluate Pod states and usually clears stuck entries.

Quick check:

journalctl -u kubelet | grep <pod-name>

Run this on the node hosting the Pod. If you see repeated termination attempts with no success, the kubelet may be stuck.

Fix:

sudo systemctl restart kubelet

5. Fix Volume Detach Issues

Pods using PersistentVolumes can remain stuck if the storage driver or CSI plugin fails to detach or unmount volumes. This is especially common with NFS or iSCSI mounts. Until the volume is released, Kubernetes won’t complete termination.

Quick check:

kubectl describe pod <pod-name>

If you see FailedUnmount or VolumeDetach events, storage is the culprit.

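Fix: Cordon and drain the node to move workloads off it while the stuck volume is released (draining evicts every non-DaemonSet Pod on the node, so plan for that):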

kubectl cordon <node>

kubectl drain <node> --ignore-daemonsets --delete-emptydir-data

Monitoring Pod Stuck Terminating in Kubernetes with CubeAPM

Fixing a Pod stuck in Terminating once is fine, but preventing it from happening again requires visibility. CubeAPM automatically ingests Kubernetes events, kubelet metrics, and container logs, correlating them to highlight why Pods don’t shut down. For example, it can tell you if the problem is a finalizer that won’t clear, a volume that won’t detach, or a process that ignores signals. Combined with dashboards and alerting, this makes CubeAPM a complete monitoring solution for Pod lifecycle errors.

Here’s a practical guide to setting it up:

1. Make sure CubeAPM is running (Helm)

Start by installing CubeAPM in your cluster so it can capture lifecycle metrics, logs, and events. The Kubernetes install guide explains the full setup.

helm repo add cubeapm https://charts.cubeapm.com
helm repo update cubeapm
helm show values cubeapm/cubeapm > values.yaml
# edit values.yaml as needed, then:
helm install cubeapm cubeapm/cubeapm -f values.yaml

2. Install OpenTelemetry Collector in both modes

For complete coverage, CubeAPM recommends deploying the OTel Collector as both a DaemonSet (node-level logs and kubelet metrics) and a Deployment (cluster events and metrics). The Kubernetes monitoring guide shows how to configure the exporters to send data to CubeAPM.

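exporters:
  # The *_endpoint keys take a full URL. A plain `endpoint` is treated as a base URL,
  # and the exporter appends /v1/metrics, /v1/logs or /v1/traces to it.
  otlphttp/metrics: { metrics_endpoint: "https://<CUBE_HOST>:4318/v1/metrics" }
  otlphttp/logs: { logs_endpoint: "https://<CUBE_HOST>:4318/v1/logs" }
  otlphttp/traces: { traces_endpoint: "https://<CUBE_HOST>:4318/v1/traces" }

3. (Optional) Pull in Prometheus metrics for lifecycle states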

If you’re running kube-state-metrics, scrape it with Prometheus so CubeAPM can chart Pod lifecycle metrics, including how long Pods have been Terminating (kube-state-metrics reports this through kube_pod_deletion_timestamp rather than as a Pod phase). The Prometheus integration docs cover how to wire this into OTel.

receivers:
  prometheus:
    config:
      scrape_configs:
        - job_name: kube-state-metrics
          scrape_interval: 60s
          static_configs:
            - targets: ["kube-state-metrics.kube-system.svc.cluster.local:8080"]

4. Verify in CubeAPM (what you should see)

Once data is flowing, you’ll see stuck Pods and related events under Infrastructure → Kubernetes and Logs → Kubernetes events. Dashboards also visualize Pod phase durations, so you can spot workloads that fail to exit. For dashboard usage, see the CubeAPM dashboards guide.

5. Wire an alert quickly

Finally, set alerts for Pods lingering in Terminating longer than normal (e.g., 5m) or for recurring storage detach errors. The alerting guide shows how to configure thresholds on metrics and events.

Example Alert Rules

Alert for Pod Stuck Terminating Longer Than 5 Minutes

Pods should terminate quickly under normal conditions. If a Pod lingers in Terminating beyond 5 minutes, it’s a strong signal that finalizers, processes, or storage cleanup are blocking the shutdown. An early alert helps teams intervene before deployments are delayed.

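- alert: PodStuckTerminating
  # kube-state-metrics has no "Terminating" phase; deleted Pods expose
  # kube_pod_deletion_timestamp (delete request time + grace period) instead.
  expr: (time() - kube_pod_deletion_timestamp) > 0
  for: 5m
  labels:
    severity: warning
  annotations:
    description: "Pod {{ $labels.pod }} in namespace {{ $labels.namespace }} has been stuck in Terminating state for more than 5 minutes past its grace period."

Alert for Volume Unmount Failures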

Storage cleanup issues like failed unmounts are one of the most common reasons Pods remain in Terminating. By alerting on these events, teams can quickly identify and fix CSI driver or NFS detach errors before they cause cascading stuck Pods.

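- alert: PodVolumeDetachFailure
  # FailedUnmount is an event reason, not a container waiting reason, so it never
  # appears in kube_pod_container_status_waiting_reason. This version assumes the
  # kubelet and kube-controller-manager /metrics endpoints are scraped; metric and
  # label names may vary by Kubernetes version.
  expr: sum by (instance, operation_name) (increase(storage_operation_duration_seconds_count{operation_name=~"volume_unmount|unmount_device|volume_detach", status="fail-unknown"}[10m])) > 0
  for: 2m
  labels:
    severity: critical
  annotations:
    description: "Volume operation {{ $labels.operation_name }} is failing on {{ $labels.instance }}; Pods using these volumes may be stuck in Terminating."

Alert for Node NotReady Causing Stuck Pods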

If the node hosting a Pod becomes NotReady, termination can stall indefinitely. Alerting on this condition ensures operators act quickly—either by cordoning/draining the node or investigating node health.

- alert: NodeCausingPodStuck
  # A node that stops reporting shows Ready=Unknown rather than Ready=False.
  expr: kube_node_status_condition{condition="Ready",status=~"false|unknown"} > 0
  for: 2m
  labels:
    severity: critical
  annotations:
    description: "Node {{ $labels.node }} is NotReady, which may be causing Pods to remain stuck in Terminating state."

Conclusion

A Pod stuck in Terminating might look harmless at first, but it often signals deeper issues with finalizers, volumes, or unresponsive processes. Left unchecked, these Pods can block deployments, consume resources, and slow down your entire delivery pipeline.

The key is not just fixing stuck Pods, but detecting them early and preventing recurrences. That’s where CubeAPM makes a difference—it collects Kubernetes events, kubelet metrics, and container logs, then ties them together so you know exactly why a Pod won’t exit. With dashboards, alerts, and real-time insights, CubeAPM helps teams resolve termination issues before they turn into outages.

If you want smoother rollouts and less firefighting, CubeAPM is the monitoring solution that keeps Kubernetes workloads clean, stable, and reliable.

FAQs

1. What does it mean when a Pod is stuck in Terminating?

It means Kubernetes has sent shutdown signals, but the Pod cannot complete cleanup or deletion. This is usually caused by finalizers, storage drivers, or processes that refuse to exit.

2. How long should a Pod stay in the Terminating state?

Normally no longer than its grace period (terminationGracePeriodSeconds, 30 seconds by default). If it lingers for minutes or hours beyond that, it likely indicates a deeper problem.

3. Can I safely force delete a stuck Pod?

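Usually, but force deletion skips cleanup and removes the Pod object without waiting for the kubelet to confirm that the containers have stopped. It’s generally safe for stateless Pods, but risky for stateful workloads: volumes or other resources may be left orphaned, and a StatefulSet could briefly end up with two Pods sharing the same identity.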

4. How does CubeAPM help with Pods stuck in Terminating?

CubeAPM monitors Pod lifecycle states, kubelet events, and storage operations. It correlates logs and metrics to show the exact cause and alerts you when termination is unusually long.

5. What’s the best way to prevent Pods from getting stuck?

Design containers to handle signals gracefully, configure finalizers correctly, validate your storage drivers, and use monitoring with CubeAPM to catch termination issues before they spread.