Istio is one of the most widely adopted service meshes in the cloud-native ecosystem. Its fine-grained routing, mTLS security, and Envoy-based observability make it a top choice for managing complex microservices.

Yet as organizations scale, Istio adds layers of complexity. Every call flows through sidecars and gateways, making it harder to pinpoint latency, retries, or failures. Without strong monitoring, Istio risks becoming a performance black box.

CubeAPM addresses this with an OTel-native Istio integration, Smart Sampling that retains high-value traces, and ready-made dashboards, at 60–70% lower cost than Datadog or New Relic.

In this guide, we’ll explore why Istio service mesh monitoring matters, key metrics, and how to achieve full visibility with CubeAPM.

What is an Istio Service Mesh?

An Istio Service Mesh is a dedicated infrastructure layer that manages how microservices communicate inside Kubernetes. It works by deploying Envoy sidecar proxies alongside application pods, intercepting all traffic between services. Through this design, Istio gives teams fine-grained control over service discovery, traffic routing, load balancing, and zero-trust security (mTLS), without requiring code changes to the applications themselves.
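In sidecar mode, the Envoy proxy is usually injected automatically into pods in any namespace that carries Istio's injection label. Here is a minimal sketch, assuming a default (non-revisioned) istiod install and a hypothetical namespace named `shop`:

```yaml
# Hypothetical namespace. The label tells istiod's mutating webhook
# to inject an istio-proxy (Envoy) sidecar into every new pod here.
apiVersion: v1
kind: Namespace
metadata:
  name: shop
  labels:
    istio-injection: enabled
```

Existing pods only get a sidecar after they are recreated, for example by restarting their Deployment. Revision-based installs use an `istio.io/rev` label instead.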

For businesses, Istio service mesh monitoring has become crucial as modern platforms scale into hundreds of interconnected services. Every request flows through Envoy proxies, meaning that performance bottlenecks or policy misconfigurations in the mesh can directly affect customer experience. With the right monitoring in place, teams can:

- Spot traffic anomalies quickly: Detect latency spikes, failed retries, or misrouted traffic before they cascade.

- Improve reliability: Track service-to-service SLAs and error budgets across the mesh.

- Optimize resource usage: Reduce the CPU and memory overhead of sidecar proxies and avoid noisy neighbor effects.

- Strengthen security posture: Validate mTLS policies and surface unauthorized access attempts across the cluster.

- Connect performance to business outcomes: Correlate mesh latency with KPIs like checkout time, API response rates, or customer drop-offs.

Example: Using Istio for API Latency in a Telecom Platform

A telecom provider offering real-time messaging APIs noticed that response times were spiking during regional traffic surges. By monitoring Istio’s Envoy sidecar metrics and traces, the platform team discovered excessive retries between the gateway and messaging microservices caused by a misconfigured timeout policy. This ripple effect was degrading message delivery SLAs across the mesh. After tuning Istio’s retry logic and scaling targeted sidecar proxies, API latency stabilized under 200ms, restoring SLA compliance and preventing customer churn in high-traffic regions.

Why is Istio Service Mesh Monitoring Important?

Traffic visibility: catch latency, retries, and bad routes early

In Istio, every east-west hop transits Envoy, so routing rules, timeouts, and retries can quietly amplify latency or trigger retry storms. Mesh-level telemetry (QPS, p50/p90/p99 latency, success rates, upstream errors) tells you whether a slowdown lives in the app, the sidecar, or a route or policy. Published measurement studies show that sidecar meshes can incur up to 269% higher latency and 163% more CPU in some configurations. That makes early detection and right-sized policies essential.

Security enforcement: validate mTLS and policy behavior, not just YAML

Zero-trust value comes from runtime verification—mTLS handshakes, cert rotation, and AuthorizationPolicies actually being honored across namespaces. Monitoring handshake success/latency, failed authn/z, and policy drift helps you prove enforcement. Istio’s Ambient mode (GA in v1.24) further simplifies L4/L7 policy with ztunnel and waypoint proxies, but still requires visibility to confirm intent matches behavior.
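Enforcement is declared in YAML and then verified at runtime. Below is a minimal sketch of a namespace-wide mTLS requirement plus an AuthorizationPolicy. It assumes Istio 1.22+, where the `security.istio.io/v1` API is available. The namespace, workload, and service-account names are hypothetical.

```yaml
# Require mutual TLS for every workload in the hypothetical "shop" namespace.
apiVersion: security.istio.io/v1
kind: PeerAuthentication
metadata:
  name: default
  namespace: shop
spec:
  mtls:
    mode: STRICT
---
# Only the frontend's service account may call workloads labeled app=orders;
# once an ALLOW policy selects a workload, unmatched requests are denied.
apiVersion: security.istio.io/v1
kind: AuthorizationPolicy
metadata:
  name: orders-allow-frontend
  namespace: shop
spec:
  selector:
    matchLabels:
      app: orders
  action: ALLOW
  rules:
  - from:
    - source:
        principals: ["cluster.local/ns/shop/sa/frontend"]
```

With these applied, denied HTTP requests return 403 and show up in `istio_requests_total`. The `connection_security_policy` label on destination-reported metrics shows whether traffic actually arrived over mutual TLS. Those are the runtime signals to watch.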

Resource efficiency: control sidecar overhead and avoid “noisy neighbors”

Sidecars add real CPU and memory to every pod. MeshInsight quantified how this overhead varies by protocol (HTTP/gRPC vs. TCP) and by enabled filters, with sizable CPU and latency costs in certain modes. For teams adopting Ambient, vendor benchmarks report roughly 4.78% extra CPU to add mTLS in Ambient versus about 24.3% with sidecars. That is evidence that architecture choices materially affect footprint. Monitor sidecar and waypoint CPU, RSS, and restarts to right-size proxies and contain spend.
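Once monitoring shows what sidecars actually use, you can set their requests and limits per workload with Istio's resource annotations on the pod template. Here is a sketch with placeholder values and a hypothetical `orders` Deployment. Derive real numbers from the CPU and RSS you observe.

```yaml
apiVersion: apps/v1
kind: Deployment
metadata:
  name: orders            # hypothetical workload
  namespace: shop
spec:
  selector:
    matchLabels:
      app: orders
  template:
    metadata:
      labels:
        app: orders
      annotations:
        # Requests and limits for the injected istio-proxy container
        # (placeholder values; size them from observed usage).
        sidecar.istio.io/proxyCPU: "100m"
        sidecar.istio.io/proxyMemory: "128Mi"
        sidecar.istio.io/proxyCPULimit: "500m"
        sidecar.istio.io/proxyMemoryLimit: "256Mi"
    spec:
      containers:
      - name: orders
        image: example.com/orders:1.0   # hypothetical image
```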

Service reliability: protect SLOs and catch cascading failure patterns

Retries, timeouts, and circuit breakers are reliability levers—but the wrong mix can create thundering herds or cross-service congestion. Mesh-aware alerts on elevated p99s, 5xx bursts, retry budgets, and upstream saturation help you halt incidents before SLO breaches. Operator reports frequently cite mTLS/policy-related outages surfacing only under load—hard to triage without mesh telemetry.
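Circuit breaking and outlier detection are configured on a DestinationRule. Here is a minimal sketch with illustrative thresholds. It assumes Istio 1.22+ for the `networking.istio.io/v1` API and a hypothetical `orders` service.

```yaml
apiVersion: networking.istio.io/v1
kind: DestinationRule
metadata:
  name: orders
  namespace: shop
spec:
  host: orders.shop.svc.cluster.local   # hypothetical service
  trafficPolicy:
    connectionPool:
      tcp:
        maxConnections: 100             # cap on upstream TCP connections
      http:
        http1MaxPendingRequests: 50     # queued requests before overflow
        maxRequestsPerConnection: 10
    outlierDetection:
      consecutive5xxErrors: 5           # eject a host after 5 straight 5xx
      interval: 10s
      baseEjectionTime: 30s
      maxEjectionPercent: 50
```

When the connection-pool limits trip, Envoy rejects the excess requests with 503 and the UO (upstream overflow) response flag. These settings therefore pair naturally with alerts on 5xx bursts and response flags.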

Business impact: connect mesh health to customer experience

With 89% of organizations adopting cloud-native techniques, microservices on Kubernetes are the norm—placing the mesh on the critical path of user transactions. Even tens of milliseconds per hop compound across service graphs, affecting API SLAs, checkout/flow completion times, or real-time comms. Monitoring ties mesh signals (handshake times, retries, L7 error codes) to business KPIs (conversion, request success, SLA adherence) so teams prioritize fixes that move revenue.

Key Metrics for Istio Service Mesh Monitoring

Istio service mesh monitoring requires tracking metrics at multiple layers—traffic, proxies, resources, and security—to get a full picture of mesh health. Below are the most critical categories and the specific metrics that matter, with thresholds where relevant.

Traffic Metrics

Traffic metrics give you visibility into how requests flow across services and help identify bottlenecks.

- Request volume: Tracks QPS across services to reveal load patterns. Sudden spikes often point to load imbalance or bad routing. A consistent 20–30% deviation from baseline in QPS can indicate problems.

- Request latency (p50, p90, p99): Measures service response times across percentiles. High p90 or p99 latencies usually highlight services struggling under load. Latency above 300ms in a service mesh is often considered a degradation.

- Request success rate: Monitors the proportion of successful vs. failed requests. A drop below 98–99% success rate can flag failing routes or downstream dependencies.
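You can precompute these three signals as Prometheus recording rules over Istio's standard metrics. This sketch assumes a Prometheus-compatible rule engine, and the rule names are hypothetical. Filtering on `reporter="destination"` keeps each request from being counted twice, once by each proxy.

```yaml
groups:
- name: istio-traffic                  # hypothetical group name
  rules:
  # Request volume (QPS) per destination service.
  - record: istio:destination_service:requests:rate5m
    expr: sum by (destination_service) (rate(istio_requests_total{reporter="destination"}[5m]))
  # p99 latency in ms; use 0.5 or 0.9 for p50/p90.
  - record: istio:destination_service:latency_ms:p99
    expr: histogram_quantile(0.99, sum by (destination_service, le) (rate(istio_request_duration_milliseconds_bucket{reporter="destination"}[5m])))
  # Success rate: share of responses that are not 5xx.
  - record: istio:destination_service:success:ratio5m
    expr: |
      sum by (destination_service) (rate(istio_requests_total{reporter="destination", response_code!~"5.."}[5m]))
      /
      sum by (destination_service) (rate(istio_requests_total{reporter="destination"}[5m]))
```

To get the baseline deviation described above, compare the request-rate series with its own value a week earlier, using PromQL's `offset 1w` modifier.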

Proxy Metrics (Envoy sidecars)

These metrics capture Envoy sidecar behavior, essential since Istio relies on them for routing, retries, and encryption.

- Active connections: Shows open connections per proxy. High connection counts can saturate sidecars and limit throughput. Sustained >80% of connection pool capacity signals risk.

- Retries and timeouts: Tracks how many requests are retried or timed out at the proxy. Spikes often mean unhealthy upstream services or misconfigured retry budgets. If retries exceed 5–10% of total traffic, it’s a red flag.

- mTLS handshake latency: Measures how long encryption handshakes take between services. Overhead should remain minimal (<10ms per handshake). Higher values may point to certificate or policy misconfigurations.
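By default, Istio trims the Envoy statistics each sidecar exposes. Per-cluster counters such as `upstream_rq_retry`, `upstream_rq_timeout`, and `upstream_cx_active` may therefore need to be opted in. Here is a sketch of the pod-template annotation that does this. The regexes are illustrative, and each one adds metric cardinality.

```yaml
# Fragment of a pod template (e.g. under spec.template in a Deployment).
metadata:
  annotations:
    # Per-pod ProxyConfig: expose extra Envoy stats matching these regexes.
    proxy.istio.io/config: |
      proxyStatsMatcher:
        inclusionRegexps:
        - ".*upstream_rq_retry.*"
        - ".*upstream_rq_timeout.*"
        - ".*upstream_cx_active.*"
```

The included stats then appear on the sidecar's Prometheus endpoint under names such as `envoy_cluster_upstream_rq_retry`, labeled by `cluster_name`.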

Resource & Performance Metrics

Resource efficiency ensures Istio doesn’t consume more CPU or memory than the apps it’s supporting.

- CPU/memory per sidecar: Monitors sidecar resource consumption. Excessive CPU or memory usage indicates “mesh bloat.” If sidecars consume >20–25% of a node’s total CPU, scaling or policy optimization is needed.

- Pod restarts due to proxy failures: Tracks restarts linked to Envoy crashes or resource exhaustion. Frequent restarts (>3 per hour per sidecar) usually suggest instability or poor tuning.
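Here is a sketch of matching rules. It assumes cAdvisor and kube-state-metrics are already being scraped and that the sidecar runs as a regular container named `istio-proxy`. With Kubernetes native sidecars it becomes an init container, and kube-state-metrics reports its restarts under `kube_pod_init_container_status_restarts_total` instead. The rule names are hypothetical, and the restart threshold comes from the text above.

```yaml
groups:
- name: istio-sidecar-resources        # hypothetical group name
  rules:
  # CPU cores used by each sidecar, from cAdvisor container metrics.
  - record: istio:sidecar:cpu_cores
    expr: sum by (namespace, pod) (rate(container_cpu_usage_seconds_total{container="istio-proxy"}[5m]))
  # Working-set memory of each sidecar.
  - record: istio:sidecar:memory_working_set_bytes
    expr: sum by (namespace, pod) (container_memory_working_set_bytes{container="istio-proxy"})
  # More than 3 istio-proxy restarts within the last hour.
  - alert: IstioSidecarRestarting
    expr: increase(kube_pod_container_status_restarts_total{container="istio-proxy"}[1h]) > 3
    labels:
      severity: warning
```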

Security & Policy Metrics

Since Istio enforces zero-trust policies, tracking security-related metrics ensures policies are applied and effective.

- Unauthorized attempts: Counts rejected requests due to invalid credentials or blocked routes. Spikes may mean intrusion attempts or misconfigured policies. Threshold: More than a handful per minute should be investigated.

- Policy enforcement success rate: Monitors how consistently security and traffic policies are applied across the mesh. Rates below 99% signal inconsistencies in enforcement or misconfigured namespaces.
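Here is a sketch of both signals over Istio's standard request metric, with hypothetical rule names. The text does not define "policy enforcement success rate", so the second rule uses the share of traffic arriving over mutual TLS as one possible stand-in.

```yaml
groups:
- name: istio-security                 # hypothetical group name
  rules:
  # 401/403 responses per caller/callee pair, as seen by the destination proxy.
  - record: istio:unauthorized:rate5m
    expr: sum by (source_workload, destination_service) (rate(istio_requests_total{reporter="destination", response_code=~"401|403"}[5m]))
  # Share of requests that arrived over mutual TLS.
  - record: istio:mtls:ratio5m
    expr: |
      sum by (destination_service) (rate(istio_requests_total{reporter="destination", connection_security_policy="mutual_tls"}[5m]))
      /
      sum by (destination_service) (rate(istio_requests_total{reporter="destination"}[5m]))
```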

How to Perform Istio Service Mesh Monitoring with CubeAPM (Step-by-Step)

Step 1: Install CubeAPM for Istio telemetry ingestion

Start by deploying CubeAPM so it can receive Istio telemetry. If you’re running Istio on Kubernetes, install CubeAPM in your cluster so it can ingest sidecar proxy metrics, logs, and traces directly. Follow the official CubeAPM Kubernetes installation guide.

Step 2: Enable Istio’s OpenTelemetry integration

Istio’s Envoy sidecars can export traces using the OpenTelemetry protocol (OTLP). Configure Istio to send OTLP traces to CubeAPM; mesh metrics are collected in Prometheus format in Step 3. CubeAPM provides detailed instructions in its OpenTelemetry instrumentation docs. This step ensures you capture request paths across the mesh, including retries, routing decisions, and service latencies.
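Two pieces are involved: an OpenTelemetry extension provider registered in the mesh config, and a mesh-wide Telemetry resource that turns tracing on for that provider. Here is a sketch of both. The provider name, collector hostname, and port are hypothetical, so use the OTLP endpoint given in CubeAPM's docs. It assumes an IstioOperator-based install and Istio 1.22+ for `telemetry.istio.io/v1`.

```yaml
apiVersion: install.istio.io/v1alpha1
kind: IstioOperator
spec:
  meshConfig:
    extensionProviders:
    - name: cubeapm-otel                                       # hypothetical name
      opentelemetry:
        service: cubeapm-collector.cubeapm.svc.cluster.local   # hypothetical host
        port: 4317                                             # OTLP/gRPC port (assumed)
---
# Placed in the root namespace, so it applies mesh-wide.
apiVersion: telemetry.istio.io/v1
kind: Telemetry
metadata:
  name: mesh-default
  namespace: istio-system
spec:
  tracing:
  - providers:
    - name: cubeapm-otel
    randomSamplingPercentage: 100.0   # trace every request at the proxy
```

The sampling percentage applies at the proxies. Keeping it high leaves trace reduction to downstream sampling, at the cost of exporting more spans.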

Step 3: Scrape Istio & Envoy Prometheus metrics into CubeAPM

Istio exposes rich metrics from Envoy sidecars, from the control plane (istiod, which absorbed the former Pilot component), and from ingress/egress gateways. Use CubeAPM’s Prometheus integration to collect them; see Prometheus metrics with CubeAPM. This allows you to monitor request volumes, error rates, active connections, and proxy resource usage at scale.
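Here is a sketch of Prometheus `scrape_configs` for both sources, modeled on the configuration Istio documents for Prometheus. It relies on Istio's default metrics merging, which annotates injected pods with `prometheus.io/scrape`, `prometheus.io/port: "15020"`, and `prometheus.io/path: /stats/prometheus`. It makes no assumption about how the scraped series are then shipped to CubeAPM (remote write, an OpenTelemetry Collector, or CubeAPM's own integration).

```yaml
scrape_configs:
# Sidecars and gateways: merged Envoy + Istio metrics on port 15020.
- job_name: istio-proxies
  kubernetes_sd_configs:
  - role: pod
  relabel_configs:
  # Keep only pods annotated prometheus.io/scrape: "true".
  - source_labels: [__meta_kubernetes_pod_annotation_prometheus_io_scrape]
    action: keep
    regex: "true"
  # Use the annotated metrics path.
  - source_labels: [__meta_kubernetes_pod_annotation_prometheus_io_path]
    action: replace
    target_label: __metrics_path__
    regex: (.+)
  # Point the target at the annotated port.
  - source_labels: [__address__, __meta_kubernetes_pod_annotation_prometheus_io_port]
    action: replace
    regex: ([^:]+)(?::\d+)?;(\d+)
    replacement: $1:$2
    target_label: __address__
# Control plane: istiod's monitoring port.
- job_name: istiod
  kubernetes_sd_configs:
  - role: endpoints
    namespaces:
      names: [istio-system]
  relabel_configs:
  - source_labels: [__meta_kubernetes_service_name, __meta_kubernetes_endpoint_port_name]
    action: keep
    regex: istiod;http-monitoring
```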

Step 4: Stream Istio and Envoy logs into CubeAPM

Envoy access logs and Istio proxy logs provide essential context for failures and retries. Configure CubeAPM’s log ingestion pipeline to collect them so you can correlate log entries with traces and metrics; setup details are in CubeAPM log monitoring. This helps you debug issues like failed mTLS handshakes or route denials.
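Envoy access logging is off in Istio's default installation profile. Here is a minimal sketch that writes JSON access logs to each sidecar's stdout, where a node-level log collector can pick them up. It assumes an IstioOperator-based install.

```yaml
apiVersion: install.istio.io/v1alpha1
kind: IstioOperator
spec:
  meshConfig:
    accessLogFile: /dev/stdout   # enable Envoy access logs on every proxy
    accessLogEncoding: JSON      # structured lines instead of the text format
```

JSON encoding keeps fields such as the response code and response flags machine-parseable. That makes correlating log lines with traces and metrics easier.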

Step 5: Set Istio-specific alert rules in CubeAPM

Define alerting rules for critical mesh conditions such as high 5xx rates, latency spikes, handshake failures, or sidecar restarts. CubeAPM allows you to configure thresholds and link them to notification channels. For setup, see CubeAPM alerting configuration. Example: alert if p99 latency exceeds 500ms for 5 minutes, or if retry rates rise above 10%.

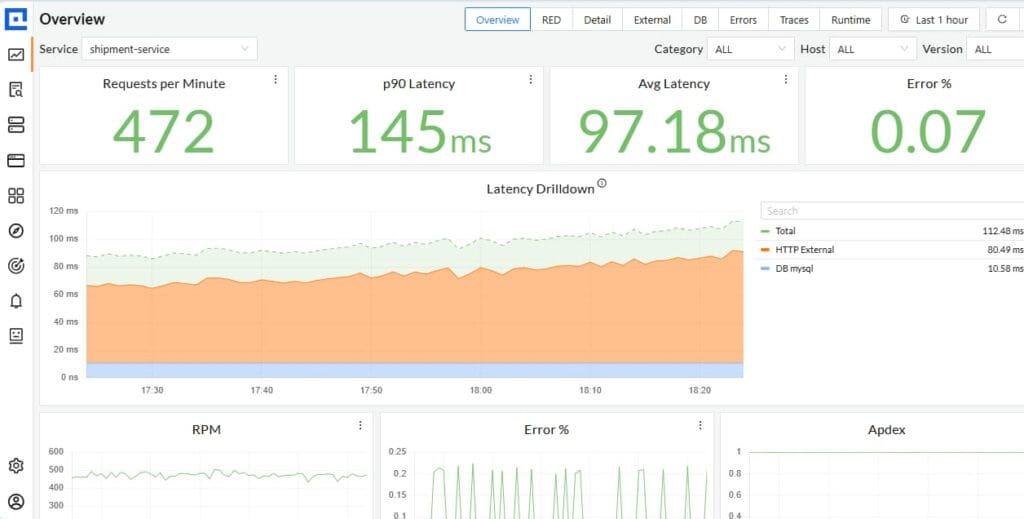

Step 6: Use Istio dashboards in CubeAPM

CubeAPM offers dashboards where you can visualize Istio service-to-service traffic, Envoy resource consumption, and error hotspots. Use these dashboards to trace a request across services, correlate spikes with sidecar resource usage, or validate that mTLS policies are being enforced consistently.

Step 7: Test Istio monitoring setup with real scenarios

Finally, validate your setup by generating traffic and simulating failures. For example:

- Inject latency into a microservice and confirm CubeAPM traces highlight the bottleneck.

- Misconfigure an mTLS policy and verify CubeAPM shows failed handshakes.

- Overload a gateway proxy and watch CubeAPM surface resource saturation.

These tests ensure your CubeAPM + Istio integration provides reliable visibility before you rely on it in production.
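For the latency scenario, Istio's fault injection can add delay without touching application code. Here is a sketch with a hypothetical `messaging` service. If the host already has a VirtualService, add the `fault` block to it rather than creating a second one, and remove the fault once the test is done.

```yaml
apiVersion: networking.istio.io/v1
kind: VirtualService
metadata:
  name: messaging-fault-test
  namespace: shop
spec:
  hosts:
  - messaging.shop.svc.cluster.local   # hypothetical service
  http:
  - fault:
      delay:
        percentage:
          value: 50.0                  # delay half of the requests
        fixedDelay: 2s
      abort:
        percentage:
          value: 10.0                  # and fail 10% with a 503
        httpStatus: 503
    route:
    - destination:
        host: messaging.shop.svc.cluster.local
```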

Real-World Example: Istio in a Logistics Platform with CubeAPM

Challenge

A national logistics platform saw parcel ETA breaches spike during evening peaks. Traces showed the order-service → routing-service hop adding 800–1200 ms, even when downstream gRPC handlers were fast. Both services ran with Istio sidecars behind a shared ingress-gateway. SLO at risk: p99 end-to-end request latency ≤ 750 ms.

Investigation

Platform SREs used CubeAPM’s service map to isolate the slow edge, then drilled into correlated traces and Envoy metrics. They noticed:

- Retry anomalies: istio_requests_total{response_code="503",response_flags="UO"} rising with matching spikes in envoy_cluster_upstream_rq_retry.

- Gateway saturation: High downstream_cx_active and rising downstream_cx_destroy_remote_active.

- mTLS fine: Handshake error rate < 0.1%; not the culprit.

- Latency shape: App handler < 100 ms; added time accumulated between sidecar → upstream cluster.

Findings

CubeAPM trace waterfalls and linked Envoy logs revealed retry loops triggered by a misaligned timeout budget: the routing-service had an upstream request timeout = 1s while the retry policy allowed 3 retries with exponential backoff. Under burst load, the first attempt often hit the 1s boundary, causing Envoy to retry twice more and push the hop well past the p99 target. The gateway’s connection pool also ran near capacity, exacerbating queueing delay.

Solution

The team validated the hypothesis by A/B testing traffic through two DestinationRule variants while watching CubeAPM’s p95/p99 and retry counters in real time. The corrected profile:

- Timeout: increased upstream timeout to 2.5s to match backend SLA.

- Retries: reduced to 1 retry, jittered backoff capped at 150 ms, and retry_on: connect-failure, retriable-status-codes only.

- Connection pool: raised max_connections and tuned circuit breaker thresholds on the gateway cluster.
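Here is a sketch of manifests approximating that corrected profile. The names and numbers are hypothetical, and the write-up does not show the team's actual YAML. A DestinationRule's traffic policy applies to every client of the host, including the ingress gateway. The 150 ms backoff cap is not shown here.

```yaml
apiVersion: networking.istio.io/v1
kind: VirtualService
metadata:
  name: routing-service
spec:
  hosts:
  - routing-service
  http:
  - route:
    - destination:
        host: routing-service
    timeout: 2.5s            # overall budget for the request, retries included
    retries:
      attempts: 1            # at most one retry
      # Status codes listed here (503 is an assumption) become retriable codes.
      retryOn: connect-failure,retriable-status-codes,503
---
apiVersion: networking.istio.io/v1
kind: DestinationRule
metadata:
  name: routing-service
spec:
  host: routing-service
  trafficPolicy:
    connectionPool:
      tcp:
        maxConnections: 500           # placeholder; size from downstream_cx_active
      http:
        http1MaxPendingRequests: 200  # placeholder
    outlierDetection:
      consecutive5xxErrors: 5
      interval: 10s
      baseEjectionTime: 30s
```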

Fix

They rolled out updated VirtualService and DestinationRule for the routing service and applied a Gateway patch to expand the connection pool. CubeAPM’s dashboards confirmed:

- envoy_cluster_upstream_rq_retry fell from 12–15% to < 2% of requests.

- Gateway downstream_cx_active stabilized at ~60% of capacity with lower churn.

- Hop latency p99 dropped from ~1.1 s → ~410 ms; end-to-end p99 from ~1.8 s → ~980 ms.

Result

Order-routing hop latency fell by roughly 63%, end-to-end p99 improved by ~46%, and SLA compliance returned above 99.3% during peaks. Incident tickets tied to “routing delays” fell by 62% over the next two sprints.

Verification Checklist & Alert Rules for Istio Monitoring with CubeAPM

Verification Checklist

- Request metrics: Latency percentiles (p50/p90/p99), QPS, and success rates should be visible in CubeAPM dashboards. This ensures you can detect service slowdowns and failed routes across the mesh.

- Service map: CubeAPM’s service graph must show all Istio services, gateways, and dependencies. Missing edges usually indicate incomplete telemetry or uninstrumented workloads.

- Envoy metrics: Sidecar counters like active connections, retries, and timeouts should be ingested properly. These metrics reveal proxy saturation and routing anomalies early.

- mTLS telemetry: Handshake success rates and latency need to be monitored consistently. This confirms that security policies are being enforced in real time across namespaces.

- Logs: Envoy access logs and error logs must flow into CubeAPM for correlation with traces. Without logs, identifying root causes behind retries or policy denials becomes harder.

- Sidecar resources: CPU and memory usage per proxy should be tracked continuously. Frequent pod restarts or high utilization signal mesh overhead or instability.

- Unauthorized attempts: Requests blocked with 401/403 responses should be captured with source/destination info. Spikes often mean intrusion attempts or policy misconfigurations.

- Policy enforcement: Authorization and authentication policies should show >99% enforcement success. Inconsistent rates usually point to misaligned PeerAuthentication or DestinationRules.

- Alerting: Critical alerts must be tested for latency spikes, retry storms, handshake errors, and 5xx bursts. A working alerting setup ensures you can respond before customers notice.

- Notifications: Email, Slack, or PagerDuty channels should deliver alerts in under a minute. End-to-end testing confirms that on-call teams won’t miss urgent Istio incidents.

Alert Rules for Istio Service Mesh Monitoring

Alert on high service latency

It fires when p99 latency for a destination service exceeds 500ms for 5 minutes.

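```yaml
- alert: IstioHighP99Latency
  # reporter="destination" avoids merging client- and server-side histograms.
  expr: histogram_quantile(0.99, sum by (destination_service, le) (rate(istio_request_duration_milliseconds_bucket{reporter="destination"}[5m]))) > 500
  for: 5m
  labels:
    severity: critical
```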

Alert on retry storms

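It approximates a retry storm as the share of requests that client-side proxies tag with retry-related response flags: URX (retry limit exceeded), UR (upstream reset), UO (upstream overflow), or UT (upstream timeout). It fires when that share stays above 10%, often a sign of bad timeout or retry policies.

```yaml
- alert: IstioRetryStorm
  # reporter="source": these upstream flags are set by the calling proxy.
  # The .*(...).* pattern also matches combined flags such as "UO,URX".
  expr: |
    sum by (destination_service) (rate(istio_requests_total{reporter="source", response_flags=~".*(URX|UR|UO|UT).*"}[5m]))
    /
    sum by (destination_service) (rate(istio_requests_total{reporter="source"}[5m])) > 0.10
  for: 10m
  labels:
    severity: warning
```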

Alert on slow mTLS handshakes

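It triggers if p99 mTLS handshake latency rises above 10 ms, indicating security overhead or misconfiguration. Istio’s standard metrics do not include a handshake-duration histogram. The metric istio_mtls_handshake_duration_seconds_bucket below therefore stands in for whichever handshake-latency histogram your pipeline actually exports.

```yaml
- alert: IstioSlowMTLSHandshake
  expr: histogram_quantile(0.99, sum by (le) (rate(istio_mtls_handshake_duration_seconds_bucket[5m]))) > 0.01
  for: 5m
  labels:
    severity: warning
```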
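Alert on 5xx bursts

The verification checklist also calls for alerts on 5xx bursts, which the rules above do not cover. Here is a sketch in the same format. The 5% threshold is a placeholder to tune against your error budget.

```yaml
- alert: Istio5xxBurst
  # Share of 5xx responses per destination service, counted once per request.
  expr: |
    sum by (destination_service) (rate(istio_requests_total{reporter="destination", response_code=~"5.."}[5m]))
    /
    sum by (destination_service) (rate(istio_requests_total{reporter="destination"}[5m])) > 0.05
  for: 5m
  labels:
    severity: critical
```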

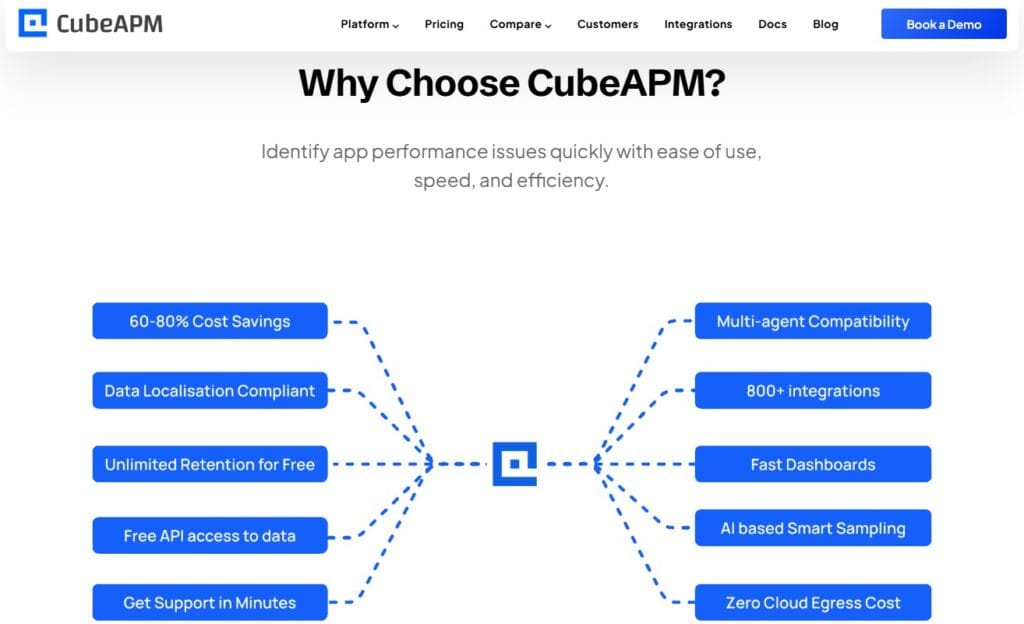

Why Use CubeAPM for Istio Service Mesh Monitoring

Istio service mesh monitoring isn’t just about collecting metrics; it’s about making sense of complex mesh behavior without drowning in cost or noise. This is where CubeAPM is built to stand out.

- Transparent, low-cost pricing: CubeAPM charges $0.15/GB for data ingestion, with infra at $0.02/GB. Compared to Datadog and New Relic, customers save 60–70% at scale. For Istio workloads that generate large volumes of proxy metrics, logs, and traces, predictable pricing is critical.

- Smart Sampling for high-RPS meshes: Istio sidecars generate a flood of traces under heavy load. Unlike probabilistic sampling, CubeAPM’s Smart Sampling keeps the most valuable traces (slow requests, failed handshakes, retry loops) while discarding redundant noise. This means you capture the insights that matter without blowing up your storage or bills.

- OpenTelemetry-native integration: Istio already supports exporting metrics and traces via OTLP. CubeAPM is OTel-first, making it easy to plug Istio’s Envoy sidecars and control plane into CubeAPM without custom agents or vendor lock-in.

- Full MELT observability: CubeAPM unifies Metrics, Events, Logs, and Traces from Istio in one platform. You can trace a request through Envoy, correlate it with a spike in retries, and link it to the exact error log line — all in a single workflow.

- Enterprise-grade compliance & BYOC: For regulated industries running Istio (fintech, healthcare, telecom), CubeAPM offers BYOC/self-hosting so all Istio telemetry stays in your own cloud. This satisfies data residency requirements out of the box.

- Faster support from real engineers: While incumbents often take days to respond, CubeAPM gives direct Slack/WhatsApp access to core engineers with turnaround in minutes, not days. That speed matters when Istio retries or mTLS failures start cascading in production.

- 800+ integrations, Istio included: CubeAPM integrates with Istio, Envoy, Kubernetes, and the wider CNCF ecosystem, so you can enrich mesh telemetry with cluster, infrastructure, and application data for complete context.

In short, Istio’s complexity makes monitoring both critical and expensive. CubeAPM delivers the same (or deeper) visibility at a fraction of the cost, with smarter sampling, OTel-native plumbing, and enterprise compliance — a combination that’s hard to match.

Conclusion

Istio has become a cornerstone of modern Kubernetes platforms, enabling traffic management, zero-trust security, and observability at scale. But without proper monitoring, the mesh itself can become a source of latency, retries, and policy drift, directly impacting reliability and user experience.

CubeAPM makes Istio monitoring simple, efficient, and cost-effective. With OpenTelemetry-native integration, Smart Sampling, and unified MELT observability, it delivers deep visibility into sidecar proxies, traffic flows, and mTLS enforcement — all at 60–70% lower cost than legacy APM tools.

If your business depends on Istio, it deserves observability you can trust. Start monitoring Istio with CubeAPM to gain full visibility, control, and cost savings, or book a free demo.

Disclaimer: The information in this article reflects the latest details available at the time of publication and may change as technologies and products evolve.

FAQs

Q1. How is Istio monitoring different from standard Kubernetes monitoring?

Kubernetes monitoring focuses on node, pod, and cluster health, while Istio monitoring targets service-to-service communication, sidecar proxies, and mesh policies. It’s about ensuring requests, retries, mTLS, and routing rules work reliably at runtime.

Q2. Can Istio monitoring detect misconfigurations in routing or policies?

Yes. With the right telemetry, Istio monitoring surfaces anomalies like excessive retries, 403 rejections, or handshake failures. Tools like CubeAPM can trace these errors back to misconfigured DestinationRules, VirtualServices, or PeerAuthentications.

Q3. Does Istio monitoring add overhead to applications?

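Collecting telemetry adds some overhead of its own, since Envoy generates stats and exports traces. The larger cost comes from the sidecar proxies themselves, which add CPU and memory to every pod. That makes monitoring critical: CubeAPM helps quantify this footprint and provides insights to optimize proxy usage and reduce wasted resources.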

Q4. Can Istio monitoring help in multi-cluster or hybrid cloud setups?

Absolutely. Istio supports multi-cluster meshes, and monitoring ensures traffic routing, mTLS enforcement, and service discovery remain consistent across environments. CubeAPM’s BYOC model lets teams keep telemetry inside their own cloud for compliance.

Q5. What’s the role of OpenTelemetry in Istio monitoring?

OpenTelemetry is the bridge between Istio and observability platforms. By exporting OTLP traces and metrics from Envoy sidecars, teams can feed mesh telemetry directly into CubeAPM, avoiding vendor lock-in and ensuring standardization.