Kubernetes adoption is exploding: over 66% of organizations now run it in production, but monitoring hasn’t kept up. Teams juggle thousands of short-lived pods, noisy microservices, and hybrid nodes, yet still struggle to get real-time visibility.

Without eBPF, monitoring depends on sidecars, agents, or log shippers that bloat CPU/memory usage, miss kernel-level signals, and drive data ingestion bills far higher than infra costs. Blind spots around pod-to-pod latency, syscall errors, and packet drops often surface only after outages.

eBPF solves this by running sandboxed programs inside the Linux kernel that capture syscalls, network flows, and I/O with very low overhead, offering deep, continuous visibility without the noise or cost explosion. Paired with CubeAPM’s Smart Sampling (which cuts telemetry volume by up to 70%) and BYOC/self-hosting for compliance, eBPF-powered monitoring finally becomes scalable and enterprise-ready.

In this article, we’ll break down how eBPF works, the challenges it solves, the key KPIs to track, and a step-by-step guide to setting up eBPF Kubernetes monitoring with CubeAPM.

What Is eBPF, and Why Does It Matter for Kubernetes Monitoring?

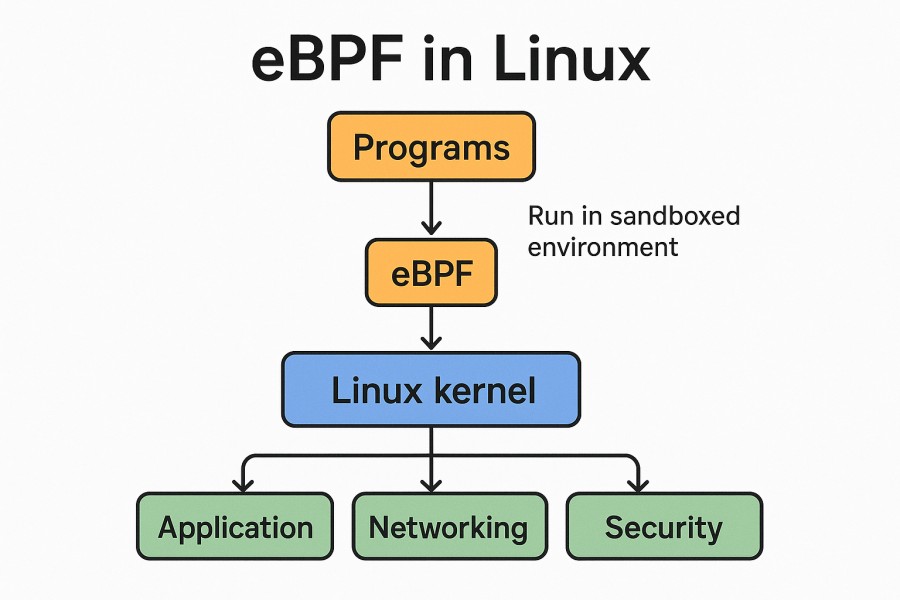

eBPF (extended Berkeley Packet Filter) is a Linux kernel technology that lets you run sandboxed programs directly inside the operating system kernel. Instead of relying on user-space agents or intrusive code instrumentation, eBPF programs attach to hooks on system calls, network packets, and I/O events, delivering telemetry straight from the kernel with very low overhead.

For Kubernetes monitoring, this changes the game. With eBPF, businesses gain deep, real-time insights into cluster activity without slowing down workloads or inflating monitoring costs. Some of the biggest advantages include:

- Kernel-level visibility: Capture pod-to-pod traffic, syscalls, and network drops that traditional sidecars often miss.

- Low overhead: eBPF runs safely in the kernel, avoiding extra CPU/memory drain on already resource-constrained nodes.

- Faster troubleshooting: Detect issues like TCP retransmits, DNS delays, or syscall failures in real time, before they cascade into outages.

- Simplified monitoring stack: No need for heavy sidecars or agents—eBPF reduces infrastructure sprawl and complexity.

- Security + compliance insights: By monitoring kernel activity, eBPF also highlights privilege escalations, anomalous traffic, and system misconfigurations.

Together, these benefits make eBPF an essential building block for observability in today’s large-scale, distributed Kubernetes environments—where even a small blind spot can trigger costly downtime.

Example: Using eBPF to Troubleshoot Network Latency in Kubernetes

Imagine a retail platform running hundreds of services in Kubernetes. Customers report checkout delays, but dashboards show healthy CPU and memory usage. With eBPF, engineers trace network flows at the kernel level and discover a spike in TCP retransmissions between the payment-service pods and the database. This kernel-level visibility pinpoints the root cause—a misconfigured NIC on one node—something sidecar proxies completely missed. By fixing the configuration, latency drops instantly, preventing further revenue loss.

Key Challenges in Kubernetes Monitoring Without eBPF

Even with modern monitoring stacks, most teams still face gaps when relying only on sidecars, agents, or logs. Without eBPF, Kubernetes observability is limited in the following ways:

- Agent Overhead: Sidecars and node agents consume CPU and memory, adding 10–20% resource overhead on busy clusters. At scale, this directly inflates cloud bills.

- Blind Spots in Visibility: Traditional tools rarely capture kernel-level signals like syscall failures, packet drops, or TCP retransmits—problems that often cause unexplained latency or 5xx errors.

- High Data Volume & Cost: Log shippers and metric scrapers flood backends with raw events. According to Gartner (2024), observability data is growing 5× faster than infrastructure costs, making budgets unpredictable.

- Short-Lived Pod Challenges: Containers that live for only seconds often disappear before a pull-based scraper or node agent collects their metrics, leaving gaps in tracing and debugging.

- Compliance & Audit Gaps: Relying on probabilistic sampling or incomplete retention means teams can lose critical event data—impacting audits for GDPR, HIPAA, or India’s DPDP Act.

These challenges mean teams either overspend on monitoring or fly blind during critical outages—both scenarios hurt reliability and business outcomes.

Why eBPF + CubeAPM is the Future of Kubernetes Monitoring

Seamless OpenTelemetry Integration

eBPF telemetry—covering syscalls, network flows, and kernel events—plugs directly into CubeAPM through the OpenTelemetry collector. This eliminates lock-in to proprietary agents and ensures that logs, metrics, traces, and eBPF signals are unified in one workflow, giving teams a complete picture of Kubernetes health (OpenTelemetry).

Smarter Data Reduction with Sampling

Traditional APMs rely on probabilistic sampling that often drops critical error traces. CubeAPM uses Smart Sampling, which prioritizes anomalies such as latency spikes or network retransmits, cutting telemetry volume by up to 70% while preserving valuable insights. This makes eBPF-powered observability sustainable at scale.

Predictable Costs at Scale

Gartner notes that observability data volume is growing 5× faster than infrastructure costs (Gartner 2024). Legacy vendors often charge hidden fees for retention, RUM, or synthetic checks. CubeAPM simplifies this with transparent pricing and no surprise add-ons—paired with eBPF’s efficient collection to keep monitoring spend under control.

Built for Compliance and Localization

Compliance frameworks like GDPR and India’s DPDP Act place strict limits on where personal data is stored and where it can be transferred. eBPF captures low-level telemetry without per-pod sidecars, and CubeAPM extends this with BYOC/self-hosting, keeping data inside your own cloud account and region. This makes it much easier to keep observability compliant by design.

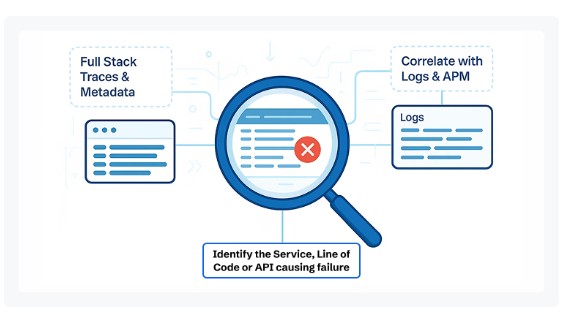

Faster Troubleshooting and MTTR

eBPF surfaces low-level issues—like dropped packets, syscall failures, and pod churn—that traditional tools miss. CubeAPM correlates these with application traces and metrics, helping engineers resolve incidents up to 3× faster (IDC Report on MTTR). The result: shorter outages, higher uptime, and fewer late-night firefights.

eBPF Signals & Key KPIs to Track in Kubernetes

eBPF brings kernel-level visibility into Kubernetes environments, making it possible to track metrics that sidecars or log shippers often miss. Below are the core categories and the most valuable KPIs to monitor with CubeAPM.

Network Reliability & Latency

Networking issues often manifest as slow checkouts, 502 errors, or degraded user experience. eBPF helps capture low-level flow data for early detection.

- Pod-to-Service RTT (p95/p99): Measures round-trip latency between pods and services. Spikes often indicate congestion or faulty service routing. Threshold: p99 > 500 ms sustained for 5 minutes.

- TCP Retransmit Rate (%): Tracks the percentage of retransmitted packets in cluster traffic. High values suggest congestion, MTU mismatches, or flaky NICs. Threshold: retransmit ratio > 2% over a 10-minute window.

- Packet Drops by Reason: Captures drops at the kernel or network layer (e.g., firewall, queue overflow). Helps explain sudden 502/504s missed by L7 monitoring. Threshold: a 3× or greater increase over the 24-hour baseline.
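A non-eBPF cross-check helps when you want to sanity-check what your eBPF probe reports for the TCP Retransmit Rate KPI above. The kernel’s cumulative TCP counters in /proc/net/snmp, diffed over a window, give the same RetransSegs/OutSegs ratio that the node_exporter fallback in the retransmit alert uses. The snapshot values below are made up. /proc/net/snmp is per network namespace, so read it from the host namespace (for example, a hostNetwork pod) to get node-wide numbers.

```python
HEADER = ("Tcp: RtoAlgorithm RtoMin RtoMax MaxConn ActiveOpens PassiveOpens AttemptFails "
          "EstabResets CurrEstab InSegs OutSegs RetransSegs InErrs OutRsts InCsumErrors")
# Hypothetical snapshots taken 10 minutes apart.
SNMP_BEFORE = HEADER + "\nTcp: 1 200 120000 -1 500 300 2 1 40 100000 90000 1000 0 20 0\n"
SNMP_AFTER = HEADER + "\nTcp: 1 200 120000 -1 620 360 3 1 42 110000 100000 1300 0 25 0\n"


def read_tcp_counters(snmp_text: str) -> dict[str, int]:
    """Parse the 'Tcp:' header/value line pair from /proc/net/snmp into a dict."""
    rows = [line.split()[1:] for line in snmp_text.splitlines() if line.startswith("Tcp:")]
    if len(rows) != 2:
        raise ValueError("expected exactly two 'Tcp:' lines (header and values)")
    header, values = rows
    return {name: int(value) for name, value in zip(header, values)}


def retransmit_ratio_pct(before: dict[str, int], after: dict[str, int]) -> float:
    """RetransSegs as a percentage of OutSegs over the interval between two snapshots."""
    retrans = after["RetransSegs"] - before["RetransSegs"]
    sent = after["OutSegs"] - before["OutSegs"]
    return 100.0 * retrans / sent if sent > 0 else 0.0  # an idle window counts as 0%


THRESHOLD_PCT = 2.0  # KPI threshold: > 2% over a 10-minute window

ratio = retransmit_ratio_pct(read_tcp_counters(SNMP_BEFORE), read_tcp_counters(SNMP_AFTER))
print(f"retransmit ratio: {ratio:.2f}% (breach: {ratio > THRESHOLD_PCT})")
# On a real node, take both snapshots with Path("/proc/net/snmp").read_text() (from pathlib).
```

With these sample values, 300 retransmitted segments out of 10,000 sent gives 3.00%, which breaches the 2% threshold.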

DNS & Connectivity

DNS issues are a silent killer in Kubernetes clusters, leading to hidden latency and intermittent failures.

- DNS Resolution Latency (p95): Tracks the time to resolve service names. Elevated values often mean overloaded kube-dns or CoreDNS pods. Threshold: p95 > 100 ms for 10 minutes.

- DNS Error Rate (SERVFAIL/NXDOMAIN): Measures the proportion of failed lookups. Spikes can stall service discovery and cause cascading timeouts. Threshold: error rate > 1% for 5 minutes.
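Both DNS KPIs can be evaluated over one window of lookups, as in the sketch below. It assumes your eBPF probe gives you per-lookup latency and the response code. The `DnsLookup` type and the sample window are hypothetical, and the percentile is the simple nearest-rank definition.

```python
import math
from dataclasses import dataclass


@dataclass
class DnsLookup:
    latency_ms: float
    rcode: str  # DNS response code name, e.g. "NOERROR", "NXDOMAIN", "SERVFAIL"


def percentile(values: list[float], pct: int) -> float:
    """Nearest-rank percentile: the smallest value with at least pct% of samples at or below it."""
    ordered = sorted(values)
    rank = max(1, math.ceil(pct * len(ordered) / 100))
    return ordered[rank - 1]


def dns_health(lookups: list[DnsLookup], count_nxdomain: bool = False,
               p95_limit_ms: float = 100.0, error_limit_pct: float = 1.0) -> dict:
    """Check one window against the p95 latency and error-rate thresholds."""
    if not lookups:
        raise ValueError("no lookups in window")
    failing = {"SERVFAIL"} | ({"NXDOMAIN"} if count_nxdomain else set())
    errors = sum(1 for lookup in lookups if lookup.rcode in failing)
    error_pct = 100.0 * errors / len(lookups)
    p95 = percentile([lookup.latency_ms for lookup in lookups], 95)
    return {
        "p95_ms": p95,
        "error_pct": error_pct,
        "latency_breach": p95 > p95_limit_ms,
        "error_breach": error_pct > error_limit_pct,
    }


window = ([DnsLookup(10.0, "NOERROR")] * 95
          + [DnsLookup(250.0, "SERVFAIL")] * 3
          + [DnsLookup(5.0, "NXDOMAIN")] * 2)
print(dns_health(window))
```

NXDOMAIN is excluded by default here for a reason. With the Kubernetes default of `ndots:5` in a pod’s resolv.conf, lookups for external names walk the cluster search domains first and get routine NXDOMAIN answers. Counting those toward a 1% threshold can keep the alert firing constantly.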

Kernel & Syscall Health

Syscalls are the bridge between applications and the OS. Monitoring failures here surfaces problems invisible to traditional APM tools.

- Failed Syscalls by Errno: Detects errors like ETIMEDOUT, ECONNREFUSED, or EADDRINUSE. Useful for tracing app errors back to system-level failures. Threshold: > 50 failures/minute per container.

- File I/O Latency (p95 reads/writes): Captures how quickly files are read or written by pods. Helps identify node or disk bottlenecks. Threshold: read/write p95 > 20 ms sustained for 10 minutes.
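The sketch below covers both KPIs in this group, under stated assumptions. For syscall failures, it assumes events arrive as hypothetical `(container, minute, errno_number)` tuples and counts them per container per minute against the 50/minute threshold. For file I/O latency, it reflects how eBPF tools such as BCC’s biolatency work: they usually aggregate latencies in the kernel into power-of-two histograms instead of exporting every event. The sketch assumes slot `k` covers [2^k, 2^(k+1)) microseconds, so check how your tool defines its slots.

```python
import errno
import math
from collections import Counter


def errno_breaches(events, limit_per_min: int = 50) -> dict:
    """events: (container, minute, errno_number) tuples for failed syscalls.
    Returns {(container, minute): Counter of errno names} for buckets above the limit."""
    buckets: dict = {}
    for container, minute, err in events:
        name = errno.errorcode.get(err, str(err))  # e.g. 'ECONNREFUSED'
        buckets.setdefault((container, minute), Counter())[name] += 1
    return {key: counts for key, counts in buckets.items() if sum(counts.values()) > limit_per_min}


def log2_hist_percentile_us(slots: dict[int, int], pct: int) -> int:
    """slots maps k -> count of samples in [2**k, 2**(k+1)) microseconds.
    Returns the upper edge of the bucket containing the pct-th percentile."""
    if not 0 < pct <= 100:
        raise ValueError("pct must be in (0, 100]")
    total = sum(slots.values())
    if total == 0:
        raise ValueError("empty histogram")
    target = math.ceil(pct * total / 100)
    running = 0
    for k in sorted(slots):
        running += slots[k]
        if running >= target:
            return 2 ** (k + 1)
    return 2 ** (max(slots) + 1)  # not reached: running always ends at total >= target


# Hypothetical minute of events: 60 ECONNREFUSED failures in one container, 5 ETIMEDOUT in another.
events = [("checkout", 0, errno.ECONNREFUSED)] * 60 + [("cart", 0, errno.ETIMEDOUT)] * 5
print(errno_breaches(events))

# Hypothetical read-latency histogram: 90 reads of ~1-2 ms (slot 10), 10 reads of ~16-33 ms (slot 14).
p95_us = log2_hist_percentile_us({10: 90, 14: 10}, 95)
print(p95_us / 1000, "ms; breach:", p95_us / 1000 > 20)
```

Reporting the bucket’s upper edge is deliberately conservative. It can overstate the true p95 by up to 2×, which errs toward alerting rather than missing a slow disk.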

Resource Pressure (cgroup/Node Signals)

eBPF exposes signals from the kernel scheduler and cgroups that show real resource contention, not just usage.

- CPU Throttling: Monitors how often cgroup CPU limits (CFS quota) throttle a container, both as throttled seconds and as the share of scheduling periods that were throttled. High throttling often explains p99 latency spikes. Threshold: throttled-period ratio > 10% over 5 minutes.

- OOM-Kill Count: Tracks kernel-triggered Out-of-Memory kills. Useful for catching root causes of CrashLoopBackOff errors. Threshold: any increase > 0 in the last 5 minutes.

- Run Queue Delay (sched latency): Shows the time processes wait before being scheduled on the CPU. Confirms noisy neighbor or node contention. Threshold: run queue delay p95 > 10 ms.
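The CPU throttling and OOM-kill KPIs can be cross-checked against the cgroup v2 files the kernel maintains for each container. This is plain file reading, not eBPF. The throttled-period ratio here is the same one cAdvisor exposes as container_cpu_cfs_throttled_periods_total / container_cpu_cfs_periods_total. The snapshot contents are hypothetical, and the cgroup directory path depends on your container runtime and cgroup driver.

```python
def parse_flat_keyed(text: str) -> dict[str, int]:
    """Parse a cgroup v2 flat-keyed file such as cpu.stat or memory.events ("key value" per line)."""
    pairs = (line.split() for line in text.splitlines() if line.strip())
    return {key: int(value) for key, value in pairs}


def throttled_ratio_pct(before: dict[str, int], after: dict[str, int]) -> float:
    """Share of CFS periods in which the cgroup was throttled between two cpu.stat snapshots."""
    periods = after["nr_periods"] - before["nr_periods"]
    throttled = after["nr_throttled"] - before["nr_throttled"]
    return 100.0 * throttled / periods if periods > 0 else 0.0


def throttled_seconds(before: dict[str, int], after: dict[str, int]) -> float:
    """Time spent throttled between two cpu.stat snapshots (throttled_usec is in microseconds)."""
    return (after["throttled_usec"] - before["throttled_usec"]) / 1_000_000


def new_oom_kills(before: dict[str, int], after: dict[str, int]) -> int:
    """Increase in the memory.events oom_kill counter between two snapshots."""
    return after.get("oom_kill", 0) - before.get("oom_kill", 0)


# Hypothetical snapshots taken 5 minutes apart from a container's directory under /sys/fs/cgroup.
cpu_t0 = parse_flat_keyed("usage_usec 9000000\nnr_periods 1000\nnr_throttled 50\nthrottled_usec 200000\n")
cpu_t1 = parse_flat_keyed("usage_usec 9900000\nnr_periods 4000\nnr_throttled 500\nthrottled_usec 2600000\n")
mem_t0 = parse_flat_keyed("low 0\nhigh 0\nmax 12\noom 2\noom_kill 2\n")
mem_t1 = parse_flat_keyed("low 0\nhigh 0\nmax 15\noom 3\noom_kill 3\n")

ratio = throttled_ratio_pct(cpu_t0, cpu_t1)
print(f"throttled periods: {ratio:.1f}% (breach: {ratio > 10})")
print(f"throttled time: {throttled_seconds(cpu_t0, cpu_t1):.1f}s, new OOM kills: {new_oom_kills(mem_t0, mem_t1)}")
```

Tracking both throttled seconds and the period ratio is useful. The ratio shows how often a container hits its quota, while the seconds show how much work was delayed.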

Security-Relevant Operations

Security anomalies often overlap with reliability issues—like runaway retries hammering the network.

- Unexpected Outbound Fan-Out: Monitors excessive outbound connections to many distinct IP ranges. Often a sign of compromised pods or retry storms. Threshold: connections to > 200 unique /24 subnets in 5 minutes.

- Exec/Privilege Escalation Anomalies: Detects execve spikes or unusual capability changes inside containers. Helps catch misconfigurations or breaches. Threshold: 3× increase compared to the weekly baseline.
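The fan-out threshold counts distinct /24 subnets, not distinct hosts. The sketch below shows why that matters: a pod talking to hundreds of hosts in one subnet is not flagged. The `(pod, unix_ts, dest_ip)` connection format is a hypothetical shape for outbound-connect events from an eBPF probe. The sketch only buckets IPv4, and in practice you would likely exclude your own cluster CIDRs before counting.

```python
import ipaddress
from collections import defaultdict


def fanout_offenders(connections, now: float, window_s: int = 300, limit: int = 200) -> dict[str, int]:
    """connections: (pod, unix_ts, dest_ip) tuples for outbound connects.
    Returns {pod: unique IPv4 /24 count} for pods above `limit` within the window."""
    subnets = defaultdict(set)
    for pod, ts, dest in connections:
        if not (now - window_s <= ts <= now):
            continue  # outside the 5-minute window
        addr = ipaddress.ip_address(dest)
        if addr.version == 4:  # this sketch only buckets IPv4 into /24s
            subnets[pod].add(ipaddress.ip_network(f"{addr}/24", strict=False))
    return {pod: len(nets) for pod, nets in subnets.items() if len(nets) > limit}


now = 1_700_000_000
conns = [("worker-7", now - 10, f"203.0.{i}.9") for i in range(250)]   # 250 distinct /24s
conns += [("api-1", now - 10, f"10.1.0.{i}") for i in range(1, 250)]  # many hosts, one /24
conns += [("worker-7", now - 900, "198.51.100.1")]                   # too old to count
print(fanout_offenders(conns, now))
```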

Kubernetes-Aware Correlations

These metrics tie eBPF signals directly to Kubernetes objects for context-aware observability.

- NetNS/Pod Churn Rate: Measures how often network namespaces (typically one per pod) and pods are created and deleted. High churn destabilizes DNS and connection pools. Threshold: churn > 2× hourly baseline.

- Service-to-Service Error Map: Correlates upstream 5xx errors with downstream syscall or network anomalies. Critical for tracing cascading failures. Threshold: upstream error rate > 1% with concurrent downstream ETIMEDOUT spikes.
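The Service-to-Service Error Map rule only fires when two conditions hold at once, and the sketch below shows that correlation for a single time window. The article doesn’t define what counts as an ETIMEDOUT "spike", so this sketch assumes more than 3× the edge’s usual count. The `EdgeWindow` fields and sample edges are hypothetical.

```python
from dataclasses import dataclass


@dataclass
class EdgeWindow:
    upstream: str
    downstream: str
    requests: int
    upstream_5xx: int
    etimedout: int             # downstream ETIMEDOUT syscall failures in this window
    etimedout_baseline: float  # typical ETIMEDOUT count per window for this edge


def cascading_edges(edges, error_pct_limit: float = 1.0, spike_factor: float = 3.0):
    """Flag edges where upstream 5xx exceeds the limit AND downstream ETIMEDOUT spikes at the same time."""
    flagged = []
    for edge in edges:
        error_pct = 100.0 * edge.upstream_5xx / edge.requests if edge.requests else 0.0
        # Floor the baseline at 1 so an edge that normally sees zero timeouts isn't flagged for one or two.
        spiking = edge.etimedout > spike_factor * max(edge.etimedout_baseline, 1.0)
        if error_pct > error_pct_limit and spiking:
            flagged.append((edge.upstream, edge.downstream, error_pct))
    return flagged


window = [
    EdgeWindow("checkout", "payments", 1000, 25, 40, 5.0),  # errors and a timeout spike
    EdgeWindow("checkout", "catalog", 1000, 30, 4, 5.0),    # errors, but no timeout spike
    EdgeWindow("gateway", "checkout", 1000, 5, 60, 5.0),    # timeouts, but errors under 1%
]
print(cascading_edges(window))
```

Requiring both conditions keeps the map focused on edges where a kernel-level symptom lines up with user-visible errors, rather than on noisy edges that show only one or the other.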

How to Monitor Kubernetes with eBPF and CubeAPM (Step-by-Step)

Step 1: Deploy eBPF Agents in Your Kubernetes Cluster

To start, deploy eBPF-based probes such as Cilium or BCC tools as a DaemonSet across your Kubernetes nodes. These agents are compatible with GKE, EKS, and AKS, ensuring smooth deployment on managed clusters. For setup, follow the CubeAPM Kubernetes installation guide.

Example command to apply the DaemonSet manifest (one-liner for illustration):

```shell
kubectl apply -f https://docs.cubeapm.com/examples/ebpf-daemonset.yaml
```

This deploys lightweight probes on each node to capture system calls, network flows, and kernel-level telemetry for CubeAPM.

Step 2: Connect eBPF Streams to CubeAPM

Next, configure the OpenTelemetry (OTLP) collector to forward eBPF metrics and traces into CubeAPM. This ensures all kernel-level telemetry is streamed without vendor lock-in. Refer to the CubeAPM OpenTelemetry instrumentation guide for collector setup.

Example collector snippet for eBPF metrics:

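```yaml
receivers:
  otlp:
    protocols:
      grpc:
        endpoint: 0.0.0.0:4317  # listen on all interfaces so agents in other pods can connect
      http:
        endpoint: 0.0.0.0:4318

exporters:
  # Assumption: CubeAPM accepts OTLP over HTTP. "otlphttp/cubeapm" is the standard otlphttp
  # exporter with an instance name; check the CubeAPM docs for the exact endpoint and auth headers.
  otlphttp/cubeapm:
    endpoint: https://<your-cubeapm-endpoint>

service:
  pipelines:
    metrics:
      receivers: [otlp]
      exporters: [otlphttp/cubeapm]
    traces:  # forward eBPF-derived spans as well as metrics
      receivers: [otlp]
      exporters: [otlphttp/cubeapm]
```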

This configuration ensures eBPF flows (latency, retransmits, syscalls) are ingested in CubeAPM alongside logs, traces, and metrics.
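Before checking dashboards, it can help to push one synthetic data point through the collector and then look for it in CubeAPM. The sketch below builds a minimal OTLP metrics request in the OTLP/HTTP JSON encoding and POSTs it to the collector’s http receiver on its default port 4318 and path /v1/metrics. The service and metric names are made up. It assumes you can reach the collector locally, for example with kubectl port-forward.

```python
import json
import time
import urllib.request


def gauge_payload(service: str, metric: str, value: float, unit: str = "1") -> dict:
    """Minimal OTLP metrics request (JSON encoding) carrying one gauge data point."""
    return {
        "resourceMetrics": [{
            "resource": {"attributes": [
                {"key": "service.name", "value": {"stringValue": service}},
            ]},
            "scopeMetrics": [{
                "scope": {"name": "otlp-smoke-test"},
                "metrics": [{
                    "name": metric,
                    "unit": unit,
                    "gauge": {"dataPoints": [{
                        "asDouble": value,
                        # the protobuf JSON mapping encodes 64-bit integers as strings
                        "timeUnixNano": str(time.time_ns()),
                    }]},
                }],
            }],
        }],
    }


def post_otlp(payload: dict, url: str = "http://localhost:4318/v1/metrics", timeout: float = 5.0) -> int:
    """POST the payload to an OTLP/HTTP receiver and return the HTTP status code."""
    request = urllib.request.Request(
        url,
        data=json.dumps(payload).encode("utf-8"),
        headers={"Content-Type": "application/json"},
        method="POST",
    )
    # urlopen raises HTTPError for non-2xx responses, so a returned status is a success code.
    with urllib.request.urlopen(request, timeout=timeout) as response:
        return response.status


# Usage (needs a reachable collector):
#   status = post_otlp(gauge_payload("otlp-smoke-test", "smoke_test.heartbeat", 1.0))
#   print(status)
```

If the POST succeeds but the metric never shows up in CubeAPM, the problem is most likely on the exporter side of the collector config rather than in the eBPF agents.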

Step 3: Visualize Data in CubeAPM Dashboards

Once data is flowing, CubeAPM automatically maps it into prebuilt dashboards for Kubernetes. You can view pod restarts, syscall failures, and network latency spikes segmented by namespace or workload. Explore the infrastructure monitoring dashboards and log views for correlated troubleshooting.

For example, a spike in TCP retransmits will appear in the network dashboard, linked to the exact payment-service pods experiencing errors. This shortens root-cause analysis from hours to minutes.

Step 4: Set Smart Alerts

Finally, configure alerts to notify teams of anomalies detected via eBPF. CubeAPM supports flexible alerting pipelines, including email, Slack, and webhook integrations. Full setup instructions are available in the CubeAPM alerting documentation.

Example alert rules:

- Pod restarts > 5/min → catches unstable deployments early.

- Network latency > 500 ms between namespaces → prevents customer-facing slowdowns.

- Critical syscall error rate > 2% → detects systemic OS-level issues before they escalate.

With these steps, teams gain a complete, low-overhead monitoring pipeline powered by eBPF and CubeAPM.

Real-World Example: Checkout Failures in E-Commerce

Challenge: Customers Experiencing 502 Errors at Checkout

During a seasonal sale, a retail platform running on Kubernetes faced a surge of 502 errors during checkout. Standard dashboards showed healthy CPU and memory usage, leaving engineers blind to the root cause. Customers abandoned carts, directly impacting revenue.

Solution: eBPF Tracing with CubeAPM

By deploying eBPF probes and forwarding telemetry into CubeAPM, engineers traced network flows and syscalls at the kernel level. CubeAPM dashboards revealed a surge in TCP retransmits and dropped packets between the API gateway and the payment-service pods—issues invisible to sidecar-based monitoring.

Fixes: Network & Configuration Optimization

Engineers adjusted TCP settings, patched kube-proxy on affected nodes, and reconfigured the payment API integration. With CubeAPM’s correlated dashboards, they validated improvements in real time, confirming a drop in retransmit rates and syscall errors.

Result: Fewer Failures, Higher Conversions

Within hours, checkout failures dropped by 40%, restoring user trust and preventing further revenue loss. By surfacing kernel-level anomalies through CubeAPM’s eBPF integration, the team reduced MTTR significantly and built confidence in handling peak traffic events.

Benefits of Using CubeAPM for eBPF Kubernetes Monitoring

Unified Observability Across Signals

CubeAPM brings logs, metrics, traces, and eBPF signals into a single platform. Instead of juggling multiple tools, teams get a correlated view of infrastructure and applications—reducing context switching and speeding up investigations.

Smarter Data Control with Sampling

Unlike generic sampling, CubeAPM applies Smart Sampling that keeps critical anomalies (errors, latency spikes, retransmits) while cutting routine noise. This allows enterprises to capture what matters most, while reducing overall telemetry volume by up to 70%.

Flexible Deployment Options

Compliance-heavy industries need control over data location. CubeAPM supports SaaS, BYOC, and full self-hosting, allowing businesses to store observability data inside their own cloud or region for GDPR, HIPAA, and DPDP compliance.

Transparent & Predictable Costs

Legacy vendors often add charges for RUM, synthetic checks, or retention. CubeAPM provides transparent, usage-based pricing without hidden add-ons. When combined with eBPF’s efficient data collection, monitoring costs stay predictable even as clusters grow.

Faster Troubleshooting and Reduced MTTR

By correlating eBPF kernel-level telemetry with application traces, CubeAPM helps engineers pinpoint root causes in minutes. Teams can move from symptoms (502 errors, latency) to solutions (network tuning, syscall fixes) up to 3× faster, improving reliability and user experience.

Verification Checklist & Example Alerts for eBPF Kubernetes Monitoring with CubeAPM

Before declaring your eBPF monitoring setup production-ready, it’s important to verify that probes, pipelines, and dashboards are functioning as expected.

Verification Checklist

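- eBPF Probes Running: Confirm that the DaemonSet has a ready pod on every node and that each one is reporting (for example, kubectl get daemonset -n kube-system and kubectl get pods -n kube-system -o wide, substituting the namespace your manifest actually uses).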

- OTLP Pipeline Connected: Check that the OpenTelemetry collector is forwarding metrics and traces into CubeAPM without errors.

- Data Visible in Dashboards: Validate that CubeAPM dashboards (e.g., infrastructure monitoring) are showing network latency, pod churn, and syscall errors in real time.

- Logs Flowing: Ensure system-level events and dropped packet logs are captured and visible in CubeAPM log monitoring.
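The first checklist item can be scripted. The sketch below reads the DaemonSet with kubectl and compares its standard status counters (desiredNumberScheduled, currentNumberScheduled, numberReady, updatedNumberScheduled, numberAvailable) with the number of nodes it should cover. The DaemonSet name and namespace in the usage line are hypothetical, since the manifest itself isn’t shown here.

```python
import json
import subprocess


def daemonset_problems(ds: dict) -> list[str]:
    """Compare a DaemonSet's status counters with desiredNumberScheduled."""
    status = ds.get("status", {})
    desired = status.get("desiredNumberScheduled", 0)
    if desired == 0:
        return ["desiredNumberScheduled=0: the DaemonSet targets no nodes (check nodeSelector/tolerations)"]
    problems = []
    for field in ("currentNumberScheduled", "numberReady", "updatedNumberScheduled", "numberAvailable"):
        actual = status.get(field, 0)  # some counters (e.g. numberAvailable) are omitted when zero
        if actual < desired:
            problems.append(f"{field}={actual} < desiredNumberScheduled={desired}")
    return problems


def fetch_daemonset(name: str, namespace: str) -> dict:
    """Read the DaemonSet object with kubectl (requires kubectl and cluster access)."""
    result = subprocess.run(
        ["kubectl", "get", "daemonset", name, "-n", namespace, "-o", "json"],
        check=True, capture_output=True, text=True,
    )
    return json.loads(result.stdout)


# Usage, with a hypothetical DaemonSet name and namespace:
#   print(daemonset_problems(fetch_daemonset("ebpf-agent", "kube-system")) or "all nodes covered")
```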

Example Alerts in CubeAPM

Set a few baseline alerts to catch critical anomalies surfaced by eBPF. These can be configured directly in CubeAPM alerting:

1. OOM-Kill Events > 0 in 5m

Catches pods terminated by the kernel due to memory pressure—often the hidden root cause behind CrashLoopBackOff and tail-latency spikes. Route this alert to your on-call channel via CubeAPM’s alerting setup.

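```yaml
groups:
  - name: cubeapm-ebpf.rules
    rules:
      - alert: KubernetesOOMKills
        # kube-state-metrics: the container restarted in the last 5m and its last termination was an OOM kill.
        # (last_terminated_reason is a 0/1 gauge, so increase() on it alone misses repeated OOM kills.)
        expr: |
          increase(kube_pod_container_status_restarts_total[5m]) > 0
          and on (namespace, pod, container)
          kube_pod_container_status_last_terminated_reason{reason="OOMKilled"} == 1
        for: 2m
        labels:
          severity: critical
          service: kubernetes
          product: cubeapm
        annotations:
          summary: "OOM kill detected in {{ $labels.namespace }}/{{ $labels.pod }}"
          description: "One or more containers were OOM-killed in the last 5 minutes. Check memory requests/limits, leaks, and node pressure; correlate with eBPF cgroup pressure and OOM events."
          runbook_url: "https://docs.cubeapm.com/infra-monitoring"
```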

2. TCP Retransmit Rate > 3% for 10m

Surfaces network congestion, MTU mismatches, or flaky NICs that degrade service reliability. Correlate with namespace-level latency in CubeAPM dashboards:

```yaml
groups:
  - name: cubeapm-ebpf.rules
    rules:
      - alert: HighTCPRetransmitRate
        # ebpf_tcp_* names depend on your eBPF exporter; rename them to match what it emits.
        # clamp_min keeps the denominator at >= 1 packet/s so idle pods don't divide by zero.
        expr: |
          (
            sum by (namespace, pod) (rate(ebpf_tcp_retransmits_total[10m]))
            /
            clamp_min(sum by (namespace, pod) (rate(ebpf_tcp_packets_total[10m])), 1)
          ) * 100 > 3
        for: 10m
        labels:
          severity: warning
          service: network
          product: cubeapm
        annotations:
          summary: "High TCP retransmit rate (>3%) in {{ $labels.namespace }}/{{ $labels.pod }}"
          description: "eBPF indicates excessive retransmissions. Investigate congestion, MTU/PMTU issues, or NIC/driver problems; correlate with p95/p99 RTT and packet drops."
          note: "If ebpf_* metrics are unavailable, fallback: rate(node_netstat_Tcp_RetransSegs[10m]) / rate(node_netstat_Tcp_OutSegs[10m]) * 100"
```

3. Syscall Error Count > 100/minute per pod

Highlights systemic kernel-level failures (e.g., ETIMEDOUT, ECONNREFUSED) that often precede 5xx spikes and retry storms. Tie alert firings to application traces (see the OpenTelemetry setup guide) to speed up RCA:

```yaml
groups:
  - name: cubeapm-ebpf.rules
    rules:
      - alert: SyscallErrorBurst
        # rate() returns errors per second, so multiply by 60 to compare with a per-minute threshold.
        # ebpf_syscall_errors_total is a placeholder; use the metric name your eBPF exporter emits.
        expr: sum by (namespace, pod, errno) (rate(ebpf_syscall_errors_total[5m])) * 60 > 100
        for: 5m
        labels:
          severity: warning
          service: kernel
          product: cubeapm
        annotations:
          summary: "Syscall error burst in {{ $labels.namespace }}/{{ $labels.pod }} (errno={{ $labels.errno }})"
          description: "Elevated kernel syscall failures can cascade into timeouts and 5xx. Inspect downstream deps, DNS latency, and CPU throttling; validate fixes in CubeAPM dashboards."
          threshold: ">100 errors/min for a single errno in one pod, sustained for 5m"
```

With this checklist and a few critical alerts, teams can ensure their eBPF-powered monitoring pipeline is both reliable and actionable from day one.

Conclusion: Why eBPF Kubernetes Monitoring with CubeAPM Matters

eBPF turns Kubernetes monitoring from guesswork into clarity. By observing syscalls, network flows, and I/O in the Linux kernel, teams get real-time, low-overhead visibility into the issues that truly break customer journeys—packet drops, DNS stalls, OOM kills, and noisy-neighbor contention.

CubeAPM makes this power practical: OpenTelemetry-native ingestion, Smart Sampling that preserves high-value anomalies, and correlated dashboards that connect kernel signals to application traces, logs, and infrastructure—available via SaaS, BYOC, or self-hosted for strict data residency and compliance.

If you’re ready to reduce blind spots, shrink MTTR, and keep costs predictable, adopt eBPF-powered Kubernetes monitoring with CubeAPM. Book a demo to see eBPF observability in action.

Disclaimer: The information in this article reflects the latest details available at the time of publication and may change as technologies and products evolve.

FAQs

1. Will eBPF work on managed Kubernetes (EKS/GKE/AKS) and do I need special kernel settings?

Yes, modern managed node images ship with BPF enabled. You typically deploy a privileged DaemonSet per node; just ensure required BPF helpers/capabilities aren’t restricted by your image or policy.
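Node-level prerequisites can be checked quickly with the sketch below. It reports the kernel release and whether the kernel exposes BTF type information at /sys/kernel/btf/vmlinux, which most CO-RE (compile once, run everywhere) eBPF tools rely on. The 5.8 minimum is an assumption (5.8 is the release that added CAP_BPF and the BPF ring buffer); your collector’s documented minimum is what actually counts. Containers share the node’s kernel, so the release string is the node’s. In a `kubectl debug node/<name>` pod, the host filesystem is mounted at /host, so pass btf_path="/host/sys/kernel/btf/vmlinux".

```python
import platform
import re
from pathlib import Path


def kernel_version(release: str) -> tuple[int, int]:
    """Extract (major, minor) from a release string such as '5.15.0-1045-aws'."""
    match = re.match(r"(\d+)\.(\d+)", release)
    if match is None:
        raise ValueError(f"unrecognized kernel release: {release!r}")
    return int(match.group(1)), int(match.group(2))


def ebpf_node_check(release: str = "", btf_path: str = "/sys/kernel/btf/vmlinux",
                    min_version: tuple[int, int] = (5, 8)) -> dict:
    """Report whether the kernel meets an assumed minimum version and exposes BTF type info."""
    release = release or platform.release()  # inside a container this is still the node's kernel
    return {
        "kernel": release,
        "kernel_ok": kernel_version(release) >= min_version,
        "btf_present": Path(btf_path).exists(),
    }


# Example with an explicit release string; on a node, call ebpf_node_check() with no arguments.
print(ebpf_node_check("5.15.0-1045-aws"))
```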

2. Is eBPF safe and what’s the runtime overhead?

eBPF programs are verified before loading, preventing unsafe memory access. Overhead is low because collection happens in-kernel; keep programs scoped and sample high-volume paths to stay lightweight.

3. Can eBPF replace my service mesh or sidecar agents?

It replaces much L3/L4 visibility and reduces sidecar overhead, but not mesh L7 features like mTLS, retries, or traffic shaping. Use eBPF to complement the mesh and trim sidecars where you don’t need L7 policy.

4. What permissions does the DaemonSet need, and how do I keep it compliant?

Most collectors run privileged to attach to kernel hooks. Restrict via node selectors/Pod Security, tag data by cluster/namespace, and use CubeAPM BYOC/self-hosting to keep data in-region for compliance.

5. How do I roll this out safely across multiple clusters?

Canary on a small pool, baseline for a week, then roll dev → staging → prod. Version your OTEL configs, label events (cluster, namespace, workload), and use CubeAPM dashboards/RBAC to segment teams.