Monitoring Envoy Proxy is essential for reliable, low-latency microservices. The observability tools market is projected to grow from US$3.2 billion in 2024 to US$9.2 billion by 2034, reflecting rising demand for unified monitoring. Because Envoy often runs at the ingress edge, as a sidecar, or as an API gateway, monitoring it lets you detect latency spikes, error floods, and unhealthy clusters.

Teams face several challenges with Envoy Proxy monitoring: fragmented metrics, siloed logs, and brittle tracing. Under high load, retry storms, TLS handshake failures, and upstream endpoint issues can erupt unnoticed without proper instrumentation, leading to SLA breaches and downtime.

CubeAPM is the best solution for monitoring Envoy Proxy. It unifies metrics, logs, and traces with an OpenTelemetry-native approach, enabling deep visibility into Envoy’s performance while offering predictable pricing and Smart Sampling for scale.

In this article, we’ll cover what Envoy Proxy is, why monitoring matters, key metrics, and how CubeAPM helps.

Table of Contents

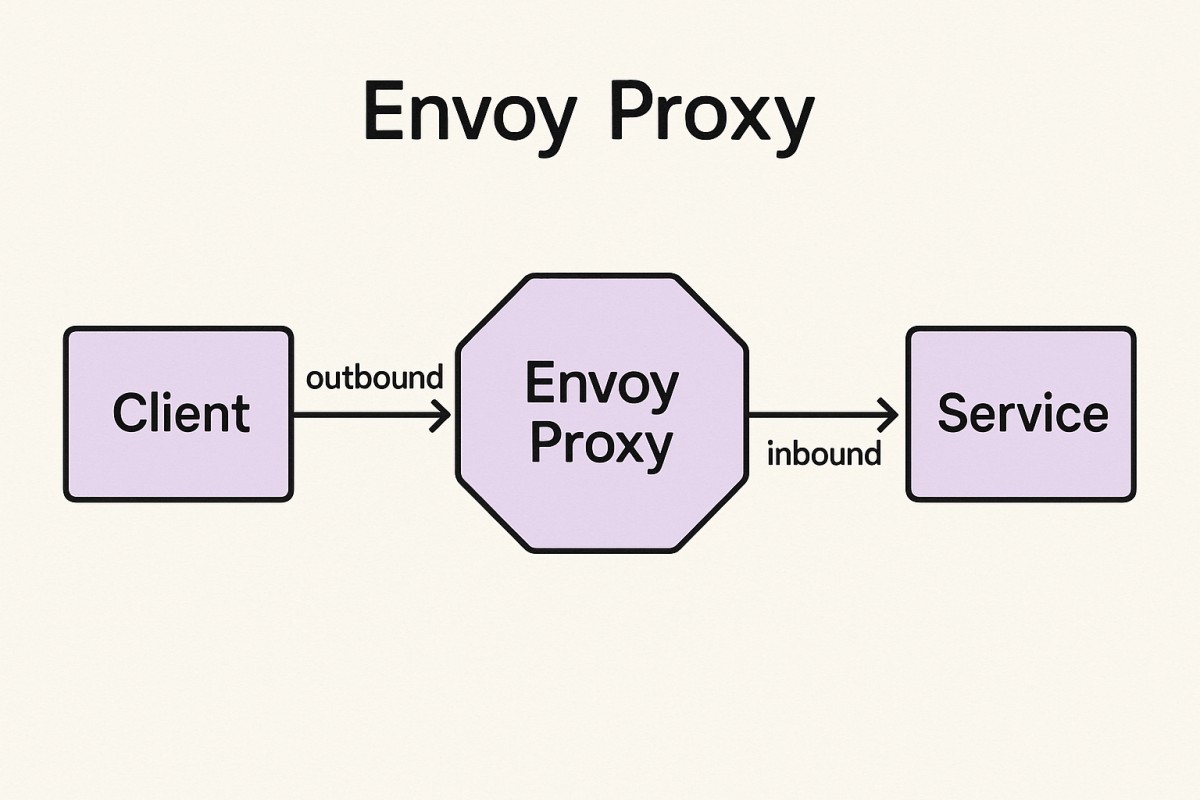

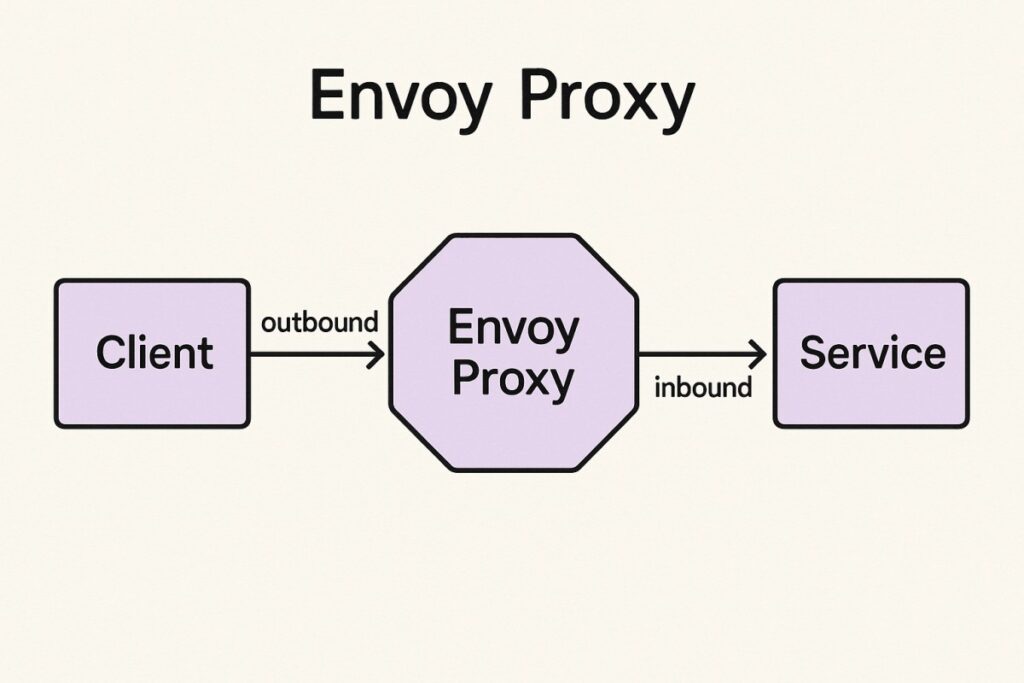

What is Envoy Proxy?

Envoy Proxy is an open-source, high-performance edge and service proxy designed for modern cloud-native applications. Originally developed at Lyft and now a CNCF graduated project, Envoy acts as a universal data plane, handling service discovery, load balancing, traffic routing, observability, and security across microservices. It is lightweight, extensible, and built to integrate with distributed systems like Kubernetes and service meshes such as Istio and Consul.

For businesses, Envoy provides a reliable way to manage and secure traffic across highly dynamic environments. Its key benefits include:

- Service mesh backbone: Powers sidecar architectures, enabling zero-trust networking and consistent observability across services.

- Advanced traffic control: Features like retries, circuit breaking, and rate limiting help maintain reliability during failures.

- Security at scale: Native support for TLS termination, mTLS between services, and policy enforcement protects sensitive traffic.

- Deep observability: Built-in support for tracing and detailed stats makes it easier to monitor performance and diagnose issues.

Example: Using Envoy as an API Gateway for FinTech Services

A digital payments company deploys Envoy as its API gateway to handle millions of daily transactions. By leveraging Envoy’s advanced load balancing and TLS termination, they can ensure low-latency connections between mobile apps and backend services. With monitoring in place, sudden surges in 503 errors or handshake failures are caught early, preventing failed payments and improving customer trust.

Why Monitoring Envoy Proxy is Important

Envoy sits in the hot path of every request

Envoy isn’t just another component; it’s in the critical data path. Whether terminating TLS, routing requests, or load balancing upstreams, every call flows through it. That means even minor issues, such as a misconfigured route, an overwhelmed listener, or a flapping cluster health check, can ripple across your microservices estate. Monitoring Envoy’s dimensional metrics (counters, gauges, histograms) is the most direct way to catch these early, before they escalate into latency spikes and downtime.
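Counters only ever increase while the Envoy process runs, so dashboards chart their per-second rate rather than the raw total; gauges (such as active connections) are read as-is, and histograms are summarized into percentiles. A minimal sketch of the counter case, assuming two samples of the same counter taken a known interval apart; counter_rate is a hypothetical helper, not part of Envoy or CubeAPM:

```python
def counter_rate(prev_value: float, curr_value: float, interval_s: float) -> float:
    """Per-second rate of a monotonic Envoy counter between two samples.

    Hypothetical helper. A decrease means the counter was reset (for example,
    after a full proxy restart), so the current value is treated as the
    increase since the reset, the same convention Prometheus rate() uses.
    """
    if interval_s <= 0:
        raise ValueError("interval_s must be positive")
    delta = curr_value - prev_value
    if delta < 0:  # counter reset detected
        delta = curr_value
    return delta / interval_s


# Two scrapes of a request counter taken 15 seconds apart
print(counter_rate(12_000, 13_500, 15))  # 100.0 requests/s
print(counter_rate(12_000, 300, 15))     # 20.0 requests/s after a restart
```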

Retry storms and circuit breaking amplify outages

Retries are a lifesaver when upstreams fail, but mis-tuned retry policies and budgets can turn small incidents into massive traffic floods. Operators often report sudden spikes of 503 responses carrying the UF response flag (upstream connection failure, returned to clients as "upstream connect error or disconnect/reset before headers") after enabling retries, classic signs of retry amplification. Without monitoring Envoy’s retry counters, overflow events, and outlier ejections, businesses risk outages that are bigger than the initial upstream issue.
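The amplification is multiplicative when several layers retry independently. A back-of-the-envelope sketch, assuming every hop retries the same number of times and every attempt fails (real policies add timeouts and backoff that lower the number); worst_case_attempts is a hypothetical helper:

```python
def worst_case_attempts(retries_per_hop: int, hops: int) -> int:
    """Upper bound on requests reaching the deepest service for ONE user request.

    Hypothetical helper. Assumes each of `hops` proxies retries
    `retries_per_hop` times and every attempt fails, so each layer multiplies
    the attempts of the layer above it.
    """
    if retries_per_hop < 0 or hops < 0:
        raise ValueError("arguments must be non-negative")
    return (1 + retries_per_hop) ** hops


for hops in (1, 2, 3):
    print(hops, worst_case_attempts(retries_per_hop=2, hops=hops))
# 1 3
# 2 9
# 3 27
```

With just two retries at each of three hops, one user request can become 27 requests against the failing service. This is why Envoy lets you cap retries with a retry budget (retry_budget in a cluster's circuit-breaker thresholds).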

No healthy upstreams = instant user impact

Envoy’s load balancing depends on endpoint health. When autoscaling lags, or health checks and outlier detection mark too many endpoints as unhealthy, requests start failing; once a cluster has no usable endpoints, Envoy returns 503 with the body "no healthy upstream" (response flag UH). These errors surface immediately to users. Monitoring upstream health ratios and outlier ejections helps you react before blackholes form, a problem frequently highlighted by engineers in Istio and App Mesh production deployments.
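A small classification sketch makes the health ratio concrete. It assumes you already have the per-cluster membership_healthy and membership_total gauges (both standard Envoy cluster stats) and Envoy's default 50% healthy panic threshold; upstream_health and its result labels are hypothetical:

```python
def upstream_health(healthy: int, total: int, panic_threshold_pct: float = 50.0) -> str:
    """Classify one cluster from its membership_healthy / membership_total gauges.

    Hypothetical helper. panic_threshold_pct mirrors Envoy's default
    healthy_panic_threshold (50%): below it, Envoy stops honoring health
    status and balances across all hosts, healthy or not.
    """
    if total == 0:
        return "no endpoints"  # requests fail with 503 "no healthy upstream"
    healthy_pct = 100.0 * healthy / total
    if healthy_pct < panic_threshold_pct:
        return "panic mode"    # traffic also reaches unhealthy hosts
    if healthy_pct < 80.0:     # "critical" line from the Service Mesh Metrics list
        return "degraded"
    return "ok"


for healthy, total in [(0, 0), (4, 10), (7, 10), (9, 10)]:
    print(healthy, total, upstream_health(healthy, total))
```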

TLS and certificate rotation failures

Envoy often handles TLS termination and mTLS between services. If certificate rotation via SDS fails or SAN mismatches occur, handshake failures spike instantly. Without visibility into handshake error counters or certificate expiry, teams only discover issues after production traffic breaks. In industries with compliance needs (finance, healthcare), this can mean SLA breaches or regulatory risks.
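Handshake failures and certificate expiry can both be checked from Envoy's own stats. A minimal sketch, assuming a flat dict of the listener TLS counters (ssl.handshake counts successful handshakes; ssl.connection_error, ssl.fail_verify_san, and ssl.fail_verify_error count failures) plus the server.days_until_first_cert_expiring gauge; verify these names against your Envoy version. The 14-day window is an illustrative choice, the 1% failure threshold comes from the Service Mesh Metrics list, and tls_findings is hypothetical:

```python
from __future__ import annotations


def tls_findings(stats: dict[str, float], max_failure_ratio: float = 0.01,
                 min_days_left: float = 14) -> list[str]:
    """Return human-readable TLS problems found in one window of Envoy stats.

    Hypothetical helper. Keys are shortened: on a real listener they carry a
    "listener.<address>." prefix. Feed it counter deltas over a time window,
    not lifetime totals.
    """
    successes = stats.get("ssl.handshake", 0)
    failures = (stats.get("ssl.connection_error", 0)
                + stats.get("ssl.fail_verify_san", 0)
                + stats.get("ssl.fail_verify_error", 0))
    attempts = successes + failures
    findings = []
    if attempts and failures / attempts > max_failure_ratio:
        findings.append(f"handshake failure ratio {failures / attempts:.1%}")
    days_left = stats.get("server.days_until_first_cert_expiring")
    if days_left is not None and days_left < min_days_left:
        findings.append(f"certificate expires in {days_left} days")
    return findings


window = {"ssl.handshake": 9_800, "ssl.connection_error": 150,
          "ssl.fail_verify_san": 50, "server.days_until_first_cert_expiring": 6}
print(tls_findings(window))
```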

Egress and external dependencies

Many businesses run third-party integrations—payment APIs, SaaS services—through Envoy egress policies. Here, failures aren’t always in your code but in DNS lookups, network hops, or provider downtime. Monitoring Envoy’s external service metrics (latency, DNS errors, deny counts) helps catch upstream provider issues before they cascade into customer-facing incidents.

High-cardinality metrics require control

Envoy emits tagged, dimensional stats, exposed through its admin /stats/prometheus endpoint or pushed through stats sinks such as OpenTelemetry (OTLP) and DogStatsD. But uncontrolled tag cardinality (e.g., per-route or per-response-code tags) can overwhelm backends like Prometheus. Monitoring pipelines must be tuned for Envoy’s labels (cluster, zone, response_code) so you gain insight without blowing up storage.
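The cardinality risk is simple multiplication: every distinct combination of label values becomes its own time series. A rough sketch, assuming illustrative label sizes and Prometheus-style histograms (one series per bucket plus _sum and _count); series_count is a hypothetical helper:

```python
from __future__ import annotations

from math import prod


def series_count(label_cardinalities: dict[str, int], histogram_buckets: int = 0) -> int:
    """Rough number of time series one metric name produces (hypothetical helper).

    Pass histogram_buckets=0 for counters and gauges. For a histogram, pass the
    bucket count including +Inf: each label combination then yields one series
    per bucket plus _sum and _count.
    """
    combinations = prod(label_cardinalities.values())
    per_combination = histogram_buckets + 2 if histogram_buckets else 1
    return combinations * per_combination


labels = {"cluster": 40, "zone": 3, "response_code": 12}  # illustrative sizes
print(series_count(labels))                        # 1440 (a counter)
print(series_count(labels, histogram_buckets=20))  # 31680 (a latency histogram)
print(series_count({**labels, "route": 200}))      # 288000 (add a per-route tag)
```

Dropping stats you do not need (for example, with Envoy's stats_matcher setting) or removing high-cardinality labels in the collector keeps the total close to the first figure.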

gRPC and HTTP/2 need special observability

As gRPC adoption accelerates, Envoy has become a common proxy for gRPC traffic, including bidirectional streams. But gRPC status codes differ from HTTP status codes: a failed call usually still returns HTTP 200 and carries its real outcome in the grpc-status trailer, so resets or trailer-based failures are easily misattributed. Monitoring per-method latency, reset reasons, and gRPC error codes in Envoy ensures you diagnose the right layer instead of chasing phantom upstream bugs.
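The misattribution is easy to reproduce. A minimal sketch, assuming you have (HTTP status, grpc-status) pairs for each call, for example from access logs; the code-to-name table follows the standard gRPC status list, the helper names are hypothetical, and every non-OK code counts as a failure (you may want to exclude client-caused codes such as NOT_FOUND):

```python
from __future__ import annotations

from typing import Optional

# Standard gRPC status codes
GRPC_CODES = {
    0: "OK", 1: "CANCELLED", 2: "UNKNOWN", 3: "INVALID_ARGUMENT",
    4: "DEADLINE_EXCEEDED", 5: "NOT_FOUND", 6: "ALREADY_EXISTS",
    7: "PERMISSION_DENIED", 8: "RESOURCE_EXHAUSTED", 9: "FAILED_PRECONDITION",
    10: "ABORTED", 11: "OUT_OF_RANGE", 12: "UNIMPLEMENTED", 13: "INTERNAL",
    14: "UNAVAILABLE", 15: "DATA_LOSS", 16: "UNAUTHENTICATED",
}


def grpc_outcome(http_status: int, grpc_status: Optional[int]) -> str:
    """Name the real outcome of one gRPC call (hypothetical helper)."""
    if grpc_status is None:
        # No grpc-status at all: the call died before the server answered,
        # e.g. a proxy-generated 503 or a stream reset.
        return f"NO_GRPC_STATUS (HTTP {http_status})"
    return GRPC_CODES.get(grpc_status, f"UNRECOGNIZED({grpc_status})")


def error_rates(calls: list[tuple[int, Optional[int]]]) -> tuple[float, float]:
    """Error rate judged by HTTP 5xx only vs. by gRPC outcome (non-OK counts)."""
    http_errors = sum(1 for http, _ in calls if http >= 500)
    grpc_errors = sum(1 for http, grpc in calls if grpc_outcome(http, grpc) != "OK")
    return http_errors / len(calls), grpc_errors / len(calls)


calls = [(200, 0), (200, 14), (200, 4), (503, None), (200, 0)]
print(error_rates(calls))  # (0.2, 0.6): HTTP alone hides two failures
```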

The business impact of outages is massive

Monitoring Envoy isn’t just a technical best practice—it’s a business necessity. Gartner reports that over 90% of enterprises peg downtime above $300,000 per hour, and 41% say it costs between $1M–$5M per hour. Add to this that 84% of companies faced an API security incident in 2024, and the need to monitor Envoy’s TLS, authentication failures, and anomalous traffic patterns becomes clear.

Key Metrics to Monitor in Envoy Proxy

Monitoring Envoy Proxy effectively means focusing on the metrics that directly reflect service health, traffic flow, and user experience. Envoy exposes dimensional metrics through its Prometheus admin endpoint and stats sinks such as OTLP, and grouping them into categories makes it easier to prioritize.

Traffic Metrics

These metrics highlight how much traffic Envoy is handling and whether requests are flowing smoothly through listeners, routes, and clusters.

- Requests per second (RPS): Tracks the volume of requests hitting Envoy listeners and upstream clusters. A sudden spike may indicate a surge or DDoS, while a drop could mean routing or DNS issues. Threshold: baseline deviations above 20–30% warrant investigation.

- Request latency (p50, p95, p99): Shows how long requests take to complete across Envoy. P99 latency is especially useful to catch tail-end slowness that impacts user experience. Threshold: keep p99 latency under 200 ms for APIs and under 1 s for interactive apps.

- Active connections: Measures open connections between Envoy and clients/upstreams. A rising trend may indicate traffic growth, but sudden drops or spikes often suggest load balancer misconfigurations. Threshold: sustained usage above 80% of connection pool capacity needs action.
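Envoy records latency as histograms (for example, the upstream_rq_time histogram on each cluster), so percentiles such as p99 are estimated from bucket counts rather than stored directly. A minimal sketch of that estimation, assuming cumulative Prometheus-style buckets in milliseconds; the data is made up, and histogram_quantile here is a re-implementation of the PromQL idea for illustration, not a library call:

```python
from __future__ import annotations

import math


def histogram_quantile(q: float, buckets: list[tuple[float, float]]) -> float:
    """Estimate the q-quantile from cumulative (upper_bound, count) buckets.

    Locates the bucket containing rank q * total and interpolates linearly
    inside it, as PromQL's histogram_quantile() does. Buckets must be sorted
    by upper bound and end with +Inf.
    """
    if not 0.0 <= q <= 1.0:
        raise ValueError("q must be between 0 and 1")
    total = buckets[-1][1]
    if total == 0:
        return math.nan
    rank = q * total
    lower_bound, lower_count = 0.0, 0.0
    for upper_bound, count in buckets:
        if count >= rank:
            if math.isinf(upper_bound):
                return lower_bound  # rank in +Inf bucket: report last finite bound
            if count == lower_count:
                return upper_bound
            fraction = (rank - lower_count) / (count - lower_count)
            return lower_bound + (upper_bound - lower_bound) * fraction
        lower_bound, lower_count = upper_bound, count
    return math.nan


# Cumulative request counts per latency bucket, in milliseconds (made-up data)
latency_ms = [(25, 600), (50, 850), (100, 950), (250, 990), (500, 1000), (math.inf, 1000)]
for q in (0.50, 0.95, 0.99):
    print(f"p{round(q * 100)}: {histogram_quantile(q, latency_ms):.1f} ms")
```

In this data the median looks healthy, yet p99 lands at about 250 ms, above the 200 ms API guideline. The estimate is only as precise as the bucket boundaries allow.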

Error Metrics

Error metrics expose upstream and downstream problems that can directly cause outages or degraded user experience.

- 5xx error rate: Captures server-side failures routed through Envoy. A steady increase signals upstream instability or misconfigured filters. Threshold: should remain below 1% in production.

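- 503/504 upstream errors: 503s most often mean no healthy upstream (response flag UH), an upstream connection failure (UF), or circuit-breaker overflow (UO); 504 reflects upstream timeouts (UT). Both can disrupt SLAs if left unchecked. Threshold: alert if error rate >2% over 5 minutes.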

- gRPC failures: Envoy surfaces gRPC-specific codes such as UNAVAILABLE or DEADLINE_EXCEEDED. Because failed gRPC calls often still return HTTP 200, these codes diverge from HTTP status codes, so tracking them separately prevents misdiagnosis. Threshold: >0.5% error rate typically requires action.
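The error thresholds above can be checked from counter deltas over a 5-minute window. A minimal sketch, assuming you map one cluster's upstream_rq_total, upstream_rq_5xx, upstream_rq_503, and upstream_rq_504 counters onto the hypothetical keys below; error_alerts and the sample numbers are illustrative:

```python
from __future__ import annotations


def error_alerts(window: dict[str, int]) -> list[str]:
    """Check 5-minute counter deltas against the error thresholds above.

    Hypothetical keys: rq_total, rq_5xx, rq_503, rq_504 (for example, deltas of
    a cluster's upstream_rq_total, upstream_rq_5xx, upstream_rq_503,
    upstream_rq_504 counters).
    """
    total = window["rq_total"]
    if total == 0:
        return []
    alerts = []
    rate_5xx = window["rq_5xx"] / total
    if rate_5xx > 0.01:  # 5xx should stay below 1%
        alerts.append(f"5xx rate {rate_5xx:.2%} exceeds 1%")
    rate_503_504 = (window["rq_503"] + window["rq_504"]) / total
    if rate_503_504 > 0.02:  # 503/504 above 2% over 5 minutes
        alerts.append(f"503/504 rate {rate_503_504:.2%} exceeds 2%")
    return alerts


# Both thresholds are breached in this made-up window
print(error_alerts({"rq_total": 60_000, "rq_5xx": 1_500, "rq_503": 900, "rq_504": 480}))
```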

Resource Metrics

Envoy’s performance also depends on underlying resources—CPU, memory, and threading. Monitoring these helps avoid saturation that slows traffic.

- CPU utilization: Envoy’s filters, TLS handshakes, and logging can be CPU-intensive. Spikes during peak hours may signal costly Lua filters or mis-tuned retries. Threshold: >80% CPU sustained should trigger scaling.

- Memory usage: High connection churn or large buffer sizes can drive memory up. If left unchecked, this causes OOMKills and proxy restarts. Threshold: alert if memory usage exceeds 75–80% of allocated limits.

- Thread pool saturation: Envoy runs a fixed number of worker threads (by default, one per hardware thread), each with its own event loop serving many connections. When workers are saturated, throughput drops and latency rises. Threshold: sustained saturation above 70% signals risk of backpressure.
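Several of these thresholds say "sustained", which matters because short CPU bursts are normal for a proxy serving bursty traffic. A minimal sketch of a sustained-breach check, assuming per-minute utilization samples expressed as a percentage of the container limit (for memory, Envoy's server.memory_allocated gauge divided by the limit is one option); sustained_above and the sample data are hypothetical:

```python
from __future__ import annotations


def sustained_above(samples: list[float], threshold: float, min_samples: int) -> bool:
    """True only if each of the last `min_samples` samples exceeds `threshold`.

    Hypothetical helper. Requiring several consecutive breaches filters out
    short bursts.
    """
    if min_samples <= 0 or len(samples) < min_samples:
        return False
    return all(value > threshold for value in samples[-min_samples:])


# One sample per minute, as a percentage of the container's limit (made-up data)
cpu_pct = [72, 85, 88, 91, 86]
mem_pct = [70, 74, 77, 79, 81]
print(sustained_above(cpu_pct, threshold=80, min_samples=4))  # True: scale out
print(sustained_above(mem_pct, threshold=80, min_samples=3))  # False: only one breach
```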

Service Mesh Metrics

When Envoy is deployed in a mesh (Istio, Consul, App Mesh), additional metrics capture cluster health and resilience.

- Cluster health: Tracks the percentage of healthy endpoints in each upstream cluster. Low health percentages directly cause 503 errors. Threshold: <80% healthy endpoints is critical.

- Retry counts: Retries help absorb transient failures, but spikes create retry storms that overwhelm backends. Threshold: sudden >3x increase in retries per second indicates cascading issues.

- TLS handshake metrics: Measures success vs. failure rates of TLS/mTLS sessions. High failure rates often mean expired certificates, SAN mismatches, or SDS misconfigurations. Threshold: >1% handshake failure rate requires urgent investigation.
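The retry rule above compares each cluster against its own baseline, since a normal retry rate for one service can be alarming for another. A minimal sketch, assuming per-cluster retry rates (for example, rate() of envoy_cluster_upstream_rq_retry) for a baseline window and the current window; retry_surges and its 1 retry/s floor are hypothetical choices:

```python
from __future__ import annotations


def retry_surges(baseline: dict[str, float], current: dict[str, float],
                 factor: float = 3.0, min_rate: float = 1.0) -> list[str]:
    """Clusters whose retries/s grew more than `factor` times their baseline.

    Hypothetical helper. `min_rate` ignores clusters with tiny absolute retry
    rates, so a jump from 0.1 to 0.5 retries/s does not page anyone.
    """
    surging = []
    for cluster, rate in current.items():
        base = baseline.get(cluster, 0.0)  # a new cluster has no baseline
        if rate >= min_rate and rate > factor * base:
            surging.append(cluster)
    return sorted(surging)


baseline = {"payments": 2.0, "ledger": 5.0, "auth": 0.1}
current = {"payments": 9.0, "ledger": 6.0, "auth": 0.5}
print(retry_surges(baseline, current))  # ['payments']
```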

How to Monitor Envoy Proxy with CubeAPM (Step-by-Step)

Step 1: Install CubeAPM

Begin by installing CubeAPM in your environment. You can deploy it on Kubernetes or on standalone servers, depending on your infrastructure. Follow the installation guide for setup instructions, including Helm charts and YAML manifests for Kubernetes.

Step 2: Configure CubeAPM

Once installed, configure CubeAPM with your account token, base URL, and cluster peers. Configuration can be done via CLI, config files, or environment variables. Detailed parameters are documented in the configuration guide.

Step 3: Enable Envoy Metrics via OpenTelemetry

Envoy exposes Prometheus-format stats on its admin endpoint and can push metrics through an OpenTelemetry stats sink. Use the CubeAPM OpenTelemetry instrumentation guide to collect Envoy’s request, latency, and cluster health metrics so that Envoy stats are ingested directly into CubeAPM.
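Before wiring up the pipeline, it helps to confirm Envoy is actually exposing stats. A minimal sketch that pulls the admin endpoint's Prometheus output and parses it into a dictionary; it assumes the admin interface listens on 127.0.0.1:9901 (a common choice in Envoy examples, not a built-in default), and the helper names are hypothetical. In production, CubeAPM's collection pipeline would do this rather than a script.

```python
from __future__ import annotations

import urllib.request


def parse_prometheus_text(text: str) -> dict[str, float]:
    """Map each sample line's series ('name{labels}') to its value.

    Simplified parser: skips '# HELP' / '# TYPE' comment lines and assumes
    each sample line ends with a value and no trailing timestamp.
    """
    samples: dict[str, float] = {}
    for line in text.splitlines():
        line = line.strip()
        if not line or line.startswith("#"):
            continue
        series, _, value = line.rpartition(" ")
        samples[series] = float(value)
    return samples


def fetch_envoy_stats(admin_url: str = "http://127.0.0.1:9901") -> dict[str, float]:
    """Scrape Envoy's admin /stats/prometheus endpoint (admin address is an assumption)."""
    with urllib.request.urlopen(f"{admin_url}/stats/prometheus", timeout=5) as resp:
        return parse_prometheus_text(resp.read().decode("utf-8"))


# Offline example using a snippet in Envoy's Prometheus output style
sample = """# TYPE envoy_cluster_upstream_rq_total counter
envoy_cluster_upstream_rq_total{envoy_cluster_name="backend"} 1523
# TYPE envoy_cluster_membership_healthy gauge
envoy_cluster_membership_healthy{envoy_cluster_name="backend"} 3
"""
stats = parse_prometheus_text(sample)
print(stats['envoy_cluster_membership_healthy{envoy_cluster_name="backend"}'])  # 3.0
```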

Step 4: Collect Envoy Logs

Envoy emits detailed access logs and application (error) logs. Use CubeAPM’s logs integration to centralize them and correlate them with metrics and traces, which is critical for diagnosing retries, 503s, or TLS failures.
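Envoy's response flags are the fastest way to tell a 503 caused by no healthy hosts (UH) from one caused by connection failures (UF) or exhausted retries (URX). A minimal sketch that tallies them, assuming Envoy's default text access-log format (adjust the pattern for custom or JSON formats); the log lines are made up and the helper names are hypothetical:

```python
from __future__ import annotations

import re
from collections import Counter

# Start of Envoy's default text access-log line (assumed format):
# [START_TIME] "METHOD PATH PROTOCOL" RESPONSE_CODE RESPONSE_FLAGS ...
LOG_PREFIX = re.compile(
    r'^\[(?P<start>[^\]]+)\] "(?P<method>\S+) (?P<path>\S+) (?P<protocol>\S+)" '
    r'(?P<code>\d+) (?P<flags>\S+)'
)

FLAG_MEANINGS = {
    "UH": "no healthy upstream host",
    "UF": "upstream connection failure",
    "UO": "upstream overflow (circuit breaking)",
    "URX": "upstream retry limit exceeded",
    "UT": "upstream request timeout",
    "NR": "no route configured",
}


def response_flag_counts(lines: list[str]) -> Counter:
    """Count response flags; '-' means no flag, several flags are comma-separated."""
    counts: Counter = Counter()
    for line in lines:
        match = LOG_PREFIX.match(line)
        if not match or match["flags"] == "-":
            continue
        counts.update(match["flags"].split(","))
    return counts


logs = [
    '[2024-05-01T10:00:00.000Z] "GET /pay HTTP/1.1" 503 UF,URX 0 91 30 - "-" "okhttp" "a1" "api" "10.1.2.3:8080"',
    '[2024-05-01T10:00:01.000Z] "GET /pay HTTP/1.1" 503 UH 0 19 0 - "-" "okhttp" "a2" "api" "-"',
    '[2024-05-01T10:00:02.000Z] "POST /pay HTTP/1.1" 200 - 120 45 12 11 "-" "okhttp" "a3" "api" "10.1.2.4:8080"',
]
for flag, n in response_flag_counts(logs).most_common():
    print(flag, n, FLAG_MEANINGS.get(flag, "see Envoy access-log docs"))
```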

Step 5: Configure Alerts

Set up alert rules for Envoy-specific signals such as p99 latency, 5xx error rates, and unhealthy upstream endpoints. CubeAPM allows email, Slack, PagerDuty, or Opsgenie notifications. See the alerting configuration guide for setup details.

Step 6: Add Infrastructure Context

To go beyond proxy stats, monitor the health of nodes and containers running Envoy. CubeAPM’s infrastructure monitoring module adds CPU, memory, and network visibility to correlate Envoy behavior with underlying resource usage.

Real-World Example: Monitoring Envoy in a FinTech API Gateway

Challenge

A large digital payments provider deployed Envoy Proxy as its API gateway to handle millions of daily transactions. During peak traffic hours, customers began experiencing intermittent 504 Gateway Timeout errors. Standard metrics showed increased latency, but the team lacked visibility into whether the issue was caused by Envoy, the network, or backend services. The errors not only disrupted transactions but also risked violating SLAs with enterprise customers.

Solution

By integrating Envoy with CubeAPM, the provider ingested both Envoy metrics and detailed logs. CubeAPM dashboards revealed a clear correlation: retry storms were spiking in Envoy whenever the backend MySQL cluster approached high CPU utilization. Traces visualized by CubeAPM confirmed that the proxy was excessively retrying requests that failed while the database was saturated, amplifying the database bottleneck. With these insights, the engineering team fine-tuned retry policies in Envoy and optimized backend query performance.

Result

Within weeks, the company saw a 35% reduction in failed payment transactions during traffic surges. SLA compliance improved significantly, and incident resolution time dropped as on-call engineers had full visibility into Envoy’s role in transaction flows. The combination of Envoy’s rich telemetry and CubeAPM’s unified metrics, logs, and traces provided the team with the actionable intelligence needed to keep payments reliable at scale.

Verification Checklist & Example Alert Rules for Monitoring Envoy Proxy with CubeAPM

Before going live, it’s essential to validate that Envoy Proxy telemetry is being ingested correctly and that actionable alerts are configured. This ensures your team can catch latency spikes, error floods, or unhealthy clusters before they impact SLAs.

Verification Checklist

- Envoy stats enabled: Confirm the admin /stats (or /stats/prometheus) endpoint or the OpenTelemetry stats sink is active and exposing counters, gauges, and histograms.

- Metrics ingestion verified: Ensure CubeAPM is receiving Envoy metrics, either by scraping the Prometheus endpoint or through the OTLP pipeline.

- Logs integrated: Access and error logs are flowing into CubeAPM for correlation with metrics.

- Dashboards visible: CubeAPM dashboards display live RPS, latency (p95/p99), error rates, and cluster health.

- Alerts configured: Thresholds set for key signals like latency, 5xx error rate, retries, and upstream health.

- Infrastructure context added: CPU, memory, and thread pool metrics of nodes running Envoy are captured.
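Parts of this checklist can be automated. A minimal sketch, assuming you already have the series names from a scrape of Envoy's Prometheus endpoint; REQUIRED_FAMILIES is an illustrative set that mirrors the dashboards above (names follow Envoy's Prometheus output, but confirm them for your version), and missing_families is hypothetical:

```python
from __future__ import annotations

# Illustrative minimum set; Envoy's Prometheus output turns a stat such as
# "cluster.<name>.upstream_rq_retry" into "envoy_cluster_upstream_rq_retry".
REQUIRED_FAMILIES = {
    "envoy_http_downstream_rq_total": "request volume (RPS)",
    "envoy_http_downstream_rq_xx": "status-class counters for the 5xx rate",
    "envoy_cluster_upstream_rq_time_bucket": "upstream latency histogram (p95/p99)",
    "envoy_cluster_membership_healthy": "healthy endpoints per cluster",
    "envoy_cluster_upstream_rq_retry": "retries per cluster",
}


def missing_families(series: list[str]) -> list[str]:
    """Return required metric names that never appear in the scraped series."""
    present = {name.split("{", 1)[0] for name in series}
    return sorted(set(REQUIRED_FAMILIES) - present)


scraped = [
    'envoy_http_downstream_rq_total{envoy_http_conn_manager_prefix="ingress_http"}',
    'envoy_cluster_membership_healthy{envoy_cluster_name="backend"}',
]
for name in missing_families(scraped):
    print(f"missing {name}: {REQUIRED_FAMILIES[name]}")
```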

Example Alert Rules

1. Alert: High 5xx Error Rate

Triggers when Envoy is consistently returning 5xx errors, often pointing to upstream instability or misconfigurations.

```yaml
alert: EnvoyHigh5xxErrorRate
expr: |
  sum(rate(envoy_http_downstream_rq_xx{envoy_response_code_class="5"}[5m]))
    / sum(rate(envoy_http_downstream_rq_total[5m])) > 0.02
for: 5m
labels:
  severity: critical
annotations:
  description: "5xx error rate has exceeded 2% for the past 5 minutes."
```

2. Alert: Unhealthy Upstream Endpoints

Flags when too many endpoints in a cluster are marked unhealthy by Envoy’s active health checking or outlier detection.

```yaml
alert: EnvoyUnhealthyUpstreams
expr: |
  (envoy_cluster_membership_total - envoy_cluster_membership_healthy)
    / envoy_cluster_membership_total > 0.25
for: 5m
labels:
  severity: critical
annotations:
  description: "More than 25% of endpoints in the upstream cluster are unhealthy."
```

3. Alert: Retry Storm Detected

Identifies when retry attempts surge abnormally, often amplifying upstream issues.

```yaml
alert: EnvoyRetryStorm
expr: rate(envoy_cluster_upstream_rq_retry[5m]) > 100
for: 5m
labels:
  severity: critical
annotations:
  description: "Retry attempts exceeded 100 per second, indicating a retry storm."
```

Why Use CubeAPM for Envoy Proxy Monitoring

- Transparent $0.15/GB pricing, no hidden fees: CubeAPM simplifies observability costs with flat, predictable pricing. Unlike legacy vendors that charge for hosts, containers, or extra features, you only pay for the data you ingest. This makes monitoring Envoy Proxy at scale cost-efficient and easy to forecast.

- Smart Sampling for high-RPS workloads: Envoy often processes thousands of requests per second, making raw data collection expensive and noisy. CubeAPM’s Smart Sampling ensures you capture statistically significant traces and logs without overwhelming storage or budgets—perfect for traffic-heavy gateways and service meshes.

- 800+ integrations, including Envoy, Istio, Kubernetes, gRPC: With native support for Envoy Proxy and its ecosystem, CubeAPM plugs seamlessly into service meshes, ingress controllers, and Kubernetes clusters. Prebuilt dashboards and queries accelerate setup so your team gains visibility faster.

- SaaS or BYOC deployments, GDPR/HIPAA-ready: Whether you prefer a fully managed SaaS solution or a Bring Your Own Cloud (BYOC) deployment for compliance, CubeAPM adapts to your environment. Enterprises in finance, healthcare, and regulated markets can stay compliant with GDPR, HIPAA, and DPDP requirements while still gaining deep Envoy insights.

- Proven case studies across FinTech, SaaS, logistics: Organizations across industries use CubeAPM to monitor Envoy in production. From payment providers reducing failed transactions to SaaS platforms improving API reliability, CubeAPM has a track record of delivering actionable observability for critical Envoy workloads.

Conclusion

Monitoring Envoy Proxy is essential for keeping modern microservices reliable, secure, and performant. Sitting in the hot path of every request, Envoy directly impacts latency, error rates, and SLA compliance, making proactive observability a business-critical need.

CubeAPM provides complete visibility into Envoy with unified metrics, logs, and traces. Its Smart Sampling, transparent $0.15/GB pricing, and 800+ integrations make it easier to scale monitoring without runaway costs or tool sprawl.

Whether you’re running Envoy as an API gateway, sidecar, or service mesh proxy, CubeAPM ensures your systems stay resilient. Start monitoring Envoy Proxy with CubeAPM today and keep your microservices running flawlessly.