Monitoring HAProxy is vital for ensuring reliable load balancing and traffic routing. As of September 2025, HAProxy remains a market leader, ranked top in all its G2 Summer 2025 Grid® categories, proving its widespread adoption in enterprise environments. Effective monitoring prevents traffic spikes, latency, and backend failures from escalating into outages.

For many teams, monitoring HAProxy is still challenging. The native stats page lacks correlation with backend health, logs, or error traces. Alerts often come too late, while storing high-volume metrics and traces quickly becomes complex and expensive, slowing down incident response.

CubeAPM is the best solution for monitoring HAProxy, offering unified metrics, logs, and error tracing in one platform. With smart sampling, scalable retention, and 800+ integrations, CubeAPM makes it easy to monitor HAProxy at scale while keeping costs predictable.

In this article, we’ll cover what HAProxy is, why monitoring it matters, key metrics to track, and how CubeAPM simplifies HAProxy monitoring.

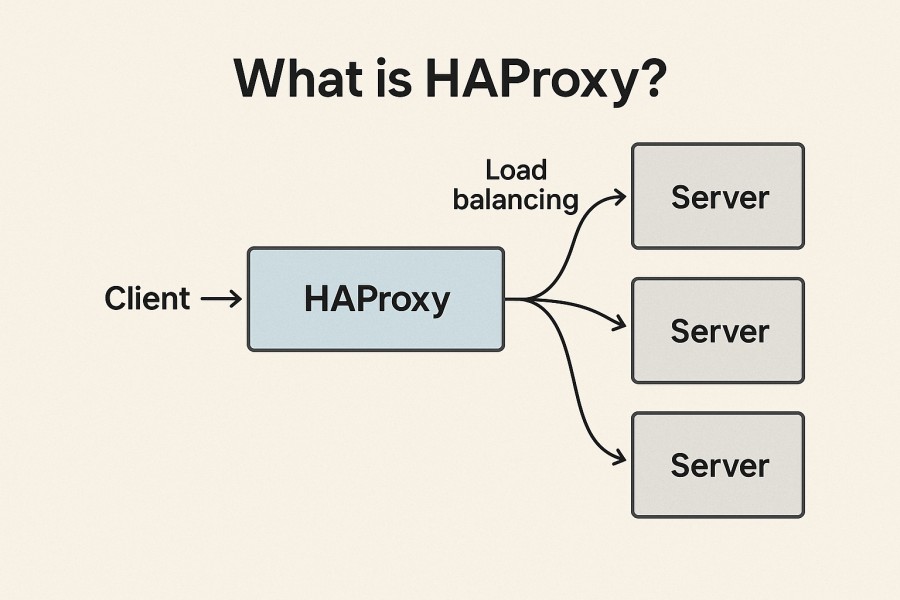

What is HAProxy?

HAProxy (High Availability Proxy) is a fast, open-source TCP/HTTP load balancer and reverse proxy designed to distribute traffic across multiple backend servers. It is trusted by enterprises handling massive workloads because it can process millions of requests per second with low latency, advanced health checks, SSL termination, caching, and flexible routing policies.

For businesses today, HAProxy provides more than just traffic distribution—it enables performance, reliability, and resilience at scale. Some of the key benefits include:

- High availability: Ensures uptime by rerouting traffic from failing backends.

- Scalability: Handles traffic spikes during peak hours without service degradation.

- Security: Supports TLS termination, rate limiting, and DDoS protection features.

- Observability: Offers detailed metrics via its stats page or exporters for monitoring.

These capabilities make HAProxy indispensable in sectors like e-commerce, finance, SaaS, and telecom, where downtime translates directly to lost revenue and customer trust.

Example: Using HAProxy for E-commerce Traffic Spikes

An online retailer running flash sales uses HAProxy to evenly distribute millions of concurrent checkout requests across backend servers. By combining load balancing with real-time health checks, HAProxy ensures that if one server becomes overloaded or fails, traffic is seamlessly rerouted—preventing cart failures and keeping revenue flowing.

Why Monitoring HAProxy is Critical

Your front door is a single point of amplification

HAProxy sits at the very edge of your stack, meaning any latency, configuration issue, or overload ripples through every downstream service. The cost of downtime here is staggering: over 90% of organizations estimate an hour of downtime costs $300,000+, and 41% say it costs between $1M–$5M per hour. Catching HAProxy’s 503 (no server available) or 504 (gateway timeout) errors in real time is the difference between fast recovery and a customer-impacting outage.

Latency breakdown pinpoints where the time is lost

HAProxy logs detailed per-request timers (Tq, Tw, Tc, Tr, Tt) that break down the lifecycle of each request. Monitoring these allows teams to isolate whether slowness comes from a slow client request (Tq), queue saturation (Tw), connection setup to the backend (Tc), or slow backend responses (Tr). The official HAProxy configuration manual defines each timer in its logging section and describes how to use them for production troubleshooting.

Backend health and failover must be verified continuously

HAProxy’s routing depends on health checks. If thresholds are misconfigured, degraded servers may still receive traffic, or flapping nodes may cause instability. The HAProxy docs and community best practices highlight monitoring queue lengths, backend state, and error rates with Prometheus scrapes to validate failover before customers notice.

Capacity & connection management under real traffic

Without monitoring, spikes in active sessions or maxed-out connection limits can silently queue requests, producing waves of 503 errors. Keeping an eye on active vs. idle connections, session rate, and per-backend queues ensures you can scale pool sizes and tune keep-alive settings to absorb peak loads.

Unified visibility across metrics, logs, and traces speeds MTTR

HAProxy produces detailed syslog logs with timing and capture fields. Pairing these logs with metrics and backend traces drastically reduces mean time to resolution (MTTR). HAProxy’s own logging guides emphasize the importance of a custom log-format to extract actionable details during incidents.

Modern metrics scraping is built in

Since HAProxy 2.0, there’s a native Prometheus metrics endpoint, making the separate haproxy_exporter unnecessary in most setups. The recommended approach is to enable this built-in endpoint and scrape each HAProxy instance directly for accurate, instance-level service-level objectives (SLOs).

User expectations (and scrutiny) are higher than ever

HAProxy’s importance keeps climbing: in G2’s Fall 2025 reports, HAProxy earned 71 badges and multiple Leader positions, reflecting its role as the most trusted open-source load balancer in enterprise production. That ubiquity raises the bar—customers expect seamless availability, and monitoring is how you deliver it.

Practical HAProxy-specific signals to alert on

The most valuable signals to monitor are 5xx error rates (esp. 503/504), queue time spikes, backend health flaps, TLS handshake errors, and imbalanced backend load distribution. These are all surfaced directly in HAProxy’s Stats page and Prometheus metrics, making them the authoritative sources for SLAs and SLOs.

Key HAProxy Metrics to Monitor

Monitoring HAProxy effectively means going beyond the default stats page. HAProxy exposes a wide set of metrics through its built-in stats socket and Prometheus endpoint, giving teams visibility into traffic, latency, errors, and backend health. Below are the most critical categories and metrics you should track.

Traffic & Request Metrics

These metrics help you understand how much traffic is flowing through HAProxy, how it’s distributed, and whether requests are succeeding or failing.

- Requests per second: Measures the volume of incoming traffic handled by HAProxy. Tracking spikes reveals load trends and helps prepare for peak demand. A sudden drop may indicate frontend or network issues. Threshold: Consistently high spikes over baseline (e.g., >20% above normal) should trigger investigation.

- Session rate: Captures how many sessions are created per second. This highlights short-lived or bursty connections that could overwhelm servers. Monitoring trends helps in planning connection pooling. Threshold: If the session rate exceeds 80% of the configured maximum, scaling is required.

- HTTP response codes: Shows the distribution of 2xx, 3xx, 4xx, and 5xx responses. High 4xx suggests client-side issues, while rising 5xx signals backend failures. This is one of the fastest ways to detect production errors. Threshold: 5xx errors above 1% of requests sustained for 5 minutes.
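The request-rate and session-rate thresholds above could be expressed as alert rules along the following lines. This is a sketch in the same rule style as the example alerts later in this article, written as entries of a Prometheus-style rules list. It assumes the metric names exposed by HAProxy’s built-in Prometheus exporter (haproxy_frontend_http_requests_total, haproxy_frontend_sessions_total, haproxy_frontend_limit_session_rate). The alert names are made up for illustration, and treating "baseline" as the same window one day earlier is an assumption, not a recommendation.

```yaml
# Sketch only: alert names and the 1-day baseline are illustrative assumptions.
- alert: HAProxyRequestRateAboveBaseline
  # Compare the current request rate with the same 5m window 24h earlier.
  expr: |
    sum(rate(haproxy_frontend_http_requests_total[5m]))
      > 1.2 * sum(rate(haproxy_frontend_http_requests_total[5m] offset 1d))
  for: 10m
  labels:
    severity: warning
  annotations:
    summary: "HAProxy request rate is more than 20% above yesterday's level"

- alert: HAProxySessionRateNearLimit
  # The "> 0" filter skips frontends with no session rate limit configured,
  # which would otherwise cause a division by zero.
  expr: |
    rate(haproxy_frontend_sessions_total[1m])
      / (haproxy_frontend_limit_session_rate > 0) > 0.8
  for: 5m
  labels:
    severity: warning
  annotations:
    summary: "HAProxy session rate above 80% of the configured limit"
```

A day-over-day comparison is only one way to define a baseline; a weekly offset may suit workloads with strong weekday patterns better.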

Latency & Performance Metrics

Latency metrics break down where delays occur during request handling.

- Queue time (Tw): Time a request spends waiting in HAProxy’s queues before being sent to a backend server. (Tq, by contrast, is the time HAProxy spends receiving the full request from the client.) Spikes mean backends are saturated, or too few servers are active. Threshold: >200ms average queue time is a red flag for backend overload.

- Connect time (Tc): Measures how long it takes to establish a TCP connection to a backend. Long values often indicate network congestion or backend availability issues. Threshold: >100ms sustained connect time may require investigation.

- Response time (Tr): Time taken by the backend server to send the first byte after a request. High response times are usually application-level bottlenecks. Threshold: >500ms median response time signals degraded backend performance.

- Total session time (Tt): The total duration of the session, from the moment HAProxy accepts the connection until both sides are closed. It covers receiving the request, queueing, connecting, the server response, and data transfer, so it gives the end-to-end picture of request handling. Threshold: >1s on average for critical endpoints requires urgent review.
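The connect, response, and total time thresholds above might translate into rules like the ones below. This sketch assumes the per-backend gauges from HAProxy’s built-in Prometheus exporter (haproxy_backend_connect_time_average_seconds, haproxy_backend_response_time_average_seconds, haproxy_backend_total_time_average_seconds). Those gauges are rolling averages over recent requests rather than medians or percentiles, so they only approximate the thresholds listed; alert names are illustrative.

```yaml
# Sketch only: thresholds mirror the list above; alert names are hypothetical.
- alert: HAProxyBackendConnectTimeHigh
  # Average TCP connect time to backend servers above 100ms.
  expr: haproxy_backend_connect_time_average_seconds > 0.1
  for: 5m
  labels:
    severity: warning
  annotations:
    summary: "Connect time above 100ms on backend {{ $labels.proxy }}"

- alert: HAProxyBackendResponseTimeHigh
  # Average server response time above 500ms.
  expr: haproxy_backend_response_time_average_seconds > 0.5
  for: 5m
  labels:
    severity: warning
  annotations:
    summary: "Response time above 500ms on backend {{ $labels.proxy }}"

- alert: HAProxyBackendTotalTimeHigh
  # Average total session time above 1s.
  expr: haproxy_backend_total_time_average_seconds > 1
  for: 5m
  labels:
    severity: critical
  annotations:
    summary: "Total time above 1s on backend {{ $labels.proxy }}"
```

For true medians or percentiles, the timers in HAProxy’s logs are the more precise source.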

Connection & Resource Utilization Metrics

These metrics show whether HAProxy is approaching its configured capacity.

- Active vs. idle connections: Active connections represent current load; idle connections show available headroom. Tracking this helps avoid connection exhaustion. Threshold: If active connections exceed 90% of max connections, scale pools or increase limits.

- Max connections: The configured maximum number of concurrent connections HAProxy will handle. Exceeding this forces HAProxy to drop or queue requests. Threshold: >95% utilization consistently suggests the need for capacity expansion.

- Retries & redispatches: Counts how many requests are retried or reassigned to other backends. High numbers suggest instability or poor backend health. Threshold: >5% of requests being retried indicates systemic backend issues.
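As a rough illustration, the connection and retry thresholds above could be written as the following rules. The sketch assumes the process-level and backend-level metric names from HAProxy’s built-in Prometheus exporter (haproxy_process_current_connections, haproxy_process_max_connections, haproxy_backend_retry_warnings_total, haproxy_backend_http_requests_total); the alert names are made up for this example.

```yaml
# Sketch only: alert names are hypothetical.
- alert: HAProxyConnectionsNearMaxconn
  # Share of the process-wide connection limit currently in use.
  expr: haproxy_process_current_connections / haproxy_process_max_connections > 0.9
  for: 5m
  labels:
    severity: warning
  annotations:
    summary: "HAProxy is using more than 90% of its max connections"

- alert: HAProxyHighRetryRatio
  # Retried connection attempts as a fraction of HTTP requests, per backend.
  expr: |
    sum by (proxy) (rate(haproxy_backend_retry_warnings_total[5m]))
      / sum by (proxy) (rate(haproxy_backend_http_requests_total[5m])) > 0.05
  for: 5m
  labels:
    severity: warning
  annotations:
    summary: "More than 5% of requests to backend {{ $labels.proxy }} are being retried"
```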

Error & Availability Metrics

These metrics highlight backend failures, server unavailability, or routing misconfigurations.

- Health check status: Tracks whether backend servers are marked UP or DOWN by HAProxy’s health checks. If a large percentage of servers go DOWN, availability is compromised. Threshold: >20% of backends failing health checks should alert immediately.

- Failed servers: Monitors the number of servers that have failed or stopped responding. Frequent failures are often early signs of capacity or hardware problems. Threshold: More than 2 backend servers failing in the same pool.

- 503 responses: Indicates requests that couldn’t be served due to no backend being available. This directly affects users and is often the first symptom of overload. Threshold: >1% of total requests returning 503s is critical.
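The health-check threshold above can be sketched as a per-backend ratio. This example assumes a recent HAProxy version whose built-in exporter publishes haproxy_server_status with one series per server and state (value 1 for the current state, 0 otherwise); older versions encode the status differently, so verify the output of your own /metrics endpoint first. Note also that the exporter groups response codes by class (for example 5xx), so a 503-specific ratio generally has to come from logs rather than from these metrics.

```yaml
# Sketch only: assumes the per-state label form of haproxy_server_status.
- alert: HAProxyBackendServersDownRatio
  # Numerator: servers whose current state is DOWN.
  # Denominator: one state="UP" series exists per server (value 0 or 1),
  # so counting those series gives the pool size.
  expr: |
    count by (proxy) (haproxy_server_status{state="DOWN"} == 1)
      / count by (proxy) (haproxy_server_status{state="UP"}) > 0.2
  for: 2m
  labels:
    severity: critical
  annotations:
    summary: "More than 20% of servers in backend {{ $labels.proxy }} are DOWN"

- alert: HAProxyBackendNoActiveServers
  # A backend with zero active servers answers requests with 503.
  expr: haproxy_backend_active_servers == 0
  for: 1m
  labels:
    severity: critical
  annotations:
    summary: "Backend {{ $labels.proxy }} has no active servers"
```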

How to Monitor HAProxy with CubeAPM

Step 1: Install CubeAPM

Download and run the CubeAPM binary on the server where HAProxy is running (or on a nearby host). It’s a single binary with no external dependencies. Provide your auth.key.session and other credentials via command line, environment variables, or a config file. See the installation guide for details.

Step 2: (Optional) Install CubeAPM in Kubernetes

If HAProxy is deployed inside Kubernetes, you can use the Helm chart or manifests to deploy CubeAPM as a sidecar or daemonset. Configure service discovery and add annotations to HAProxy pods so metrics are scraped automatically. See the Kubernetes installation guide for details.
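As an illustration of the annotation approach, the fragment below uses the widely used prometheus.io/* annotation convention. This is an assumption: these annotations only take effect if the collector’s Kubernetes service discovery is configured to honor them, and the port and path shown are placeholders for wherever your HAProxy pods expose metrics.

```yaml
# Fragment of an HAProxy Deployment; only the annotation block matters here.
# Port 8405 and path /metrics are assumed values, not defaults to rely on.
spec:
  template:
    metadata:
      annotations:
        prometheus.io/scrape: "true"
        prometheus.io/port: "8405"
        prometheus.io/path: "/metrics"
```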

Step 3: Configure CubeAPM Settings

Fine-tune your installation by setting parameters like metrics.retention, logs.retention, base-url, and alert integrations. Configs can be applied using flags, environment variables (prefix CUBE_), or YAML files. See the configuration reference for the full list.

Step 4: Instrument HAProxy Metrics

Enable HAProxy’s built-in Prometheus endpoint or stats socket, then configure an OpenTelemetry Collector to scrape those metrics. CubeAPM natively ingests OpenTelemetry data, so you can forward HAProxy’s request rates, latency phases, error codes, and connection stats directly. See the OpenTelemetry instrumentation guide for details.
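A minimal Collector configuration for this step might look like the sketch below. It assumes HAProxy already serves its Prometheus endpoint (for example via http-request use-service prometheus-exporter if { path /metrics } in a frontend) on 127.0.0.1:8405, which is an assumed address. The exporter endpoint is a placeholder hostname, not a documented CubeAPM address; substitute the OTLP endpoint from your own CubeAPM installation.

```yaml
# Sketch only: target address and exporter endpoint are placeholders.
receivers:
  prometheus:
    config:
      scrape_configs:
        - job_name: haproxy
          scrape_interval: 15s
          metrics_path: /metrics
          static_configs:
            - targets: ["127.0.0.1:8405"]  # assumed HAProxy metrics listener

exporters:
  otlphttp:
    endpoint: "http://cubeapm.example.internal:4318"  # hypothetical endpoint

service:
  pipelines:
    metrics:
      receivers: [prometheus]
      exporters: [otlphttp]
```

Listing one target per HAProxy instance keeps metrics instance-level, which matches the per-instance SLO approach described earlier.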

Step 5: Enable Infrastructure Monitoring

Deploy an OpenTelemetry Collector (often the contrib build) to gather host metrics like CPU, memory, disk, and network I/O. This adds context so you can see whether HAProxy slowdowns are caused by resource exhaustion. See the infrastructure monitoring guide for details.
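For the host metrics described here, a Collector configuration could be sketched as follows, using the hostmetrics receiver from the contrib build. The collection interval and the selection of scrapers are illustrative choices, and the exporter endpoint is again a placeholder rather than a documented CubeAPM address.

```yaml
# Sketch only: interval, scraper selection, and endpoint are assumptions.
receivers:
  hostmetrics:
    collection_interval: 30s
    scrapers:
      cpu:
      memory:
      disk:
      network:
      filesystem:
      load:

exporters:
  otlphttp:
    endpoint: "http://cubeapm.example.internal:4318"  # hypothetical endpoint

service:
  pipelines:
    metrics:
      receivers: [hostmetrics]
      exporters: [otlphttp]
```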

Step 6: Ingest HAProxy Logs

Configure HAProxy to ship logs (via syslog, optionally with a custom log format) to a pipeline like Fluentd, Vector, or an OpenTelemetry Collector with a syslog receiver. Forward those logs into CubeAPM to correlate traffic anomalies with detailed request/response logs. See the logs ingestion guide for details.
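One way to wire this up with an OpenTelemetry Collector (contrib build) is sketched below, using its syslog receiver. It assumes HAProxy is configured to send logs to the same address, for example with log 127.0.0.1:54526 local0 in its global section; the port is an arbitrary choice and the exporter endpoint is a placeholder, not a documented CubeAPM address.

```yaml
# Sketch only: listen address and exporter endpoint are placeholders.
receivers:
  syslog:
    udp:
      listen_address: "127.0.0.1:54526"  # must match HAProxy's log target
    protocol: rfc3164                     # HAProxy's default syslog format

exporters:
  otlphttp:
    endpoint: "http://cubeapm.example.internal:4318"  # hypothetical endpoint

service:
  pipelines:
    logs:
      receivers: [syslog]
      exporters: [otlphttp]
```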

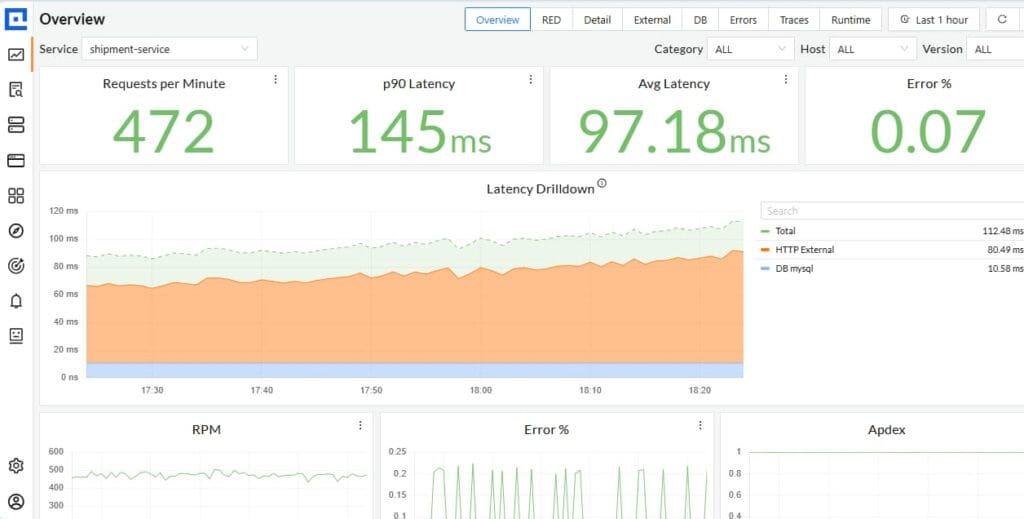

Step 7: Build Dashboards in CubeAPM

Use CubeAPM’s UI to create HAProxy dashboards. Add charts for traffic (requests/s), error rates (503/504), queue times, latency phases (Tw/Tc/Tr), and backend health. Segment dashboards by HAProxy instance or cluster for faster troubleshooting.

Step 8: Configure Alerts & Notifications

Set up real-time alerting for critical signals like 5xx errors > 1%, queue time > 200ms, or two or more backends down. CubeAPM integrates with email, Slack, or Jira for instant notifications. See the alerting setup guide for details.

Step 9: Validate & Iterate

Simulate traffic spikes or disable a backend to confirm that dashboards and alerts fire correctly. Adjust thresholds and sampling policies to align with production behavior. Continue tuning as your traffic grows.

Real-World Example: Monitoring HAProxy in Cloud-Native Banking Infrastructure

Challenge

Form3 provides payment and banking infrastructure to financial institutions, where transaction reliability and uptime are mission-critical. Their HAProxy layer needed to manage millions of payment API calls daily, apply routing changes dynamically, and guarantee zero downtime during configuration updates. Standard HAProxy monitoring tools gave only surface-level visibility, making it hard to validate whether backend pools, health checks, and failover rules were functioning correctly under peak load.

Solution

Form3 adopted HAProxy Enterprise with the Data Plane API to automate and safely manage routing changes in Kubernetes. They combined this with continuous monitoring of HAProxy’s Prometheus metrics and logs, which provided visibility into traffic distribution, error rates, and health-check states. Integrating CubeAPM would allow teams to ingest these HAProxy metrics, correlate them with backend service traces, and catch anomalies in real time—whether it was queue time spikes, 503 responses, or failed API gateway health checks.

Fixes

- Implemented transactional config updates via the HAProxy Data Plane API to avoid partial misconfigurations.

- Used HAProxy’s native Prometheus metrics endpoint to track request rates, latency breakdowns, and backend server states.

- With CubeAPM, dashboards can display frontend vs. backend traffic splits, queue times, retries, and 5xx error ratios.

- Alert rules in CubeAPM can cover backend health flaps, rising 503/504 errors, and configuration push failures, triggering instant Slack/email notifications.

Result

Form3 reduced the risk of outages during routing updates, improved observability into the entire HAProxy data plane, and met strict payment uptime SLAs in regulated financial environments. Adding a unified view of metrics, logs, and traces, as CubeAPM provides, would give an operations team in this position further confidence in making dynamic routing changes while ensuring that HAProxy continues to deliver consistent, low-latency transaction processing.


Verification Checklist & Example Alerts for Monitoring HAProxy with CubeAPM

Verification Checklist

- HAProxy stats enabled: Ensure the HAProxy Prometheus endpoint (or stats socket) is enabled and accessible.

- Metrics ingested into CubeAPM: Confirm that HAProxy traffic, latency, and error metrics are visible in CubeAPM.

- Dashboards visible: Check CubeAPM dashboards for live charts on request rates, error codes, latency phases, and backend health.

- Alerting configured: Verify that alert rules are active and sending notifications through email, Slack, or Jira integrations.

Example Alerts in CubeAPM

1. Spike in 5xx errors

alert: HighHAProxy5xxErrors
expr: |
  sum(rate(haproxy_frontend_http_responses_total{code=~"5.."}[5m]))
    / sum(rate(haproxy_frontend_http_responses_total[5m])) > 0.01
for: 5m
labels:
  severity: critical
annotations:
  summary: "HAProxy 5xx error rate above 1%"
  description: "More than 1% of HAProxy responses were 5xx over the last 5 minutes."

2. Queue time >200ms

alert: HighHAProxyQueueTime
expr: avg_over_time(haproxy_server_queue_time_average_seconds[5m]) > 0.2
for: 5m
labels:
  severity: warning
annotations:
  summary: "HAProxy queue time above 200ms"
  description: "Average HAProxy queue time has been above 200ms for the last 5 minutes."

3. Two or more backends failing simultaneously

alert: MultipleHAProxyBackendsDown
expr: count(haproxy_server_status{state="DOWN"} == 1) >= 2
for: 2m
labels:
  severity: critical
annotations:
  summary: "Multiple HAProxy backends are DOWN"
  description: "Two or more backend servers are failing health checks."

Conclusion

Monitoring HAProxy is essential for any business that depends on reliable traffic distribution, high availability, and low-latency performance. With HAProxy acting as the gateway to critical services, even small issues in queue times, backend health, or error rates can quickly cascade into costly outages.

CubeAPM makes HAProxy monitoring seamless by unifying metrics, logs, and traces in one platform. From queue time analysis to backend health checks, CubeAPM offers real-time dashboards, smart sampling, and cost-efficient retention that scale with modern infrastructures.

By adopting CubeAPM, organizations gain deep visibility into HAProxy performance, reduce downtime, and ensure customer-facing services remain resilient. Start monitoring HAProxy with CubeAPM today and experience observability that is fast, reliable, and built for scale.

Disclaimer: The information in this article reflects the latest details available at the time of publication and may change as technologies and products evolve.

FAQs

1. Can HAProxy monitoring be done without third-party tools?

Yes, HAProxy provides a built-in stats page and socket interface that expose traffic, session, and error metrics. However, these are limited for large-scale environments, which is why most teams integrate HAProxy with observability platforms like CubeAPM or Prometheus for real-time analysis and alerting.

2. How do I enable HAProxy’s native Prometheus metrics endpoint?

Starting with HAProxy 2.0, you can enable a Prometheus endpoint by adding http-request use-service prometheus-exporter if { path /metrics } to your frontend configuration. This makes metrics scrapeable directly without using a separate exporter.

3. Which logs are most valuable when monitoring HAProxy?

Custom syslog logs with capture fields (e.g., request headers, latency, backend server name) provide the most context. These logs are essential during incident investigations, as they correlate with metrics to pinpoint where failures occur.

4. Is it possible to monitor SSL/TLS performance in HAProxy?

Yes, HAProxy exposes SSL/TLS statistics such as handshake times, renegotiations, and failed verifications. Monitoring these helps identify certificate misconfigurations, handshake delays, or security anomalies.

5. How often should I review HAProxy monitoring dashboards?

Dashboards should be reviewed continuously during high-traffic events and at least weekly during normal operations. Regular review ensures baselines are understood, anomalies are detected early, and alert thresholds remain relevant to evolving workloads.