Monitoring Kubernetes ETCD is critical for cluster reliability. As of September 2025, over 40% of Kubernetes outages stem from control-plane failures, with ETCD at the core of latency spikes, quorum loss, and disk I/O bottlenecks. Early warning from ETCD metrics can prevent cascading downtime.

Yet, many teams find monitoring Kubernetes ETCD painful. Issues like slow commits or frequent leader elections appear subtle but cause 30–50% slower API responses under load. Left unchecked, these degrade deployments and hurt user experience.

CubeAPM is the best solution for monitoring Kubernetes ETCD. It unifies metrics, logs, and error tracing with prebuilt dashboards and smart alerts. Teams can quickly detect anomalies in leader elections, disk latency, or failed proposals while reducing monitoring costs. Let’s explore what ETCD is, key metrics, and how CubeAPM enables reliable ETCD monitoring.

What is Kubernetes ETCD?

Kubernetes ETCD is a distributed, strongly consistent key-value store that acts as the brain of a Kubernetes cluster. It stores all cluster data, including API objects, configuration details, secrets, service discovery information, and the desired state of workloads. Every kubectl command or API request goes through the API server, which reads from or writes to ETCD (sometimes via its watch cache), making ETCD the single source of truth for the Kubernetes control plane.

For businesses, monitoring ETCD is critical because it directly impacts cluster stability and performance:

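- Cluster availability: If ETCD loses quorum, it stops accepting writes, so control plane operations such as scheduling, scaling, and deployments stall even though already-running pods keep running.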

- Performance at scale: Slow ETCD writes cause API latency, delaying pod scheduling and autoscaling decisions.

- Data integrity & compliance: Secure snapshots and consistent state storage ensure reliable rollbacks and support regulatory needs.

- Operational efficiency: Healthy ETCD prevents costly outages, helping teams meet SLAs and reduce mean time to recovery (MTTR).

Example: ETCD in E-Commerce Scaling

Imagine an e-commerce platform scaling pods during a holiday sale. When ETCD is healthy, API requests to spin up new checkout pods happen in milliseconds, keeping checkout smooth for thousands of customers. But if ETCD commit latency spikes, autoscaling slows, causing delayed deployments, higher cart abandonment, and revenue loss. Monitoring ETCD ensures those spikes are caught and fixed before they hit business KPIs.

Why is Monitoring Kubernetes ETCD Important?

Preserve control-plane availability (quorum & leader health)

Kubernetes relies on etcd for every API call, so quorum and leader stability directly determine cluster health. Metrics like etcd_server_has_leader and leader change counts reveal instability early. If the cluster is leaderless for more than a few seconds, the control plane can grind to a halt.

Keep latency and disk I/O in check before users feel it

etcd is extremely sensitive to disk and network performance. Red Hat guidance notes that high disk latency directly increases Raft commit delays, often triggering leader re-elections. They recommend monitoring p99 peer latency and provisioning SSD/NVMe storage with ~500 sequential IOPS at ≤2 ms for heavy loads. If commit latency exceeds 500 ms at the 99th percentile, API server requests will quickly degrade.

Right-size cluster membership for stability

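Adding members doesn’t always improve resilience; it increases replication overhead. A cluster of n members needs a quorum of floor(n/2) + 1, so three members tolerate one failure, five tolerate two, and a sixth member adds replication cost without tolerating a third. Datadog highlights the trade-off: a five-member cluster is the common sweet spot (tolerates two failures), while going beyond seven often hurts performance. Monitoring replication latency and Raft proposal queues ensures your cluster design stays reliable as it scales.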

Control DB growth to prevent write-stops

etcd keeps MVCC revision history until it is compacted, and if the database grows unchecked it can hit the backend quota (--quota-backend-bytes, 2 GiB by default). At that point etcd raises a NOSPACE alarm and accepts only reads and deletes. AWS EKS guidance documents alarms triggered when etcd_mvcc_db_total_size_in_bytes grows too close to capacity. Monitoring DB growth alongside compaction and defragmentation jobs ensures storage remains healthy.

Backups & recovery are non-negotiable

Snapshots are lifelines during cluster failures. Red Hat and OpenShift documentation emphasize testing backup/restore workflows to avoid catastrophic data loss. Treat snapshot jobs like SLOs: track size, age, and success metrics so recovery is possible when you need it most.

Monitor certificates to avoid hidden outages

Control-plane components communicate with etcd using TLS. kubeadm-generated client certificates expire after one year, and unrotated etcd certs can make the API server unreachable. Monitoring certificate expiry dates and automating renewals prevents unexpected, self-inflicted outages.

Tie ETCD health to real business impact

The financial stakes are high: average IT downtime now costs $14,056 per minute (EMA, 2024). For enterprises running Kubernetes at scale, undetected etcd failures translate directly into lost revenue and SLA breaches, making proactive monitoring a board-level priority.

Key Metrics to Monitor for Kubernetes ETCD

Kubernetes ETCD is the database that holds your cluster’s entire state. If it slows down or breaks, the whole control plane feels the impact. That’s why monitoring the right metrics is critical. Below are the key categories of ETCD signals, explained in simple terms with practical thresholds.

Cluster Health & Leadership

These metrics confirm that ETCD always has a stable leader and can make decisions.

- etcd_server_has_leader: Tells you if the cluster currently has a leader. No leader means no writes. Alert if this is 0 for more than 30 seconds.

- etcd_server_is_leader: Shows whether a given node thinks it’s the leader. Only one should say “yes.” Alert if more than one claims leadership.

- etcd_server_leader_changes_seen_total: Counts how many times leadership changed. Too many changes = instability. Alert if more than 3 in 15 minutes.

Performance & Latency

These metrics measure how fast ETCD processes requests and communicates across nodes.

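- etcd_disk_backend_commit_duration_seconds: How long it takes to commit a batch of changes to the backend database on disk. Slow disk = slow cluster. Alert if the 99th percentile is above 500ms, and treat that as a hard ceiling: the etcd FAQ suggests p99 should stay under 25ms on healthy storage.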

- etcd_network_peer_round_trip_time_seconds: Time for messages to travel between ETCD members. Alert if p99 is over 50ms.

- etcd_server_proposals_pending: Number of pending changes waiting to be written. Backlogs point to stress. Alert if consistently over 100.

Reliability & Consensus

Consensus metrics confirm that ETCD’s Raft protocol is healthy and agrees on changes.

- etcd_server_proposals_failed_total: Counts failed proposals. Failures often mean misconfigurations or overloaded nodes. Alert if more than 1% fail within 5 minutes.

- etcd_server_proposals_committed_total: Successful proposals that got written. Sudden drops mean leadership or quorum problems. Alert if it dips below the normal baseline.

Storage & Database Growth

These metrics keep track of how much data ETCD is storing. Without monitoring, it can fill up and block writes.

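- etcd_mvcc_db_total_size_in_bytes: Total size of the database file on disk, including free pages not yet reclaimed by defragmentation. Alert if over 80% of the backend quota (etcd_server_quota_backend_bytes, 2 GiB by default), which is usually reached long before the disk itself fills up.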

- etcd_mvcc_db_total_size_in_use_in_bytes: Space logically in use after cleanup (compaction). If the gap between total size and in-use size keeps growing, you need defrag. Alert if gap >20% for days.

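- etcd_debugging_mvcc_keys_total: Number of stored key-value pairs. Spikes mean runaway objects. Alert if growth exceeds 30% in 24 hours. Metrics with the etcd_debugging prefix are implementation-dependent and may change between releases, so verify the name after upgrades.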

Backup & Maintenance

Backups and maintenance tasks keep ETCD recoverable and fast.

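- etcd_debugging_snap_save_total_duration_seconds: How long ETCD takes to save its internal Raft snapshots to disk. This is separate from etcdctl snapshot save backups, which need their own job-level monitoring. Slow saves = disk pressure and backup risks. Alert if snapshots take longer than 10 seconds.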

- etcd_disk_wal_fsync_duration_seconds: Time to fsync ETCD’s write-ahead log (WAL) to disk. High values = commit delays. Alert if p99 is above 50ms; the etcd FAQ suggests staying under 10ms on healthy storage.

For a full reference list, check the official ETCD metrics documentation.

Logs, Metrics, and Traces for Kubernetes ETCD Monitoring with CubeAPM

When it comes to monitoring Kubernetes ETCD, relying on just one type of signal isn’t enough. You need the full picture: metrics to measure performance, logs to explain why issues happen, and traces to show how they affect the rest of the system. CubeAPM brings all three together in a single observability platform, making ETCD monitoring both simpler and more effective.

Metrics: Real-time health and performance

CubeAPM ingests ETCD’s Prometheus metrics out of the box. This means you can track:

- Leadership stability (leader changes, quorum health)

- Latency and throughput (commit durations, peer round-trip times)

- Storage growth (database size, number of keys, fragmentation)

With CubeAPM dashboards, teams see not just spikes in metrics but also trends over time. For example, if commit latency climbs from 200ms to 600ms at the 99th percentile, you’ll see it correlated with API response slowdowns.

Logs: Context for every failure

Metrics show symptoms, but logs tell the story. CubeAPM centralizes ETCD logs, making it easy to search for warnings like:

- “failed to apply snapshot”

- “leader election timeout”

- “fsync took too long”

Smart sampling ensures you keep the most valuable log entries (errors, warnings, anomalies) without drowning in noise. This reduces costs by up to 60% compared to legacy APMs while still retaining the detail you need for root cause analysis.

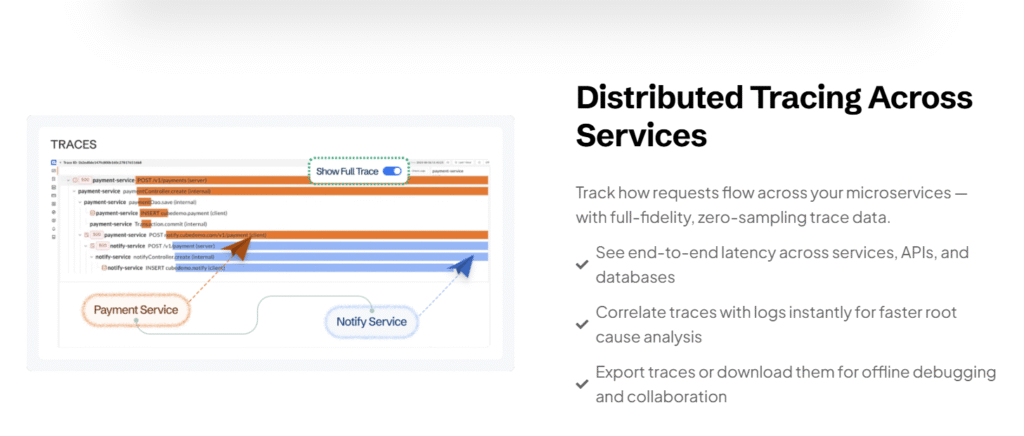

Traces: Connecting ETCD issues to cluster behavior

CubeAPM is OpenTelemetry-native, so traces connect ETCD bottlenecks with downstream impact. For example:

- A slow ETCD write → delayed API server response → pods stuck in Pending.

- Leader election flaps → cascading delays in scaling workloads.

By visualizing these traces, teams can pinpoint how an ETCD spike affects deployments, workloads, and end-user experience.

Why this matters for your business

Without correlation, teams waste hours piecing together metrics, logs, and events from separate tools. CubeAPM unifies them: when commit latency rises, you instantly see the corresponding log warnings and trace slowdowns. This cuts mean time to resolution (MTTR) dramatically and helps maintain uptime for customer-facing apps.

How to monitor Kubernetes ETCD with CubeAPM

To monitor Kubernetes ETCD with CubeAPM, you’ll set up metrics, logs, traces, and alerts using CubeAPM’s Kubernetes-infra support and OpenTelemetry. These are the steps, based on the official docs.

Step 1: Install CubeAPM and Infrastructure Monitoring

- Install CubeAPM on your infrastructure or cloud environment to receive data. This is foundational. (See Install CubeAPM for setup instructions.)

- Enable Kubernetes Infrastructure Monitoring using CubeAPM’s Infra Monitoring → Kubernetes section. Run the OpenTelemetry Collector as both a DaemonSet and a Deployment: the DaemonSet collects metrics and logs from each node, while the Deployment handles cluster-wide metrics, as described in the CubeAPM docs.

- For easier management, use the official Helm chart to configure receivers, exporters, and processors. This ensures metrics from ETCD’s Prometheus endpoint are scraped and sent to CubeAPM.

Step 2: Instrument ETCD using OpenTelemetry

- Enable ETCD metrics by exposing its /metrics endpoint. ETCD serves this natively on its client URL, and the --listen-metrics-urls flag can expose it on a separate address (for example, plain HTTP on localhost).

- Configure the OTel Collector to scrape ETCD’s metrics endpoint and forward them to CubeAPM via the OTLP exporter, as described in the CubeAPM docs.

- Logs ingestion: Collect ETCD logs (stdout/stderr or file logs) using the OTel Collector or Fluentd/Vector agents. Send them to CubeAPM’s logs pipeline for indexing and analysis, as described in the CubeAPM docs.
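
As a rough illustration of Step 2, the Collector configuration below scrapes ETCD metrics, tails ETCD container logs, and ships both over OTLP. It is a minimal sketch under several assumptions, not CubeAPM's official configuration: the metrics target assumes ETCD was started with --listen-metrics-urls on port 2381, the log path assumes the kubelet's usual /var/log/pods layout for a static ETCD pod, and the exporter endpoint is a hypothetical placeholder. The filelog receiver ships in the Collector contrib distribution.

```yaml
receivers:
  prometheus:
    config:
      scrape_configs:
        - job_name: etcd
          scrape_interval: 30s
          metrics_path: /metrics
          static_configs:
            # Assumption: plain-HTTP metrics exposed via --listen-metrics-urls
            - targets: ["127.0.0.1:2381"]
  filelog:
    include:
      # Assumption: static-pod logs live under /var/log/pods on the node
      - /var/log/pods/kube-system_etcd-*/etcd/*.log

processors:
  batch: {}

exporters:
  otlphttp:
    # Hypothetical address; replace with your CubeAPM OTLP endpoint
    endpoint: http://cubeapm.example.internal:4318

service:
  pipelines:
    metrics:
      receivers: [prometheus]
      processors: [batch]
      exporters: [otlphttp]
    logs:
      receivers: [filelog]
      processors: [batch]
      exporters: [otlphttp]
```

If ETCD only serves metrics on its TLS client port, the scrape job would also need scheme: https and a tls_config block pointing at a client certificate, key, and CA.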

Step 3: Configure CubeAPM UI, Dashboards, and Alerts

- In CubeAPM, open Infrastructure → Kubernetes to view dashboards for cluster, node, and ETCD metrics such as commit latency, peer round-trip time, and leader changes.

- Set up alert rules for ETCD metrics (e.g., high commit latency, quorum loss, DB size over limit) via Configure → Alerting. You can integrate with Email, Slack, or other channels, as explained in the CubeAPM docs.

- Use tracing support to correlate ETCD latency with API server performance or pod scheduling delays, giving you a full picture of how ETCD issues affect workloads.

Step 4: Monitor, Review, and Maintain

- Regularly review dashboards for long-term trends, such as steadily increasing DB size or higher write latency.

- Search logs in CubeAPM for ETCD warnings like “leader election timeout” or “fsync took too long” to catch problems early.

- Test alerting rules to ensure notifications reach your team in real time.

- Keep configurations up to date: rotate ETCD certificates, schedule snapshots, and upgrade ETCD versions as needed.

Real-world Example: Managing ETCD Latency Spikes During an E-commerce Sale with CubeAPM

The Challenge

A fast-growing e-commerce company ran Kubernetes clusters to handle seasonal sales. During a major holiday campaign, customers began reporting delays at checkout. The SRE team traced the issue to Kubernetes API latency, but the root cause was unclear. ETCD metrics showed growing commit latency and leader election instability, yet without a unified observability view, they struggled to confirm it.

The Solution

The team deployed CubeAPM’s Kubernetes infrastructure monitoring and configured the OpenTelemetry Collector to scrape ETCD metrics and logs. CubeAPM dashboards quickly correlated high etcd_disk_backend_commit_duration_seconds with bursts of leader changes and warnings in ETCD logs about disk fsync delays. Traces showed the latency ripple effect: slow ETCD writes → slower API server responses → delayed pod autoscaling during peak checkout demand.

The Fixes

- Migrated ETCD pods to nodes with faster NVMe-backed storage, cutting disk latency.

- Tuned compaction frequency to reduce database bloat and fragmentation.

- Added CubeAPM alerts for leader loss and high commit latency to catch early warning signs.

- Implemented automated log ingestion in CubeAPM to surface snapshot and fsync errors immediately.

The Result

After the fixes, ETCD commit latency dropped by 65% (from ~700ms to ~240ms at p99), and API response times improved by over 40% during peak loads. CubeAPM’s unified view helped the team reduce mean time to resolution (MTTR) by 55%, ensuring checkout remained fast and stable even under heavy seasonal traffic.

Verification Checklist & Example Alert Rules for CubeAPM K8s ETCD Monitoring

Before you ship to production, validate that signals, dashboards, and alerts for ETCD are truly actionable. Use the checklist below to confirm coverage, then apply the sample alert rules (Prometheus-style) as a starting point inside CubeAPM.

Verification Checklist

- Metrics ingestion: Confirm the OpenTelemetry/Prometheus pipeline is scraping ETCD /metrics from all control-plane nodes, and data is visible in CubeAPM charts.

- Leader visibility: Ensure panels show etcd_server_has_leader, current leader identity, and a trend of leader changes for the last 24–72 hours.

- Latency baselines: Establish p95/p99 baselines for commit latency and peer round-trip time during normal load, then record them in your runbook.

- Proposal health: Track pending/failed Raft proposals and verify a quiet baseline (near zero failures, low backlog).

- DB growth watch: Plot db_total_size vs in_use and confirm compaction/defrag jobs keep the gap reasonable over days/weeks.

- Log coverage: Stream ETCD logs into CubeAPM and verify search queries for “fsync”, “snapshot”, “election”, and “no space left” return recent events.

- Backup hygiene: Check that snapshot jobs run on schedule, produce artifacts of expected size, and are retained per policy.

- Alert routing: Verify email (and Slack if used) integrations in CubeAPM fire test alerts to the right channel and include runbook links.

- Certificate posture: Track ETCD and API-server client cert expiration dates; confirm a warning threshold (e.g., 30 days) exists.

- Runbook links: Add short “what to do next” notes to each alert so on-call engineers can act quickly.

Alert Rules

Cluster Health & Leadership

What to watch: Leader presence, stability of leadership over time, and quick detection of leader loss.

Goal: Never operate leaderless; minimize avoidable re-elections.

    - alert: EtcdNoLeader
      expr: etcd_server_has_leader == 0
      for: 30s
      labels:
        severity: critical
        team: platform
      annotations:
        summary: "ETCD cluster has no leader"
        description: "{{ $labels.instance }} has reported no leader for at least 30s; control plane may be write-blocked. Check peer connectivity and quorum."
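
The leadership metrics section also suggests alerting on more than 3 leader changes in 15 minutes. A rule for that could look like the sketch below; the group name and alert name are illustrative, and it assumes your alerting backend evaluates Prometheus-style rule files.

```yaml
groups:
  - name: etcd-leadership
    rules:
      - alert: EtcdFrequentLeaderChanges
        # increase() estimates how much the counter grew over the window
        expr: increase(etcd_server_leader_changes_seen_total[15m]) > 3
        for: 0m
        labels:
          severity: warning
        annotations:
          summary: "ETCD leader is changing frequently"
          description: "More than 3 leader changes in 15m seen by {{ $labels.instance }}. Check disk latency and peer network health."
```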

Performance & Latency

What to watch: Disk commit latency and peer RTT; both directly affect Raft and API responsiveness.

Goal: Keep p99 commit latency low (e.g., < 500ms) and inter-peer RTT tight (e.g., < 50ms).

    - alert: EtcdHighCommitLatencyP99
      expr: histogram_quantile(0.99, rate(etcd_disk_backend_commit_duration_seconds_bucket[5m])) > 0.5
      for: 5m
      labels:
        severity: critical
      annotations:
        summary: "High ETCD commit latency (p99)"
        description: "p99 commit latency > 500ms. Check disk IO (fsync), node pressure, and storage class performance."
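
Peer round-trip time is the other half of this category. The sketch below applies the 50ms p99 threshold mentioned earlier; the names are illustrative, and the threshold may need loosening for clusters whose members span availability zones.

```yaml
groups:
  - name: etcd-network
    rules:
      - alert: EtcdHighPeerRoundTripP99
        # One series per (member, peer) pair, so the alert names the slow link
        expr: histogram_quantile(0.99, rate(etcd_network_peer_round_trip_time_seconds_bucket[5m])) > 0.05
        for: 5m
        labels:
          severity: warning
        annotations:
          summary: "High ETCD peer round-trip time (p99)"
          description: "p99 peer RTT > 50ms from {{ $labels.instance }}. Check network latency and packet loss between members."
```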

Reliability & Consensus

What to watch: Raft proposal backlog and failures; drops in committed proposals indicate instability.

Goal: Keep failures near zero and prevent sustained backlogs.

    - alert: EtcdProposalBacklog
      expr: etcd_server_proposals_pending > 100
      for: 10m
      labels:
        severity: warning
      annotations:
        summary: "ETCD proposals piling up"
        description: "Pending proposals > 100 for 10m on {{ $labels.instance }}. Investigate CPU, disk, or network contention."
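
For proposal failures, one way to approximate the "more than 1% fail within 5 minutes" threshold is to compare the failure rate with the combined failed and committed rate on each member. This is a sketch with illustrative names, and the ratio is only an approximation because the two counters are not measured at the same point in the Raft pipeline.

```yaml
groups:
  - name: etcd-consensus
    rules:
      - alert: EtcdProposalFailureRatio
        # failed / (failed + committed) over the last 5 minutes, per member
        expr: |
          rate(etcd_server_proposals_failed_total[5m])
            / (rate(etcd_server_proposals_failed_total[5m])
               + rate(etcd_server_proposals_committed_total[5m]))
            > 0.01
        for: 5m
        labels:
          severity: warning
        annotations:
          summary: "ETCD proposals are failing"
          description: "More than 1% of proposals failed over 5m on {{ $labels.instance }}. Check for leader loss or overloaded members."
```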

Storage & Database Growth

What to watch: Total DB size vs. in-use size, key count growth, and fragmentation over time.

Goal: Avoid disk-full scenarios; keep compaction/defrag effective.

    - alert: EtcdDatabaseNearCapacity
      expr: (etcd_mvcc_db_total_size_in_bytes / etcd_server_quota_backend_bytes) > 0.80
      for: 15m
      labels:
        severity: warning
      annotations:
        summary: "ETCD database approaching backend quota"
        description: "DB > 80% of the backend quota on {{ $labels.instance }}. Run compaction/defrag or raise --quota-backend-bytes."
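
Fragmentation can be tracked as the share of the database file that is allocated but no longer in use. The sketch below assumes the in-use gauge is published as etcd_mvcc_db_total_size_in_use_in_bytes, and it uses a 24h hold as one reading of "for days"; the alert name is illustrative.

```yaml
groups:
  - name: etcd-storage
    rules:
      - alert: EtcdHighFragmentation
        # Share of the DB file that defragmentation could reclaim
        expr: |
          (etcd_mvcc_db_total_size_in_bytes - etcd_mvcc_db_total_size_in_use_in_bytes)
            / etcd_mvcc_db_total_size_in_bytes
            > 0.20
        for: 24h
        labels:
          severity: warning
        annotations:
          summary: "ETCD database is fragmented"
          description: "More than 20% of the DB file on {{ $labels.instance }} has been unused for a day. Schedule a defrag, one member at a time."
```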

Backups & Maintenance

What to watch: Snapshot success and duration; WAL fsync times, which often precede latency incidents.

Goal: Snapshots succeed on schedule, and WAL sync stays fast.

    - alert: EtcdSlowSnapshotSaves
      expr: histogram_quantile(0.99, rate(etcd_debugging_snap_save_total_duration_seconds_bucket[10m])) > 10
      for: 10m
      labels:
        severity: warning
      annotations:
        summary: "Slow ETCD snapshot saves"
        description: "p99 snapshot save > 10s. Verify storage throughput and snapshot window."
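
WAL fsync time is listed under "What to watch" for this category, so a companion rule is sketched here using the 50ms p99 threshold from the metrics section. The names are illustrative, and teams on fast NVMe storage may prefer a tighter threshold.

```yaml
groups:
  - name: etcd-disk
    rules:
      - alert: EtcdSlowWalFsyncP99
        expr: histogram_quantile(0.99, rate(etcd_disk_wal_fsync_duration_seconds_bucket[5m])) > 0.05
        for: 10m
        labels:
          severity: warning
        annotations:
          summary: "Slow ETCD WAL fsync (p99)"
          description: "p99 WAL fsync > 50ms on {{ $labels.instance }}. Check for noisy neighbours on the disk and storage class performance."
```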

Certificates & Security

What to watch: Certificate expiration for ETCD peers/clients; early notice prevents avoidable outages.

Goal: Renew certs well before expiry (e.g., 30 days).

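    - alert: EtcdCertExpiringSoon
      # Assumes a certificate exporter publishes etcd_cert_expiry_seconds as
      # seconds remaining until expiry; substitute your exporter's metric name.
      expr: etcd_cert_expiry_seconds < (30 * 24 * 3600)
      for: 0m
      labels:
        severity: warning
      annotations:
        summary: "ETCD certificate expires within 30 days"
        description: "Rotate ETCD and API-server client certs to avoid control-plane disruption."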

Conclusion

Monitoring Kubernetes ETCD is not just a best practice—it is essential for keeping clusters stable, performant, and reliable. Since ETCD is the single source of truth for Kubernetes, any slowdown or failure in this component quickly cascades into API delays, failed deployments, or even full control-plane outages.

With CubeAPM, teams get a complete view of ETCD health through unified metrics, logs, and traces. Smart alerting ensures you catch issues like leader loss, high commit latency, or database growth before they affect workloads. CubeAPM’s OpenTelemetry-native design makes setup seamless and cost-effective.

By monitoring Kubernetes ETCD with CubeAPM, you reduce downtime, speed up troubleshooting, and ensure a smooth experience for both developers and end-users. Start monitoring your Kubernetes ETCD with CubeAPM today and keep your clusters always healthy and resilient.

Disclaimer: The information in this article reflects the latest details available at the time of publication and may change as technologies and products evolve.

FAQs

1. What happens if Kubernetes ETCD is not monitored properly?

If ETCD is left unmonitored, small issues like slow disk writes or frequent leader elections can escalate into major outages. This often results in API server timeouts, pods stuck in Pending, failed deployments, and, in worst cases, a completely unresponsive control plane.

2. Can ETCD performance impact application availability?

Yes. ETCD performance directly affects Kubernetes API responsiveness. If commit latency increases, API requests slow down, delaying pod scheduling, autoscaling, and service discovery. This ripple effect quickly reaches customer-facing applications.

3. How often should I back up ETCD data?

Best practice is to take regular snapshots—daily or more frequently, depending on cluster activity. Snapshots ensure you can recover quickly from corruption, accidental deletion, or control-plane failures without losing critical state data.

4. Is ETCD monitoring useful in managed Kubernetes services like EKS or GKE?

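Yes, though with less direct access. Managed services still rely on ETCD under the hood, but the cloud provider operates it and usually exposes only a limited set of ETCD-related metrics and logs. Teams should monitor whatever the provider exposes, such as database size and API server request latency, to understand performance issues, tune workloads, and meet compliance requirements.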

5. What tools can integrate with ETCD monitoring besides CubeAPM?

Prometheus and Grafana can scrape ETCD metrics, and tools like Datadog or New Relic provide partial ETCD insights. However, CubeAPM offers the most comprehensive solution with unified metrics, logs, traces, and cost efficiency tailored for Kubernetes-native environments.