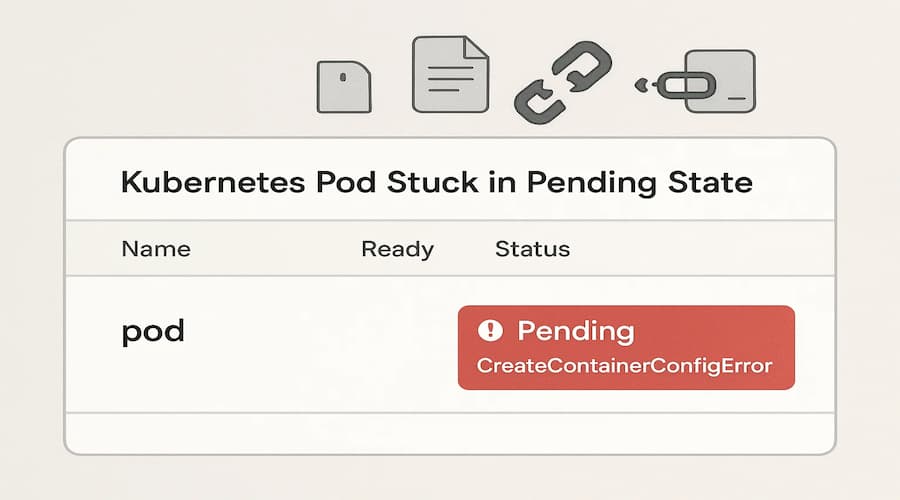

Kubernetes simplifies container deployment and scaling, but misconfigurations remain a serious risk. Researchers found over 900,000 misconfigured Kubernetes clusters exposed online, highlighting how common these issues are. Pods may fail before startup with errors like CreateContainerConfigError, where Kubernetes can’t build a container configuration due to invalid references or misconfigured settings.

This is where CubeAPM comes in. CubeAPM ingests Kubernetes events, Prometheus metrics, and application signals into a single place. It highlights Pods stuck in CreateContainerConfigError, alerts engineers in real time, and correlates failures with logs and traces. With CubeAPM, teams can debug faster, reduce downtime, and keep deployments reliable — all at predictable costs.

In this guide, we’ll cover what CreateContainerConfigError is, why it happens, how to fix it, and how to monitor it effectively using CubeAPM.

What is CreateContainerConfigError in Kubernetes

CreateContainerConfigError is a Pod waiting state that appears when Kubernetes fails to create a container’s configuration. It happens before the container runtime creates the container, which means the container never launches and no logs are generated. This makes it harder to troubleshoot than runtime errors like CrashLoopBackOff, because you have no application output to investigate.

The error typically occurs when the Pod specification points to something invalid or missing. Common triggers include references to a ConfigMap or Secret that doesn’t exist, a mismatched volume and volumeMount, or an invalid command or argument defined in the container spec. In all of these cases, Kubernetes can schedule the Pod, but it cannot assemble the container definition, so it marks the Pod with CreateContainerConfigError.

You can confirm the error by running kubectl describe pod <pod-name> and reviewing the Events section. Kubernetes usually provides a direct message that names the missing object or key, along the lines of configmap "app-config" not found or couldn't find key PORT in ConfigMap default/app-config. These clues are often enough to identify which reference in the Pod spec is incorrect and needs fixing.

Why CreateContainerConfigError in Kubernetes Happens

Kubernetes marks a Pod with CreateContainerConfigError when it cannot assemble a valid container specification. This usually comes down to misconfigured references or invalid fields in the Pod definition. Here are the most common causes:

1. Missing or mistyped ConfigMap key

If a Pod references a key that doesn’t exist in a ConfigMap, Kubernetes fails during container setup.

Example:

    env:
      - name: PORT
        valueFrom:
          configMapKeyRef:
            name: app-config
            key: PORT

If the PORT key is missing from app-config, the Pod will be stuck in CreateContainerConfigError.
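For comparison, here is a minimal sketch of a ConfigMap that would satisfy the reference above. The value 8080 is a placeholder chosen for illustration, and the ConfigMap is assumed to live in the same namespace as the Pod.

```yaml
# ConfigMap that satisfies the configMapKeyRef shown above.
# "8080" is a placeholder value, not taken from the original setup.
apiVersion: v1
kind: ConfigMap
metadata:
  name: app-config   # must match configMapKeyRef.name
data:
  PORT: "8080"       # key must match configMapKeyRef.key exactly (case-sensitive)
```

If the variable is genuinely optional, configMapKeyRef also accepts optional: true, which lets the container start even when the ConfigMap or key is absent.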

Quick check:

    kubectl get configmap app-config -o yaml

2. Missing or mistyped Secret key

A common mistake is pointing to a Secret that doesn’t exist or using the wrong key name.

Quick check:

    kubectl get secret db-secret -o yaml

If the error says “key not found in Secret”, you’ll need to fix the key or update the Pod spec.
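As an illustration, a Secret reference and a matching Secret might look like the sketch below. The variable name DB_PASSWORD, the key password, and the value are hypothetical; only the Secret name db-secret comes from the check above. The first document is a fragment of a container spec, not a complete manifest.

```yaml
# Fragment of a container spec: reads one key from a Secret.
# DB_PASSWORD and "password" are hypothetical names for this sketch.
env:
  - name: DB_PASSWORD
    valueFrom:
      secretKeyRef:
        name: db-secret   # Secret must exist in the Pod's namespace
        key: password     # must match a key stored in the Secret
---
# A Secret that satisfies the reference above.
apiVersion: v1
kind: Secret
metadata:
  name: db-secret
type: Opaque
stringData:
  password: "change-me"   # placeholder value
```

When you inspect the Secret with kubectl, the key appears under data with a base64-encoded value; it is the key name, not the value, that the Pod spec has to match.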

3. Invalid volume or volumeMount pairing

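Every volumeMount must reference a volume defined in the same Pod spec. If the names don’t align, or the mount path is invalid, the container cannot be configured. A name mismatch is often caught even earlier, when the API server validates the manifest, so check the output of kubectl apply as well.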

Quick check:

    kubectl describe pod <pod-name>

Look for messages like “invalid volumeMount” in the Events.
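A correctly paired volume and volumeMount can be sketched as follows. The Pod name, image, and mount path are placeholders; the point is only that the two name fields are identical.

```yaml
# Minimal Pod with a matching volume / volumeMount pair.
# Pod name, image, and mountPath are placeholders for this sketch.
apiVersion: v1
kind: Pod
metadata:
  name: volume-demo
spec:
  containers:
    - name: app
      image: nginx:1.27
      volumeMounts:
        - name: config         # must equal a volumes[].name below
          mountPath: /etc/app  # must be an absolute path
  volumes:
    - name: config             # same name as the volumeMount
      configMap:
        name: app-config       # ConfigMap must exist in the Pod's namespace
```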

4. Incorrect file permissions or defaultMode

When ConfigMaps or Secrets are mounted as volumes, a defaultMode outside the valid range of 0000 to 0777 (such as 8888) or a mismatched mode can block startup.

Fix by setting a valid octal mode such as 0644.

5. Bad command or args configuration

Improperly defined commands or args in the Pod spec can break validation. For example, a command of bash -c with empty args gives the shell nothing to run.

Fix by using a consistent format:

    command: ["sh","-c"]
    args: ["<your command>"]

6. Bad imagePullSecrets reference

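If the Pod references an imagePullSecret that doesn’t exist in the namespace, the kubelet cannot attach credentials. Depending on the cluster, this may surface as ErrImagePull or ImagePullBackOff instead, so check the waiting reason as well.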

Quick check:

    kubectl get secret -n <namespace>

7. Unsupported security context or runtime fields

Fields like runAsUser, Linux capabilities, or certain volume types may be invalid for the runtime or restricted by policies.

Review the Pod’s securityContext and cluster policies to confirm compatibility.
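One securityContext case that commonly produces this state is runAsNonRoot: true on an image that would run as root; the kubelet then refuses to start the container and reports that the image will run as root. The fragment below is a sketch of a compatible setting, assuming the image can run as an arbitrary non-root UID. The UID 1000 is an example value, not a requirement.

```yaml
# Fragment of a Pod spec: non-root settings that are consistent with each other.
# <your-image> is a placeholder; 1000 is an example UID.
spec:
  containers:
    - name: app
      image: <your-image>
      securityContext:
        runAsNonRoot: true              # kubelet will not start the container as UID 0
        runAsUser: 1000                 # explicit non-root UID so the check can pass
        allowPrivilegeEscalation: false
```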

How to Fix Kubernetes CreateContainerConfigError

The fastest way to resolve this error is to inspect the Pod events, identify the broken reference, and correct the Pod spec. Here’s a structured approach:

1. Describe the Pod for exact error details

The first step is to see what Kubernetes is reporting. The Events section almost always reveals the root cause, like “configmap not found” or “invalid volumeMount.”

    kubectl describe pod <pod-name> -n <namespace>

2. Validate ConfigMaps and Secrets

If a Pod references a missing or mistyped key, the container config fails. Always confirm that the object exists and contains the right data.

    kubectl get configmap <name> -n <namespace> -o yaml
    kubectl get secret <name> -n <namespace> -o yaml

3. Confirm volumes and mounts

Each volumeMounts[].name must match a volumes[].name. A simple typo is enough to block container creation.

    kubectl describe pod <pod-name>

4. Check file modes on ConfigMap/Secret volumes

When mounting ConfigMaps or Secrets, invalid octal values like 8888 cause instant validation errors. Stick to proper modes such as 0644.

    volumes:
      - name: config
        configMap:
          name: app-config
          defaultMode: 0644

5. Fix container commands and args

Misconfigured command and args are another common trigger. Use a safe pattern so Kubernetes can validate the spec properly.

    command: ["sh","-c"]
    args: ["<your command>"]

6. Validate imagePullSecrets

If the Pod references an imagePullSecret that doesn’t exist in the namespace, the config will fail. Always confirm the Secret name matches.

    kubectl get secret -n <namespace>

7. Review security context settings

Unsupported values for runAsUser, restricted capabilities, or disallowed volumes can block creation. Adjust them according to cluster policies.

    securityContext:
      runAsUser: 1000
      allowPrivilegeEscalation: false

8. Redeploy and verify

After fixing the configuration, restart the Pod or Deployment to confirm the error is resolved. Watch the status to ensure it transitions to Running.

    kubectl rollout restart deploy/<name> -n <namespace>
    kubectl get pods -w

Monitoring CreateContainerConfigError with CubeAPM

Fixing a CreateContainerConfigError once is fine, but preventing it from blocking rollouts again is even better. That’s where monitoring comes in. CubeAPM gives Kubernetes teams a complete view of pod configuration errors, image pulls, and rollout health in one platform, helping them detect issues early and resolve them before users feel the impact.

Here’s how CubeAPM makes CreateContainerConfigError easier to handle:

1. Make sure CubeAPM is running (Helm)

Install CubeAPM in your cluster using Helm. This ensures the backend is ready to ingest Kubernetes metrics, events, and logs.

    helm repo add cubeapm https://charts.cubeapm.com
    helm repo update cubeapm
    helm show values cubeapm/cubeapm > values.yaml
    # edit values.yaml as needed
    helm install cubeapm cubeapm/cubeapm -f values.yaml

📖 See: CubeAPM Installation Guide

2. Install the OpenTelemetry Collector

Run the Collector as both a DaemonSet (for node and pod-level signals) and a Deployment (for cluster-wide metrics and events). This ensures all Kubernetes error states are captured.

    helm repo add open-telemetry https://open-telemetry.github.io/opentelemetry-helm-charts
    helm repo update open-telemetry
    # values.yaml here must be written for the Collector chart, not the CubeAPM file from step 1
    helm install otel-collector open-telemetry/opentelemetry-collector -f values.yaml

📖 See: OpenTelemetry Collector Setup
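As a rough sketch, the values file for the Deployment-mode Collector could start from something like the following. It assumes a recent version of the opentelemetry-collector chart, where mode and image.repository are set explicitly and presets are available; confirm the exact keys with helm show values open-telemetry/opentelemetry-collector before relying on them.

```yaml
# Hypothetical values file for the cluster-level Collector (Deployment mode).
# Key names assume a recent opentelemetry-collector chart; verify against your chart version.
mode: deployment
replicaCount: 1                # one replica avoids duplicate cluster-wide events
image:
  repository: otel/opentelemetry-collector-contrib   # contrib image ships the Kubernetes components
presets:
  kubernetesEvents:
    enabled: true              # collect Kubernetes events
  clusterMetrics:
    enabled: true              # collect cluster-level metrics
```

A second release with mode: daemonset would cover the node and pod-level signals mentioned above; keeping the two in separate values files avoids mixing their settings.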

3. Enable Kubernetes metrics

CreateContainerConfigError shows up in the kube_pod_container_status_waiting_reason metric. Make sure the Collector scrapes kube-state-metrics.

    receivers:
      prometheus:
        config:
          scrape_configs:
            - job_name: 'kube-state-metrics'
              static_configs:
                - targets: ['kube-state-metrics.kube-system.svc.cluster.local:8080']

📖 See: Kubernetes Metrics Integration

4. Ingest Kubernetes events

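Events provide descriptive messages like “configmap not found” or “secret key missing”. Configure the Collector to forward them into CubeAPM. The k8sattributes processor shown here tags telemetry with the namespace, Pod, and container names so each event can be tied to the failing workload; the events themselves must come from an events-capable receiver in the same pipeline.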

    processors:
      k8sattributes:
        extract:
          metadata:
            - k8s.namespace.name
            - k8s.pod.name
            - k8s.container.name

📖 See: Kubernetes Events Integration
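The processor above only enriches data that is already flowing through the Collector. One way to actually collect the events, sketched here under the assumption that the contrib distribution of the Collector is in use, is the k8sobjects receiver in watch mode, wired into a logs pipeline. This is a fragment to merge into the existing configuration, and the otlp exporter name refers to whichever exporter you have defined.

```yaml
# Fragment: watch Kubernetes events and send them through a logs pipeline.
# Assumes the contrib Collector image, which includes the k8sobjects receiver.
receivers:
  k8sobjects:
    objects:
      - name: events
        mode: watch              # stream new events as they occur
        group: events.k8s.io

service:
  pipelines:
    logs:
      receivers: [k8sobjects]
      processors: [k8sattributes]
      exporters: [otlp]          # assumes an exporter named otlp is defined
```

The Collector's service account also needs RBAC permission to list and watch events, otherwise the receiver starts but delivers nothing.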

5. Stream data into CubeAPM

Use the OTLP exporter to send metrics and events into CubeAPM for storage and visualization.

    exporters:
      otlp:
        endpoint: https://ingest.cubeapm.com:4317
        headers:
          authorization: "Bearer ${CUBEAPM_API_TOKEN}"

📖 See: OTLP Exporter Setup
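An exporter only takes effect once a pipeline references it. A minimal sketch of the metrics wiring, assuming the prometheus receiver from step 3 and the otlp exporter above keep those names, might look like this fragment:

```yaml
# Fragment: connect the kube-state-metrics scrape to the CubeAPM exporter.
# Merge into the existing service section rather than adding a second one.
service:
  pipelines:
    metrics:
      receivers: [prometheus]   # kube-state-metrics scrape from step 3
      exporters: [otlp]         # OTLP exporter defined above
```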

6. Build dashboards

Create a CubeAPM dashboard widget filtered on:

    reason="CreateContainerConfigError"

This makes it easy to track blocked Pods, how long they’ve been stuck, and whether the problem is limited or cluster-wide.

📖 See: CubeAPM Dashboards

7. Create alerts and route notifications

Define alerts that trigger if Pods remain in CreateContainerConfigError for too long, then route them to your on-call system. CubeAPM supports Slack, PagerDuty, Opsgenie, and generic webhooks.

    - alert: ContainerCreateContainerConfigError
      expr: kube_pod_container_status_waiting_reason{reason="CreateContainerConfigError"} == 1
      for: 2m
      labels:
        severity: critical
      annotations:
        description: "Pod {{ $labels.pod }} in {{ $labels.namespace }} is stuck in CreateContainerConfigError."

Example Alert Rules

1. CreateContainerConfigError Alert

A Pod that fails with CreateContainerConfigError is stuck before startup, which means no logs or traces are generated. This alert fires if the condition lasts longer than 2 minutes, giving your team a clear signal that a rollout is blocked due to misconfigured ConfigMaps, Secrets, or volume mounts.

    - alert: ContainerCreateContainerConfigError
      expr: |
        max by (namespace, pod, container) (
          kube_pod_container_status_waiting_reason{reason="CreateContainerConfigError"} == 1
        )
      for: 2m
      labels:
        severity: critical
      annotations:
        summary: "CreateContainerConfigError in {{ $labels.namespace }}/{{ $labels.pod }} ({{ $labels.container }})"
        description: "Container config failed. Check ConfigMap/Secret refs and volumeMounts."

2. Missing ConfigMap or Secret (Events/Logs Alert)

The specific cause behind CreateContainerConfigError is usually spelled out in Kubernetes Events (e.g., “configmap not found”, “key does not exist”, “secret not found”). Use a log/event alert in CubeAPM to catch these human-readable messages quickly—even when the container never starts and app logs don’t exist.

    # CubeAPM log/event alert (conceptual example -- configure in CubeAPM's UI)
    # Query: message matches "configmap .* not found" OR message matches "secret .* not found"
    #        (the object name usually sits between the two words, so a plain substring match can miss it)
    # Scope: namespace =~ "prod|production" (optional: reduce noise)
    # Window: at least 1 match within 5m
    # Action: route to Slack/PagerDuty/Opsgenie

Conclusion

CreateContainerConfigError blocks containers before they ever start, so you won’t have app logs or traces to lean on. The fastest path is: read the Pod Events, fix the broken reference or invalid field, and redeploy. With CubeAPM watching kube-state-metrics and Kubernetes Events, you get instant visibility, proactive alerts, and a single place to correlate failures with recent deploys—so rollouts stay on track and downtime stays off your status page.

FAQs

1) Why can’t I see container logs for CreateContainerConfigError?

Because the container never starts—this state occurs during config validation. Check kubectl describe pod <pod> Events and your CubeAPM Events stream for the exact message.

2) What’s the most common cause of CreateContainerConfigError?

Misnamed or missing ConfigMap/Secret references, followed by mismatched volume/volumeMount names. Fix the reference or key and redeploy.

3) How do I distinguish CreateContainerConfigError from image-pull issues?

CreateContainerConfigError is a config failure; image-pull problems show up as ErrImagePull or ImagePullBackOff. Your dashboard should filter by reason so you can see each failure class clearly.

4) Can CubeAPM alert me even if the container never started?

Yes. Use the metric rule on kube_pod_container_status_waiting_reason{reason="CreateContainerConfigError"} and a log/event alert to match messages like “configmap not found.”

5) What’s a good starting threshold for alerting?

Two minutes is a practical default to avoid noise during brief scheduling churn. Tighten or relax it per namespace (e.g., stricter in prod, looser in dev).