Monitoring Kubernetes nodes is becoming non-negotiable: as of mid-2025, many enterprises run more than 1,000 nodes across multiple clusters and cloud environments, which raises the stakes for node health. Every minute a node is down or under pressure (CPU, memory, disk) can cascade into degraded performance, service disruption, and inflated cloud bills.

Many teams today still suffer from blind spots: delayed alerts, tools that only observe pods or services, not the underlying node issues, and piles of uncorrelated logs that make root cause analysis slow. In complex clusters, a kubelet crash or disk pressure may go unnoticed until downstream services fail, costing hours of diagnostics and impacting business SLAs.

CubeAPM is the best solution for monitoring Kubernetes nodes with unified observability, real-time metrics, log aggregation, error tracing, and event insights, all tailored for node health. With CubeAPM, you can track node conditions like Ready, DiskPressure, MemoryPressure; collect kubelet and system logs; detect anomalies; and receive intelligent alerts.

In this article, we’re going to cover what Kubernetes nodes are, why monitoring Kubernetes nodes is important, key metrics to watch, and Kubernetes nodes monitoring with CubeAPM.

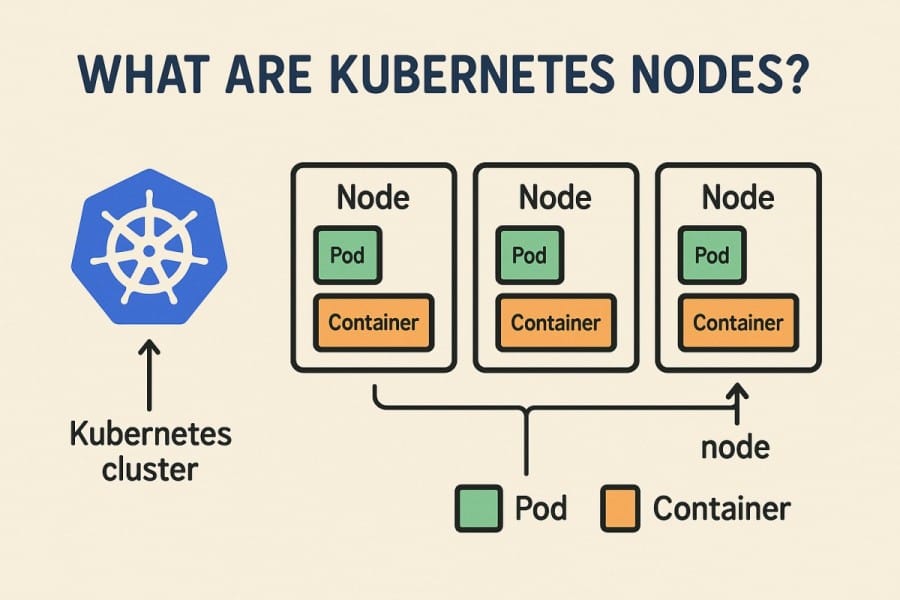

What Are Kubernetes Nodes?

A Kubernetes node is a worker machine—virtual or physical—that runs pods, manages container runtimes, and communicates with the control plane. Each node reports critical resource availability (CPU, memory, disk, and network) as well as health conditions (Ready, DiskPressure, MemoryPressure).

Nodes come in two types:

- Control plane nodes: handle scheduling, cluster state, and API operations

- Worker nodes: host and execute workloads assigned by the scheduler

If a node becomes NotReady, Kubernetes will try to reschedule workloads, but this often leads to performance degradation, pod evictions, and user-visible downtime.

Benefits: For businesses today, nodes are the backbone of application availability and scalability. Their benefits include:

- Elastic scaling: Nodes allow clusters to grow or shrink automatically with demand, reducing over-provisioning.

- High availability: Workloads can shift across nodes to maintain uptime even when one fails.

- Cost efficiency: By monitoring node health and utilization, teams can right-size infrastructure and control cloud bills.

- Security & compliance: Nodes enforce isolation and provide visibility into workloads, helping meet industry regulations.

Example: Monitoring E-commerce Checkout Nodes

An online retailer running thousands of transactions per minute depends on healthy nodes to handle checkout requests. If even one node hits memory pressure and evicts pods, customers can face failed orders. By monitoring node conditions and resource usage, the business ensures seamless transactions, prevents revenue loss, and maintains customer trust.

Why Monitoring Kubernetes Nodes Is Critical

Node health is directly tied to uptime and SLAs

When a node slips into NotReady, DiskPressure, or MemoryPressure, Kubernetes taints it so the scheduler stops placing new pods there, and existing pods can be evicted. This triggers reschedules and cold starts that impact both latency and availability. As clusters scale, a single unhealthy node can put SLAs at risk if not caught early.

Node-level metrics give the earliest warning

Issues like kubelet restarts, CPU throttling, or a full disk rarely show up in service dashboards until downstream pods start failing. Monitoring kubelet logs, node exporter data, and daemonset status provides early signals before these problems cascade. Even hyperscalers are prioritizing this: in April 2025, Amazon EKS introduced node monitoring and auto-repair, underscoring that node health is now a first-class reliability concern.

Downtime from node failures is costly

Industry research puts the cost of a single hour of downtime above $300,000 for 90% of mid-to-large enterprises (ITIC 2024). Node-level failures, whether a NotReady state, kernel panic, or networking drop, are a frequent root cause of unplanned downtime in Kubernetes clusters. Proactive node monitoring shortens MTTR by helping engineers quickly pinpoint the failing machine, reducing financial and reputational losses.

Evictions and reschedules create operational risks

When nodes experience memory or disk pressure, the kubelet evicts pods to keep the node stable. These node-pressure evictions do not honor Pod Disruption Budgets (PDBs), which only protect against voluntary disruptions such as drains, so they can unintentionally wipe out critical replicas. Monitoring node pressure conditions alongside eviction events helps keep services resilient and preserves the user experience.

Capacity planning starts at the node level

Node telemetry—CPU/memory usage, pod density, disk I/O, and network throughput—is the foundation for right-sizing clusters. Without it, teams often over-provision, inflating cloud bills. More adoption means more nodes, and more cost-saving opportunities when they are monitored effectively.

Reliability during upgrades and maintenance

Rolling out OS patches, kernel upgrades, or CNI updates can destabilize nodes long before services show issues. Many community post-mortems cite node-level misconfigurations as the source of outages during upgrades. Tracking node readiness, taints, and kubelet performance during maintenance helps avoid unplanned downtime.

Security and compliance depend on node visibility

The NSA/CISA Kubernetes Hardening Guide emphasizes monitoring nodes and their services as a baseline security control. Nodes are often the entry point for misconfigured workloads or kernel vulnerabilities. By watching for unexpected daemons, system log anomalies, and drift in node configurations, organizations strengthen compliance with regulatory frameworks.

Key Metrics for Kubernetes Node Monitoring

CPU & Processing Power

CPU is the first bottleneck for nodes. If the compute runs hot, pods slow down, and autoscaling kicks in too late.

- CPU utilization: Shows how much of the node’s compute is actively used. Sustained high usage signals the need for scaling or load redistribution, as bottlenecks here affect every pod on the node. Alert if CPU > 90% for 5 minutes.

- CPU throttling: Indicates when workloads are forced to run slower because of resource limits, often causing lag in critical services. Prolonged throttling highlights under-provisioned clusters or overly strict limits. Alert if throttling > 10% of total CPU time.

- Load averages: Highlight whether a node’s processor is handling more work than it can keep up with. Elevated load averages often point to scheduling queues growing longer. Alert if load > 2× number of cores for 5 minutes.
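
As a rough illustration of the CPU thresholds above, the Python sketch below derives utilization from two samples of cumulative per-mode CPU seconds (the shape that node_exporter's `node_cpu_seconds_total` exposes) and applies the load-average rule. The function names and sample values are hypothetical, and the thresholds simply mirror the ones suggested in the list.

```python
def cpu_utilization(prev: dict[str, float], curr: dict[str, float]) -> float:
    """Fraction of CPU time spent non-idle between two cumulative samples."""
    deltas = {mode: curr[mode] - prev[mode] for mode in curr}
    total = sum(deltas.values())
    if total <= 0:
        return 0.0
    # Treat both idle and iowait as time the CPU was not doing work.
    idle = deltas.get("idle", 0.0) + deltas.get("iowait", 0.0)
    return 1.0 - idle / total


def load_too_high(load_avg: float, cores: int, factor: float = 2.0) -> bool:
    """True when the load average exceeds `factor` times the core count."""
    return load_avg > factor * cores


# Hypothetical samples taken 60 seconds apart on a 4-core node.
before = {"user": 1000.0, "system": 300.0, "iowait": 20.0, "idle": 5000.0}
after = {"user": 1180.0, "system": 340.0, "iowait": 22.0, "idle": 5018.0}

utilization = cpu_utilization(before, after)
print(f"CPU utilization: {utilization:.1%}")
print("CPU alert:", utilization > 0.90)
print("Load alert:", load_too_high(9.5, cores=4))
```

This only evaluates a single interval. A real alert would also require the condition to hold for the full 5 minutes before firing.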

Memory Usage & Pressure

Memory shortages lead directly to pod evictions. Monitoring memory ensures workloads stay healthy.

- Available memory: Tracks how much RAM is left for scheduling new pods. Drops here often precede pod evictions, which can disrupt stateful services like databases. Alert if free memory < 10%.

- Swap usage: Many clusters run with swap disabled, but where it is enabled, heavy reliance on swap slows performance and signals memory pressure. If swap grows consistently, it usually indicates memory leaks or runaway processes. Alert if swap > 500MB sustained.

- Memory pressure conditions: Kubernetes marks nodes with MemoryPressure when they cannot handle workloads. Ignoring this signal can lead to cascading failures. Alert if MemoryPressure = true for more than 2 minutes.
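
To make the memory thresholds above concrete, here is a minimal Python sketch that parses `/proc/meminfo`-style text and checks the 10% rule. The snapshot is hypothetical, and using `MemAvailable` rather than `MemFree` is an assumption of this example, not something the thresholds above prescribe.

```python
def parse_meminfo(text: str) -> dict[str, int]:
    """Parse /proc/meminfo-style text into {field: value in kB}."""
    fields = {}
    for line in text.splitlines():
        name, _, rest = line.partition(":")
        parts = rest.split()
        if parts:
            fields[name.strip()] = int(parts[0])
    return fields


def available_fraction(meminfo: dict[str, int]) -> float:
    """Share of RAM the kernel estimates is available without swapping."""
    return meminfo["MemAvailable"] / meminfo["MemTotal"]


# Hypothetical snapshot of a 16 GiB node; on a real Linux node the text
# could be read from /proc/meminfo instead.
sample = """MemTotal:       16777216 kB
MemFree:          524288 kB
MemAvailable:    1258291 kB
SwapTotal:             0 kB
SwapFree:              0 kB"""

info = parse_meminfo(sample)
fraction = available_fraction(info)
swap_used_kb = info["SwapTotal"] - info["SwapFree"]
print(f"available: {fraction:.1%}, swap used: {swap_used_kb} kB")
print("Memory alert:", fraction < 0.10)
```

`MemAvailable` accounts for reclaimable page cache, so it tends to be a less noisy signal than raw free memory.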

Disk Usage & Storage Health

Nodes rely on local storage for logs, images, and workloads. Disk exhaustion stalls deployments.

- Free disk space: Low disk capacity prevents new workloads from starting. Monitoring space avoids unexpected scheduling failures. Alert if disk is < 15% free.

- Inodes usage: Even with space left, nodes can fail if inodes (used to track files) are exhausted. This is especially important for logging-heavy applications. Alert if inode usage > 90%.

- Disk I/O activity: Slow reads/writes signal bottlenecks that degrade performance. High I/O wait times also slow upgrades and rollouts. Alert if disk I/O wait > 20% for 5 minutes.
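
The free-space and inode checks above can be approximated on a single machine with Python's standard `os.statvfs` call (Unix only). This is a sketch for spot-checking one mount point, not a replacement for an exporter, and the function name is hypothetical.

```python
import os


def disk_and_inode_usage(path: str = "/") -> tuple[float, float]:
    """Return (free space fraction, inode usage fraction) for a mount point."""
    st = os.statvfs(path)
    free_space = st.f_bavail / st.f_blocks if st.f_blocks else 1.0
    # Some filesystems report zero inodes; treat that as "not applicable".
    inode_usage = 1.0 - st.f_favail / st.f_files if st.f_files else 0.0
    return free_space, inode_usage


free_space, inode_usage = disk_and_inode_usage("/")
print(f"free space: {free_space:.1%}, inodes used: {inode_usage:.1%}")
if free_space < 0.15:
    print("ALERT: less than 15% disk space free")
if inode_usage > 0.90:
    print("ALERT: inode usage above 90%")
```

Checking both values matters because a volume full of small files can run out of inodes while still reporting plenty of free bytes.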

Networking & Connectivity

Network health at the node level keeps pods communicating and services discoverable.

- Bandwidth usage: Tracks incoming and outgoing traffic. Sudden spikes may point to noisy workloads or DDoS attempts, while drops may suggest outages. Alert if traffic > 2× baseline unexpectedly.

- Packet errors/drops: Highlight faulty NICs or misconfigurations that cause instability. Consistent drops degrade user experience at the application layer. Alert if the error rate > 1% for 2 minutes.

- DNS & connectivity checks: Ensure nodes can resolve and route traffic reliably across the cluster. DNS latency is often the earliest indicator of cluster-wide issues. Alert if DNS latency > 500ms.
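
The packet-error and bandwidth rules above boil down to simple arithmetic over interface counters. The Python sketch below assumes two samples of receive counters named after the `packets`, `errs`, and `drop` columns in `/proc/net/dev`. The sample numbers and function names are hypothetical.

```python
def error_rate(prev: dict[str, int], curr: dict[str, int]) -> float:
    """Errors plus drops as a fraction of packets seen between two samples."""
    packets = curr["packets"] - prev["packets"]
    bad = (curr["errs"] - prev["errs"]) + (curr["drop"] - prev["drop"])
    return bad / packets if packets > 0 else 0.0


def traffic_spike(current_bps: float, baseline_bps: float, factor: float = 2.0) -> bool:
    """True when current throughput exceeds `factor` times the baseline."""
    return baseline_bps > 0 and current_bps > factor * baseline_bps


# Hypothetical counter samples for one interface, taken two minutes apart.
earlier = {"packets": 1_000_000, "errs": 10, "drop": 40}
later = {"packets": 1_200_000, "errs": 1_510, "drop": 2_540}

rate = error_rate(earlier, later)
print(f"error/drop rate: {rate:.2%}")
print("Packet alert:", rate > 0.01)
print("Bandwidth alert:", traffic_spike(current_bps=250e6, baseline_bps=100e6))
```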

Node Conditions & Health Signals

Node conditions are built-in signals that Kubernetes uses to decide scheduling.

- Ready/NotReady: Shows if a node can accept pods. NotReady means workloads must move elsewhere, often triggering reschedules. Alert if NotReady for > 2 minutes.

- MemoryPressure, DiskPressure, PIDPressure: Indicate resource strain that leads to evictions. Monitoring these keeps planning realistic and avoids noisy evictions. Alert immediately if any = true.

- NetworkUnavailable: Signals when node networking is broken or misconfigured. This condition usually results in failed service discovery. Alert immediately if NetworkUnavailable = true.
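
As an illustration of how these conditions can be read programmatically, the Python sketch below scans the `status.conditions` list of a Node object and reports anything unhealthy. The node JSON is a trimmed, hypothetical example in the shape that `kubectl get node <name> -o json` returns, and the helper name is an assumption of this example.

```python
import json

# Conditions that are healthy when "False"; Ready is healthy when "True".
PRESSURE_CONDITIONS = {"MemoryPressure", "DiskPressure", "PIDPressure", "NetworkUnavailable"}


def unhealthy_conditions(node: dict) -> list[str]:
    """List condition types that indicate a problem on a Node object."""
    problems = []
    for cond in node.get("status", {}).get("conditions", []):
        ctype, status = cond["type"], cond["status"]
        if ctype == "Ready" and status != "True":
            problems.append(f"Ready={status}")
        elif ctype in PRESSURE_CONDITIONS and status == "True":
            problems.append(ctype)
    return problems


node_json = """
{"metadata": {"name": "worker-1"},
 "status": {"conditions": [
   {"type": "MemoryPressure", "status": "False"},
   {"type": "DiskPressure", "status": "True"},
   {"type": "PIDPressure", "status": "False"},
   {"type": "Ready", "status": "Unknown"}]}}
"""
node = json.loads(node_json)
print(node["metadata"]["name"], unhealthy_conditions(node))
```

Note that a Ready status of Unknown (for example, when the kubelet stops reporting) is treated the same as False here, since the node cannot be trusted to run pods in either case.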

Kubelet & System Services

The kubelet and system daemons are the “heartbeat” of a node.

- Kubelet logs & restarts: Frequent errors or restarts show instability in the agent. Early detection prevents cluster-wide disruption. Alert if kubelet restarts > 3 times in 10 minutes.

- Runtime operations: Container runtime (containerd/CRI-O) failures often manifest as pods stuck in ContainerCreating. Alert if runtime errors > 20 in 5 minutes.

- Proxy health: kube-proxy maintains the rules that route Service traffic on each node. When it crashes, those rules go stale and Service routing on the node can break. Alert if kube-proxy restarts > 3 times/hour.
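
Restart thresholds like "more than 3 times in 10 minutes" are sliding-window counts. A minimal Python sketch of that logic follows. The class name and timestamps are hypothetical, and in practice the restart events would come from your log or metrics pipeline.

```python
from collections import deque


class RestartWindow:
    """Counts restart events inside a sliding time window."""

    def __init__(self, window_seconds: float, max_restarts: int):
        self.window_seconds = window_seconds
        self.max_restarts = max_restarts
        self.events = deque()

    def record(self, timestamp: float) -> bool:
        """Record a restart; return True if the threshold is now exceeded."""
        self.events.append(timestamp)
        # Drop events that have aged out of the window.
        while self.events and self.events[0] <= timestamp - self.window_seconds:
            self.events.popleft()
        return len(self.events) > self.max_restarts


# Kubelet rule from the list above: more than 3 restarts within 10 minutes.
kubelet = RestartWindow(window_seconds=600, max_restarts=3)
results = [kubelet.record(t) for t in (0, 120, 300, 480)]
print(results)
```

The fourth restart lands inside the same 10-minute window as the first three, so only the last call reports a breach.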

Pod Density & Scheduling Efficiency

Balanced pod placement ensures efficient, cost-effective clusters.

- Pod counts per node: Too many pods cause contention; too few waste resources. Keeping density optimized supports autoscaling efficiency. Alert if pod count > 80% of the node’s max capacity.

- Eviction rates: High eviction counts point to overloaded nodes. Frequent evictions also suggest an imbalance across the cluster. Alert if > 5 evictions per node in 10 minutes.

- Allocatable vs used resources: Shows the gap between what’s available and what’s consumed. Tracking this prevents over-provisioning and wasted spend. Alert if CPU/memory usage > 90% of allocatable.
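
The density and allocatable checks above are ratio comparisons against what the node advertises in `status.allocatable`. The Python sketch below assumes a hypothetical node with `3920m` allocatable CPU and a 110-pod limit. The helper names and usage figures are illustrative only, and the CPU parser handles just the plain and millicore forms.

```python
def parse_cpu(quantity: str) -> float:
    """Convert a Kubernetes CPU quantity such as "3920m" or "4" to cores."""
    if quantity.endswith("m"):
        return int(quantity[:-1]) / 1000
    return float(quantity)


def ratio(used: float, capacity: float) -> float:
    """Used share of a capacity, or 0.0 when the capacity is unknown."""
    return used / capacity if capacity else 0.0


# Hypothetical values in the shape of a node's status.allocatable map.
allocatable = {"cpu": "3920m", "pods": "110"}
cpu_used = parse_cpu("3600m")
running_pods = 93

cpu_ratio = ratio(cpu_used, parse_cpu(allocatable["cpu"]))
pod_ratio = ratio(running_pods, int(allocatable["pods"]))
print(f"CPU: {cpu_ratio:.1%} of allocatable, pods: {pod_ratio:.1%} of max")
print("CPU alert:", cpu_ratio > 0.90)
print("Density alert:", pod_ratio > 0.80)
```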

Telemetry Collection, Error Tracking & Alerting with CubeAPM

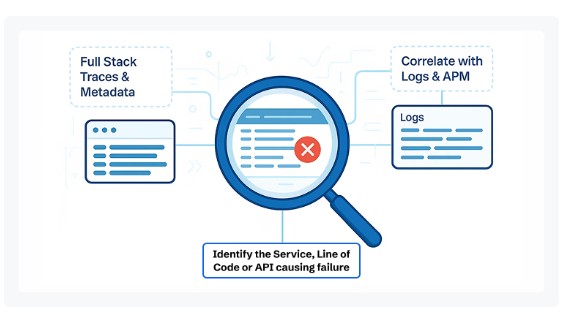

Unified telemetry: metrics, logs, traces—on one timeline

CubeAPM ingests Prometheus-class metrics (CPU, memory, disk, network, node conditions), Kubernetes events (cordon/drain, taints, evictions), kubelet & runtime logs, and distributed traces, then stitches them into a single, drill-down timeline. That means you can hop from a red NodeNotReady event → affected pods → exact kubelet errors → related trace slowdowns without leaving the product.

Built for Kubernetes nodes (not just apps)

Node-specific views highlight Ready/NotReady, Disk/Memory/PIDPressure, kubelet restarts, pod density, and NIC error spikes. Prebuilt dashboards and a guided UX shorten MTTR versus DIY stacks.

Real-time error & status tracking

CubeAPM ingests node and cluster events in real time, so flips like NotReady, SchedulingDisabled, or NetworkUnavailable surface immediately with context (last kubelet logs, top evicting pods, namespaces at risk). It also applies smart sampling to traces, keeping the most valuable error and latency traces while cutting noise, so engineers see what matters during spikes.

Intelligent alerting & anomaly detection

You can enable actionable alerts out of the box—CPU > 90% (5m), free disk < 15%, MemoryPressure=true (2m), DNS latency > 500 ms—and route them to Slack/Email with rich node context. CubeAPM learns baselines to flag anomalous inode consumption or NIC error bursts without paging you for normal traffic swings.

Open standards & fast setup

Deployment follows straightforward steps: install CubeAPM (Helm for K8s), ship infra metrics via OTel Collector, and configure through flags/env/values—no exotic agents required. OpenTelemetry and Prometheus compatibility are first-class, so you can keep existing exporters and dashboards while centralizing analytics in CubeAPM.

Compliance, data residency & on-prem options

If you need to meet compliance or data localization requirements, CubeAPM supports self-hosting/BYOC so telemetry stays inside your cloud, avoiding public egress and cross-border storage.

Predictable costs at scale

Compared to legacy vendors, CubeAPM’s model (flat, transparent pricing; no surprise egress) routinely delivers 60%+ savings in like-for-like scenarios, while retaining high-value traces via Smart Sampling.

How to Monitor Kubernetes Nodes with CubeAPM

Monitoring Kubernetes nodes in CubeAPM involves a few clear steps—from installation to configuration, instrumentation, and setting up alerts. Below is a practical, documentation-based walkthrough with direct links to the official docs.

1. Install CubeAPM in Your Environment

The first step is to deploy CubeAPM in your infrastructure. You can install it via Helm charts or directly into your Kubernetes cluster. The installation guide provides instructions for setting up the core components and ensuring your CubeAPM backend is ready to receive telemetry.

If you’re running Kubernetes, there’s a tailored guide that walks through installing the CubeAPM Collector and agent as a DaemonSet so that each node’s metrics and logs are captured.

(Install on Kubernetes)

2. Configure CubeAPM for Node Monitoring

Once installed, CubeAPM needs to be configured to capture node-level telemetry. You’ll define how metrics, logs, and traces are ingested, stored, and visualized. The configuration docs detail settings for data pipelines, environment variables, and dashboards.

3. Instrument Kubernetes with OpenTelemetry

To capture detailed node and workload signals, CubeAPM integrates natively with OpenTelemetry. You’ll configure exporters to send kubelet, container runtime, and node-exporter metrics to CubeAPM. This ensures CPU, memory, disk, and network telemetry flows in seamlessly.

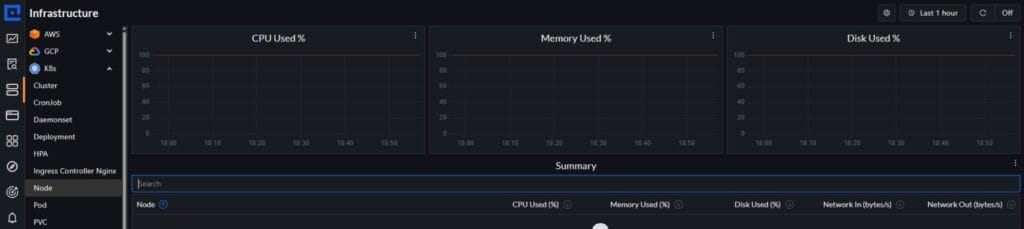

4. Collect Infrastructure Metrics

CubeAPM includes an Infrastructure Monitoring module that ingests node-level metrics like CPU, memory pressure, DiskPressure, and PIDPressure. This module provides ready-to-use dashboards for node health, pod density, and cluster resource efficiency.

5. Aggregate and Search Logs

Logs from kubelet, kube-proxy, and system services can be sent to CubeAPM using common agents such as Fluentd, Logstash, or Loki. CubeAPM indexes logs for full-text search, correlation with metrics, and stream-based organization.

6. Configure Alerts and Notifications

Finally, set up alert rules to proactively detect unhealthy nodes. For example, trigger alerts when a node is NotReady for more than 2 minutes or CPU usage stays above 90%. CubeAPM supports integrations with email, Slack, and other channels to route notifications.
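
Rules such as "NotReady for more than 2 minutes" depend on a condition holding continuously, which is what keeps brief flaps from paging anyone. The Python sketch below shows that hold-down logic in isolation. It is a generic illustration with hypothetical names and observations, not CubeAPM's alerting engine.

```python
class SustainedCondition:
    """Fires only after a condition has held continuously for `hold_seconds`."""

    def __init__(self, hold_seconds: float):
        self.hold_seconds = hold_seconds
        self.since = None  # timestamp when the condition first became true

    def update(self, active: bool, now: float) -> bool:
        """Feed one observation; return True when the alert should fire."""
        if not active:
            self.since = None  # any recovery resets the timer
            return False
        if self.since is None:
            self.since = now
        return now - self.since >= self.hold_seconds


# Hypothetical (timestamp in seconds, node is NotReady) observations.
observations = [(0, True), (60, True), (90, False), (100, True), (160, True), (220, True)]

not_ready = SustainedCondition(hold_seconds=120)
fired = [not_ready.update(active, now) for now, active in observations]
print(fired)
```

The brief recovery at 90 seconds resets the timer, so the alert fires only once the node has been NotReady for a full two minutes starting from the 100-second mark.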

Following these steps ensures that CubeAPM continuously tracks your Kubernetes nodes, surfacing metrics, logs, and events in real time, while triggering intelligent alerts to cut MTTR.

Real-World Example: Improving Node Reliability with CubeAPM

The Challenge

A fast-growing SaaS company was running over 200 Kubernetes nodes across multiple availability zones. Despite having monitoring in place, they faced frequent NodeNotReady events and MemoryPressure conditions that led to pod evictions and cascading service disruptions. Engineers often spent hours correlating node logs, pod evictions, and application latency to find the root cause. Customer-facing services, such as their real-time analytics API, experienced downtime at least twice a month, impacting SLAs and customer trust.

The Solution

The team deployed CubeAPM’s Kubernetes node monitoring by installing the CubeAPM Collector as a DaemonSet across all nodes. They enabled infrastructure monitoring for CPU, memory, disk, and network metrics, while also forwarding kubelet and system logs into CubeAPM’s log pipeline. Smart dashboards and anomaly detection helped them tie node pressure conditions directly to pod restarts and application slowdowns.

The Fixes Implemented

- Set proactive alerts: Alerts for NodeNotReady > 2m, DiskPressure, and eviction spikes were configured using CubeAPM’s alerting module.

- Node-level dashboards: Engineers monitored pod density per node, quickly identifying hotspots where scheduling was uneven.

- Log correlation: By streaming kubelet logs into CubeAPM, they pinpointed a recurring runtime bug that caused kubelet restarts on certain node types.

- Anomaly detection: CubeAPM flagged abnormal NIC error spikes in one AZ, which helped the team work with their cloud provider to fix a faulty network configuration.

The Result

Within three months, node-related incidents dropped by 70%. Mean time to resolution (MTTR) for node outages was cut from 2 hours to under 30 minutes. The business met its SLA targets consistently, while engineering teams regained confidence in rolling out new workloads at scale. Most importantly, CubeAPM replaced a fragmented toolchain with a single pane of glass for metrics, logs, and events, helping the team scale operations without scaling cost or complexity.

Verification Checklist & Example Alert Rules with CubeAPM’s K8s Node Monitoring

Verifying your Kubernetes node monitoring setup ensures you’re not just collecting data, but actively preventing downtime. With CubeAPM, you can validate that the right metrics, logs, and alerts are in place for reliable operations.

Verification Checklist

- All nodes report Ready status: Nodes should consistently show as Ready in CubeAPM dashboards. A NotReady node signals it can’t schedule workloads, often leading to pod reschedules or downtime.

- CPU and memory utilization within safe thresholds: Nodes running above 80% CPU or memory for long periods risk throttling or evictions. CubeAPM helps you track these metrics in real time and forecast when to scale.

- Disk usage and inode consumption monitored: Running out of disk space or inodes prevents pods from writing logs and files, causing crashes. Monitoring both prevents hidden failures from surfacing during high load.

- Kubelet and runtime logs ingested: The kubelet and container runtime (containerd/CRI-O) manage pod lifecycles. By shipping their logs into CubeAPM, you gain visibility into restarts, errors, or misconfigurations before they snowball.

- Pod density per node is balanced: Too many pods on one node cause contention, while underutilized nodes waste money. CubeAPM dashboards make it easy to spot hotspots and redistribute workloads.

- Eviction events tracked: Frequent pod evictions are a symptom of node stress—usually from memory, disk, or PID limits. Monitoring eviction trends with CubeAPM helps teams intervene before applications degrade.

- Network latency and packet errors collected: Packet drops or rising latency at the node level can break service-to-service communication. CubeAPM surfaces these metrics, helping teams catch network instability early.

- Alerts connected to notification channels: Even with dashboards, alerts are your safety net. Configuring CubeAPM to push notifications to Email or Slack ensures engineers are paged the moment node health degrades.

Example Alert Rules

Node Not Ready

    - alert: NodeNotReady
      # Comparing status="true" to 0 also catches nodes whose Ready condition is Unknown
      expr: kube_node_status_condition{condition="Ready",status="true"} == 0
      for: 2m
      labels:
        severity: critical
      annotations:
        description: "Node {{ $labels.node }} has been NotReady for over 2 minutes"

High CPU Utilization

    - alert: NodeCPUOverload
      # Recording rule from the node_exporter mixin; it is keyed by instance, not node
      expr: instance:node_cpu_utilisation:rate5m > 0.9
      for: 5m
      labels:
        severity: warning
      annotations:
        summary: "High CPU load detected on {{ $labels.instance }}"
        description: "The node {{ $labels.instance }} has sustained CPU usage above 90% for the past 5 minutes."

Disk Pressure

    - alert: NodeDiskPressure
      expr: kube_node_status_condition{condition="DiskPressure",status="true"} > 0
      for: 2m
      labels:
        severity: critical
      annotations:
        description: "Node {{ $labels.node }} is under DiskPressure"

Memory Pressure

    - alert: NodeMemoryPressure
      expr: kube_node_status_condition{condition="MemoryPressure",status="true"} > 0
      for: 2m
      labels:
        severity: critical
      annotations:
        description: "Node {{ $labels.node }} is under MemoryPressure"

High Eviction Rate

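    - alert: HighPodEvictions
      # Assumes the kubelet's /metrics endpoint is scraped; the label that carries
      # the node name (instance here) depends on your scrape configuration
      expr: sum by (instance) (increase(kubelet_evictions[10m])) > 5
      labels:
        severity: warning
      annotations:
        description: "High pod eviction rate detected on node {{ $labels.instance }} in the last 10 minutes"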

Conclusion

Monitoring Kubernetes nodes is about ensuring the backbone of your cluster remains stable, resilient, and cost-efficient. Nodes are where workloads actually run, and issues at this level ripple across applications, services, and ultimately, customer experience. Without proper visibility, businesses risk downtime, SLA violations, and runaway cloud costs.

CubeAPM provides a unified platform for node monitoring by combining metrics, logs, events, and error tracking into a single view. It eliminates the guesswork by capturing CPU, memory, disk, and network signals, correlating node conditions with pod evictions, and offering intelligent alerting and anomaly detection.

As Kubernetes adoption continues to grow, reliable node monitoring is essential for scaling safely and sustainably. Start monitoring Kubernetes nodes with CubeAPM today to improve uptime, reduce costs, and innovate without fear of infrastructure failures.

Disclaimer: The information in this article reflects the latest details available at the time of publication and may change as technologies and products evolve.

FAQs

1. What’s the difference between monitoring Kubernetes nodes and pods?

Node monitoring focuses on the health of the physical or virtual machines that run workloads, including CPU, memory, disk, and network conditions. Pod monitoring focuses on containerized applications scheduled on those nodes. Both are important, but node failures often trigger widespread pod issues.

2. How often should Kubernetes nodes be monitored?

Nodes should be monitored continuously in real time. Since node conditions like NotReady or MemoryPressure can change within minutes, relying on periodic checks risks missing critical events. Tools like CubeAPM provide live telemetry streaming to avoid blind spots.

3. Can CubeAPM monitor managed Kubernetes services like EKS, GKE, or AKS?

Yes. CubeAPM integrates with both self-hosted clusters and managed Kubernetes services. By deploying the CubeAPM Collector as a DaemonSet, you can capture node metrics, logs, and events regardless of the cloud provider.

4. How does node monitoring improve autoscaling decisions?

Node-level metrics reveal when a cluster is close to resource saturation or underutilization. Feeding this data into autoscalers ensures new nodes are added before workloads fail, and idle nodes are removed to save costs.

5. What’s the best way to reduce noise in Kubernetes node alerts?

Excessive alerts can overwhelm teams. Using anomaly detection, context-rich alerting, and smart sampling—features built into CubeAPM—helps reduce noise by highlighting only unusual or critical conditions, not normal fluctuations.