Monitoring Kubernetes Pods is essential as adoption keeps climbing: 80% of IT organizations now run Kubernetes in production, and another 13% are actively piloting it. Pods are the core execution units in Kubernetes, and failures such as restarts, resource exhaustion, or scheduling delays quickly cascade into downtime and rising cloud costs.

Still, teams face recurring pain points when monitoring Pods: diagnosing CrashLoopBackOff or OOMKilled events, detecting unhealthy states too late, and correlating metrics, logs, and traces across dynamic clusters. Monitoring costs also spike due to noisy telemetry, straining budgets while slowing resolution.

CubeAPM is the best solution for monitoring Kubernetes Pods. It unifies metrics, logs, and traces via OpenTelemetry, provides real-time dashboards for Pod health and errors, and uses Smart Sampling to cut ingestion costs. In this article, we’ll explore Pods, why monitoring them matters, key metrics, and how CubeAPM simplifies Kubernetes Pod monitoring.

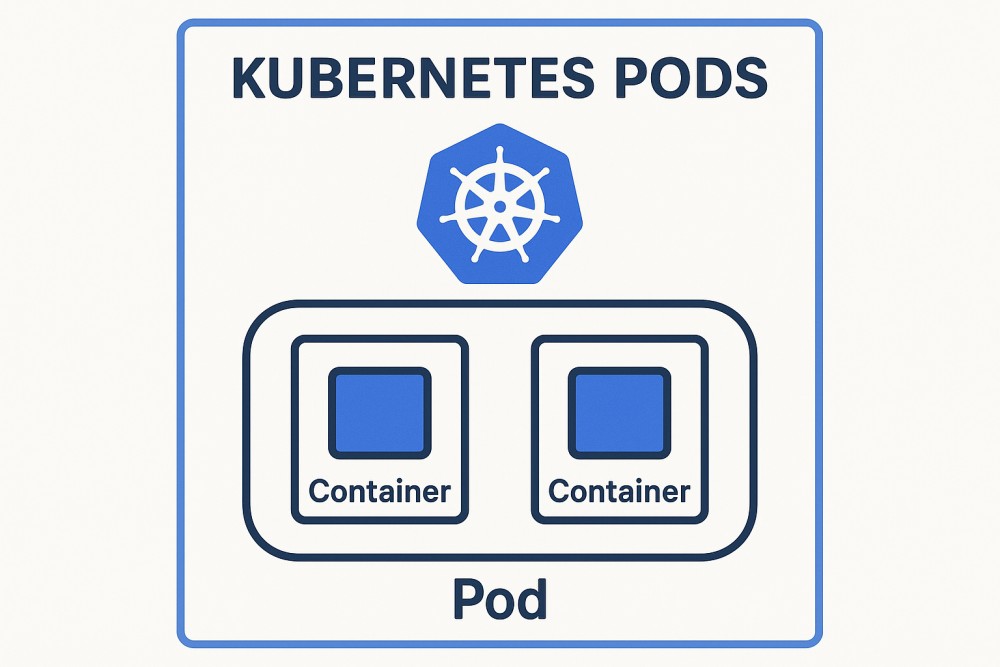

What are Kubernetes Pods?

Kubernetes Pods are the smallest deployable units in a Kubernetes cluster, designed to run one or more tightly coupled containers together. The containers in a Pod share one network namespace (a single IP address and port space) and can mount the same storage volumes, making the Pod the basic building block for running applications in Kubernetes. Instead of managing containers individually, Kubernetes schedules Pods, and controllers such as Deployments scale and replace them automatically.

For businesses, Pods are powerful because they:

- Enable microservices by grouping containers that need to work side by side.

- Simplify scaling since Pods can be replicated horizontally to handle demand spikes.

- Improve resilience with automatic restarts and rescheduling across nodes.

- Optimize resources by letting teams define CPU, memory, and storage requests or limits.

These advantages allow companies to deliver applications faster, keep services reliable, and reduce infrastructure waste in cloud or hybrid environments.

Example: Using Kubernetes Pods for E-commerce Checkout

An e-commerce platform might deploy a checkout Pod containing a payment container and a fraud-detection container. Running them together gives low-latency communication over localhost, shared volumes, and consistent scaling, while the kubelet automatically restarts whichever container fails, keeping the checkout process smooth for customers.
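A minimal sketch of such a checkout Pod, assuming hypothetical image names, ports, and a shared `emptyDir` scratch volume. In production the same template would normally sit inside a Deployment rather than being created as a bare Pod.

```yaml
apiVersion: v1
kind: Pod
metadata:
  name: checkout
  labels:
    app: checkout
spec:
  # Both containers share the Pod's network namespace, so payment can call
  # fraud-detection on localhost:9090 without going through a Service.
  volumes:
    - name: shared-scratch                                    # hypothetical shared scratch volume
      emptyDir: {}
  containers:
    - name: payment
      image: registry.example.com/shop/payment:1.4.2          # hypothetical image
      ports:
        - containerPort: 8080
      volumeMounts:
        - name: shared-scratch
          mountPath: /scratch
    - name: fraud-detection
      image: registry.example.com/shop/fraud-detection:2.0.1  # hypothetical image
      ports:
        - containerPort: 9090
      volumeMounts:
        - name: shared-scratch
          mountPath: /scratch
  # Default policy: the kubelet restarts whichever container exits
  restartPolicy: Always
```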

Why Monitoring Kubernetes Pods is Critical

Pod lifecycle volatility makes (or breaks) reliability

Pods churn constantly as deployments roll out, autoscalers react, and nodes drain. Each Pod moves through Pending → Running → Succeeded/Failed, and its replacements start the cycle again; miss a transition, and you miss the root cause. The official Pod lifecycle documentation covers both phases and container states, including waiting reasons such as CrashLoopBackOff and ImagePullBackOff, and these are the lifecycle events you must observe.

Restart storms (CrashLoopBackOff/OOMKilled) demand rapid RCA

Many pod-level outages start as restart loops caused by misconfiguration, bad startup commands, memory pressure, or failing dependencies. Google’s guidance calls out out-of-memory (OOM) kills as a frequent cause of CrashLoopBackOff; pairing event logs with pod metrics is how you confirm and fix it fast. Resource requests and limits directly determine a pod’s QoS class and stability; get them wrong and you’ll chase recurring OOMKills.
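To make the QoS point concrete, here is a container `resources` fragment with illustrative values (not recommendations). Because requests equal limits for both CPU and memory, a Pod whose containers all look like this is classed as Guaranteed; memory use above the limit gets the container OOMKilled.

```yaml
# Fragment of a container spec (e.g. the payment container); values are illustrative.
resources:
  requests:
    cpu: "500m"       # what the scheduler reserves on the node
    memory: "512Mi"
  limits:
    cpu: "500m"       # usage above this is throttled, not killed
    memory: "512Mi"   # usage above this triggers an OOMKill of the container
```

Setting the CPU limit higher than the request would make the Pod Burstable instead, trading predictability for headroom.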

Resource pressure and evictions are pod-level failure modes

Under node memory, disk, or inode pressure, the kubelet evicts pods to keep the node healthy. If you’re not monitoring per-pod memory, CPU, ephemeral storage, and eviction signals, you’ll see cascading app errors with no obvious cause. Red Hat and Kubernetes docs detail eviction thresholds and storage-limit behavior (e.g., a pod whose overall ephemeral storage use exceeds its limit is evicted).
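A sketch of the storage settings behind these evictions, with illustrative sizes and a hypothetical cache path. If the container's writable layer and logs exceed its ephemeral-storage limit, or the `emptyDir` grows past its `sizeLimit`, the kubelet evicts the Pod.

```yaml
# Pod spec fragment; sizes, names, and paths are illustrative.
spec:
  containers:
    - name: payment
      image: registry.example.com/shop/payment:1.4.2   # hypothetical image
      resources:
        requests:
          ephemeral-storage: "1Gi"   # used for scheduling and for ranking Pods during eviction
        limits:
          ephemeral-storage: "2Gi"   # exceeding this gets the Pod evicted
      volumeMounts:
        - name: cache
          mountPath: /var/cache/app
  volumes:
    - name: cache
      emptyDir:
        sizeLimit: 500Mi             # an emptyDir past this size also triggers eviction
```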

Readiness/liveness probe failures silently degrade SLAs

Mis-tuned probes cause flapping: an overly strict liveness probe restarts containers that are healthy but slow, and a failing readiness probe marks the pod “not ready,” removing it from Service endpoints and spiking tail latency. Because probe results directly gate traffic and restarts, Kubernetes’ probe guidance matters here, and probes must be monitored with context (initial delays, startup probes, gRPC probes).
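An illustrative probe configuration for one container, assuming hypothetical `/healthz` and `/ready` HTTP endpoints on port 8080. Liveness and readiness checks do not start until the startup probe succeeds, and the period and threshold fields are the knobs that usually need tuning when probes flap.

```yaml
# Container spec fragment; endpoints, port, and thresholds are illustrative.
startupProbe:
  httpGet:
    path: /healthz
    port: 8080
  periodSeconds: 5
  failureThreshold: 30     # up to 30 x 5s = 150s to finish starting before a restart
livenessProbe:
  httpGet:
    path: /healthz
    port: 8080
  periodSeconds: 10
  timeoutSeconds: 2
  failureThreshold: 3      # restart only after ~30s of consecutive failures
readinessProbe:
  httpGet:
    path: /ready
    port: 8080
  periodSeconds: 5
  timeoutSeconds: 2
  failureThreshold: 3      # removed from Service endpoints after 3 consecutive failures
  successThreshold: 1
```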

Image pulls are a supply-chain chokepoint

Typos, missing tags, private-registry authentication failures, or registry rate limits surface as ErrImagePull and ImagePullBackOff. Monitoring must correlate pod events, registry errors, and retry backoffs to shorten MTTR and prevent failed rollouts. The Kubernetes images documentation covers pull behavior and the prerequisites you need to validate.
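One way to turn pull failures into a signal, sketched as a Prometheus alerting rule in the same format as the example rules later in this article. It assumes kube-state-metrics is installed and scraped; the alert name and the 5-minute window are illustrative.

```yaml
# Sketch: assumes kube-state-metrics is scraped; name and window are illustrative.
- alert: PodImagePullFailing
  expr: kube_pod_container_status_waiting_reason{reason=~"ErrImagePull|ImagePullBackOff"} > 0
  for: 5m
  labels:
    severity: warning
  annotations:
    description: "Container {{ $labels.container }} in Pod {{ $labels.pod }} (namespace {{ $labels.namespace }}) has been unable to pull its image for 5 minutes"
```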

Multi-cloud & multi-cluster amplify visibility gaps

Organizations increasingly run across multiple cloud service providers (CSPs): in the CNCF Annual Survey 2024, 37% report using two CSPs and 26% use three. That complexity multiplies pod states, policies, and telemetry sources, and without unified pod-level observability, incidents straddle clusters and escape detection.

High release velocity requires continuous pod-level feedback

With 38% of teams automating 80–100% of releases (CNCF 2024), pods are constantly created/replaced. Only continuous monitoring of pod readiness, restarts, and probe health can keep pace with this deploy tempo and prevent regressions from sticking in production.

Downtime costs are rising, and pod issues are often the first domino

When a pod restart storm or readiness regression hits production, every hour is expensive: 90% of firms report hourly downtime costs above $300,000, and 41% put the figure at $1M–$5M per hour (ITIC 2024). Pod-level signals are often the earliest indicators; catching them early limits an incident’s blast radius and cost.

Community experience: probe tuning and noisy telemetry

Practitioners report probe misconfiguration under load and difficulty correlating metrics, logs, and events across ephemeral pods. These real-world reports underline the need for context-rich, pod-aware monitoring rather than generic host metrics.

Key Metrics for Kubernetes Pod Monitoring

Pod Lifecycle & Status

Monitoring Pod states helps identify deployment health, crashes, and scheduling issues before they escalate.

- Pod phase counts: Track how many Pods are Pending, Running, Succeeded, or Failed. A rise in Pending Pods often signals scheduler bottlenecks or insufficient node capacity, while Failed Pods may indicate misconfigured manifests.

- Restart counts: Frequent restarts highlight instability from faulty images, bad configs, or dependency crashes. Tracking restart trends also helps distinguish between one-off glitches and systemic failures.

- Waiting and termination reasons: Kubernetes records why containers are stuck or stopped, for example CrashLoopBackOff and ImagePullBackOff (waiting) or OOMKilled (terminated). Surfacing these reasons directly shortens root cause analysis by pointing to memory leaks, bad registry credentials, or missing tags.
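These lifecycle signals map onto kube-state-metrics series. A sketch of Prometheus recording rules that precompute them, assuming kube-state-metrics is scraped; the group and rule names follow the usual `level:metric:operation` convention but are illustrative.

```yaml
groups:
  - name: pod-lifecycle          # illustrative group name
    rules:
      # Pods per namespace in each phase (Pending, Running, Succeeded, Failed, Unknown)
      - record: namespace_phase:kube_pod_status_phase:sum
        expr: sum by (namespace, phase) (kube_pod_status_phase)
      # Container restarts over the last hour, to separate one-off glitches from trends
      - record: namespace_pod_container:kube_pod_container_status_restarts:increase1h
        expr: increase(kube_pod_container_status_restarts_total[1h])
      # Containers currently waiting, by reason (CrashLoopBackOff, ImagePullBackOff, ...)
      - record: namespace_reason:kube_pod_container_status_waiting_reason:sum
        expr: sum by (namespace, reason) (kube_pod_container_status_waiting_reason)
      # Containers whose most recent termination had a given reason (OOMKilled, Error, ...)
      - record: namespace_reason:kube_pod_container_status_last_terminated_reason:sum
        expr: sum by (namespace, reason) (kube_pod_container_status_last_terminated_reason)
```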

Resource Utilization

Pods consume compute and storage resources, and imbalances here often trigger throttling, evictions, or downtime.

- CPU and memory usage per Pod: Comparing usage against requests and limits ensures Pods get adequate resources. Sudden spikes highlight runaway processes, while sustained throttling points to under-provisioned workloads.

- Ephemeral storage consumption: Pods exceeding disk thresholds can be evicted under node pressure. Monitoring this prevents unexpected crashes caused by large log files, caching layers, or temp data accumulation.

- Pod quotas and limits: Namespaces impose quotas to protect multi-tenant clusters. Watching these ensures one team’s Pods don’t starve others by exceeding assigned CPU, memory, or storage budgets.
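A sketch of recording rules for these resource ratios, assuming cAdvisor metrics (served through the kubelet) and kube-state-metrics are both scraped; the rule names are illustrative. Containers without a memory limit simply drop out of the first ratio.

```yaml
groups:
  - name: pod-resources          # illustrative group name
    rules:
      # Memory working set as a fraction of the container's memory limit
      - record: namespace_pod_container:memory_limit_utilisation:ratio
        expr: |
          sum by (namespace, pod, container) (container_memory_working_set_bytes{container!="", container!="POD"})
            /
          sum by (namespace, pod, container) (kube_pod_container_resource_limits{resource="memory"})
      # Share of CFS scheduling periods in which the container was CPU-throttled
      - record: namespace_pod_container:cpu_cfs_throttled:ratio_rate5m
        expr: |
          sum by (namespace, pod, container) (rate(container_cpu_cfs_throttled_periods_total{container!=""}[5m]))
            /
          sum by (namespace, pod, container) (rate(container_cpu_cfs_periods_total{container!=""}[5m]))
      # Fraction of each namespace ResourceQuota already consumed
      - record: namespace_resourcequota:used:ratio
        expr: |
          kube_resourcequota{type="used"}
            / ignoring (type)
          kube_resourcequota{type="hard"}
```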

Health & Probes

Probe metrics reveal whether Pods are truly ready and alive, directly affecting uptime and SLAs.

- Liveness probe failures: Failures trigger container restarts, often due to frozen processes or overly strict checks. Tracking them helps tune thresholds and distinguish real errors from false positives.

- Readiness probe failures: These remove Pods from service endpoints until healthy. Frequent failures reduce load-balanced capacity and can cause elevated latency or dropped requests.

- Startup probe results: Slow-booting apps rely on startup probes for extra time. Failures often mean misconfigured timeouts or initialization bugs, blocking Pods from ever becoming ready.
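Probe outcomes are exposed by the kubelet as the `prober_probe_total` counter (labeled with `probe_type` and `result`) on its `/metrics/probes` endpoint. A sketch, assuming that endpoint is scraped, of a recording rule for failures per probe type plus a liveness alert; the names and the threshold of 3 are illustrative.

```yaml
groups:
  - name: pod-probes             # illustrative group name
    rules:
      # Failed probe checks per container and probe type (Liveness, Readiness, Startup)
      - record: namespace_pod_container_probe_type:prober_probe_failed:increase5m
        expr: |
          sum by (namespace, pod, container, probe_type) (
            increase(prober_probe_total{result="failed"}[5m])
          )
      # Repeated liveness failures lead to container restarts once failureThreshold is reached
      - alert: PodLivenessProbeFailures
        expr: |
          sum by (namespace, pod, container) (
            increase(prober_probe_total{probe_type="Liveness", result="failed"}[10m])
          ) > 3
        labels:
          severity: warning
        annotations:
          description: "Container {{ $labels.container }} in Pod {{ $labels.pod }} failed liveness probes more than 3 times in 10m"
```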

Scheduling & Placement

Scheduler efficiency determines whether Pods launch quickly and distribute evenly across nodes.

- Pending Pod duration: Long Pending times usually mean no nodes meet resource or affinity requirements. Tracking duration highlights cluster under-provisioning or overly strict constraints.

- Node-to-Pod density: Uneven Pod distribution can overload some nodes while leaving others idle. Monitoring this ensures balanced workloads, better performance, and fairer resource use.

- Affinity, taint, and toleration failures: Pods whose node affinity rules cannot be satisfied, or that lack tolerations for the taints on available nodes, remain unscheduled. Keeping an eye on these failures simplifies debugging placement issues.
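A sketch of scheduling-focused rules built on kube-state-metrics, assuming it is scraped; names and windows are illustrative. Pending duration is approximated by Pod age, which is close enough for Pods that have never left Pending.

```yaml
groups:
  - name: pod-scheduling         # illustrative group name
    rules:
      # Approximate time spent Pending: Pod age, kept only for Pods currently Pending
      - record: namespace_pod:pending_duration_seconds
        expr: |
          (time() - kube_pod_created)
            * on (namespace, pod, uid)
          (kube_pod_status_phase{phase="Pending"} == 1)
      # Pods per node, to spot uneven density
      - record: node:kube_pod_info:count
        expr: count by (node) (kube_pod_info)
      # Pods the scheduler could not place (unmet resources, affinity rules, or missing tolerations)
      - alert: PodUnschedulable
        expr: max by (namespace, pod) (kube_pod_status_unschedulable) == 1
        for: 5m
        labels:
          severity: warning
        annotations:
          description: "Pod {{ $labels.pod }} in namespace {{ $labels.namespace }} has been unschedulable for 5 minutes"
```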

Networking

Pod networking metrics ensure microservices can talk to each other reliably under load.

- Network I/O per Pod: Monitoring traffic volume surfaces Pods that act as noisy neighbors or suffer from bandwidth starvation. Sudden spikes may point to DDoS or runaway loops.

- Connection errors: Failed connections and DNS resolution errors disrupt service discovery. Tracking them prevents cascading microservice failures.

- Latency per Pod: Rising Pod-level latency often signals overloaded containers, slow dependencies, or poor network routing, all of which degrade user experience.
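Per-Pod traffic and interface errors come from cAdvisor, scraped through the kubelet. A sketch of recording rules, assuming those series are available; the names are illustrative. DNS errors and per-Pod latency usually come from CoreDNS, application metrics, or traces rather than cAdvisor, so they are not covered here.

```yaml
groups:
  - name: pod-network            # illustrative group name
    rules:
      # Bytes per second received and sent, summed across each Pod's interfaces
      - record: namespace_pod:container_network_receive_bytes:rate5m
        expr: sum by (namespace, pod) (rate(container_network_receive_bytes_total[5m]))
      - record: namespace_pod:container_network_transmit_bytes:rate5m
        expr: sum by (namespace, pod) (rate(container_network_transmit_bytes_total[5m]))
      # Interface-level receive plus transmit errors per second
      - record: namespace_pod:container_network_errors:rate5m
        expr: |
          sum by (namespace, pod) (rate(container_network_receive_errors_total[5m]))
            +
          sum by (namespace, pod) (rate(container_network_transmit_errors_total[5m]))
```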

Reliability & Scaling

Reliability metrics capture Pod-level resilience while scaling metrics confirm workloads respond to demand.

- Crash frequency: Pods crashing repeatedly highlight bad rollouts, buggy code, or misconfigured dependencies. Trending these events helps decide when to roll back or redeploy.

- Horizontal Pod Autoscaler activity: Scale events show how Pods react to load. Frequent up/down oscillations may indicate unstable traffic patterns or poorly set thresholds.

- Pod eviction count: Node-pressure evictions occur when nodes run short of memory, disk space, inodes, or process IDs. High eviction rates mean workloads are overloading nodes and need rebalancing or more capacity.
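A sketch of scaling and eviction rules on kube-state-metrics HPA and Pod series, assuming kube-state-metrics is scraped; the alert names, windows, and the "more than 6 changes in 30m" threshold are illustrative starting points.

```yaml
groups:
  - name: pod-scaling            # illustrative group name
    rules:
      # Desired replica count changing often in a short window suggests HPA flapping
      - alert: HPAOscillating
        expr: changes(kube_horizontalpodautoscaler_status_desired_replicas[30m]) > 6
        labels:
          severity: warning
        annotations:
          description: "HPA {{ $labels.horizontalpodautoscaler }} in namespace {{ $labels.namespace }} changed its desired replicas more than 6 times in 30m"
      # HPA pinned at its ceiling: demand may exceed what the workload may scale to
      - alert: HPAAtMaxReplicas
        expr: kube_horizontalpodautoscaler_status_current_replicas >= kube_horizontalpodautoscaler_spec_max_replicas
        for: 15m
        labels:
          severity: warning
        annotations:
          description: "HPA {{ $labels.horizontalpodautoscaler }} in namespace {{ $labels.namespace }} has run at max replicas for 15 minutes"
      # Evicted Pods per namespace (evicted Pod objects remain until cleaned up)
      - record: namespace:kube_pod_status_reason_evicted:sum
        expr: sum by (namespace) (kube_pod_status_reason{reason="Evicted"})
```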

Telemetry Collection, Error Tracking & Alerting with CubeAPM

Unified Telemetry with Logs, Metrics & Traces

One of the biggest challenges in Kubernetes is connecting the dots between Pod events, metrics, and container logs. CubeAPM solves this by natively supporting OpenTelemetry and seamlessly ingesting data from sources like kube-state-metrics, container runtimes, and application traces.

Instead of juggling multiple dashboards, teams get a single pane of glass that shows Pod status, restarts, probe failures, CPU/memory usage, and the correlated container logs behind them. For example, when a Pod enters CrashLoopBackOff, CubeAPM links the event to the exact error trace and system metrics, saving hours of manual debugging.

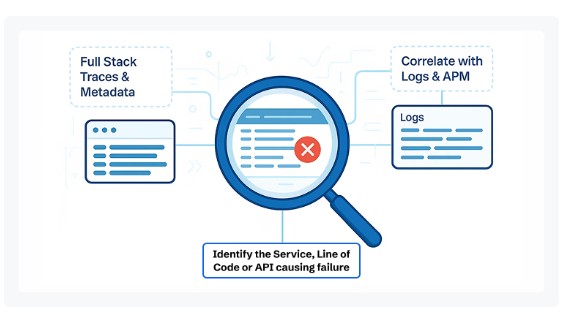

Real-Time Error Tracking

Pod errors are often noisy and repetitive — crashes, failed image pulls, or OOMKilled events flood logs without clear context. CubeAPM’s Errors Inbox filters through the noise by grouping similar errors and applying Smart Sampling, which keeps the most meaningful events while reducing ingestion costs.

Engineers see error spikes as they happen, with full context across stack traces, Pod manifests, and runtime conditions. Whether it’s a failing liveness probe or a container running out of memory, CubeAPM highlights the root cause in real time, enabling faster recovery and fewer blind spots.

Intelligent Alerting & Anomaly Detection

Static thresholds don’t cut it in Kubernetes environments where Pods scale up and down constantly. CubeAPM brings intelligent anomaly detection that learns Pod baselines and flags abnormal behavior like sudden restart storms, probe failure surges, or unusual latency. Alerts can be configured via YAML or directly in the UI, and they integrate with Slack, Teams, and WhatsApp for instant notifications.

As a result, SREs and developers are alerted not just when something looks “off,” but when it is business-critical. Prebuilt alert templates for common Pod issues, such as CrashLoopBackOff, OOMKilled, and Pods Pending too long, let teams get started without spending weeks tuning rules.

How to Monitor Kubernetes Pods with CubeAPM

CubeAPM’s official documentation provides a step-by-step path to set up pod-level metrics, logs, and error tracking inside Kubernetes.

1. Install CubeAPM on Kubernetes

Use the CubeAPM Kubernetes installation guide to deploy CubeAPM via Helm. This installs the core components: the backend, collector endpoints, and default dashboards. Customizing the values.yaml lets you set pod metrics, log endpoints, and resource limits up front.

2. Enable Infra Monitoring for Kubernetes

Go to Infra Monitoring → Kubernetes to set up the OpenTelemetry Collector in both DaemonSet and Deployment modes. This captures node-level and pod-level metrics, kubelet stats, host metrics, and logs from pods.
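A minimal sketch of a DaemonSet-mode OpenTelemetry Collector configuration for node and Pod metrics, using the contrib `kubeletstats` receiver. The CubeAPM endpoint is a placeholder, and the sketch assumes the collector Pod receives its node name in a `K8S_NODE_NAME` environment variable (via the Downward API) and has RBAC permission to read kubelet stats; CubeAPM's own guide remains the source for the real values.

```yaml
receivers:
  kubeletstats:
    collection_interval: 30s
    auth_type: serviceAccount
    # Each DaemonSet Pod talks to the kubelet on its own node
    endpoint: "https://${env:K8S_NODE_NAME}:10250"
    insecure_skip_verify: true      # convenient in test clusters; verify the kubelet cert in production
    metric_groups: [node, pod, container]
processors:
  batch: {}
exporters:
  otlphttp:
    endpoint: "http://cubeapm.example.internal:4318"   # placeholder CubeAPM OTLP endpoint
service:
  pipelines:
    metrics:
      receivers: [kubeletstats]
      processors: [batch]
      exporters: [otlphttp]
```

The Deployment-mode collector would typically run a single replica with the `k8s_cluster` receiver for cluster-level data such as Pod phases and container restarts, exporting to the same endpoint.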

3. Pull Metrics from Prometheus Endpoints

Use the Prometheus Metrics docs to configure scraping of metrics endpoints. Whether you’re collecting pod CPU/memory, kube-state-metrics, or custom app metrics, the scraped data is forwarded to CubeAPM for dashboards and alerts.
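As an illustration, an OpenTelemetry Collector can scrape kube-state-metrics with its `prometheus` receiver and forward the result over OTLP. This is a sketch only: the kube-state-metrics Service address and the CubeAPM endpoint are placeholders, and CubeAPM's Prometheus Metrics docs describe the supported setup.

```yaml
receivers:
  prometheus:
    config:
      scrape_configs:
        - job_name: kube-state-metrics
          scrape_interval: 30s
          static_configs:
            # Placeholder Service address; adjust namespace and port to your install
            - targets: ["kube-state-metrics.kube-system.svc.cluster.local:8080"]
processors:
  batch: {}
exporters:
  otlphttp:
    endpoint: "http://cubeapm.example.internal:4318"   # placeholder CubeAPM OTLP endpoint
service:
  pipelines:
    metrics:
      receivers: [prometheus]
      processors: [batch]
      exporters: [otlphttp]
```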

4. Configure Core Settings & Token Authentication

Refer to Configure CubeAPM to set essential parameters like your API token, base-url, environment tags, and cluster peers. Proper configuration ensures all pod events, metrics, and logs are authenticated and surfaced correctly.

5. Consolidate Pod & Container Logs

Navigate to the Logs page to enable log collection from containers in pods. This ties together restart events, image pull failures, and probe failures by correlating with metrics and pod statuses.
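For container logs, a common collector-side pattern is the contrib `filelog` receiver reading the runtime's log files on each node. A sketch, assuming a DaemonSet collector with `/var/log/pods` mounted read-only, a collector version that ships the `container` parser operator, and a placeholder CubeAPM endpoint:

```yaml
receivers:
  filelog:
    # Runtimes write Pod logs under /var/log/pods/<namespace>_<pod>_<uid>/<container>/
    include: [/var/log/pods/*/*/*.log]
    include_file_path: true     # the container parser derives Pod metadata from the file path
    start_at: end
    operators:
      # Parses docker/containerd/CRI-O log formats and adds k8s.namespace.name,
      # k8s.pod.name, and k8s.container.name so logs line up with Pod metrics
      - type: container
processors:
  batch: {}
exporters:
  otlphttp:
    endpoint: "http://cubeapm.example.internal:4318"   # placeholder CubeAPM OTLP endpoint
service:
  pipelines:
    logs:
      receivers: [filelog]
      processors: [batch]
      exporters: [otlphttp]
```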

Real-World Example: 60% Fewer Checkout Failures with CubeAPM K8s Pod Monitoring

The Challenge

A global e-commerce retailer faced checkout disruptions during peak sales campaigns. Checkout pods frequently flipped between Ready and NotReady because of aggressive readiness probes. This caused payment errors, frozen carts, and high customer frustration — with downtime costing the business millions in lost transactions.

The Solution

The SRE team turned to CubeAPM’s Kubernetes Pod monitoring setup. They instrumented the cluster following the installation and infra monitoring guides to capture pod-level metrics, logs, and traces. They also enabled log ingestion to unify container runtime logs with metrics and errors.

The Fixes Implemented

- Metrics dashboards exposed a surge in readiness probe failures during traffic spikes.

- Log correlation revealed database connection timeouts as the root cause.

- Error Tracking grouped thousands of failures into one actionable issue, preventing alert fatigue.

- Alerting rules configured via CubeAPM Alerts notified engineers immediately when failures crossed thresholds.

- Engineers tuned probe thresholds and fixed missing connection pool settings in the deployment.

The Results

Pod stability improved by 60%, checkout errors dropped sharply, and cart abandonment during flash sales decreased significantly. More importantly, incidents that once took hours to diagnose were resolved in minutes, cutting MTTR by more than half. The business protected millions in revenue during peak shopping seasons.

Verification Checklist & Example Alert Rules with CubeAPM’s K8s Pod Monitoring

Before running Kubernetes Pods in production, teams should confirm that monitoring and alerting are correctly configured. This checklist and alert library help ensure pod-level issues are detected early and resolved quickly.

Verification Checklist

- Pod lifecycle states: Confirm that you are capturing Pods across all phases — Pending, Running, Failed, and Succeeded. This ensures visibility into scheduling delays, deployment errors, and completed workloads.

- Restart counts: Track how many times containers within Pods restart. High restart numbers usually point to instability, such as application crashes, dependency failures, or misconfigured images.

- Probe failures: Monitor liveness, readiness, and startup probes. Frequent probe failures indicate unhealthy applications or services not ready to receive traffic, directly impacting availability.

- Resource usage: Collect Pod-level CPU, memory, and ephemeral storage metrics against defined requests and limits. This helps identify resource bottlenecks and prevent OOM kills, CPU throttling, or evictions caused by node pressure.

- Log ingestion: Ensure container runtime logs are centralized. Logs provide essential context for why Pods restart, why image pulls fail, or why probes time out.

- Error tracking: Use an error aggregation system to group repetitive failures such as CrashLoopBackOff or OOMKilled. This reduces noise and surfaces the most critical issues for faster resolution.

- Alerts configuration: Verify that alert rules are firing correctly for Pod-level issues — restarts, probe failures, evictions, and pending states. Alerts should be tested to avoid false positives and missed incidents.

- Dashboards: Build dashboards that correlate Pod health, resource consumption, and latency trends. Dashboards should provide a clear picture across namespaces and also allow drilling down into specific Pods.

Example Alert Rules

CrashLoopBackOff Alert

This rule detects Pods stuck in a restart loop due to application crashes or misconfigurations.

- alert: PodCrashLoopBackOff
  expr: kube_pod_container_status_waiting_reason{reason="CrashLoopBackOff"} > 0
  for: 2m
  labels:
    severity: critical
  annotations:
    description: "Pod {{ $labels.pod }} in namespace {{ $labels.namespace }} is in CrashLoopBackOff for over 2 minutes"

By catching CrashLoopBackOff early, teams can fix failing deployments before they impact user traffic.
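The checklist above recommends testing alerts before relying on them. Prometheus's `promtool test rules` command can unit-test a rule like this one against synthetic series. A sketch, assuming the rules from this section are saved in a hypothetical `pod-alerts.yaml` wrapped as `groups: [{name: pod-alerts, rules: [...]}]`; the namespace, Pod, and container names are made up.

```yaml
# pod-alerts.test.yaml (hypothetical file name)
rule_files:
  - pod-alerts.yaml            # hypothetical file holding the rules under a groups: entry
evaluation_interval: 1m
tests:
  - interval: 1m
    input_series:
      # One container reported in CrashLoopBackOff for 6 consecutive minutes
      - series: 'kube_pod_container_status_waiting_reason{namespace="shop", pod="checkout-0", container="payment", reason="CrashLoopBackOff"}'
        values: '1+0x5'
    alert_rule_test:
      # At 1m the condition has held for less than the 2m "for" window, so nothing fires yet
      - eval_time: 1m
        alertname: PodCrashLoopBackOff
        exp_alerts: []
      # At 3m it has held long enough, so the alert fires with the series labels plus severity
      - eval_time: 3m
        alertname: PodCrashLoopBackOff
        exp_alerts:
          - exp_labels:
              severity: critical
              namespace: shop
              pod: checkout-0
              container: payment
              reason: CrashLoopBackOff
            exp_annotations:
              description: "Pod checkout-0 in namespace shop is in CrashLoopBackOff for over 2 minutes"
```

Running `promtool test rules pod-alerts.test.yaml` in CI catches both expression mistakes and template typos before a rule reaches production.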

OOMKilled Alert

This rule triggers when a Pod is terminated due to exceeding its memory limits.

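- alert: PodOOMKilled
  # The current terminated state is cleared as soon as the container restarts, so match
  # a recent restart whose last termination reason was OOMKilled.
  expr: |
    increase(kube_pod_container_status_restarts_total[10m]) > 0
    and on (namespace, pod, container)
    kube_pod_container_status_last_terminated_reason{reason="OOMKilled"} == 1
  labels:
    severity: warning
  annotations:
    description: "Pod {{ $labels.pod }} in namespace {{ $labels.namespace }} was OOMKilled"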

Monitoring OOMKilled events helps engineers adjust resource requests/limits and prevent repeated memory-related crashes.

Readiness Probe Failure Alert

This rule monitors repeated readiness probe failures that remove Pods from service endpoints.

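- alert: PodReadinessProbeFailures
  # Assumes the kubelet's /metrics/probes endpoint is scraped; prober_probe_total counts
  # probe results per container, labeled by probe_type and result.
  expr: sum by (namespace, pod) (increase(prober_probe_total{probe_type="Readiness", result="failed"}[5m])) > 3
  labels:
    severity: critical
  annotations:
    description: "Pod {{ $labels.pod }} in namespace {{ $labels.namespace }} failed readiness probes multiple times in 5m"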

Alerting on readiness failures ensures degraded Pods are detected quickly before they impact load balancing or service uptime.

Pending Pods Alert

This rule identifies Pods that cannot be scheduled and remain in a Pending state.

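- alert: PendingPods
  # kube_pod_status_phase is a 0/1 series per Pod, so count Pending Pods per namespace first
  expr: sum by (namespace) (kube_pod_status_phase{phase="Pending"}) > 5
  for: 10m
  labels:
    severity: warning
  annotations:
    description: "More than 5 Pods have been Pending for over 10 minutes in namespace {{ $labels.namespace }}"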

Pending Pods usually indicate cluster resource shortages or placement constraints, and this alert helps trigger proactive scaling or investigation.

Pod Eviction Alert

This rule fires when Pods are evicted due to node resource pressure.

- alert: PodEvicted
  expr: kube_pod_status_reason{reason="Evicted"} > 0
  for: 1m
  labels:
    severity: critical
  annotations:
    description: "Pod {{ $labels.pod }} in namespace {{ $labels.namespace }} has been evicted due to resource pressure"

Pod eviction alerts highlight when workloads are overloading nodes, prompting rebalancing or cluster scaling.

With this checklist and alert library, teams can ensure that Kubernetes Pods are monitored at the right level of detail. The combination of lifecycle metrics, resource monitoring, logs, error tracking, and proactive alerts forms the foundation for reliable Pod operations.

Conclusion

Monitoring Kubernetes Pods is essential for maintaining reliable, scalable, and cost-efficient applications. Pods are the first layer where failures emerge, whether through restarts, probe failures, resource exhaustion, or scheduling bottlenecks. Without visibility into these signals, businesses risk extended downtime, unpredictable performance, and higher cloud bills.

CubeAPM provides a complete solution for Kubernetes Pod monitoring. By unifying metrics, logs, traces, and error tracking, it offers engineers a single view of Pod health across clusters. Features like real-time error grouping, intelligent anomaly detection, and cost-efficient Smart Sampling ensure teams resolve issues faster while keeping data ingestion affordable. The result is shorter MTTR, fewer blind spots, and more resilient workloads.

For teams running Kubernetes in production, CubeAPM delivers Pod observability that scales with your business. Book a free demo today and see how CubeAPM can help you prevent failures, cut costs, and achieve end-to-end reliability.

Disclaimer: The information in this article reflects the latest details available at the time of publication and may change as technologies and products evolve.

FAQs

1. How do you monitor Kubernetes Pods without adding too much overhead?

Lightweight components such as kube-state-metrics and the OpenTelemetry Collector reduce overhead by focusing only on essential pod data. Tools like CubeAPM use Smart Sampling to keep costs low while still retaining critical signals for troubleshooting.

2. What’s the difference between monitoring Pods and monitoring Nodes in Kubernetes?

Pod monitoring focuses on containerized workloads — restarts, probe failures, resource usage — while node monitoring covers infrastructure health like CPU saturation or disk pressure. Both are important, but Pod monitoring directly impacts application reliability.

3. How can you detect abnormal behavior in Kubernetes Pods automatically?

Anomaly detection tools compare pod metrics against historical baselines. For example, CubeAPM can flag unusual restart spikes or probe failures without fixed thresholds, ensuring teams catch issues faster than manual monitoring.

4. What are common mistakes teams make when setting up Pod monitoring?

Some common mistakes include ignoring probe metrics, not setting alert thresholds, over-collecting verbose logs, and failing to correlate metrics with container logs. These gaps can lead to alert fatigue or missed incidents.

5. Can Pod monitoring help with compliance and audit requirements?

Yes. Pod-level telemetry provides audit trails of workload activity, including restarts, evictions, and error logs. Platforms like CubeAPM can store and organize this data to help teams meet compliance requirements.