As systems scale, observability cost starts reacting less to growth and more to structure. A new service, a longer retention window, or a subtle change in sampling can shift costs far more than expected. What looks like a minor configuration decision often multiplies across ingestion, storage, indexing, and query paths.

Industry data shows this pattern clearly. In fact, more than 60% of engineering leaders report that observability spend is growing faster than infrastructure costs. Observability now consumes around 11–20% of total cloud infrastructure spend in many organisations. Telemetry volume grows faster than application traffic, driven by microservices, high-cardinality data, and default instrumentation. Teams often see observability data expand at multiples of their core workload growth, turning stable monthly bills into volatile ones.

At scale, small changes in pricing dimensions, retention policy, or telemetry volume can translate into huge cost increases.

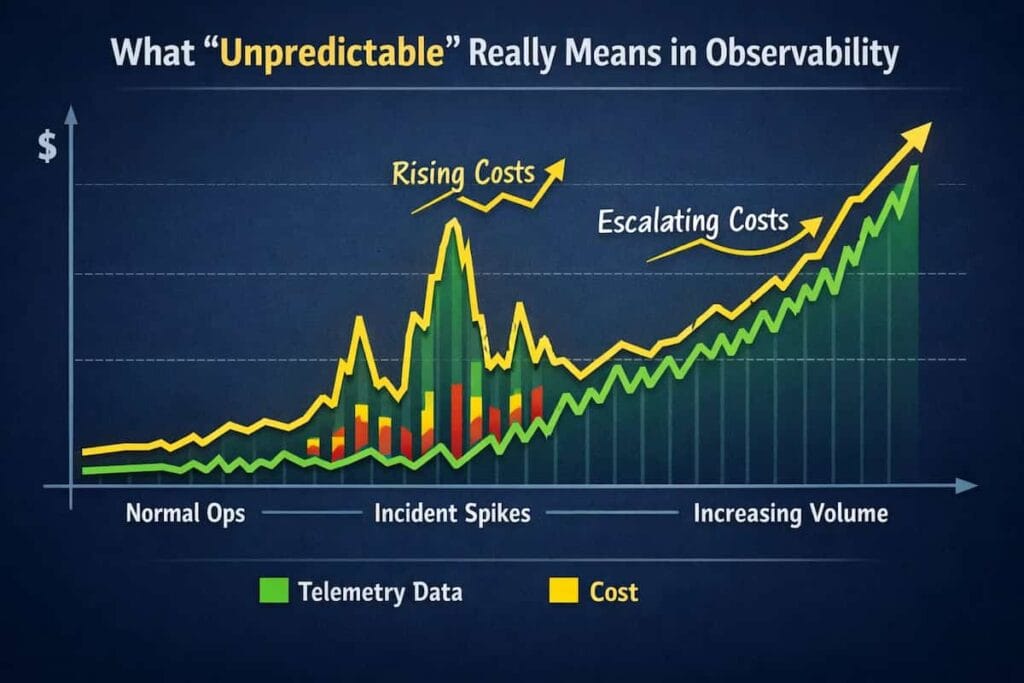

What “Unpredictable” Really Means in Observability

In the observability market, the term “unpredictable” describes a growing disconnect between engineering activity and financial reality. The historical correlation between business growth and operational expenditure no longer holds true for modern telemetry.

In previous eras, a rise in costs generally signalled an increase in users, revenue, or system traffic. Today, organizations frequently encounter significant bill fluctuations while their underlying infrastructure remains entirely stable. This volatility stems from the fact that a single change in service configuration can generate a massive surge in data volume without any corresponding increase in customer value.

The Forecasting Paradox

Financial planning for observability remains difficult even when teams can reasonably estimate traffic, host counts, or raw telemetry ingestion. The uncertainty comes from downstream decisions that are hard to predict at design time, such as how much trace and log data will be indexed, how long high-cardinality data needs to be retained, and how many custom metrics teams introduce over time. These choices often evolve reactively during incidents or performance investigations, causing costs to grow faster than the underlying system scale.

- Visibility Constraints: When teams attempt to stabilize budgets, they are often forced to truncate logs or drop metrics, which creates dangerous blind spots during a production crisis.

- The Silent Surge: Because microservices environments are highly interconnected, a small update in one area can multiply the number of spans and traces produced across the entire distributed system.

Disconnected Ownership

The current operational model creates a structural divide between those who generate data and those who pay for it.

- Platform Liability: Central platform teams typically manage the vendor relationship and provide the budget, yet they possess no direct control over how application teams instrument their code.

- The Accountability Gap: Application developers focus on shipping features quickly and rarely have insight into the downstream financial impact of the telemetry their services produce.

Without a direct feedback loop between data generation and budgetary impact, the cost of observability will continue to scale independently of business success.

The Cost Equation Most Teams Do Not Model

Most teams model only ingestion volume. In reality, five interacting multipliers drive the cost of observability, not just one.

The five main multipliers are:

1. Volume: Logs, Metrics, Traces, Profiles, Events

Telemetry volume does not scale the same way for every signal type.

- Logs: Vary in size and verbosity, making them expensive when overly detailed.

- Traces: Grow with architectural fanout, not just traffic volume.

- Metrics: Scale with cardinality rather than sample frequency.

One request can generate dozens of log lines, hundreds of spans, and thousands of metric points.

2. Retention: Hot, Warm, Cold, and More

Retention is not usually a single setting.

Most platforms have different prices for:

- Hot storage: Indexed and fast, but the most expensive tier.

- Warm storage: Slower queries with reduced storage cost.

- Cold storage: Cheap to retain but expensive to restore or rehydrate.

Restore operations, rehydration, and long-range query scanning are some of the hidden costs.
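To make the tiering effect concrete, here is a minimal sketch of a steady-state storage model. The tier lengths, the per-GB prices, and the rehydration price are hypothetical placeholders, not any vendor's actual rates; the point is only that each tier and each restore is a separate line item.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Tier:
    name: str
    days: int                  # how long data stays in this tier
    price_per_gb_month: float  # hypothetical storage price, USD


def monthly_storage_cost(gb_per_day: float, tiers: list[Tier]) -> dict[str, float]:
    """Steady-state monthly cost per tier.

    At steady state a tier holds roughly gb_per_day * days of data.
    """
    return {t.name: gb_per_day * t.days * t.price_per_gb_month for t in tiers}


def rehydration_cost(gb_restored: float, price_per_gb: float) -> float:
    """One-off cost of moving archived data back into a queryable state."""
    return gb_restored * price_per_gb


# Hypothetical tier layout and prices, for illustration only.
TIERS = [
    Tier("hot", days=7, price_per_gb_month=0.30),
    Tier("warm", days=30, price_per_gb_month=0.10),
    Tier("cold", days=365, price_per_gb_month=0.02),
]

storage = monthly_storage_cost(gb_per_day=100.0, tiers=TIERS)
restore = rehydration_cost(gb_restored=3000.0, price_per_gb=0.10)

for name, cost in storage.items():
    print(f"{name:>5}: ${cost:,.2f}/month")
print(f"one 30-day restore: ${restore:,.2f}")
```

With these made-up numbers the cold tier is the cheapest per GB yet the largest line item, because it holds the most data, and a single restore adds a charge that no steady-state estimate includes.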

3. Hosts: Billing Units Stop Matching Reality

After volume and retention, observability costs often start to drift because of how platforms price infrastructure. Many tools still treat hosts as the primary billing unit, even though modern systems scale around services, containers, and workloads, not machines.

Host-based pricing then amplifies other cost mechanics:

- Hosts: Each additional host directly increases spend, regardless of how much work it actually performs.

- Autoscaling and burst capacity: Temporary scale-ups for traffic spikes, deployments, or resilience planning can permanently raise baseline costs if hosts are billed by peak usage.

- Container density mismatch: High container density concentrates more workloads onto fewer hosts, increasing telemetry volume without changing the host count that pricing is based on.

- Infrastructure abstraction gaps: Virtual machines, node pools, and ephemeral compute make host counts unstable and difficult to reason about for budgeting.

Example: Why Host-Based Pricing Becomes Expensive at Scale

In a mid-sized setup, host-based pricing compounds quickly once you account for all host layers. With 125 APM hosts at $35 each, APM alone costs about $4,375 per month. Profiling adds another layer: 40 profiled hosts at $40 comes to $1,600, plus container profiling across 100 hosts at $2 adds $200 more. Infrastructure monitoring is billed separately again, with 200 infra hosts at $15 adding $3,000 per month. Before logs, spans, or usage-based charges enter the picture, host-based costs alone reach roughly $9,175 per month. As autoscaling, failover capacity, and short-lived hosts appear during incidents, each of these host counts rises independently, pushing spend higher even when traffic and telemetry volume remain unchanged.
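The arithmetic in this example can be reproduced with a short sketch. The host counts and prices are the ones quoted above; the 20% headroom scenario and the helper names are assumptions added for illustration, and real host counts would rise independently rather than uniformly.

```python
# (product line, billed hosts, price per host per month in USD), from the example above
HOST_LINE_ITEMS = [
    ("APM", 125, 35),
    ("Profiling", 40, 40),
    ("Container profiling", 100, 2),
    ("Infrastructure monitoring", 200, 15),
]


def monthly_host_cost(items):
    """Sum hosts * price across every host-billed product line."""
    return sum(hosts * price for _, hosts, price in items)


def with_extra_hosts(items, pct):
    """Return the line items with each host count raised by pct percent, rounded up.

    Integer arithmetic avoids floating-point rounding surprises.
    """
    return [
        (name, hosts + (hosts * pct + 99) // 100, price)
        for name, hosts, price in items
    ]


baseline = monthly_host_cost(HOST_LINE_ITEMS)
# Hypothetical scenario: autoscaling and failover add 20% more billed hosts.
burst = monthly_host_cost(with_extra_hosts(HOST_LINE_ITEMS, 20))

print(baseline)  # 9175
print(burst)     # 11010
```

In this sketch the bill rises by $1,835 per month with no change in traffic, because every host-billed product line scales with the same extra machines.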

4. Users: Access-Based Expansion

The fourth multiplier is users.

Many platforms charge per user or per role, turning access into a recurring cost driver. As observability adoption spreads across engineering, SRE, product, and security teams, user counts grow faster than telemetry volume.

Costs increase simply because more teams need visibility, not because the system is generating more data.

5. The Hidden Fifth Multiplier: Operational Behavior

Even when teams think they understand their volume, retention, and pricing dimensions, costs still drift. The reason is not traffic alone. It’s how people and systems behave once software is running in production. Day-to-day operational actions change telemetry patterns in ways that pricing models rarely account for upfront.

That behavior shows up in a few consistent ways:

Incident Response Increases Telemetry Volume

When something goes wrong, teams often:

- Turn off sampling: All traces are collected, multiplying data volume instantly.

- Increase log levels: Debug logs flood systems during already stressed periods.

- Add temporary debug fields: Temporary fields often remain longer than intended, permanently increasing cardinality.

These changes increase telemetry volume and often stay in place longer than planned.

New Deployments Increase Cardinality

Every deployment can bring:

- New endpoints: Each endpoint adds new trace paths and metrics.

- New tags: Tags accumulate over time, increasing series count.

- New dimensions: More dimensions increase indexing and query complexity.

Cardinality keeps growing, even when traffic stays the same.

Incremental Dashboards, Alerts, and Integrations Add Cost

Small additions add up:

- New dashboards increase query scans: Dashboards continuously execute queries, consuming scan quotas.

- Alerts add evaluation work: Alert engines evaluate conditions frequently, adding compute cost.

- Exports and integrations generate egress: Sending data out of the platform introduces additional fees.

Each one seems safe, but they are collectively very expensive.

Why Costs Stop Scaling Linearly as Systems Scale

Observability costs often feel predictable at first. Traffic goes up, spend goes up, and the relationship seems manageable. As systems grow more dynamic, that linearity breaks. Cardinality, instrumentation changes, and tracing behavior introduce cost growth that no longer tracks cleanly with usage or business growth.

The main factors that break linear cost scaling are:

High-Cardinality Blowups

The shift toward Kubernetes and microservices has introduced a massive volume of metadata that inflates metric costs.

- The Metadata Tax: When running Kubernetes, every pod ID, container name, and ephemeral IP adds up. Each unique combination creates new metric series or log dimensions. Even when traffic is stable, this churn forces observability systems to store and index far more distinct data than teams expect, driving costs up quickly.

- Unbounded Dimensions: High-cardinality tags like user IDs or request IDs create millions of unique time series. These small instrumentation choices frequently lead to sudden budget spikes that are disconnected from actual traffic levels.
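A rough way to see why a single tag matters is to multiply out the distinct values of each label. The sketch below computes that upper bound for one metric; the label names and value counts are hypothetical, and real series counts are usually lower because not every combination occurs.

```python
from math import prod


def max_series(label_cardinalities: dict[str, int]) -> int:
    """Upper bound on time series for one metric.

    Each unique combination of label values is a separate series, so the
    bound is the product of the distinct-value counts of every label.
    """
    return prod(label_cardinalities.values())


# Hypothetical label set for a single request-latency metric.
base = {"service": 20, "endpoint": 15, "status_code": 5}
with_pod = {**base, "pod": 50}              # ephemeral Kubernetes metadata
with_user = {**base, "user_id": 100_000}    # unbounded dimension

print(max_series(base))       # 1500
print(max_series(with_pod))   # 75000
print(max_series(with_user))  # 150000000
```

Traffic is identical in all three cases. Only the label set changed, which is why cardinality-priced metrics can jump without any visible change in load.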

Distributed Tracing Growth is Non-linear

Distributed tracing volume grows significantly faster than the number of requests entering the system.

- The Fanout Effect: The fanout effect is common in microservices architectures, where a single user request can trigger dozens of internal spans across multiple backend services.

- Sampling Overhead: Tail-based sampling adds overhead. Because traces must be buffered and evaluated after completion, the approach requires extra routing and compute capacity that adds to total ownership costs.

In modern cloud environments, observability costs do not scale in a linear fashion alongside business growth. Instead, they often follow an exponential curve driven by technical complexity rather than user demand.
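The fanout effect can be approximated with a simple model in which every service calls the same number of downstream services at each layer. That uniform call tree is an assumption made for illustration; real topologies are uneven, but the shape of the growth is similar.

```python
def spans_per_request(fanout: int, depth: int) -> int:
    """Spans for one request in a uniform call tree.

    Layer 0 is the entry span; each span at one layer produces `fanout`
    child spans at the next, for `depth` layers of downstream calls.
    """
    return sum(fanout ** level for level in range(depth + 1))


def spans_per_second(rps: float, fanout: int, depth: int, sample_rate: float = 1.0) -> float:
    """Ingested span rate after head sampling at `sample_rate`."""
    return rps * spans_per_request(fanout, depth) * sample_rate


# Same traffic, one extra layer of downstream services.
print(spans_per_request(fanout=3, depth=2))  # 13
print(spans_per_request(fanout=3, depth=3))  # 40

# Hypothetical 1,000 requests per second with sampling disabled.
print(spans_per_second(1000, fanout=3, depth=3))  # 40000.0
```

Under this model, adding one architectural layer roughly triples span volume at constant request rate, which is the sense in which tracing cost follows complexity rather than demand.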

Multi-Layer Log Inflation

Logs remain one of the most expensive components of observability because their costs accumulate at every stage of the data lifecycle.

- Ingestion and Indexing: Most vendors charge based on the raw volume of data processed and the computing power required to make that data searchable.

- Storage and Rehydration: Long-term retention is often marketed as inexpensive, yet the true cost emerges during “rehydration” or restoration operations. When an incident occurs and an engineer must move cold, archived data back into an indexed state for querying, the associated egress and scanning fees can be substantial.

The combined cost of storing data and the occasional necessity of rehydration makes it difficult to predict monthly spend during periods of high system instability.

Feature Bundling Creates Multiple Cost Meters

Feature bundling introduces multiple cost meters into a single observability stack.

APM, infrastructure monitoring, logs, RUM, synthetics, and security signals are each metered differently.

- Meter fragmentation: APM, logs, infrastructure metrics, RUM, synthetics, and security data are priced using different units and thresholds. Each stream grows independently, even when driven by the same workload.

- Uneven scaling behavior: Scaling behavior is rarely uniform. While some parts of the system scale alongside user traffic, others are tied to data retention or the sheer volume of queries. Over time, it becomes nearly impossible to forecast what your final bill will actually look like.

- Cross-module coupling: A change intended for one feature often increases usage in others. New tracing can raise log volume. RUM adoption can amplify backend spans. Costs compound across modules rather than remaining isolated.

Pricing Models and How They Create Bill Volatility

Ingestion-based Pricing

Predictability depends on strict telemetry controls. During incidents, migrations, or periods of verbose logging, ingestion volume spikes sharply and costs rise at the worst possible time.

Host-based Pricing

Costs remain predictable only when host counts are stable. In autoscaling and container-dense environments, short-lived nodes and replica churn push spend up even when application load remains unchanged.

Per-metric and custom metric pricing

Cardinality growth pushes costs up faster than teams can respond. Small instrumentation changes can multiply the number of time series and double spend before anyone notices.

Consumption-based pricing in managed platforms

Query scans, rehydration, and export fees add secondary cost paths. Optimization usually happens after usage is recorded and the bill has already increased.

Per-User Pricing

Costs scale with team size rather than system behavior. As access expands across engineering, SRE, and support teams, spend grows even when telemetry volume stays flat.

Operational Triggers That Cause Surprise Bills

Operational triggers that cause surprise observability bills rarely come from mistakes. They come from normal engineering behavior. Actions taken to improve reliability, ship faster, or reduce risk often increase telemetry volume in ways that pricing models do not absorb smoothly.

- Turning off sampling during incident response: When things break, teams often disable sampling to see everything. It feels necessary in the moment. Telemetry switches to full fidelity, sometimes within minutes. Ingestion and processing volumes spike right when the underlying infrastructure is already under stress, pushing costs up fast.

- New service launches: Launching a new service always feels like a minor addition, but each one carries its own baggage of logs and traces. Individually, they’re tiny. However, they slowly pile up on existing infrastructure, gradually bloating your storage and ingestion costs so that you never really see the “big” price jump until the bill arrives.

- Kubernetes churn: Short-lived pods and frequent redeployments generate transient telemetry. Cardinality rises. Metric series multiply. Storage and indexing pressure increase, even though the application itself may not be growing in any meaningful way.

- Multi-region expansion: Multi-region deployments duplicate observability pipelines and retention costs.

- Using parallel tools during migrations: During migrations, teams often run two observability platforms side by side to reduce risk. For that period, telemetry is ingested and stored twice, causing a temporary but significant increase in spend.

What Teams Commonly Encounter as Observability Scales

Cost volatility usually doesn’t show up until systems start to scale. Even with steady traffic and stable infrastructure metrics, spend can fluctuate month to month. Seemingly small instrumentation changes, including new tags or longer retention windows, tend to amplify cost at scale.

At that stage, optimization becomes reactive. Engineering teams reduce data after invoices arrive. Finance teams often struggle to forecast observability spend ahead of growth, launches, or architectural changes. Over time, that uncertainty leads to bigger questions about whether the pricing model can really support long-term scale.

When Teams Realize “Cost Is Broken”

Most teams don’t realize observability cost is broken all at once. The moment usually arrives during an incident or traffic spike, when usage hasn’t changed much but the bill suddenly has. By then, the cost model is already baked into how the system runs.

The realization usually unfolds in stages:

Unexpected bill increase

Teams notice higher observability spend without any clear operational change. Traffic is stable. Infrastructure looks the same. No major releases stand out.

No obvious change

Engineering reviews recent deployments and configurations and finds nothing that explains the increase. From their perspective, nothing meaningful changed.

Invisible cost drivers

The root cause is often indirect. Cardinality growth, retention extensions, or background telemetry expansion accumulate quietly and surface only in billing.

At this point, teams start to question not only how the platform is used but also the assumptions behind its pricing and governance.

A Practical Framework to Regain Predictability

Regaining predictability does not start with cutting data blindly. It starts with understanding where observability spend is created, how it grows, and which controls actually work at scale.

Build a Telemetry Inventory

Teams need a clear view of what they are emitting and why. Identify top telemetry sources by volume. Track services with the fastest cardinality growth.

Track the following:

- Top sources by GB/day: This shows which services, pipelines, or teams are generating the most telemetry volume on a daily basis and where ingestion costs are coming from.

- Queries with the most scans: Reveals dashboards, alerts, or ad-hoc queries that scan large amounts of data and quietly drive up query and compute costs.

- Services that grow in cardinality the fastest: Points to metrics and tags whose cardinality is growing quickly over time. Left unchecked, these are the ones that destabilize costs and make observability unpredictable.
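A telemetry inventory of this kind can start as a small script over exported usage data. The sketch below assumes two hypothetical inputs, a list of (service, GB ingested) records for one day and per-service active series counts for two points in time; how those are exported depends on the platform in use.

```python
from collections import defaultdict


def top_sources_by_gb(records, n=3):
    """Rank services by total GB ingested.

    records: iterable of (service, gb_ingested) pairs for one day.
    """
    totals = defaultdict(float)
    for service, gb in records:
        totals[service] += gb
    return sorted(totals.items(), key=lambda kv: kv[1], reverse=True)[:n]


def cardinality_growth(prev, curr):
    """Rank services by fractional growth in active series.

    prev, curr: dicts mapping service -> active series count.
    Services with no earlier baseline are skipped rather than guessed.
    """
    growth = {}
    for service, now in curr.items():
        before = prev.get(service, 0)
        if before > 0:
            growth[service] = (now - before) / before
    return sorted(growth.items(), key=lambda kv: kv[1], reverse=True)


# Hypothetical sample data.
daily_records = [("checkout", 40.0), ("search", 25.0), ("checkout", 10.0), ("auth", 5.0)]
series_last_month = {"checkout": 1000, "search": 2000}
series_this_month = {"checkout": 1500, "search": 2100, "recommendations": 10}

print(top_sources_by_gb(daily_records, n=2))
print(cardinality_growth(series_last_month, series_this_month))
```

The same ranking function can be pointed at (query, GB scanned) pairs to surface the most expensive dashboards and alerts.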

Allocate Budgets Where Spend Is Generated

Global budgets hide the real drivers of growth.

Start with the following:

- Per-service telemetry budgets: Give each service its own budget to keep data growth intentional and accountable.

- Budgets for each environment: Separate budgets for production, staging, and development ensure non-production environments cannot consume production-scale telemetry.

- Alerts for changes: Detects cost-impacting behavior early instead of at invoice time.

Cost is no longer a surprise; it’s a signal.
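One way to turn these budgets into an early signal is a daily check that compares ingestion against a per-service, per-environment limit. This is a minimal sketch: the `Budget` type, the 80% warning threshold, and the sample figures are all assumptions, and the usage numbers would come from whatever usage export the platform provides.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Budget:
    service: str
    environment: str
    gb_per_day: float


def check_budgets(budgets, usage, warn_ratio=0.8):
    """Classify each budget as 'ok', 'warn', or 'over'.

    usage: dict mapping (service, environment) -> GB ingested today.
    warn_ratio: fraction of the budget at which to warn early.
    """
    results = []
    for b in budgets:
        used = usage.get((b.service, b.environment), 0.0)
        if used > b.gb_per_day:
            status = "over"
        elif used >= warn_ratio * b.gb_per_day:
            status = "warn"
        else:
            status = "ok"
        results.append((b.service, b.environment, status))
    return results


# Hypothetical budgets and one day of usage.
budgets = [
    Budget("checkout", "production", 50.0),
    Budget("checkout", "staging", 5.0),
    Budget("search", "production", 30.0),
]
usage_today = {
    ("checkout", "production"): 45.0,
    ("checkout", "staging"): 8.0,
    ("search", "production"): 10.0,
}

for service, env, status in check_budgets(budgets, usage_today):
    print(f"{service}/{env}: {status}")
```

Here the staging environment is flagged as over budget on the day it happens, which is the kind of non-production drift that a single global budget would hide.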

Manage Volume Without Losing Sight

Reducing volume does not mean sacrificing insight. It is critical to integrate the following measures into operations:

- Apply risk-based sampling: High-risk paths get full fidelity while low-risk paths are sampled.

- Retention levels based on use cases: Tailor storage timelines to actual needs. If a piece of data isn’t actively helping the team solve problems or keep systems running, it shouldn’t be taking up space.

- Prioritization based on incidents: Critical signals are preserved while background noise is reduced.
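Risk-based sampling can be sketched as a lookup from a risk tier to a keep probability, with errors always retained. The tier names and rates below are hypothetical, and keeping every error assumes the decision is made once the outcome of the request is known, as in tail-based sampling.

```python
import random

# Hypothetical keep probabilities per risk tier.
SAMPLE_RATES = {"high": 1.0, "medium": 0.25, "low": 0.05}


def should_keep(risk_tier: str, is_error: bool, rng=random.random) -> bool:
    """Decide whether to keep a trace.

    Errors are always kept. Otherwise the trace is kept with the
    probability configured for its tier; unknown tiers fall back to 'low'.
    rng is injectable so the decision can be tested deterministically.
    """
    if is_error:
        return True
    rate = SAMPLE_RATES.get(risk_tier, SAMPLE_RATES["low"])
    return rng() < rate


# Rough check of the retained fraction for a low-risk path.
random.seed(7)
kept = sum(should_keep("low", is_error=False) for _ in range(10_000))
print(f"kept {kept} of 10000 low-risk traces")
```

Tying the rate to a tier rather than to a per-service number keeps the policy short enough to review, and a path can be promoted to full fidelity by changing its tier instead of editing sampler code.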

Cut Down on Waste Structurally

- Get rid of logs that are low in value but high in volume: Eliminates noise that provides little diagnostic benefit.

- Normalize and limit tags: Prevents unbounded cardinality growth.

- Remove duplicates from data during migrations: Avoids paying twice for the same telemetry.
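Tag normalization is the easiest of these to automate. The sketch below applies an allowlist and collapses ID-like path segments before telemetry is emitted; the allowlist contents and the `{id}` placeholder are assumptions, and in practice this logic would usually live in a shared instrumentation library or a collector-side processor.

```python
import re

# Hypothetical allowlist: any tag not listed here is dropped.
ALLOWED_TAGS = {"service", "env", "region", "endpoint"}

# Matches a path segment that is all digits or shaped like a UUID.
_ID_SEGMENT = re.compile(r"/(\d+|[0-9a-fA-F]{8}-[0-9a-fA-F-]{27})(?=/|$)")


def normalize_tags(tags: dict) -> dict:
    """Return a bounded, consistently formatted copy of `tags`."""
    clean = {}
    for key, value in tags.items():
        key = key.strip().lower()
        if key not in ALLOWED_TAGS:
            continue  # drops unbounded tags such as user_id or request_id
        value = str(value).strip().lower()
        if key == "endpoint":
            # /orders/12345/items and /orders/67890/items become one series
            value = _ID_SEGMENT.sub("/{id}", value)
        clean[key] = value
    return clean


raw = {
    "Service": "Checkout",
    "user_id": "u-123",
    "endpoint": "/orders/12345/items",
}
print(normalize_tags(raw))
# {'service': 'checkout', 'endpoint': '/orders/{id}/items'}
```

An allowlist is stricter than a denylist, but it fails safe: a new high-cardinality tag has to be added deliberately instead of being discovered on the invoice.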

What Scalable Cost-Intelligent Observability Looks Like

At scale, observability maturity is not defined by how much data a platform can ingest. It is defined by how predictably teams can operate, plan, and grow without cost becoming a recurring source of friction.

Cost-intelligent observability makes spend understandable before it becomes visible on an invoice. This includes:

Predictable unit economics

Costs scale with clear, stable units that teams can reason about ahead of growth. No surprises. As usage increases through traffic, services, or new regions, spend follows explainable patterns rather than sudden jumps driven by hidden multipliers. That matters at scale.

Clear service-level cost attribution

Teams can trace observability spend back to individual services, environments, and teams. This makes cost a shared engineering concern rather than a centralized finance problem and allows optimization to happen at the source.

Policy-driven sampling and retention

Data volume is governed by intent, not defaults. By design. Sampling and retention policies are applied deliberately based on risk, use case, and lifecycle stage, rather than being tuned reactively only after costs start climbing.

Minimal surprise fees tied to querying or storage

Querying, rehydration, exports, and historical access do not introduce unexpected cost paths. Teams understand the financial impact of using the data they already collect, without needing to second-guess routine analysis.

Where CubeAPM Fits

CubeAPM is built for teams that want observability costs to stay predictable as their systems grow. No hidden layers. No surprise multipliers. Instead of pricing across dozens of dimensions, cost is tied directly to telemetry volume, with control staying where it belongs, with the team running the infrastructure.

CubeAPM Offers:

Ingestion-based pricing

CubeAPM uses ingestion-based pricing of $0.15/GB tied to telemetry volume, not to infrastructure shape or team size. There are no charges based on the number of hosts, containers, Kubernetes pods, users, or environments. As systems autoscale or architectures evolve, pricing remains stable because it does not depend on ephemeral infrastructure units.

Smart Sampling

CubeAPM includes Smart Sampling to reduce cost without sacrificing visibility. Instead of blindly dropping data, Smart Sampling prioritizes high-value signals such as errors and slow requests while reducing low-signal noise. This keeps ingestion predictable while preserving the data teams actually need during incidents.

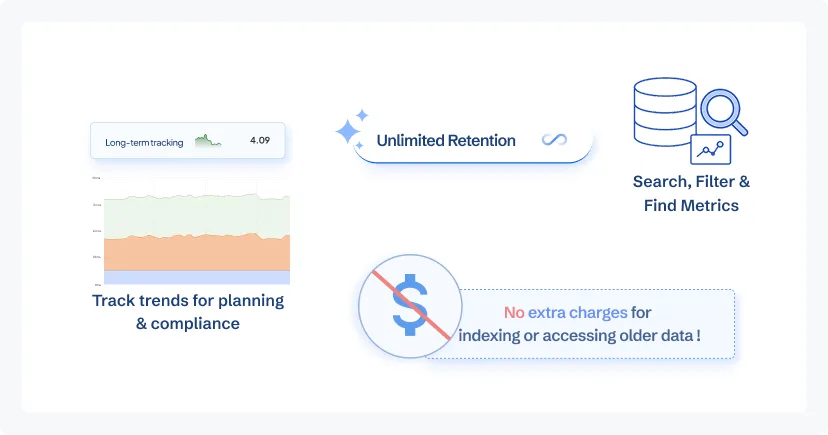

Unlimited retention without additional charges

CubeAPM offers unlimited retention. Teams can retain telemetry data without worrying about hot, warm, or cold storage boundaries, rehydration fees, or long-range query penalties. Historical access does not introduce new cost paths, making long-term analysis and audits easier to plan for.

Enterprise support included by default

Enterprise-grade support is included at no additional fee. There are no separate charges for support tiers, response times, or escalation paths. Teams get predictable access to help when systems are under pressure, without cost becoming another barrier during incidents.

OpenTelemetry-native instrumentation and governance

CubeAPM is deliberately built around OpenTelemetry as a first-class standard, not an add-on. This lets teams control what is emitted, sampled, retained, or dropped using open, portable instrumentation rather than vendor-specific agents or opaque processing rules, while governance decisions stay visible and enforceable directly at the telemetry layer.

Predictable pricing without feature-based cost multipliers

Pricing stays predictable because there are no feature-based multipliers. Custom metrics, indexed logs, indexed spans, longer retention, and support access are not broken out into separate charges.

This gets rid of the hidden multipliers that make traditional SaaS platforms unstable.

CubeAPM is:

- Built on OpenTelemetry, which keeps instrumentation consistent across services

- Governance first, which lets you sample and retain data based on policy

- Predictable by design, because it ties costs directly to the amount of telemetry instead of the number of features

Checklist: signs your observability costs are about to become unpredictable

Cardinality increasing month over month

Metrics and tags keep growing over time without much notice. That usually points to unbounded labels or dynamic attributes, which slowly drive up storage and query costs as the system scales.

Incident response regularly changes ingestion behavior

During incidents, teams often raise sampling rates, add new metrics, or enable extra logging. When these changes are not rolled back, temporary decisions become permanent cost multipliers.

Multiple modules billed independently

When logs, metrics, traces, and add-ons are billed as separate products, it becomes hard to see how a single change affects the total cost. A small adjustment in one area can ripple across the bill, and instead of scaling as one predictable system, costs break into disconnected pieces.

Query scanning or restore fees rising

Retention grows. Queries scan more data. Simple investigations turn into wide, expensive reads. Restore and rehydration fees follow, especially when teams revisit historical data for audits or deeper analysis.

Inability to explain spend by service ownership

If observability spend can’t be tied back to specific services or teams, discussions turn reactive. The bill shows up first. Explanations come later.

Conclusion

Unpredictable observability costs are rarely caused by scale alone. They emerge when data growth, ingestion behavior, and pricing mechanics drift beyond intentional control. Teams that recognize these signals early can regain predictability by tying telemetry decisions to ownership, enforcing clear budgets, and governing data by design rather than reaction. At scale, cost stability is not a finance problem. It is an observability architecture decision.

Disclaimer: The information in this article reflects the latest details available at the time of publication and may change as technologies and products evolve.

FAQs

1. Why do observability bills go up during incidents?

Incidents change behavior: teams turn on extra logs, widen traces, relax or disable sampling so more data is kept, and run more queries while the system is already noisy. The moment you need visibility the most is also the moment you generate the most data.

2. What is cardinality, and how does it affect the cost?

Cardinality is the number of unique combinations your metrics and labels create. Every new dimension multiplies storage, indexing, and query work. High-cardinality data does not look scary at first, but it compounds quietly until costs jump.

3. How do sampling strategies affect observability spend?

Sampling is how you decide what data is worth keeping. Aggressive sampling cuts costs but can hide rare failures. Conservative sampling preserves detail but increases volume. The real challenge is matching sampling decisions to actual reliability risk, not traffic alone.

4. What metrics should FinOps teams keep an eye on?

Raw ingest is only the starting point. Teams also need to watch cardinality trends, how much data each query scans, and retention windows. Cost problems usually show up in these second-order metrics first.

5. How can the cost of observability be broken down by service or tenant?

Costs become visible when telemetry is tied back to ownership. That usually means service-level tagging, tenant awareness, and cost models that reflect how data is produced and queried, not just how much is ingested.