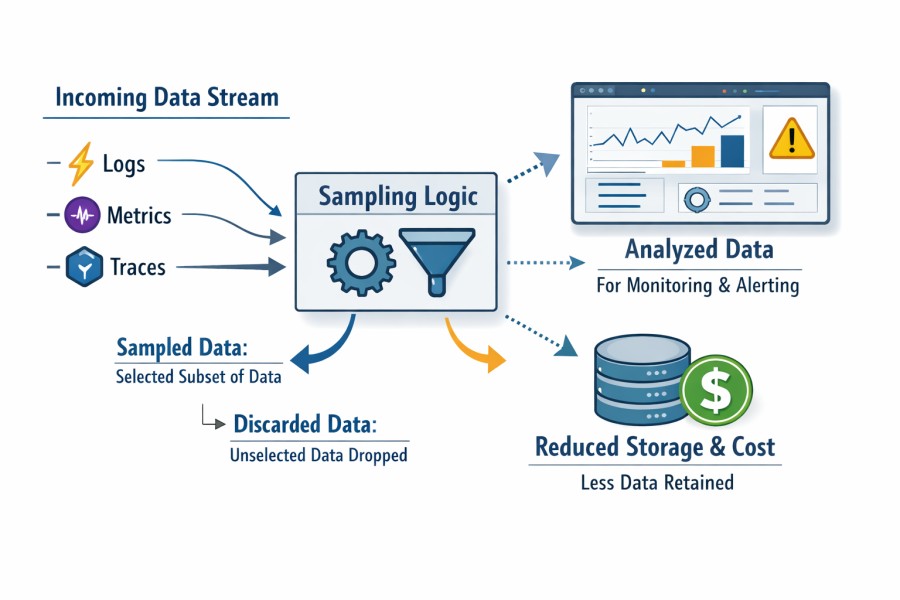

Sampling in observability is the practice of deciding which telemetry data to keep and which to drop. Instead of storing every request, teams retain a representative and meaningful subset of data that helps them understand system behavior, performance issues, and failures.

Traditional sampling strategies, such as head-based or tail-based, are useful but have certain limitations when data volumes grow. Modern businesses use microservices and distributed systems, which generate heavy data volumes. If you try collecting all this data, observability becomes noisy and expensive. If you drop too much data, failures can go unnoticed.

CubeAPM’s Smart Sampling takes a context-based approach: it keeps only the data that matters for observability. Let’s look at sampling in detail, its benefits and limitations, and where Smart Sampling helps.

What Is Sampling in Observability?

Sampling is a process where a system decides which pieces of observability data are worth keeping and which ones it can skip. Teams use this sample to understand system activity, incidents, and performance. Not storing unnecessary data helps them save on storage costs and avoid being drowned in noise.

So, when you sample data, you are not simply throwing data away. You are prioritizing the data that explains what’s happening in your systems. Beyond cost savings, sampling lets you keep your systems running efficiently without losing sight of how requests actually flow through them.

Sampling plays a slightly different role depending on the type of telemetry data.

- Logs are often filtered, indexed selectively, or retained for different durations.

- Metrics are aggregated by design (counts, averages, percentiles), so raw volume is already reduced.

- Traces represent individual requests end-to-end, and their volume grows directly with traffic.

That’s why most conversations about sampling in observability are about trace sampling. There are several strategies for sampling traces, such as head-based and tail-based sampling. Most observability platforms now build on industry standards such as OpenTelemetry for instrumentation, which includes built-in support for sampling.

Why Sampling Is Necessary in Modern Distributed Systems

Sampling has become important because applications now generate far more data (e.g., requests, background jobs, retries, or database calls) than any system can realistically store or analyze completely.

Storing and querying all that data is expensive and makes observability slower and harder to use. Teams want to keep systems usable, responsive, and meaningful, even as traffic and complexity grow. Here are the reasons in detail.

- Unmanageable data volumes: Modern applications are built from many small services, and each user request passes through several of them: an API gateway, backend services, caches, databases, and so on. Each step adds telemetry, which can add up to millions of traces and billions of spans per day.

- Storage difficulty: You may think it’s safe to keep every trace from every request, but this data is difficult to store and scale. Over time, observability tools become harder to use instead of more helpful.

- Rising costs: It’s costly to store, index, and query too many traces. They consume significant compute, storage, and bandwidth resources, so your overall observability costs can rise quickly.

- Slow queries: When you store too many traces, the system takes longer to answer queries. Engineers need fast answers during incidents, and an observability tool that can’t respond in time is of little use.

- Reliability issues: Many assume more data means greater visibility, but collecting everything can gradually make operations less reliable. Important signals, such as slow or failing user requests, also become harder to find.
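To see how quickly the volume in the first bullet adds up, here is a back-of-the-envelope estimate. The traffic rate (1,000 requests per second) and trace size (20 spans per trace) are hypothetical inputs, and the sketch assumes one trace per request.

```python
# Back-of-the-envelope trace volume estimate.
# Inputs are hypothetical; plug in your own traffic numbers.
SECONDS_PER_DAY = 24 * 60 * 60  # 86,400


def daily_volume(requests_per_second: float, spans_per_trace: float):
    """Return (traces_per_day, spans_per_day), assuming one trace per request."""
    traces_per_day = requests_per_second * SECONDS_PER_DAY
    spans_per_day = traces_per_day * spans_per_trace
    return traces_per_day, spans_per_day


if __name__ == "__main__":
    traces, spans = daily_volume(requests_per_second=1_000, spans_per_trace=20)
    print(f"{traces:,.0f} traces/day, {spans:,.0f} spans/day")
    # 86,400,000 traces/day, 1,728,000,000 spans/day
```

Even this modest, hypothetical service produces tens of millions of traces and well over a billion spans per day before any sampling.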

When you apply sampling, you can preserve vital information to debug system issues and get rid of low-value data.

At scale, sampling is less about reducing data volume and more about preserving diagnostically useful signals under load. This is where newer, behavior-aware sampling approaches have emerged. Platforms such as CubeAPM focus on retaining traces that explain failures, latency, and abnormal execution paths, rather than relying solely on fixed rates or early decisions.

Common Sampling Strategies in Observability Tools

For years, most observability platforms have relied on some common sampling strategies. They are useful, but have tradeoffs that teams usually notice only after they run systems at scale.

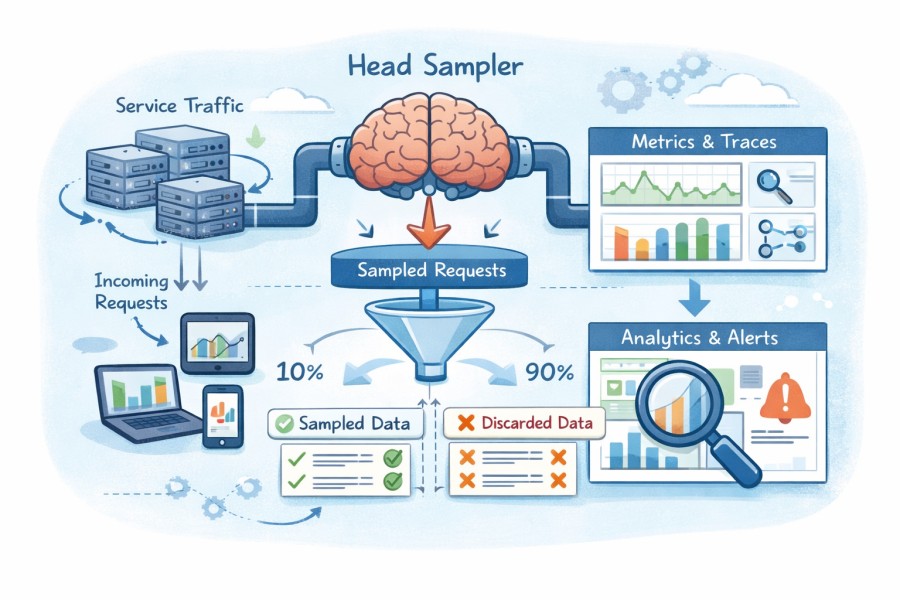

Head-Based Sampling

In head-based sampling, the system decides whether to keep or drop a trace at the very start, as soon as the first service receives the request. That decision then applies to the request’s entire path as it moves through different services.

Pros: Head-based sampling is easy to apply and affordable to run because the decision happens early. There’s no waiting, buffering, or extra infrastructure. Many organizations find head-based sampling useful due to its simplicity.

For example, a service might keep one out of every hundred requests and drop the rest. At steady traffic levels, this gives teams a reasonable picture of how the system usually behaves.

Cons: The problem shows up when things go wrong later in the request. If a trace is dropped at the start, the system never sees the slow database call, the timeout, or the downstream failure that happens seconds later. So head-based sampling can miss exactly the traces engineers need for root cause analysis during incidents.
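A minimal sketch of head-based sampling, assuming a hypothetical `TraceContext` that carries the keep/drop flag from service to service (similar in spirit to how OpenTelemetry’s parent-based sampling honors the caller’s decision). It illustrates the con above: once the root drops a trace, a later slow, failing span is never recorded.

```python
import random
from dataclasses import dataclass


@dataclass(frozen=True)
class TraceContext:
    """Illustrative stand-in for propagated trace context (not a real SDK type)."""
    trace_id: int
    sampled: bool  # decided once at the root, then carried to every downstream service


def start_trace(rate: float, rng: random.Random) -> TraceContext:
    # Head-based decision: made at the entry point, before the outcome is known.
    return TraceContext(trace_id=rng.getrandbits(128), sampled=rng.random() < rate)


def record_span(ctx: TraceContext, store: list, name: str,
                duration_ms: float, error: bool) -> None:
    # Downstream services reuse the upstream flag instead of re-deciding,
    # so a slow or failing span in an unsampled trace is never recorded.
    if ctx.sampled:
        store.append((ctx.trace_id, name, duration_ms, error))


if __name__ == "__main__":
    rng = random.Random(7)
    store: list = []
    kept_traces = 0
    for _ in range(10_000):
        ctx = start_trace(rate=0.01, rng=rng)  # keep roughly 1 in 100
        record_span(ctx, store, "api.gateway", 12.0, error=False)
        kept_traces += ctx.sampled
    print(f"kept {kept_traces} of 10,000 traces")
```

The decision is cheap and needs no buffering, which is exactly why it cannot react to what happens later in the request.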

Tail-Based Sampling

Instead of deciding upfront, the system collects spans as the request flows through services. Once the trace completes, it evaluates what actually happened. This is tail-based sampling. If a user request is slow, fails, or behaves suspiciously, the system keeps that trace. If there’s a normal request, the system can drop it safely.

Pros: Because tail-based sampling decides with the complete trace in view, its decisions are more accurate. For example, the system can retain a trace if it takes 10x longer than usual to respond or if it returns an error.

Cons: Tail-based sampling is more complex than head-based sampling. The system must temporarily hold spans, in memory or on disk, until each request completes. When traffic is high, compute cost and operational overhead increase, and the setup may need regular adjustments to stay reliable.
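The buffer-then-decide shape of tail-based sampling can be sketched as follows. To keep it short, the sketch assumes the root span arrives last and marks the trace as complete; real tail samplers, such as the OpenTelemetry Collector’s tail sampling processor, instead wait for a configurable decision window. The policy (keep on any error, or on root latency above a threshold) and all names are illustrative.

```python
from __future__ import annotations

from collections import defaultdict
from dataclasses import dataclass


@dataclass(frozen=True)
class Span:
    trace_id: str
    name: str
    duration_ms: float
    error: bool
    is_root: bool = False  # assumption: the root span arrives last and completes the trace


class TailSampler:
    """Buffer spans per trace and decide only once the whole trace is visible."""

    def __init__(self, latency_threshold_ms: float) -> None:
        self.latency_threshold_ms = latency_threshold_ms
        # This buffer is the memory cost tail sampling pays under high traffic.
        self.buffer: dict[str, list[Span]] = defaultdict(list)
        self.kept: dict[str, list[Span]] = {}

    def add(self, span: Span) -> None:
        self.buffer[span.trace_id].append(span)
        if span.is_root:
            self._decide(span.trace_id)

    def _decide(self, trace_id: str) -> None:
        spans = self.buffer.pop(trace_id)
        root = next(s for s in spans if s.is_root)
        # The policy sees the complete trace: any failed span, or slow end-to-end latency.
        if any(s.error for s in spans) or root.duration_ms > self.latency_threshold_ms:
            self.kept[trace_id] = spans
        # Healthy traces are simply discarded along with their buffered spans.
```

Every span of every in-flight trace sits in `buffer` until its decision, which is where the memory and capacity-planning overhead described above comes from.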

Probabilistic and Fixed-Rate Sampling

Probabilistic and fixed-rate sampling strategies keep only a portion of all traces to control data volume and costs.

- Fixed-rate sampling: The system keeps a ‘fixed’ percentage of traces (e.g., 5%), regardless of traffic patterns or request behavior.

- Probabilistic sampling: Each trace has a set probability of being chosen, and the system uses randomization to pick traces so that the results are unbiased. Over time, the outcome is similar: a predictable fraction of traces is retained.
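Probabilistic samplers often derive the random choice from the trace ID itself, so every service that sees the same trace reaches the same answer without coordinating. The sketch below mirrors the general idea behind OpenTelemetry’s `TraceIdRatioBased` sampler (a threshold on trace-ID bits derived from the ratio); it is a stdlib illustration, not the SDK’s code.

```python
import random

LOW_64_BITS = (1 << 64) - 1


def ratio_bound(rate: float) -> int:
    """Threshold on the low 64 bits of a trace ID; rate=0.05 admits about 5% of IDs."""
    return round(rate * (1 << 64))


def should_sample(trace_id: int, rate: float) -> bool:
    # Deterministic in the trace ID: every service using the same rate agrees,
    # and the decision ignores latency, errors, or anything else about the request.
    return (trace_id & LOW_64_BITS) < ratio_bound(rate)


if __name__ == "__main__":
    rng = random.Random(1)
    trace_ids = [rng.getrandbits(128) for _ in range(100_000)]
    kept = sum(should_sample(t, 0.05) for t in trace_ids)
    print(f"kept {kept} of {len(trace_ids)} traces")  # roughly 5,000
```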

Pros: These approaches are easy to understand and easy to budget for. If traffic doubles, sampled data usually doubles as well.

Cons: Neither strategy understands what’s inside a trace. Both treat a fast, healthy request the same as a slow, failing one. During rare incidents or short spikes, important traces can fall into the portion that gets dropped, and engineers often discover this only during debugging or root cause analysis (RCA).
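A quick calculation shows how a content-blind sampler loses short incidents. Assuming each failing request is its own trace and the sampler keeps each one independently with probability p, the chance of keeping none of N failing traces is (1 - p)^N. The incident size and sampling rate below are hypothetical.

```python
def expected_kept(failing_traces: int, rate: float) -> float:
    """Average number of failing traces a content-blind sampler keeps."""
    return failing_traces * rate


def p_keep_none(failing_traces: int, rate: float) -> float:
    """Probability that independent sampling at `rate` keeps none of the failing traces."""
    return (1.0 - rate) ** failing_traces


if __name__ == "__main__":
    # Hypothetical short incident: 50 failing requests under 1% sampling.
    print(f"expected failing traces kept: {expected_kept(50, 0.01):.1f}")  # 0.5
    print(f"chance of keeping none: {p_keep_none(50, 0.01):.1%}")          # 60.5%
```

In other words, under these assumptions a 50-request error burst at 1% sampling leaves no trace at all more often than not.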

Limitations of Traditional Sampling Strategies

These approaches solve the basic problem of scale, but they all hit similar limits as systems grow:

- Missed anomalies: When sampling decisions lack context, rare errors and short-lived issues can slip through.

- Manual tuning: As traffic patterns change, teams may have to adjust rates and rules repeatedly.

- Reduced trust: Trust issues arise when engineers can’t rely on observability data during outages.

Sampling Is a System Design Decision, Not a Configuration Toggle

Many teams treat sampling as a setting they can tweak later. In practice, sampling shapes how observability behaves when your systems are under stress, which makes it a design decision. Here’s why sampling decisions matter at the system level:

- Incident response: Sampling determines which traces are available during an outage. When you design it well, it shows engineers slow paths, failing services, and error propagation. If you treat it as an afterthought, useful traces may be missing exactly when you need them.

- Budgeting: Sampling directly controls how your stored telemetry volume grows over time. Collecting everything can drive costs up sharply, but dropping data aggressively can hide important issues.

- Trust issues: When engineers repeatedly find gaps in the data while debugging, they stop trusting observability tools and start relying on guesswork instead.

When you treat sampling as a core part of system design, the important data is there at the right time, and observability stays reliable as your systems grow.

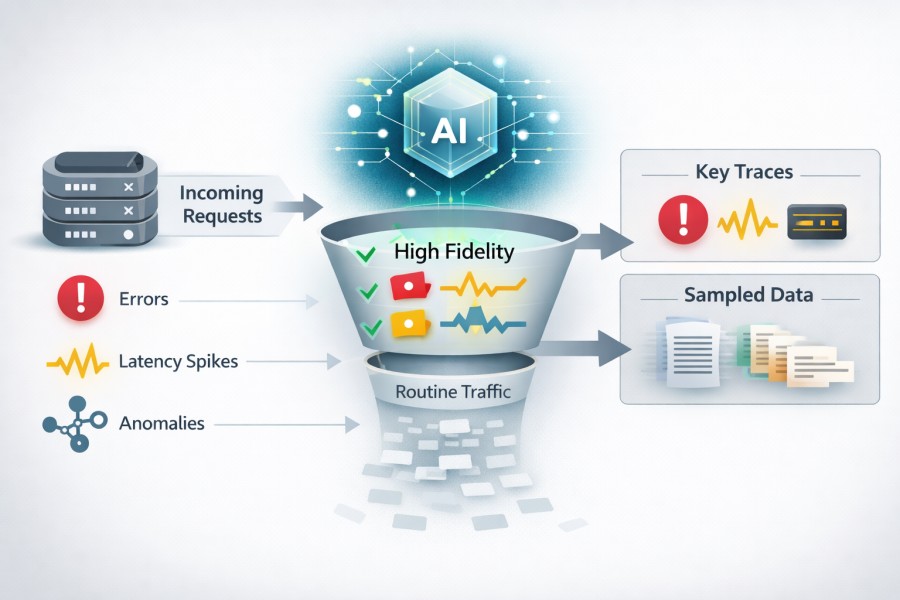

Introducing Smart Sampling in CubeAPM

Traditional sampling forces teams to choose between simplicity, cost, and accuracy. CubeAPM’s Smart Sampling takes a different approach: instead of asking how much data to keep, it asks which data matters.

Smart Sampling is context-aware and adaptive. As requests flow through the system, CubeAPM uses advanced AI algorithms to evaluate their behavior in real time. Traces associated with errors, latency spikes, or abnormal execution paths are retained at higher fidelity, while routine traffic is sampled more aggressively.

This preserves critical context during failures without storing millions of low-value traces. Smart Sampling focuses on which data is important rather than on how much is kept, and it adapts to real behavior, so teams can see cause and effect clearly while debugging.

How Smart Sampling Works

Let’s look at how CubeAPM’s Smart Sampling works in three phases: the decision phase, the adjustment phase, and the adaptive phase.

Decision Phase

CubeAPM performs all metric computations on unsampled data, so metrics such as error percentage and requests per minute (RPM) are calculated on the entire dataset. Next, CubeAPM’s AI-based Smart Sampling algorithm decides which traces to retain and which to drop.
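The ordering described here, metrics first and sampling second, can be sketched as below. This is a generic illustration, not CubeAPM’s code: `TraceSummary`, `process_window`, and the `keep` callback (a placeholder for whatever sampling policy runs next) are hypothetical names. The point is that RPM and error percentage are computed before any trace is dropped, so sampling cannot distort them.

```python
from __future__ import annotations

from dataclasses import dataclass
from typing import Callable, Iterable


@dataclass(frozen=True)
class TraceSummary:
    trace_id: str
    duration_ms: float
    error: bool


def process_window(traces: Iterable[TraceSummary], window_minutes: float,
                   keep: Callable[[TraceSummary], bool]) -> tuple[dict, list[TraceSummary]]:
    all_traces = list(traces)
    total = len(all_traces)
    errors = sum(t.error for t in all_traces)
    # Metrics use every trace in the window, before any sampling decision.
    metrics = {
        "rpm": total / window_minutes,
        "error_pct": 100.0 * errors / total if total else 0.0,
    }
    # Sampling only decides which traces are stored for later investigation.
    retained = [t for t in all_traces if keep(t)]
    return metrics, retained
```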

Smart Sampling’s decisions are context-based, instead of fixed percentages or probabilities. When a user request goes through different services in a system, CubeAPM constantly evaluates data that correlates with issues, such as:

- Errors: These are failed requests, unexpected status codes, and errors in services

- Latency: These are requests that take longer than usual to respond

- Abnormal behavior: These are deviations in request paths or suspicious patterns

CubeAPM tracks these signals to identify which traces are routine or normal and which are not normal (worth keeping for investigation).
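One way to turn the three signal groups above into a per-trace label is a simple rule set. The sketch is hypothetical: the `classify` function, the error/5xx rule, the 3x-baseline-p95 latency rule, and the "unseen service path" test for abnormal behavior are assumptions chosen for illustration, not a description of CubeAPM’s AI-based algorithm.

```python
from __future__ import annotations

from dataclasses import dataclass
from enum import Enum


class TraceClass(Enum):
    ERROR = "error"
    SLOW = "slow"
    ABNORMAL = "abnormal"
    ROUTINE = "routine"


@dataclass(frozen=True)
class TraceSummary:
    duration_ms: float
    status_code: int
    service_path: tuple[str, ...]  # services the request touched, in order
    error: bool = False            # any span reported an error


def classify(trace: TraceSummary, baseline_p95_ms: float,
             known_paths: set[tuple[str, ...]], slow_factor: float = 3.0) -> TraceClass:
    # Hypothetical rules and precedence; the thresholds are assumptions.
    if trace.error or trace.status_code >= 500:
        return TraceClass.ERROR
    if trace.duration_ms > slow_factor * baseline_p95_ms:
        return TraceClass.SLOW
    if trace.service_path not in known_paths:
        return TraceClass.ABNORMAL  # an execution path not seen in healthy traffic
    return TraceClass.ROUTINE
```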

Adjustment Phase

With this context, the system adjusts retention. It down-samples normal, healthy requests. These traces tend to look alike, add little new information, and dominate volume at scale. Reducing them lowers storage and query pressure without losing meaningful context.

At the same time, the system preserves slow, failing, or anomalous traces at higher fidelity. It keeps the spans that explain where time was spent, how errors propagated, and which dependencies played a role. So, when engineers investigate an issue, the data they need is already there.
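The adjustment step amounts to giving each class of trace its own keep probability. In this minimal sketch the rates are hypothetical: every error, slow, or abnormal trace is kept, and routine traces are kept at 1%. Class labels are plain strings, and `KEEP_RATE` and `retain` are illustrative names.

```python
import random

# Hypothetical keep probabilities per trace class.
KEEP_RATE = {"error": 1.0, "slow": 1.0, "abnormal": 1.0, "routine": 0.01}


def retain(trace_class: str, rng: random.Random) -> bool:
    # random() returns a value in [0.0, 1.0), so a rate of 1.0 always keeps the trace.
    return rng.random() < KEEP_RATE[trace_class]


if __name__ == "__main__":
    rng = random.Random(0)
    incoming = ["routine"] * 9_900 + ["slow"] * 60 + ["error"] * 40
    kept = [c for c in incoming if retain(c, rng)]
    print(f"kept {len(kept)} of {len(incoming)}; errors kept: {kept.count('error')}")
```

With these numbers roughly 200 of 10,000 traces are stored (about 99 routine plus all 100 slow or failing ones), so the stored set is dominated by traces worth investigating.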

Adaptive Phase

Smart Sampling adapts as the system changes. It’s not static like other sampling strategies.

- When traffic increases, but behavior stays healthy, CubeAPM continues to reduce low-value noise.

- When error rates shoot up or latency patterns shift, retention automatically increases around those signals.

- When systems recover, sampling scales back without the need for manual reconfiguration.

Because of this adaptive behavior, engineers don’t need to keep retuning sampling as workloads and traffic change.
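The three adaptive behaviors above can be approximated by a small controller that sets the keep rate for routine traces from live metrics. Everything here is a hypothetical sketch (the class name, the 5x error and 2x p95 triggers, the 50-traces-per-second routine budget, and the 10% boosted rate), not CubeAPM’s algorithm. Error, slow, and abnormal traces are assumed to be kept in full by a separate rule; this only governs routine traffic.

```python
from dataclasses import dataclass


@dataclass
class AdaptiveRoutineRate:
    """Hypothetical controller for the keep rate of routine (healthy) traces."""
    baseline_error_rate: float            # e.g. 0.001 for a 0.1% baseline
    baseline_p95_ms: float                # e.g. 120.0
    routine_budget_per_sec: float = 50.0  # target routine traces stored per second
    boosted_rate: float = 0.10            # keep rate while the system looks degraded
    error_trigger: float = 5.0            # degraded if error rate > 5x baseline
    latency_trigger: float = 2.0          # degraded if p95 > 2x baseline

    def rate(self, rps: float, error_rate: float, p95_ms: float) -> float:
        degraded = (error_rate > self.error_trigger * self.baseline_error_rate
                    or p95_ms > self.latency_trigger * self.baseline_p95_ms)
        if degraded:
            # Errors or latency shifted: widen retention around the incident.
            return self.boosted_rate
        if rps <= 0:
            return 1.0
        # Healthy: hold routine volume near a fixed budget, so more traffic
        # lowers the keep rate instead of growing stored volume.
        return min(1.0, self.routine_budget_per_sec / rps)
```

Because the rate is recomputed from current metrics on every call, it falls back on its own once error rate and p95 return to baseline, with no manual reconfiguration.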

Example: Smart Sampling in Practice

Consider a high-traffic microservices system handling 50,000+ requests per second across dozens of services:

- During normal operation, ~98–99% of requests complete quickly and follow predictable execution paths. These routine traces are aggressively sampled to reduce noise and storage overhead.

- When a subset of requests begins to experience elevated latency (for example, p95 latency rising from 120 ms to 800+ ms because of a downstream database slowdown), Smart Sampling automatically increases retention for the affected traces.

- If error rates rise from a baseline of <0.1% to 2–3%, failed requests and their full execution paths are preserved, capturing how errors propagate across services and where time is spent.

- Once latency and error rates return to normal, sampling automatically scales back, reducing retained trace volume by 60–80% without any manual configuration changes.

This approach ensures engineers have full visibility into performance degradation and failures when they occur, while avoiding the cost and operational overhead of retaining every healthy request.
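The numbers in this example translate into per-second volumes as follows. The 50,000 requests per second and the error rates come from the scenario above; the 1% fixed-rate sampler is a hypothetical baseline added for contrast.

```python
RPS = 50_000  # request rate from the example scenario


def per_second(pct_of_requests: float, rps: float = RPS) -> float:
    """Requests per second that make up a given percentage of traffic."""
    return rps * pct_of_requests / 100


if __name__ == "__main__":
    baseline = per_second(0.1)                 # up to ~50 failing requests/s
    incident = (per_second(2), per_second(3))  # 1,000 to 1,500 failing requests/s
    fixed_rate = 0.01                          # hypothetical 1% content-blind sampler
    print(f"baseline failures: up to {baseline:.0f}/s")
    print(f"incident failures: {incident[0]:,.0f}-{incident[1]:,.0f}/s")
    print(f"kept at 1% fixed rate: {incident[0] * fixed_rate:.0f}-{incident[1] * fixed_rate:.0f}/s")
```

A content-blind 1% sampler would store only 10 to 15 of the 1,000 to 1,500 failing requests per second, whereas error-aware retention keeps the execution paths of all of them.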

Benefits of Smart Sampling for Engineering Teams

Smart Sampling changes how teams interact with observability. The biggest gains show up in clarity, cost control, reliability, and operational effort. Let’s understand these benefits of Smart Sampling in detail.

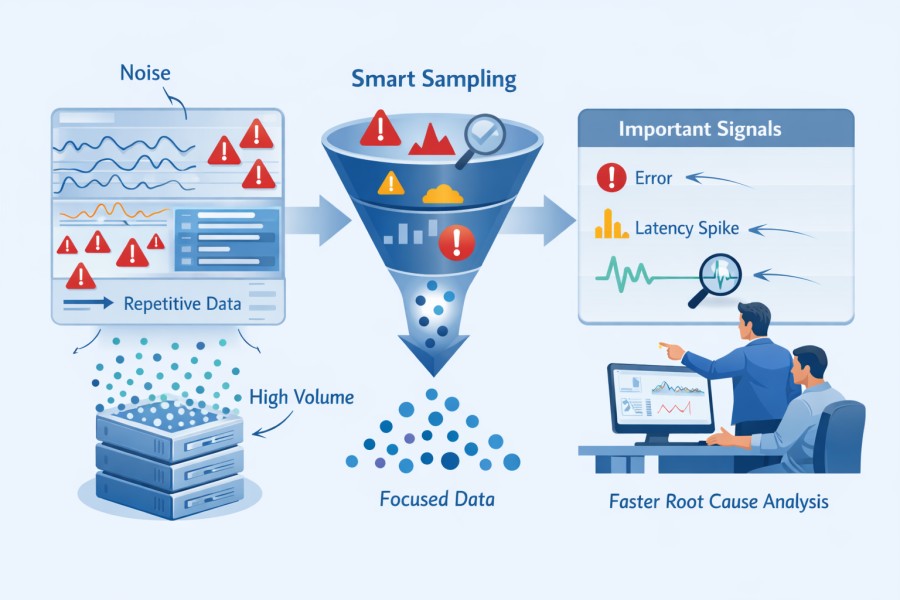

Higher Signal-to-Noise Ratio

Most observability tools suffer from the same problem: too much repetitive data. When a system treats every request equally, dashboards fill up with low-value data, and finding what matters becomes difficult.

Smart Sampling reduces repetitive requests and keeps important traces, such as those linked to errors, latency spikes, or abnormal behavior. As a result, dashboards can feel more focused, and engineers spend less time filtering noise and more time on the signals that pinpoint real problems.

This also speeds up root cause discovery. When an incident happens, the most relevant traces are already there. Teams do not have to hunt through thousands of low-value traces to find the one that explains the failure.
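A small calculation makes the signal-to-noise point concrete. Assume, hypothetically, a 0.1% error rate and a 1% keep rate for routine traffic, and compare a uniform 1% sampler with one that keeps every error trace. `stored_mix` is an illustrative helper.

```python
def stored_mix(error_rate: float, error_keep: float, routine_keep: float):
    """Return (fraction of incoming traffic stored, share of stored traces that are errors)."""
    errors = error_rate * error_keep
    routine = (1.0 - error_rate) * routine_keep
    stored = errors + routine
    return stored, errors / stored


if __name__ == "__main__":
    uniform = stored_mix(0.001, error_keep=0.01, routine_keep=0.01)
    prioritized = stored_mix(0.001, error_keep=1.0, routine_keep=0.01)
    print(f"uniform:     store {uniform[0]:.2%} of traffic, {uniform[1]:.1%} errors")
    print(f"prioritized: store {prioritized[0]:.2%} of traffic, {prioritized[1]:.1%} errors")
```

Keeping every error raises stored volume only from about 1.00% to 1.10% of traffic, while the share of stored traces that are errors rises from 0.1% to about 9.1%.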

Cost and Storage Efficiency

Uncontrolled tracing data grows fast in microservices and high-throughput systems. Storing every trace can be expensive and hard to manage.

As Smart Sampling keeps important traces and drops low-value ones, storage and compute costs go down. The system stays efficient as traffic grows, which makes it easier for teams to handle higher workloads and to plan capacity and costs.

Improved Reliability and MTTR

Sampling decisions matter most during incidents. Traditional approaches, such as fixed-rate or head-based sampling, can drop the very traces engineers need when systems behave badly.

Smart Sampling increases retention when something goes wrong. It automatically preserves slow requests, errors, and unusual patterns. That means teams get access to essential traces when they need them most.

This reduces mean time to resolution (MTTR). Engineers can easily see failed requests, high latency, and interaction between services during the incident.

Easier Operations

Traditional sampling may require constant tuning, such as adjusting rates, customizing sampling rules, or revising settings when traffic changes. This takes engineering time and adds cost.

Smart Sampling adapts automatically to system behavior. As a result, engineers don’t have to guess the right sampling percentages or maintain complex rules. This simplifies operations, so teams can focus more on improving the reliability and performance of their systems.

Smart Sampling vs Traditional Sampling Strategies

Smart Sampling vs Head-Based Sampling

Head-based sampling makes a decision at the very start of a request, before the system knows how that request will behave. Once the decision is made, it applies to the entire trace, even if the request later fails or becomes slow.

Early decisions can miss rare but critical failures. Some issues appear only under specific conditions or deep in a request path, and head-based sampling loses those traces whenever they weren’t selected at the start.

- What it optimizes for: Simplicity and low overhead while ingesting data

- What it sacrifices: Accuracy in unexpected cases, such as requests that become slow or fail during execution

Smart Sampling, in contrast, makes decisions based on context. It considers what happens as a request moves through the components of a system, so it can evaluate behavior and outcomes before deciding what to keep.

Smart Sampling vs Tail-Based Sampling

Tail-based sampling decides whether to keep a trace only after the trace completes. This lets decisions use the full context, such as total latency or error status. The tradeoff is that the system must buffer large volumes of spans while waiting for traces to complete.

- What it optimizes for: Decision quality by evaluating complete traces

- What it sacrifices: Operational simplicity. It involves buffering, extra compute, and careful capacity planning during traffic spikes.

Smart Sampling also uses observed behavior, but it avoids large tail-sampling buffers. It prioritizes traces based on signals such as errors and latency, which reduces infrastructure pressure while still preserving high-value traces when behavior becomes abnormal.

Smart Sampling vs Fixed-Rate or Probabilistic Sampling

Fixed-rate or probabilistic sampling keeps a constant percentage of traffic, regardless of what that traffic represents. A healthy request and a failing request have the same chance of being retained.

Equal treatment becomes dangerous during incidents. When failure rates are low but impact is high, fixed-rate sampling can easily drop the few traces that explain the problem.

- What it optimizes for: Predictable data volume and simple setups

- What it sacrifices: Situational awareness during unexpected failures, where every relevant trace matters.

Smart Sampling does not treat all traffic equally. It differentiates between healthy behavior and abnormal behavior, increasing retention when errors or latency appear and reducing it when systems are stable.

Smart Sampling and OpenTelemetry Compatibility

OpenTelemetry (OTel) is a vendor-neutral observability framework that provides a standard for collecting and exporting traces, metrics, and logs. CubeAPM supports OTel natively and is compatible with many SDKs, collectors, and exporters. Unlike in many other tools, OTel support in CubeAPM is not a bolt-on feature.

- Ingestion: CubeAPM accepts telemetry in the OpenTelemetry Protocol (OTLP) format and works with existing OpenTelemetry-instrumented services without extra adjustments. This means teams can still keep their current instrumentation setup and tooling.

- Decision: Because Smart Sampling happens at the platform level, CubeAPM does not require you to embed sampling logic in the OpenTelemetry SDK itself. Instrumented services send full OTLP telemetry to the observability backend, and CubeAPM applies context-aware decisions as data arrives, based on signals such as latency, error rates, and anomalies.

Benefits: This design avoids lock-in to vendor-specific or custom agents. Your engineers don’t have to rewrite tracing code or adopt proprietary instrumentation; auto-instrumentation or manually created spans are enough for smart retention. Important traces are preserved while noise and cost go down.

When Smart Sampling Matters Most: Use Cases

Smart Sampling is useful in environments where telemetry volume grows faster than teams can store or analyze it. Here are some common use cases:

- High-throughput systems with massive trace volumes: Systems that handle a large number of requests produce massive trace volumes, and retaining every request is expensive and difficult. Smart Sampling reduces routine traffic while keeping slow and failing requests.

- Microservices and distributed systems: In these systems, a user request touches many services along its path, and traditional sampling can drop important data somewhere along the way. Smart Sampling preserves traces that show abnormal behavior across services, which helps teams understand the issue thoroughly.

- Limited budget: Teams with budget constraints look for cost-efficient observability. With fixed-rate sampling, teams have to choose between visibility and budget. Smart Sampling retains high-value data to help you control storage and compute costs and still get deep visibility.

- Scaling teams: Telemetry data grows faster as your systems grow. Smart Sampling cuts down noise and retains meaningful context to keep observability useful. Teams have access to important data for debugging and root cause analysis without being flooded with low-value data.

What Goes Wrong When Sampling Is Implemented Incorrectly

Sampling is useful in observability, but issues surface when it’s applied poorly. The problem is that these issues are hard to detect until an incident is in progress. Here are some of them:

- Latency: Many performance issues live in the slowest few percent of requests. Because these requests look normal at the start, fixed-rate or early sampling may drop them. When tail latency is missed, dashboards may show healthy averages while your users experience slow or stalled requests.

- Error bursts: Probabilistic sampling treats all traffic equally. During short error bursts, the system may capture only a small fraction of failing requests. When that happens, error patterns might appear smaller or less frequent than they really are. This may delay error detection and diagnosis.

- System failures: Partial system failures are dangerous. A downstream service may degrade or fail for only a fraction of users or a single region. Poor sampling can miss these patterns, so you may believe systems are healthy while real users are suffering.

- Debugging difficulties: Request volumes and the risk of failure both increase during traffic spikes. At these moments, many sampling systems drop more data to protect infrastructure. If they drop essential traces, engineers lose visibility into issues exactly when they need it.
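The latency point in this list is easy to reproduce with synthetic numbers: 980 requests at 100 ms and 20 stalled requests at 3 seconds (hypothetical values). The nearest-rank `percentile` helper is a simple stand-in for a real metrics library.

```python
import math
from statistics import mean


def percentile(values, pct):
    """Nearest-rank percentile for 0 < pct <= 100."""
    ordered = sorted(values)
    rank = math.ceil(pct * len(ordered) / 100)
    return ordered[max(rank, 1) - 1]


latencies_ms = [100.0] * 980 + [3_000.0] * 20  # synthetic: 2% of requests stall

if __name__ == "__main__":
    print(f"mean = {mean(latencies_ms):.0f} ms")            # 158 ms
    print(f"p99  = {percentile(latencies_ms, 99):.0f} ms")  # 3000 ms
    # A 1% content-blind sampler keeps on average 20 * 0.01 = 0.2 of the stalled requests.
    print(f"expected stalled traces kept at 1%: {20 * 0.01:.1f}")
```

The 158 ms mean looks tolerable, while the 3,000 ms p99 reveals the stalls; if the stalled requests’ traces are also sampled away, the data needed to explain them is gone.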

Conclusion

Sampling is unavoidable in observability, but how you sample determines what you can see.

Traditional approaches reduce volume, but often discard the most valuable signals. CubeAPM’s Smart Sampling preserves meaning by retaining traces tied to errors, latency, and abnormal behavior, while aggressively reducing low-value noise. This keeps observability accurate, affordable, and trustworthy in high-throughput, distributed systems when visibility matters most.

Disclaimer: The information in this article reflects the latest details available at the time of publication and may change as technologies and products evolve.

FAQs

1. What is Smart Sampling in observability?

Smart Sampling is a context-aware approach to sampling that prioritizes retaining high-value telemetry, such as errors, slow requests, and abnormal behavior, while aggressively reducing routine, healthy traffic. Unlike fixed-rate or random sampling, it adapts based on how the system behaves at runtime.

2. How is Smart Sampling different from head-based or tail-based sampling?

Head-based sampling decides early, before outcomes are known, which can drop important traces. Tail-based sampling waits for full trace completion but adds buffering and operational overhead. Smart Sampling evaluates runtime context and behavior to prioritize traces without relying on rigid percentages or heavy tail buffers.

3. Does Smart Sampling reduce observability accuracy?

No. When designed correctly, Smart Sampling improves accuracy by preserving the most diagnostically useful traces. Instead of spreading visibility evenly across all traffic, it concentrates signal around failures, latency spikes, and anomalies where engineers actually need insight.

4. Is Smart Sampling compatible with OpenTelemetry?

Yes. Smart Sampling works with OpenTelemetry instrumentation and standard trace pipelines. Teams can continue using OpenTelemetry SDKs while applying smarter, context-driven sampling decisions at the platform level.

5. When does Smart Sampling matter most?

Smart Sampling matters most in high-throughput systems, microservices architectures, and production environments where tracing everything is too costly. It is especially useful during traffic spikes or incidents, when retaining the right traces is more important than retaining all traces.