Observability is an infrastructure and ownership decision that shapes how systems behave under load, how predictable costs remain over time, and how much control teams retain over their telemetry data.

The trade-offs between SaaS, BYOC, and self-hosted observability models become harder to ignore as systems scale and during traffic spikes or incidents. Teams must reassess these models based on their operational reality rather than on feature lists.

This article compares SaaS, BYOC, and self-hosted observability in production across three dimensions: cost dynamics, latency under stress, and control over data and decisions.

This analysis is based on vendor documentation, production usage patterns, and real-world observability deployments across distributed systems.

What Do We Mean by SaaS, BYOC, and Self-Hosted Observability?

SaaS, BYOC, and self-hosted observability represent fundamentally different architectural and operational models. These differences determine who owns telemetry data, who controls cost and performance behavior, and who is accountable when observability systems are under stress.

SaaS Observability

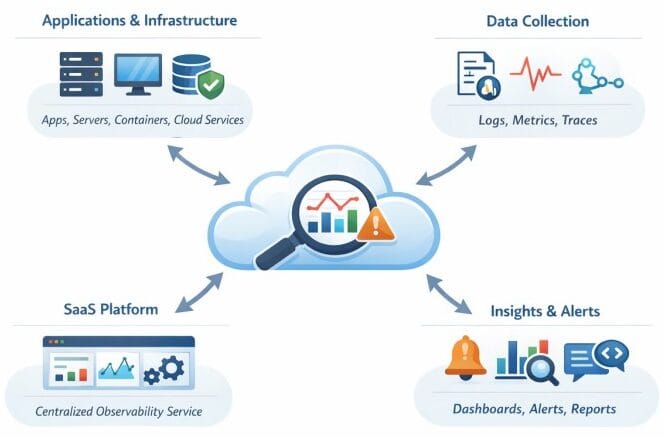

In a SaaS observability model, the vendor operates the ingestion, storage, and query layers as a managed, multi-tenant service.

Key characteristics:

- Telemetry is sent outside the customer environment into vendor-managed infrastructure

- Customers configure instrumentation, sampling, and retention through exposed controls

- Architecture, scaling behavior, and query execution are abstracted away

- Cost, performance, and retention are constrained by vendor policy and shared system design

SaaS reduces infrastructure ownership, but it also limits visibility into how observability behaves under load or during incidents.

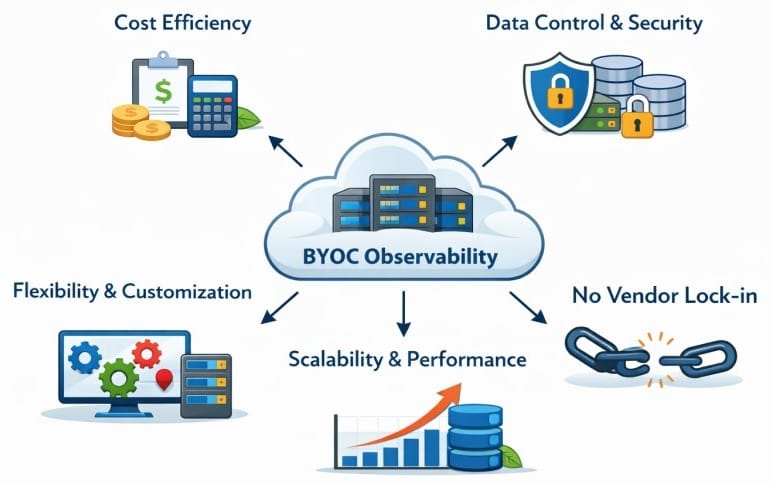

Bring Your Own Cloud (BYOC)

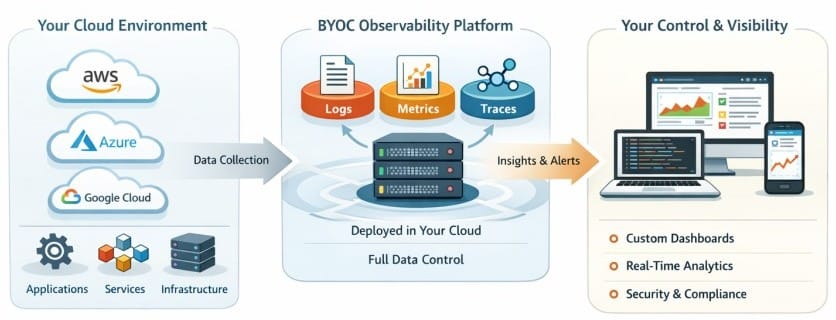

BYOC sits between SaaS and fully self-hosted models. The observability stack runs inside the customer’s cloud account, but the vendor still provides and manages the software.

Key characteristics:

- Telemetry remains within the customer’s cloud boundary

- Operational responsibility shifts rather than disappears

- The vendor defines architecture, supported configurations, and upgrade paths

- The vendor is responsible for monitoring the observability tool itself

- The customer gains more direct ownership of sampling, storage, and retention decisions, within the limits of what the vendor’s software exposes

- The customer gains stronger data locality and easier compliance alignment

BYOC improves control over where data lives, but internal system behavior and lifecycle management are often still vendor-defined.

Self-Hosted Observability

Self-hosted observability refers to models where the customer owns and operates the observability infrastructure end-to-end.

Key characteristics:

- Deployment, scaling, and failure handling are owned by the customer

- Sampling, retention, and storage decisions are explicit

- Modern implementations are cloud-native and OpenTelemetry-aligned

- Observability becomes part of the platform stack, not an external service

This model trades abstraction for transparency and control, making system behavior more predictable as scale and complexity increase.

Common misconceptions to clear up:

- Self-hosted does not mean legacy or manually managed on-prem systems

- SaaS does not eliminate operational responsibility; it shifts where decisions are made

- BYOC does not guarantee full control; ownership depends on which layers remain abstracted

How OpenTelemetry Changes the SaaS vs Self-Hosted Decision

OpenTelemetry changed observability by separating how telemetry is produced from where it is analyzed. This makes deployment models a real choice again, not a consequence of early tooling decisions. With OpenTelemetry in place, teams can evaluate SaaS, BYOC, and self-hosted models based on ownership and behavior.

Vendor-Neutral Instrumentation Reduces Lock-in Risks

Before OTel, “instrumentation” usually meant a vendor agent plus a vendor SDK. Your code, your deployment, and your telemetry schema quietly bent around that vendor’s model. That is why switching platforms was expensive, even if the new tool looked better.

OpenTelemetry changes where lock-in actually happens. It lets you standardize three things early:

- APIs and SDKs for traces, metrics, and logs

- context propagation (how trace context moves between services)

- semantic conventions (what you call common attributes like http.method, db.system, service.name)

Once those are in place, your applications produce telemetry in a vendor-neutral format, and your choice becomes a back-end decision. You can send the same telemetry to different backends without rewriting your services. That is the practical definition of reduced lock-in.

CNCF data shows OTel adoption has been climbing fast for several years, which is a strong signal that this “standardize first, choose backends later” pattern is becoming normal. In CNCF’s Annual Survey 2022 report, OpenTelemetry usage rose from 4% in 2020 to 20% in 2022.

This means:

- SaaS remains viable, but it is no longer the only realistic option to get good instrumentation.

- Self-hosted and BYOC are easier to justify because you are not betting the codebase on a proprietary telemetry format.

- You can run hybrid models longer (some teams do) because the pipeline can fan out.

Telemetry Ownership Shifts Closer to Platform Teams

OTel pushes observability “left” into platform engineering in a very specific way. The OpenTelemetry Collector becomes the control plane for telemetry. It is a first-class piece of infrastructure that lets platform teams enforce policy without touching app code.

Common platform-level controls that become straightforward with OTel:

- Data minimization: drop or hash sensitive attributes before they leave your network

- Tenant boundaries: route by namespace, cluster, environment, or business unit

- Sampling governance: apply consistent sampling rules at gateways, not inside 50 services

- Multi-destination routing: send high-value signals to one backend and long-retention or compliance copies to another

- Cost and blast-radius control: cap exporters, rate-limit noisy sources, quarantine broken emitters
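The first two controls in the list above can be sketched in a few lines. This is a minimal illustration of the idea, not the Collector's actual configuration or API: the attribute keys, the backend names, and the functions `apply_policy` and `route` are all assumptions made for the example.

```python
import hashlib

# Assumed policy for illustration only; these keys are not taken from the article.
SENSITIVE_KEYS = {"user.email", "client.address"}
DROP_KEYS = {"http.request.body"}


def apply_policy(attributes: dict) -> dict:
    """Return a copy of the attributes with drop and hash rules applied."""
    cleaned = {}
    for key, value in attributes.items():
        if key in DROP_KEYS:
            continue  # data minimization: this attribute never leaves the network
        if key in SENSITIVE_KEYS:
            digest = hashlib.sha256(str(value).encode("utf-8")).hexdigest()
            cleaned[key] = digest[:16]  # truncated hash hides the raw value
        else:
            cleaned[key] = value
    return cleaned


def route(attributes: dict) -> str:
    """Pick a (hypothetical) destination name from the deployment environment."""
    env = attributes.get("deployment.environment", "unknown")
    return "primary-backend" if env == "production" else "low-cost-backend"
```

Because both functions run in the pipeline rather than in application code, a platform team could change the rules without redeploying services. A real deployment would likely use a keyed hash instead of plain SHA-256, since unsalted hashes of low-entropy values can be reversed by guessing.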

OTel gives platform teams a clean place to implement choices, such as:

- Who decides what gets retained

- Who decides what gets sampled

- Who decides what leaves the VPC

- Who can change those decisions during an incident

That naturally makes BYOC and self-hosted models more credible, because you can run the policy layer inside your boundary even if you keep the query UI elsewhere.

Makes Self-Hosted and BYOC Models More Viable Than Before

A decade ago, when teams tried self-hosted observability, they got stuck on two problems:

- Instrumentation debt (too many agents, too many SDKs, too many schemas)

- Pipeline debt (no consistent way to filter, sample, and route at scale)

OTel addresses both:

- Consistent libraries and conventions reduce instrumentation entropy

- The Collector gives you a programmable pipeline that is vendor-neutral

That is why BYOC has become a real middle path. You can keep data local to your cloud account, run the telemetry pipeline under your policies, and still let a vendor operate parts of the stack. CNCF also documents the project’s maturity timeline, which helps explain why this conversation has accelerated in the last few years: OpenTelemetry entered CNCF in 2019 and moved to incubating in 2021. It has had enough time to become production-grade in many organizations.

Moreover, OTel enables three deployment patterns that make BYOC and self-hosting less risky:

- dual-write during migrations (old backend and new backend at the same time)

- region-local processing (collect and process in-region, export only what you need)

- segmented storage (hot data vs cold data, different retention tiers, different systems)
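Dual-write, the first pattern above, amounts to sending each batch to every configured destination and isolating failures between them. The sketch below assumes a hypothetical exporter signature (a callable that takes a list of records); it is not an OpenTelemetry API.

```python
from typing import Callable, Dict, List

# Hypothetical exporter signature used only for this example.
Exporter = Callable[[List[dict]], None]


def fan_out(batch: List[dict], exporters: Dict[str, Exporter]) -> Dict[str, bool]:
    """Send one batch to every exporter; a failure in one does not block the others."""
    results = {}
    for name, export in exporters.items():
        try:
            export(list(batch))  # shallow copy so exporters do not share the list object
            results[name] = True
        except Exception:
            results[name] = False  # a real pipeline would log, retry, or buffer here
    return results
```

During a migration, the old and new backends would both appear in `exporters`, and the returned map shows which destinations accepted the batch.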

Why is OpenTelemetry Now Important in Modern Observability Decisions?

If your telemetry generation and your telemetry back-end are coupled, your tool choice becomes a long-term architectural constraint.

OTel decouples them. So, for deciding between SaaS, BYOC, and self-hosted, OpenTelemetry is the baseline that keeps the decision reversible. Because of this, mature teams now start with these questions:

- Where should telemetry be processed (inside boundary or outside)

- What policies must be enforced before export (PII, residency, audit)

- What is the acceptable failure mode (drop, buffer, degrade, sample)

- Who owns pipeline changes during incidents (platform team vs vendor)

- How portable does the data need to be over a 2 to 3-year horizon
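The "acceptable failure mode" question becomes concrete once it is written down as behavior. As one possible answer, the sketch below shows a bounded buffer that drops the oldest items when full and counts what it dropped. The class name `BoundedBuffer` and the drop-oldest policy are assumptions for illustration; other pipelines may prefer to block, sample, or drop the newest items.

```python
from collections import deque


class BoundedBuffer:
    """Fixed-size buffer that drops the oldest item when full and counts the drops."""

    def __init__(self, capacity: int):
        self._items = deque(maxlen=capacity)
        self.dropped = 0

    def put(self, item) -> None:
        if len(self._items) == self._items.maxlen:
            self.dropped += 1  # the deque evicts the oldest item on append
        self._items.append(item)

    def drain(self) -> list:
        """Return buffered items in arrival order and empty the buffer."""
        items = list(self._items)
        self._items.clear()
        return items
```

Exposing `dropped` as a metric is what turns a silent loss into a visible, explainable one.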

Cost Is Not Pricing: How Each Model Behaves at Scale

Cost is what your telemetry actually consumes in ingestion, storage, and query resources. Pricing models only describe how that cost is surfaced. The difference is hard to see at small scale, but at production scale it determines whether cost is controllable or reactive.

Ingestion-Based Pricing vs Infrastructure-Based Cost

SaaS observability is typically priced on ingestion units, hosts, or indexed events. Every emitted span, log line, or metric has a marginal cost, regardless of its operational value. The system has no native concept of “enough data.” Cost scales with emission.

BYOC and self-hosted models anchor cost to infrastructure. Storage, compute, and network become the primary drivers. Telemetry still has cost, but it is mediated by capacity limits, backpressure, and explicit resource allocation. Teams pay for provisioned headroom, not every event.

This difference matters under load. In SaaS, cost rises linearly with telemetry volume. In infrastructure-backed models, cost rises in steps and is bounded by design.
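The contrast between linear and stepped cost can be made concrete with a small model. The prices, node capacity, and function names below are invented for illustration and do not reflect any vendor's pricing.

```python
import math


def ingestion_cost(gb_per_day: float, price_per_gb: float) -> float:
    """Usage-based cost: every extra GB is billed."""
    return gb_per_day * price_per_gb


def infrastructure_cost(gb_per_day: float, gb_per_node: float, node_price: float) -> float:
    """Capacity-based cost: pay per provisioned node, so cost moves in steps."""
    nodes = max(1, math.ceil(gb_per_day / gb_per_node))
    return nodes * node_price
```

With an assumed node capacity of 150 GB per day at a price of 40 per node, 100 GB and 150 GB cost the same, and the bill only steps up once volume crosses 150 GB. The cost is bounded only if the node count is capped; beyond that cap, the system has to shed or sample data instead of growing the bill.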

Cardinality Amplification and Unmodeled Usage Growth

Cardinality is the dominant cost multiplier in modern observability systems. High-cardinality attributes expand index size, memory pressure, and query fan-out.

In SaaS models, this expansion often remains invisible until the bill arrives. Labels derived from request IDs, user IDs, or dynamic payloads quietly multiply cost.

In BYOC and self-hosted setups, index growth, compaction pressure, and query latency increase before cost explodes. That feedback loop forces instrumentation discipline. Teams see the problem while it is still fixable.
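A rough way to see the multiplier: the worst-case number of distinct series for a metric is the product of the number of values each label can take. The label names and counts below are made up for illustration.

```python
from math import prod


def series_count(label_cardinalities: dict) -> int:
    """Upper bound on distinct series for one metric: product of per-label value counts."""
    return prod(label_cardinalities.values())


base = {"service": 20, "endpoint": 50, "status_code": 5}
with_user_id = {**base, "user_id": 10_000}
```

Here `series_count(base)` is 5,000, while adding a single `user_id` label with 10,000 values raises the bound to 50,000,000. Real systems rarely hit the full product, but the bound shows why one unbounded label matters more than many bounded ones.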

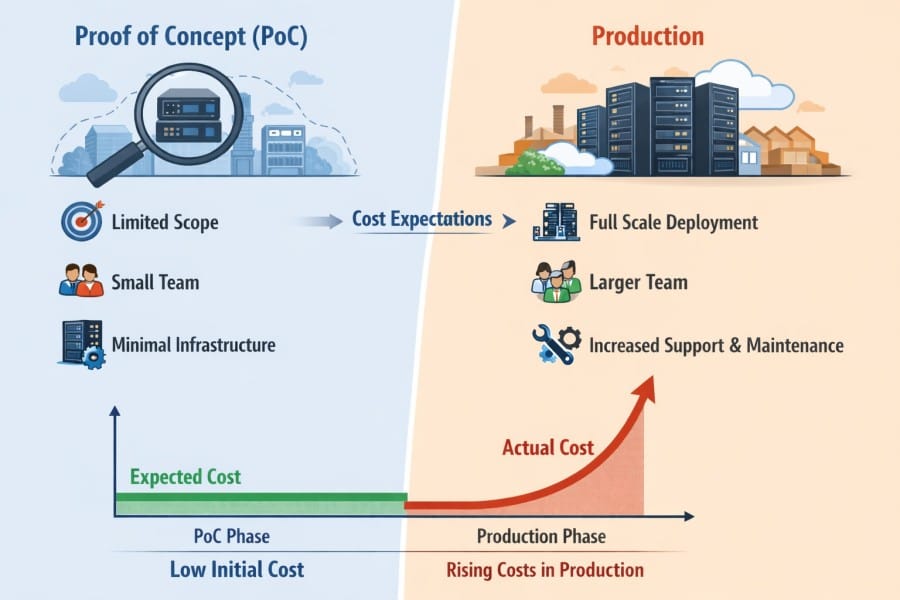

Why Costs Often Look Reasonable in PoC But Diverge in Production

Proofs of concept (PoCs) validate basic functionality. They usually run on limited traffic, short time windows, and ideal system behavior. But PoCs rarely exercise real failure scenarios, retries, partial outages, long-lived services, full data retention, or realistic query patterns.

In production environments, incidents increase telemetry volume at the same time engineers run more queries to investigate issues. In SaaS observability models, both ingestion and querying are typically metered, so the moments when visibility matters most also tend to generate the highest costs.

Infrastructure-backed models handle these spikes differently. Capacity limits, sampling policies, and degraded operating modes help contain costs even when telemetry volume surges. This makes spend more predictable during high-stress events.
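One simple form of such a sampling policy is to derive the keep-rate from provisioned capacity, so that kept volume never exceeds what the pipeline can absorb. This is a sketch under that assumption; the function name and the events-per-second units are illustrative.

```python
def sample_rate_for_capacity(incoming_per_sec: float, capacity_per_sec: float) -> float:
    """Fraction of events to keep so that kept volume does not exceed capacity."""
    if incoming_per_sec <= 0:
        return 1.0  # nothing arriving, so no need to sample
    return min(1.0, capacity_per_sec / incoming_per_sec)
```

If an incident quadruples traffic from 5,000 to 20,000 events per second against a capacity of 5,000, the keep-rate falls to 0.25 and spend stays flat. The trade-off is reduced fidelity, which is why the rule for what gets kept matters as much as the rate.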

Cost Predictability vs Cost Optimization Across Models

Cost optimization asks how low the bill can go, but cost predictability asks how the system behaves under stress.

SaaS models optimize for frictionless onboarding and elastic scaling. Predictability declines as systems grow and cardinality increases. BYOC and self-hosted models trade convenience for bounded behavior. Cost becomes a function of architecture rather than of surprise usage. Without those bounds, observability cost at scale can drift away from operational value.

Latency as a Decision Factor (Not an Afterthought)

Many teams treat latency in observability as secondary to application performance. In reality, the observability pipeline behaves like a separate system with its own bottlenecks and failure modes. Observability latency determines how quickly engineers can understand what is happening in production when systems are unstable and decisions must be made under pressure.

As systems scale, the gap between what is happening and what engineers can see widens. Architectural choices around where telemetry flows, how it is processed, and who controls the infrastructure it runs on determine this gap.

SaaS, BYOC, and self-hosted observability models introduce latency in different places, and those differences only become visible under load.

Telemetry Latency vs Application Latency

Application latency measures how long a request takes to complete. Telemetry latency measures how long it takes for evidence of that request to reach an engineer. These timelines often diverge.

An application can remain fast while observability becomes slow. Requests complete in milliseconds, but traces arrive seconds later, logs appear in bursts, and metrics lag behind reality. During incidents, this delay turns observability into a retrospective tool rather than a live debugging tool.

So, fast applications do not guarantee fast observability. The deployment model determines whether telemetry delay is predictable or opaque.

How deployment models influence telemetry latency

- In SaaS observability, telemetry is optimized for throughput and cost efficiency. Data is buffered, batched, sampled, and exported asynchronously to remote ingestion endpoints. These optimizations reduce host overhead, but they increase end-to-end latency between execution and visibility.

- In BYOC and self-hosted models, telemetry processing runs closer to the application. Batching and sampling still exist, but flush intervals, buffer sizes, and failure behavior are controlled locally. Instead of remote ingestion queues, latency is bounded by known infrastructure limits.
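The effect of batch size and flush interval on delay can be estimated directly. The sketch below assumes a generic size-or-timeout batcher, where a batch is exported when it fills or when a timer fires, whichever happens first. It covers only time spent in the batcher, not network or ingestion delay, and the function name is illustrative.

```python
def max_batch_wait(events_per_sec: float, batch_size: int, flush_interval_s: float) -> float:
    """Worst-case seconds an event waits in the batcher before export.

    A batch is sent when it fills or when the timer fires, whichever comes first.
    """
    if events_per_sec <= 0:
        return flush_interval_s
    time_to_fill = batch_size / events_per_sec
    return min(time_to_fill, flush_interval_s)
```

At low traffic the flush interval dominates, so a quiet service with a 5-second interval can show data up to 5 seconds late. At high traffic the batch fills quickly and the interval stops mattering. Owning these two parameters is what makes the delay predictable.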

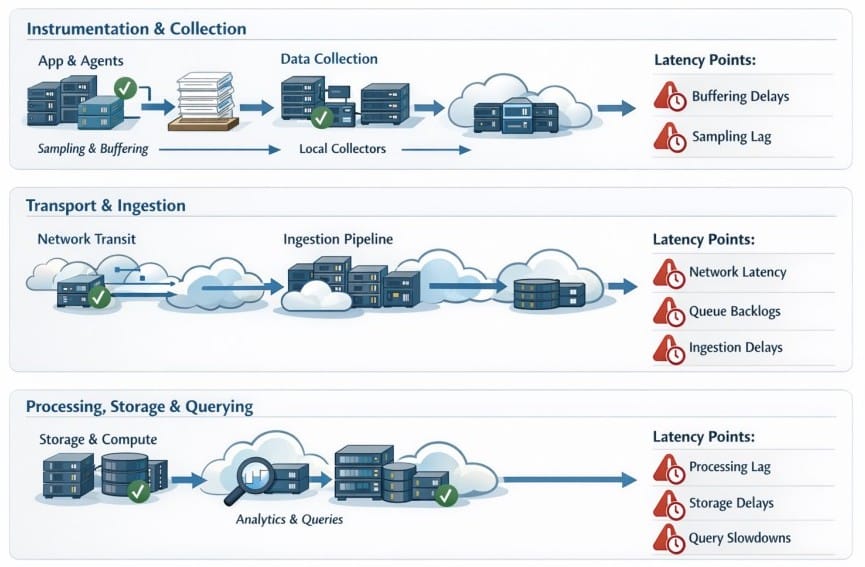

Where Latency Enters the Observability Pipeline

Observability latency is cumulative and enters at multiple stages. Each model concentrates latency in different stages.

Instrumentation and Collection

- In SaaS systems, agents help minimize network usage and avoid overwhelming shared ingestion services. Telemetry is buffered and exported in batches. Sampling decisions are applied early to control downstream cost. This keeps ingestion stable but may increase the delay before data is visible.

- In BYOC and self-hosted systems, collectors typically run within the same cluster or network as the application. Buffering still occurs, but thresholds are tuned against local capacity. When collectors slow down, teams see it immediately through resource pressure rather than delayed data.

Transport and Ingestion

- SaaS models require telemetry to cross public networks and regions before entering multi-tenant ingestion pipelines. Under load, queues grow, retries multiply, and backpressure introduces variable delay.

- BYOC shortens this path by placing ingestion and processing inside the customer’s cloud account. Network hops remain, but contention is isolated.

- Self-hosted systems minimize transport latency further by keeping ingestion within private networks. Failures still occur, but they reflect known capacity limits rather than shared infrastructure.

Processing, Storage, and Querying

- SaaS platforms optimize for fairness and cost across tenants. Processing and queries run on shared compute, so contention during incidents makes latency inconsistent.

- BYOC and self-hosted models use dedicated compute. When queries slow down, the cause is visible in CPU, memory, or I/O pressure. Slowness is predictable and tunable.

- Predictable degradation is easier to operate under stress than variable responsiveness.

Query Responsiveness During Incidents

Incidents invert normal usage patterns. Common effects are:

- Retries and failures cause the ingestion volume to spike

- Engineers run broader, more frequent queries

- Dashboards refresh aggressively

How Query Behavior Diverges Across SaaS, BYOC, and Self-Hosted Models

In SaaS observability, ingestion pipelines saturate while query engines compete for shared resources. Dashboards lag, ad-hoc queries time out, and partial results appear. Engineers wait longer for answers while systems continue to degrade.

In BYOC and self-hosted models, ingestion pressure and query pressure contend within a fixed resource envelope. Systems slow down earlier, but more deterministically. Engineers see saturation as it happens and can choose how to trade freshness, fidelity, and scope without waiting for vendor-level throttling. Latency here directly affects decision quality alongside user experience.

Why Network Distance, Data Movement, and Compute Control Matter Under Stress

Under stress, physical limits start to matter more than design abstractions. Network distance adds unavoidable delay, cross-region data movement increases coordination overhead, and shared compute makes it harder to prioritize critical queries.

- SaaS observability abstracts these factors away, which works well in a steady state. During incidents, that abstraction hides the causes of latency. Engineers experience delays without being able to explain or influence them.

- BYOC shifts those limits into the customer’s cloud environment. Data locality improves, and latency correlates more closely with known infrastructure behavior.

- Self-hosted observability maximizes locality and control. Latency reflects physical constraints rather than vendor-level scheduling or multi-tenant contention.

Observability latency, therefore, reflects architectural choices about where observability runs and who owns its failure modes.

What Breaks First When Observability Is Under Stress

Observability systems are usually evaluated in a steady state. Dashboards load, queries return results, and costs appear predictable. Stress changes the rules. Traffic surges, partial failures, and cascading retries push observability pipelines into conditions they were not optimized for. What breaks first is rarely the part that teams expect.

This section reflects common patterns seen during real incidents, where architectural assumptions are tested under pressure rather than in design documents.

Cost spikes during traffic surges or incidents

Incidents increase telemetry volume. Error paths generate more logs. Retries multiply traces. Metrics cardinality rises as new dimensions appear. These effects compound quickly.

What teams experience:

- Ingestion volume grows faster than application traffic

- Usage-based pricing rises unexpectedly

- Short-lived incidents leave long-lived cost impact

The bigger issue is not the spike itself but the lack of control during it. Cost controls that work in a steady state often lag behind incident dynamics. This turns observability into an unplanned expense precisely when teams can least afford distractions.

Query latency when engineers need fast answers

Under stress, observability usage changes. Engineers want to quickly move from dashboards to exploratory queries. They widen time ranges, join signals, and refresh frequently. At the same time:

- Ingestion pipelines are saturated

- Processing queues grow

- Query engines compete for shared resources

The result is slower, less predictable queries. Dashboards lag. Ad-hoc analysis times out. Engineers wait longer for answers while systems continue to degrade. Latency here directly slows incident response and increases the likelihood of incorrect mitigation.

Sampling and retention trade-offs made under pressure

When observability systems approach their limits, teams are forced to make decisions quickly, such as:

- Sampling more aggressively (keeping a smaller share of traces) to control volume

- Dropping high-cardinality dimensions

- Shortening retention windows temporarily

These decisions are rarely made with full context. Data that might explain the root cause is often discarded first, because it is also the most expensive. Once dropped, that visibility cannot be recovered, even after the incident stabilizes.
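One way to reduce the risk of discarding root-cause data is to decide the sampling rule before the incident: always keep error traces, keep a stable fraction of the rest, and count what is dropped. The sketch below is an illustration under those assumptions. The record fields (`trace_id`, `is_error`, `service`) and function names are hypothetical, and the hashing scheme is a simplified stand-in for a production sampler.

```python
import hashlib
from collections import Counter


def keep_trace(trace_id: str, is_error: bool, rate: float) -> bool:
    """Always keep error traces; keep a stable fraction of the rest."""
    if is_error:
        return True
    # Hash the trace ID into [0, 1) so the same trace gets the same decision everywhere.
    bucket = int(hashlib.sha256(trace_id.encode("utf-8")).hexdigest()[:8], 16) / 2**32
    return bucket < rate


def sample(traces: list, rate: float) -> tuple:
    """Return (kept traces, counter of dropped traces per service)."""
    kept, dropped = [], Counter()
    for t in traces:
        if keep_trace(t["trace_id"], t["is_error"], rate):
            kept.append(t)
        else:
            dropped[t["service"]] += 1
    return kept, dropped
```

Even with the rate turned down to zero during an incident, error traces survive, and the per-service drop counts record what was lost and where.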

Loss of confidence in observability data during outages

Teams stop relying on observability if they see gaps in traces, delays or inconsistencies in metrics, or queries giving partial or conflicting results. They may instead start relying on their intuition more or consider direct system intervention. Here, their decision-making becomes reactive.

When systems recover, teams may question whether observability data can be trusted under stress. Stress reveals the true role of observability: it is a system that must remain reliable, predictable, and trustworthy when everything else is failing.

Control: Ownership of Data, Retention, and Decisions

Control is where observability stops being a tooling discussion and becomes an operating model decision. It determines who can change behavior under pressure, who can explain what happened later, and whether observability scales safely as systems and teams grow.

Cost and latency are felt immediately. Control is tested over time. That matters because most organizations still struggle to govern cloud usage effectively. Flexera’s 2025 State of the Cloud report notes that 84% of organizations say managing cloud spend remains a challenge, driven largely by limited visibility and control across teams.

Who Controls Sampling, Retention, and Resolution?

Sampling, retention, and resolution decide what survives the observability pipeline and what does not.

- Sampling control means defining policy, changing behavior safely during incidents, knowing what was dropped and why, and enforcing consistency across services and environments.

- Retention control goes beyond time windows. It can mean tiered retention for different signals and legal or security holds that override defaults. It can also mean provable deletion with audit trails and cost-aware retention without silent data loss.

- Resolution control determines span aggregation vs raw traces, attribute truncation and cardinality limits, and partial vs full log indexing.
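Tiered retention with holds that override defaults can be expressed as a small, testable rule. The retention periods and the `is_expired` function below are assumptions for illustration, not recommended values.

```python
from datetime import datetime, timedelta, timezone

# Assumed retention tiers per signal type; real values depend on policy.
RETENTION = {
    "traces": timedelta(days=7),
    "logs": timedelta(days=30),
    "metrics": timedelta(days=395),
}


def is_expired(signal: str, created_at: datetime, now: datetime, legal_hold: bool = False) -> bool:
    """True if a record is past its tier's retention and not under a hold."""
    if legal_hold:
        return False  # holds override default retention
    return now - created_at > RETENTION[signal]
```

Keeping the rule in code (or in versioned configuration) makes retention changes reviewable, which is part of what "provable" deletion requires.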

Many teams only discover the limits of these controls when cost pressure or incidents force rapid changes. A 2024 observability report highlights unpredictable observability costs and limited control as key blockers to adoption at scale.

Limits of SaaS Configurability

SaaS platforms often provide strong surface-level controls but limited system-level authority. Common limits include:

- Opaque data handling across managed pipelines

- Delayed enforcement of sampling or retention changes

- Packaged retention tiers with limited exceptions

- Deletion without end-to-end proof

- No ability to reserve or prioritize query compute during incidents

As cloud usage grows, these limits become more visible. Gartner forecasts public cloud end-user spending to reach $723.4B in 2025, increasing pressure on engineering teams to align cost, governance, and accountability.

Flexibility of Self-Hosted and BYOC Models

Self-hosted and BYOC models increase control by moving enforcement closer to the team’s environment. Typical areas of increased control include:

- In-region or in-VPC processing

- Storage layout and indexing strategy

- Encryption boundaries and key management

- Retention and deletion workflows with audits

- Query compute isolation during incidents

BYOC sits between SaaS and self-hosted:

- Stronger data residency and boundary control

- Shared operational responsibility

- Less abstraction, but not full autonomy

Why control becomes critical as systems and teams grow

As systems scale, telemetry volume grows non-linearly. Incidents span multiple teams, cost becomes a platform-level concern, and governance shifts from informal to provable. Similarly, as teams scale, inconsistent policies create blind spots. Local optimizations harm global visibility, and auditability becomes mandatory.

Grafana’s 2025 observability survey reports that observability spend averages around 17% of total infrastructure spend, making it too significant to manage without explicit control. At that point, control becomes a requirement for operating observability as long-lived infrastructure.

Governance: The Decision Point That Finalizes the Choice

Governance is where observability decisions stop being reversible. Engineering teams may evaluate cost, latency, and control, but governance determines whether a model is acceptable to run in production long term.

Once compliance, security, and audit requirements enter the picture, preference gives way to obligation. This is why many observability decisions are effectively finalized outside the engineering org.

Data Residency and Compliance Requirements

Data residency is no longer an edge case. It is a baseline requirement for many industries and geographies, for several reasons:

- Telemetry often contains sensitive metadata (user IDs, IPs, request payloads)

- Traces and logs are increasingly classified as regulated data

- Cross-border data transfer rules apply even to operational telemetry

Regulatory pressure is rising. According to Cisco’s 2024 Data Privacy Benchmark Study, 94% of organizations say customers would not buy from them if data were not properly protected, and 62% report that data localization requirements directly affect where they deploy systems.

SaaS observability can complicate residency guarantees when ingestion, storage, indexing, or support access crosses regions. BYOC and self-hosted models are often chosen specifically to align observability data paths with existing residency and sovereignty controls.

Security Reviews and Audit Ownership

Observability platforms are increasingly in scope for security audits because they sit across the entire system. Security teams now ask:

- Where is telemetry stored and replicated

- Who can access raw production data

- How access is logged and reviewed

- How long sensitive telemetry persists

- How deletion and redaction are enforced

Audit ownership matters. In SaaS models, parts of the audit trail live outside the organization’s direct control. In self-hosted and BYOC models, audit responsibility shifts inward, but so do visibility and proof.

PwC’s 2024 Global Digital Trust Insights survey reports that 75% of organizations expect to increase audit scope for operational and platform systems over the next two years, driven by regulatory and board-level scrutiny.

Access Control and Internal Governance Models

As organizations scale, observability access stops being binary. Common requirements include:

- Fine-grained RBAC across teams and services

- Separation of duties between operators, developers, and auditors

- Temporary elevated access during incidents

- Alignment with corporate identity and access systems
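The combination of role-based permissions and temporary elevation can be sketched as a grant with an optional expiry. The role names, permission strings, and the `Grant` and `is_allowed` definitions below are hypothetical and do not correspond to any specific platform's access model.

```python
from dataclasses import dataclass
from datetime import datetime, timezone
from typing import Optional


@dataclass
class Grant:
    role: str                               # illustrative role name
    expires_at: Optional[datetime] = None   # None means a standing grant


# Hypothetical mapping of roles to permissions.
ROLE_PERMISSIONS = {
    "viewer": {"read_dashboards"},
    "operator": {"read_dashboards", "query_raw_logs", "change_sampling"},
    "auditor": {"read_dashboards", "read_access_logs"},
}


def is_allowed(grants: list, permission: str, now: datetime) -> bool:
    """Check whether any unexpired grant carries the requested permission."""
    for g in grants:
        if g.expires_at is not None and now >= g.expires_at:
            continue  # temporary elevation has lapsed
        if permission in ROLE_PERMISSIONS.get(g.role, set()):
            return True
    return False
```

A developer with a standing `viewer` grant could receive a time-boxed `operator` grant during an incident, and the elevated permissions would lapse on their own once the expiry passes. The separate `auditor` role illustrates separation of duties: it can read access logs but cannot change sampling.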

In many SaaS models, access control is constrained by platform abstractions. Self-hosted and BYOC deployments more easily integrate with internal IAM, approval workflows, and governance tooling.

This matters because observability often becomes a shared system across engineering, SRE, security, and compliance. Governance failures here tend to be organizational, and not necessarily technical.

Why Governance Often Overrides Engineering Preference in Enterprise Decisions

Engineering teams may prefer speed, simplicity, or familiarity. Governance teams optimize for risk, accountability, and proof. In enterprise environments:

- Compliance failures carry legal and financial penalties

- Audit gaps delay certifications and contracts

- Unclear data ownership blocks customer approvals

- Security exceptions accumulate until they force redesign

This is why observability decisions frequently change as organizations grow. Instead of replacing engineering judgment, governance constrains it. And in observability, those constraints often determine whether SaaS, BYOC, or self-hosted models are viable long before feature comparisons matter.

Bring Your Own Cloud (BYOC): The Middle Ground Between SaaS and Self-Hosted

Teams now want stronger control and clearer governance than pure SaaS allows, but they do not always want to fully own and operate an observability stack end to end. BYOC sits in between, blending vendor-managed software with customer-owned infrastructure boundaries.

In a BYOC model, the observability platform is deployed inside the customer’s cloud account or VPC, while parts of the lifecycle may still be managed by the vendor. BYOC changes where trust is placed. Teams trust their own cloud controls, identity systems, and network policies more than a vendor’s shared environment.

How BYOC Differs from Pure SaaS (Data Location & Control)

The biggest difference between BYOC and SaaS is where data lives and how it moves. Compared to SaaS:

- Telemetry does not leave the customer’s cloud boundary

- Data residency is enforced by infrastructure placement, not policy promises

- Network paths are visible and auditable

- Integration with internal IAM, KMS, and networking is more direct

BYOC improves boundary control, but it does not automatically grant full transparency. Some processing logic, configuration abstractions, or operational decisions may still be handled by the vendor’s software layer.

How BYOC Differs from Fully Self-Hosted

BYOC also stops short of a fully self-hosted model. Compared to self-hosted:

- Vendors often manage software upgrades and compatibility

- Scaling behavior may be automated or guided by the platform

- Operational runbooks are shared rather than fully internal

- Deep system tuning may be constrained

Self-hosted observability maximizes authority but also responsibility. BYOC reduces some operational load, but teams still need to understand cost, capacity planning, and failure modes.

Why Do Teams Choose BYOC Observability?

- Data residency: BYOC helps teams meet data residency requirements without building or operating a fully-fledged observability platform. Observability runs inside their own cloud, so telemetry data stays in-region. This is useful for teams in regulated industries where data location matters.

- Security and compliance: BYOC fits more naturally into a company’s existing security models. Teams can reuse their cloud security controls and define audit boundaries. They can confidently answer questions about data location and access permissions. Security and compliance teams are often more comfortable approving BYOC systems than full SaaS platforms.

- Lower vendor lock-in: BYOC keeps data closer to the customer’s control. This means telemetry storage and formats are more accessible, and migration paths are clearer. Teams also don’t have to rely on proprietary ingestion endpoints, so the risk of vendor lock-in is lower.

- Managed service: Unlike open-source self-hosted setups that are self-managed (by customers), BYOC is a vendor-managed service. So, operational overhead is lower.

- Operational responsibility: BYOC redistributes responsibilities. Teams own cloud infrastructure costs, capacity planning, and coordination during infrastructure-level incidents. Vendors may assist with software and support, but accountability for the environment ultimately rests with the customer.

- Cost predictability: Not all BYOC models behave the same. Predictability varies based on how infrastructure usage is billed, how much control teams have over retention and sampling, and whether compute and storage scale tightly with usage. BYOC improves cost control only when these drivers are visible and manageable.

BYOC works best for teams that want stronger governance and clearer boundaries without fully operating an observability stack themselves. It is not a shortcut. It is a deliberate architectural trade-off that exchanges simplicity for control and clarity.

Operational Reality: Who Owns What?

Every model runs software, consumes infrastructure, fails in specific ways, and requires people to respond. The difference is not whether there is operational work, but who owns it, when it shows up, and how visible it is to the team. Understanding this upfront prevents mismatched expectations later.

Operational Responsibility in SaaS, BYOC, and Self-Hosted Models

SaaS: In SaaS models, the vendor typically owns the ingestion, storage, and query infrastructure, along with scaling, capacity management, upgrades, and overall platform reliability. From an infrastructure perspective, this removes the need for teams to provision or operate observability systems directly.

However, teams still retain responsibility for how the platform is used. Instrumentation quality, telemetry volume, and cardinality decisions remain fully in the customer’s control, as do the cost outcomes driven by those choices. Teams are also responsible for diagnosing issues.

BYOC: Here, operational responsibility is shared between the vendor and customer. The observability stack runs inside the customer’s cloud environment, but the vendor often manages parts of the software lifecycle, such as upgrades. Teams are responsible for maintaining cloud infrastructure reliability, capacity planning, networking, and controlling costs.

Since responsibilities are shared, incidents require coordination between the customer and the vendor. Teams gain more visibility and control than with pure SaaS, but they also take on more responsibility for ensuring that the environment behaves predictably under stress.

Self-hosted: Here, ownership is clear and end-to-end. Teams operate the infrastructure, manage the software, handle scaling and upgrades, and respond to failures internally. This increases operational responsibility, but it also increases clarity. Cost, latency, and reliability characteristics are fully visible, and failure modes can be reasoned about directly without depending on external systems or support paths.

“Zero-Ops” Observability Is a Myth

SaaS observability: “Zero-ops” in SaaS means that the vendor handles infrastructure operations. But this doesn’t mean the customers have absolute “zero” operational responsibility.

- Customers still need to tune telemetry pipelines

- Sampling and retention still need governance

- Cost spikes still demand attention

When data gaps appear during incidents, teams must explain them even if they did not control the platform internals. Teams manage fewer servers, but they still own the outcomes when visibility is degraded.

BYOC observability: Compared to full self-hosting, BYOC reduces some operational burden, but it’s not zero-ops. Customers manage infrastructure issues, capacity constraints, and cloud-level failures, often alongside vendor support.

Self-hosted observability: Here, all operational responsibility is direct, including maintenance, scaling, and failure recovery. There is no abstraction layer to hide complexity. This increases effort, but eliminates ambiguity. Teams understand exactly where responsibility lies, so they can plan for it accordingly.

Where Operational Effort Shifts

- SaaS observability: Operational effort shifts toward managing cost and usage. To stay within budget, teams spend time monitoring telemetry volume, explaining bills, and adjusting ingestion patterns. Incident response relies on vendor tooling, platform efficiency, and customer support responsiveness.

- BYOC observability: The effort shifts toward cloud capacity planning and boundary management. Although it gives teams better visibility into infrastructure, shared ownership can add friction during incidents.

- Self-hosted observability: The effort shifts toward site reliability engineering (SRE) and automation. Teams invest in scaling strategies, runbooks, and upgrade processes. Failures are fully owned but also fully diagnosable, with direct access to every layer of the system.

Team Maturity and Platform Ownership

- SaaS observability: Less mature teams may value faster onboarding, fewer infrastructure decisions, and reduced operational responsibilities. It’s useful for teams that need speed and are willing to accept trade-offs in control and cost predictability.

- BYOC observability: BYOC appeals to teams that are growing and need stronger governance without fully internalizing observability operations. It suits organizations transitioning observability from a team-level tool to shared infrastructure.

- Self-hosted observability: More mature teams tend to prioritize predictable behavior under load. They also want to see clear ownership boundaries and to be able to reason about failures end-to-end. As organizations scale, observability often becomes shared platform infrastructure, where implicit ownership becomes a liability.

Operational reality consistently favors models where responsibility is explicit, visible, and aligned with how teams already run production systems.

Where CubeAPM Fits in the Self-Hosted & BYOC Spectrum

The models discussed above are implemented by different platforms in different ways. CubeAPM is one example of how BYOC and self-hosted observability are designed in practice.

CubeAPM fits on the self-hosted and BYOC end of the observability spectrum. It is designed for teams that want observability to run inside their own cloud or infrastructure boundaries, instead of treating it as an external, vendor-operated service.

The positioning is architectural rather than feature-driven. CubeAPM assumes observability is part of the platform stack and should follow the same rules as other production systems.

Deployment Model: Self-Hosted and BYOC

CubeAPM is designed for environments where observability runs inside the team’s own cloud or infrastructure. Ingestion, storage, and query execution operate within defined network and data residency boundaries. Teams can clearly see how observability behaves under load and during incidents.

The model aligns naturally with organizations that already operate core infrastructure and want observability to live alongside it, not outside it.

Data Ownership and Control

CubeAPM is built for teams that want unambiguous ownership of their telemetry data. Observability data stays within the organization’s environment, access follows existing identity and security controls, and retention, deletion, and audit policies can be enforced using internal standards.

As systems grow and compliance requirements tighten, this clarity reduces uncertainty around who owns the data, how it is governed, and how decisions about telemetry are made.

Governance and Compliance Alignment

Since CubeAPM runs inside the organization’s infrastructure, observability inherits existing governance and compliance models. Teams can apply internal access controls and approval workflows and support audits with clearer system boundaries. It helps them align observability data handling with residency requirements.

Platform-First Mindset

CubeAPM is typically adopted by organizations that treat observability as long-lived platform infrastructure, instead of a short-term tooling choice. These teams prioritize:

- Architectural clarity over convenience

- Ownership over abstraction, and

- Consistency with how the rest of the platform is operated

In that context, observability is an integral part of the system itself, and CubeAPM fits naturally into that operating model. As with any self-hosted or BYOC approach, these benefits depend on having clear platform ownership and the ability to operate observability as production infrastructure.

Predictable Cost

With CubeAPM, teams can predict costs comfortably as systems evolve. It doesn’t confuse them with multiple pricing dimensions or with user-based or feature-based pricing, which can grow unexpectedly at scale.

CubeAPM’s ingestion-based pricing is a flat $0.15/GB. With a single pricing dimension, customers are less likely to face surprise cost spikes during incidents and can treat observability as a planned infrastructure expense.
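To make the arithmetic concrete, here is a minimal sketch of how a flat per-GB price translates into a monthly estimate. Only the $0.15/GB figure comes from this article. The daily volumes, the 30-day month, and the three-day incident at double volume are hypothetical inputs, and the function name is illustrative rather than part of any CubeAPM tooling.

```python
PRICE_PER_GB_USD = 0.15  # ingestion price quoted in this article


def ingestion_cost(gb_per_day: float, days: int = 30,
                   price_per_gb: float = PRICE_PER_GB_USD) -> float:
    """Estimate ingestion cost in USD, assuming a single flat per-GB price."""
    if gb_per_day < 0 or days < 0:
        raise ValueError("gb_per_day and days must be non-negative")
    return round(gb_per_day * days * price_per_gb, 2)


# Hypothetical steady state: 500 GB/day for a 30-day month.
baseline = ingestion_cost(500)

# Hypothetical incident month: 27 normal days plus 3 days at double volume.
incident_month = ingestion_cost(500, days=27) + ingestion_cost(1000, days=3)

print(f"baseline: ${baseline:,.2f}")
print(f"incident month: ${incident_month:,.2f}")
```

Because the bill is a single multiplication, the effect of a volume spike can be estimated before it happens. Infrastructure costs for running the stack are not included in this sketch.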

When SaaS Observability Makes Sense

In many environments, SaaS observability is a rational and well-aligned choice. The key is understanding the conditions under which its trade-offs work in your favor, rather than against you.

From an architectural point of view, SaaS-based observability performs best when teams prioritize operational simplicity and speed more than long-term control or customization. Here are some of the cases:

Smaller Teams

SaaS observability fits well when teams are small and cross-functional and need faster operations. It suits teams where infrastructure ownership is still evolving, and reliability processes are informal or emergent.

In these environments, outsourcing observability operations reduces stress. Teams can instrument quickly, gain visibility, and stay focused on product delivery, instead of managing the system by themselves.

Limited Compliance Requirements

SaaS observability is often viable when regulatory and governance requirements are minimal:

- No regulated or sensitive data is handled

- Data residency constraints are weak

- Audit and deletion needs are straightforward

- Security reviews are lightweight

Under these conditions, vendor-managed environments can satisfy compliance expectations without introducing unnecessary complexity.

Faster Time-to-Value

SaaS models excel at minimizing the gap between instrumentation and insight. Fast onboarding, prebuilt dashboards, managed upgrades, and ready-made integrations let teams see value early.

Lower-Cardinality Workloads

SaaS observability behaves most predictably when telemetry characteristics are stable. It works best when:

- Request patterns are consistent

- Cardinality is naturally bounded

- Traffic growth is gradual

- The telemetry volume scales roughly in line with usage

Usage-based pricing is justifiable in these cases. Observability costs tend to track business growth without sudden spikes or complex governance concerns.

When BYOC or Self-Hosted Observability Becomes the Better Choice

BYOC and self-hosted observability models tend to emerge from changes in scale, risk, and organizational responsibility. As systems grow and observability becomes a shared platform concern, the assumptions that make SaaS attractive early on often stop holding.

From an architectural standpoint, these models become compelling when predictability, control, and ownership matter more than convenience.

Large or Fast-Growing Systems

Growth changes the shape of observability problems. In large or rapidly expanding systems:

- The telemetry volume grows non-linearly as services, dependencies, and dimensions increase

- The incident blast radius widens across teams and components

- Steady-state behavior becomes less representative of peak conditions

At this scale, observability systems must handle sustained high volume and sudden spikes without degrading visibility. BYOC and self-hosted models provide more leverage to shape ingestion, storage, and query behavior in line with system growth.
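One common source of this non-linear growth is metric label cardinality. The sketch below is illustrative only: the label names and counts are hypothetical, and it computes an upper bound that assumes every combination of label values actually occurs.

```python
from math import prod


def max_series(label_cardinalities: dict[str, int]) -> int:
    """Upper bound on distinct time series for one metric.

    Each label multiplies the number of possible series, so the bound is
    the product of the distinct-value counts across all labels.
    """
    return prod(label_cardinalities.values())


# Hypothetical starting point for a single request-latency metric.
before = {"service": 20, "endpoint": 15, "status_code": 5}

# Later: twice as many services and one new dimension (region).
after = {**before, "service": 40, "region": 3}

print(max_series(before))
print(max_series(after))
print(max_series(after) / max_series(before))
```

In this example, doubling the number of services and adding one three-valued label raises the series bound sixfold, which is why steady-state volume can be a poor predictor of volume after growth.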

Cost Predictability

Organizations that value cost predictability typically need:

- Observability cost that they can easily forecast

- Controls that respond immediately during incidents

- Clear attribution of cost drivers to teams or services

BYOC and self-hosted models expose cost mechanics more directly. Teams can customize retention, sampling, and storage strategies that align better with their financial constraints.
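As a rough illustration of how retention and sampling drive storage, the following sketch estimates retained data volume. All numbers are hypothetical, and the model deliberately ignores compression, replication, and index overhead, which vary by storage backend.

```python
def retained_gb(ingest_gb_per_day: float, sample_rate: float,
                retention_days: int) -> float:
    """Approximate raw data held at steady state.

    sample_rate is the fraction of telemetry kept (1.0 means keep everything).
    """
    if not 0.0 <= sample_rate <= 1.0:
        raise ValueError("sample_rate must be between 0 and 1")
    if ingest_gb_per_day < 0 or retention_days < 0:
        raise ValueError("volume and retention must be non-negative")
    return ingest_gb_per_day * sample_rate * retention_days


# Hypothetical trace pipeline producing 200 GB/day before sampling.
keep_everything = retained_gb(200, sample_rate=1.0, retention_days=30)
tuned = retained_gb(200, sample_rate=0.25, retention_days=15)

print(keep_everything, tuned)
```

Running the same calculation per team or per service is one simple way to attribute cost drivers, provided telemetry is tagged with an owner.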

Strong Data Control and Governance Needs

BYOC and self-hosted observability are preferable when:

- Observability data falls within security or regulatory scope

- Teams need to enforce data residency

- Teams need to prove auditability and deletion

- Access controls must align with internal IAM and approval workflows

These models allow observability to inherit existing governance frameworks.

Ownership Mandates

Platform teams are typically responsible for:

- Defining global observability standards

- Enforcing consistent policies across teams

- Balancing reliability, cost, and governance

- Owning failures and trade-offs explicitly

BYOC and self-hosted models define responsibilities clearly. Hence, they align better with these requirements. When observability becomes part of the platform rather than a supporting service, BYOC and self-hosted models stop being “advanced options.” They become the natural architectural choice.

SaaS vs BYOC vs Self-Hosted: A Practical Decision Matrix

This comparison is meant to surface behavioral differences, not rank options. Each model optimizes for a different set of constraints, and those differences become most visible under scale, stress, and governance pressure.

Cost Behavior

- SaaS observability typically ties cost to usage signals, such as ingestion volume, cardinality, and query behavior. This can be efficient early on, when workloads are small and predictable, but it becomes harder to forecast as systems grow and telemetry patterns diversify.

- In BYOC, infrastructure, storage, and data movement are more directly observable. But vendor pricing still applies at the software layer. This usually improves predictability, but outcomes vary depending on how transparent and configurable the BYOC implementation is.

- Self-hosted observability maps cost directly to infrastructure and operational choices. When you plan capacity, retention, and sampling intentionally, it’s easier to predict spending.

Latency Under Stress

- In SaaS models, observability latency depends on shared ingestion and query infrastructure. During incidents, multi-tenant contention and shared compute can increase query latency precisely when engineers need answers the fastest.

- BYOC keeps telemetry inside the customer’s cloud environment to reduce network distance and improve data localization. This offers more consistent performance under load.

- Self-hosted models allow telemetry processing and query execution to be colocated with production workloads. This gives teams full control over compute prioritization and isolation during incidents. So, latency behavior is more predictable, but at the cost of managing the infrastructure.

Control and Customization

- SaaS platforms offer strong configuration options but limited visibility into end-to-end data handling. Since they may abstract away sampling, aggregation, and indexing behavior, teams can find it difficult to enforce policy consistently across services.

- BYOC lets you control your data boundaries and infrastructure behavior. So, teams know where and how telemetry is processed. However, deep system-level customization depends on how much the vendor exposes and how responsibilities are shared.

- Self-hosted observability provides full control over sampling, retention, resolution, storage layout, and query behavior. Here, you get more customization options, but it depends on your team’s expertise and operational maturity.

Governance and Compliance

- SaaS observability relies on vendor certifications and policy guarantees. It enforces data residency logically and not physically. Audit or deletion proofs may require coordination with the vendor.

- BYOC enforces data residency through infrastructure placement to strengthen governance and align observability with existing security and audit models. It improves compliance outcomes, but still depends on how much transparency and control the platform provides.

- Self-hosted observability allows enforcing governance requirements directly through infrastructure, access controls, and internal processes. It allows full auditability and provable deletion, provided the organization’s governance practices are mature.

Operational Ownership

- In SaaS models, vendors own infrastructure reliability and platform operations. Teams handle instrumentation quality, telemetry volume, and cost outcomes. This model reduces operational effort, but accountability for results remains with the team.

- BYOC introduces shared ownership. Teams own cloud reliability, capacity, and cost, while vendors often manage the software lifecycle. Both sides may need to coordinate on incident response and troubleshooting.

- Self-hosted observability places full operational responsibility on the organization. This maximizes ownership clarity, diagnosability, and alignment with teams that already operate the platform, but it also increases operational burden.

Conclusion

SaaS, BYOC, and self-hosted observability are all useful for different scenarios. Their trade-offs usually become visible as systems and teams grow and governance requirements harden.

The key difference between the three is ownership. Where observability infrastructure runs and who operates it determine how predictable observability remains during incidents, audits, and changes.

Teams that evaluate observability as architecture rather than tooling tend to avoid painful migrations later. Teams should also revisit this decision early, with a clear understanding of scale, risk tolerance, and operational ownership, so that observability can evolve smoothly alongside the platform.

Disclaimer: The information in this article reflects the latest details available at the time of publication and may change as technologies and products evolve.

FAQs

1. Is self-hosted observability cheaper at scale?

It can be, but not automatically. Self-hosted observability often shifts costs from variable usage-based pricing to infrastructure and operational expenses. At larger scales, this can lead to more predictable spending, especially when data volumes, retention needs, and query patterns are stable. However, teams must account for storage growth, reliability engineering, and ongoing maintenance when evaluating total cost.

2. How does BYOC compare to fully SaaS observability?

BYOC places observability infrastructure inside the customer’s cloud environment while keeping parts of the stack vendor-managed. This improves data locality, residency, and control compared to pure SaaS, but it does not eliminate operational responsibility. Costs, performance, and control vary widely depending on how much of the data plane and control plane remains vendor-owned.

3. Does self-hosted observability increase operational risk?

Self-hosting increases responsibility, not necessarily risk. Teams gain more control over failure modes, upgrades, and data handling, but they also own availability and recovery. For organizations with mature platform teams, this can reduce long-term risk. For smaller teams without dedicated ownership, it can increase operational burden if not carefully planned.

4. How does OpenTelemetry affect this decision?

OpenTelemetry reduces lock-in by standardizing instrumentation and data collection, making it easier to change backends or deployment models over time. This flexibility lowers the cost of moving between SaaS, BYOC, and self-hosted approaches and makes architecture decisions less irreversible. It does not remove trade-offs, but it makes them easier to revisit.
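A minimal sketch of what that portability can look like in practice: with OTLP-based instrumentation, switching deployment models can come down to pointing the exporter at a different endpoint. The environment variable names below are the standard OpenTelemetry ones. The endpoint URLs, the service name, and the helper function are hypothetical placeholders.

```python
# Hypothetical OTLP endpoints for each deployment model (placeholders only).
BACKENDS = {
    "saas": "https://otlp.vendor.example.com",
    "byoc": "https://otel.observability.example.internal",
    "self_hosted": "http://otel-collector.monitoring.svc:4318",
}


def otlp_env(model: str, service_name: str) -> dict[str, str]:
    """Build the exporter environment for a service under a given model."""
    if model not in BACKENDS:
        raise ValueError(f"unknown deployment model: {model}")
    return {
        "OTEL_SERVICE_NAME": service_name,
        "OTEL_EXPORTER_OTLP_ENDPOINT": BACKENDS[model],
        "OTEL_EXPORTER_OTLP_PROTOCOL": "http/protobuf",
    }


saas_env = otlp_env("saas", "checkout")
self_hosted_env = otlp_env("self_hosted", "checkout")

# Only the endpoint differs; the instrumentation in the service is unchanged.
changed = {k for k in saas_env if saas_env[k] != self_hosted_env[k]}
print(changed)
```

Real migrations usually involve more than an endpoint change, such as authentication headers, dashboards, and alert rules, so this shows only the instrumentation side of the move.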

5. Can teams run hybrid SaaS plus BYOC or self-hosted observability?

Yes. Many teams operate hybrid setups, often during migrations or when different workloads have different requirements. Hybrid models can balance convenience and control, but they also introduce complexity in data consistency, tooling, and operational ownership. Most teams treat hybrid approaches as transitional rather than permanent.