OpenTelemetry has quickly become a foundational observability standard. Its rise is not accidental. Backed by the Cloud Native Computing Foundation (CNCF), the same organization behind Kubernetes, it has seen rapid adoption as teams look for a common, vendor-agnostic way to instrument distributed systems. CNCF data tracks a fivefold increase in adoption, from 4% in 2020 to 20% in 2022.

OpenTelemetry is commonly adopted to standardize instrumentation, improve service-to-service visibility, and preserve flexibility as architectures and vendors change. Although it standardizes how telemetry is generated and transported, it does not address the downstream problems of ingestion volume, cost governance, and long-term data lifecycle management.

Understanding the value that OpenTelemetry provides, where it falls short, and how to apply it successfully is critical. The goal here is practical clarity on what it takes to achieve observability that holds up in real systems.

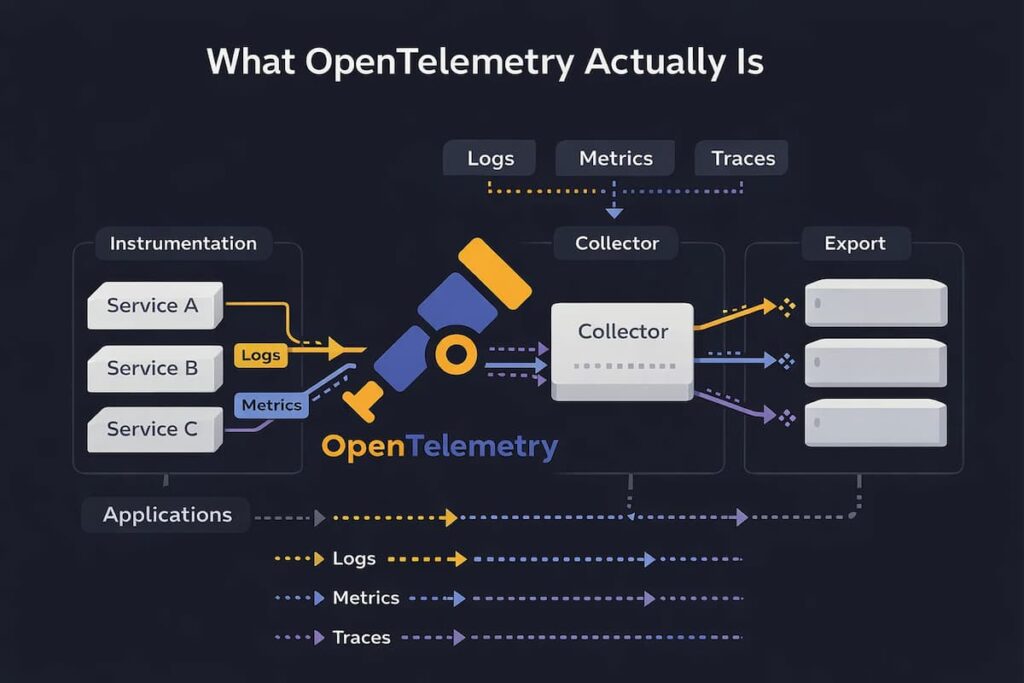

What OpenTelemetry Actually Is

OpenTelemetry (often shortened to OTel) provides a unified, vendor-agnostic standard for generating, collecting, processing, and exporting telemetry data such as logs, metrics, and traces from cloud-native systems.

Instead of each vendor defining its own agents, formats, and conventions, OpenTelemetry standardizes how telemetry is produced and moved through the system. This makes instrumentation portable across languages, platforms, and backends, even as architectures and vendors change.

The key to using OpenTelemetry well is understanding its boundaries.

- A standard, not a product: It gives teams APIs, SDKs, and shared conventions to generate telemetry, but it does not ship dashboards, alerting systems, or long-term storage. Those pieces are intentionally left to downstream platforms.

- What it standardizes: The mechanics of instrumentation. This includes how APIs and SDKs behave, how context is propagated across services, the semantic conventions used to describe telemetry, and OTLP as the protocol for moving that data through the pipeline.

- Clear boundaries: OpenTelemetry stops once data has been collected and exported. Querying, alerting, visualization, retention, and cost control are responsibilities of observability platforms.

- Infrastructure, not monitoring: OpenTelemetry delivers a solid telemetry data pipeline. It moves data reliably, but it doesn’t interpret that data for you.

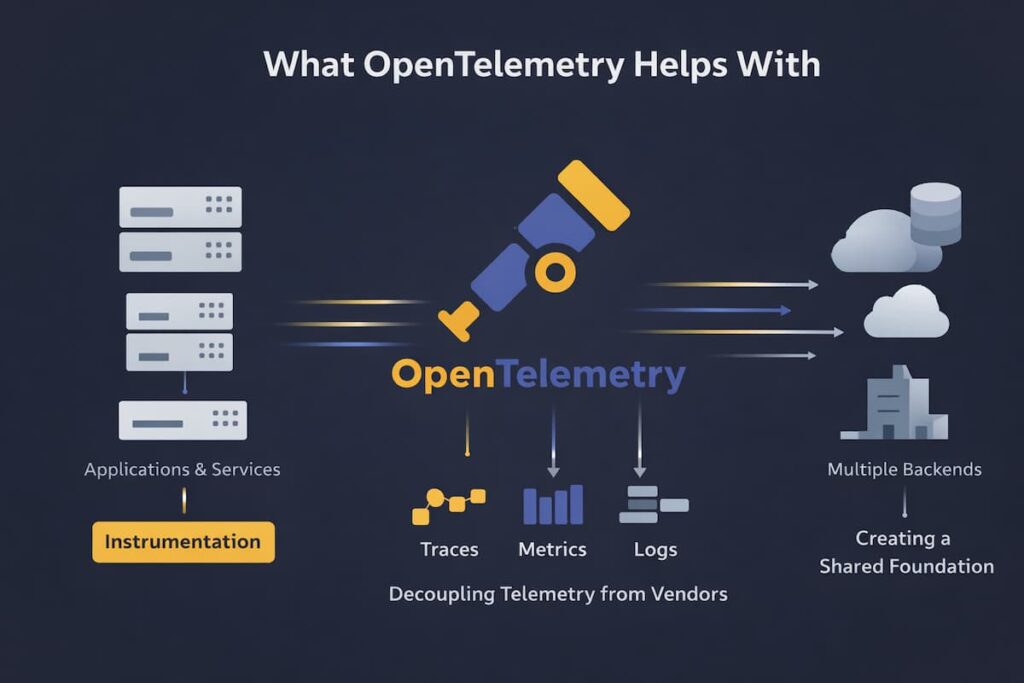

What OpenTelemetry Helps With

OpenTelemetry helps teams build a clean, flexible foundation for telemetry in distributed systems. It doesn’t give you answers by itself, but it makes sure the right data is available when you go looking for them.

The key areas where OpenTelemetry makes a difference include:

- Unified instrumentation across your teams: Services emit telemetry in a consistent way, even when teams use different languages and frameworks.

- Decoupling telemetry from vendors: Collection is no longer tied to a single observability backend or proprietary SDK.

- Creating a shared foundation: Distributed systems finally produce data that fits together instead of fragmenting across tools.

The major benefits include:

Reducing Vendor Lock-In

Vendor lock-in is a long-term risk in observability because it ties instrumentation, data formats, and workflows to a single provider. OpenTelemetry reduces this risk by standardizing how telemetry is generated and exported.

OTel enables teams to:

- Instrument once, export anywhere: The same instrumentation can send data to multiple backends at the same time.

- Vendor portability: Because telemetry generation is decoupled from the storage backend, engineering organizations can migrate between observability providers without refactoring codebases or re-instrumenting entire service clusters.

- Architectural flexibility: Observability strategies can evolve as systems and budgets grow.

For many teams, this flexibility alone justifies the move to OpenTelemetry.
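
The "instrument once, export anywhere" idea can be sketched in a few lines. This is a simplified illustration and not the OpenTelemetry SDK: `Span`, `InMemoryExporter`, and `FanOutExporter` are hypothetical names, and the two backends are stand-ins that only record what they receive.

```python
from dataclasses import dataclass, field
from typing import Protocol


@dataclass(frozen=True)
class Span:
    """Hypothetical, simplified span record (not the OpenTelemetry SDK type)."""
    name: str
    trace_id: str
    duration_ms: float


class Exporter(Protocol):
    def export(self, spans: list[Span]) -> None: ...


@dataclass
class InMemoryExporter:
    """Stand-in for a backend-specific exporter; it only records what it receives."""
    backend: str
    received: list[Span] = field(default_factory=list)

    def export(self, spans: list[Span]) -> None:
        self.received.extend(spans)


@dataclass
class FanOutExporter:
    """Sends the same batch to every configured exporter."""
    exporters: list[Exporter]

    def export(self, spans: list[Span]) -> None:
        for exporter in self.exporters:
            exporter.export(spans)


# The instrumented code never names a vendor; only this wiring does.
current = InMemoryExporter("current-backend")
candidate = InMemoryExporter("candidate-backend")
pipeline = FanOutExporter([current, candidate])
pipeline.export([Span("GET /orders", "a" * 32, 12.5)])
```

Swapping or adding a backend changes the wiring at the bottom, not the code that produces spans, which is the property that makes migration cheaper.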

Improving Distributed Tracing Coverage

Distributed tracing often breaks down when different SDKs and propagation methods collide. OpenTelemetry brings consistency to this chaos.

Request paths stop vanishing when trace context flows properly.

- Consistent context propagation: Trace context is propagated cleanly between microservices.

- Clearer request flows: Engineers can follow a request from its entry point to its downstream dependencies.

- Fewer broken traces: Standardization reduces issues caused by mixed or proprietary SDKs.

Good tracing still requires discipline, but OpenTelemetry makes it realistic.
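
To make context propagation concrete, here is a minimal sketch of handling the W3C Trace Context `traceparent` header, whose layout is `version-traceid-parentid-flags`. The helper names `parse_traceparent` and `child_traceparent` are hypothetical; in practice the OpenTelemetry propagators do this work for you.

```python
import re
import secrets

# W3C Trace Context "traceparent" layout: version-traceid-parentid-flags
_TRACEPARENT = re.compile(r"^([0-9a-f]{2})-([0-9a-f]{32})-([0-9a-f]{16})-([0-9a-f]{2})$")


def parse_traceparent(header: str):
    """Return (trace_id, parent_span_id, sampled), or None if the header is invalid."""
    match = _TRACEPARENT.match(header.strip())
    if match is None:
        return None
    version, trace_id, parent_id, flags = match.groups()
    # Version "ff" and all-zero IDs are invalid.
    if version == "ff" or set(trace_id) == {"0"} or set(parent_id) == {"0"}:
        return None
    sampled = bool(int(flags, 16) & 0x01)
    return trace_id, parent_id, sampled


def child_traceparent(incoming: str) -> str:
    """Build the header for an outgoing call: same trace ID, new span ID."""
    parsed = parse_traceparent(incoming)
    if parsed is None:
        # Missing or malformed context: a new trace starts here, which is
        # how a "broken trace" shows up in a backend.
        trace_id, sampled = secrets.token_hex(16), True
    else:
        trace_id, _, sampled = parsed
    span_id = secrets.token_hex(8)
    return f"00-{trace_id}-{span_id}-{'01' if sampled else '00'}"
```

If any service in the path drops the header or uses a different format, the fallback branch runs and the request splits into unrelated traces.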

Accelerating Instrumentation With Auto-Instrumentation

Getting started with observability is usually slow. Auto-instrumentation removes much of that initial friction.

It gives teams fast, usable visibility without a large upfront effort.

- Fast initial setup: Common frameworks and libraries are instrumented automatically.

- Less manual work: Teams receive baseline traces and metrics with few code modifications.

- Manual spans still matter: Custom spans are still required in business logic, asynchronous workflows, and domain events.

Auto-instrumentation helps you start, not finish.

Centralizing Telemetry Processing With the OpenTelemetry Collector

As systems grow, managing telemetry inside applications becomes a challenge. The OpenTelemetry Collector acts as an observability gateway between services and backends.

- Enrichment: Add consistent service, environment, and infrastructure metadata.

- Filtering and routing: Drop low-value data and route critical signals differently.

- Pipeline hygiene: Keep instrumentation simple and enforce rules centrally.

When used well, the Collector acts as an efficient safety valve for growing systems.
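
The enrich, filter, and route steps above can be sketched as plain functions. This is an illustration of the logic only, not Collector configuration: the record shape, the metadata values, and the `/healthz` and `DEBUG` drop rules are all assumptions made for the example.

```python
# Hypothetical record shape: a dict with "attributes" plus optional
# "severity" (logs) or "status" (spans).
STATIC_METADATA = {"deployment.environment": "prod", "cloud.region": "eu-west-1"}


def enrich(record: dict) -> dict:
    """Add shared metadata without overwriting what the service already set."""
    attributes = {**STATIC_METADATA, **record.get("attributes", {})}
    return {**record, "attributes": attributes}


def keep(record: dict) -> bool:
    """Drop low-value data; here, health-check spans and DEBUG logs."""
    if record["attributes"].get("http.route") == "/healthz":
        return False
    return record.get("severity") != "DEBUG"


def route(record: dict) -> str:
    """Send errors to a priority pipeline, everything else to the default one."""
    is_error = record.get("severity") == "ERROR" or record.get("status") == "ERROR"
    return "priority" if is_error else "default"


def process(records: list[dict]) -> dict[str, list[dict]]:
    """Apply enrich -> filter -> route, in that order, to a batch of records."""
    routed: dict[str, list[dict]] = {"priority": [], "default": []}
    for record in map(enrich, records):
        if keep(record):
            routed[route(record)].append(record)
    return routed
```

Keeping these rules in one central place means services emit telemetry without knowing which data is dropped or prioritized.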

Enabling Cross-Signal Correlation

Observability works best when signals support each other. OpenTelemetry lays the groundwork for this connection.

Consistency across signals makes investigations faster and less frustrating.

- Shared resource attributes: Logs, metrics, and traces can carry the same resource attributes, such as service name and environment, so the signals reinforce one another instead of simply accumulating.

- Service-level analysis: Signals can be grouped and compared more easily.

- Stronger investigations: With a unified foundation, root cause analysis shifts from a manual correlation exercise to a connected, data-driven workflow that feels cohesive rather than disjointed.

- Low resource footprint: The OpenTelemetry Collector is designed to be lightweight and efficient. When configured correctly, it adds minimal CPU and memory overhead, making it suitable for centralized gateway deployments.

Correlation isn’t automatic, but the foundation is finally there.
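
As a rough illustration of what a shared foundation enables, the sketch below joins failed spans to the logs emitted in the same trace. The record fields are simplified and hypothetical; a real backend performs this join at query time over far richer data.

```python
from collections import defaultdict

# Hypothetical, simplified records. Real signals carry many more fields.
spans = [
    {"trace_id": "t1", "service.name": "checkout", "name": "POST /pay", "status": "ERROR"},
    {"trace_id": "t2", "service.name": "checkout", "name": "GET /cart", "status": "OK"},
]
logs = [
    {"trace_id": "t1", "service.name": "checkout", "body": "card declined: upstream timeout"},
    {"trace_id": None, "service.name": "checkout", "body": "cache warmed"},
]


def logs_by_trace(log_records):
    """Index logs by trace ID; logs without one cannot be tied to a request."""
    index = defaultdict(list)
    for record in log_records:
        if record.get("trace_id"):
            index[record["trace_id"]].append(record)
    return index


def explain_failures(span_records, log_records):
    """Pair each failed span with the logs emitted during the same trace."""
    index = logs_by_trace(log_records)
    return {
        span["name"]: [log["body"] for log in index.get(span["trace_id"], [])]
        for span in span_records
        if span["status"] == "ERROR"
    }
```

The log line without a trace ID is silently left out, which is why correlation depends on instrumentation discipline and not only on the standard.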

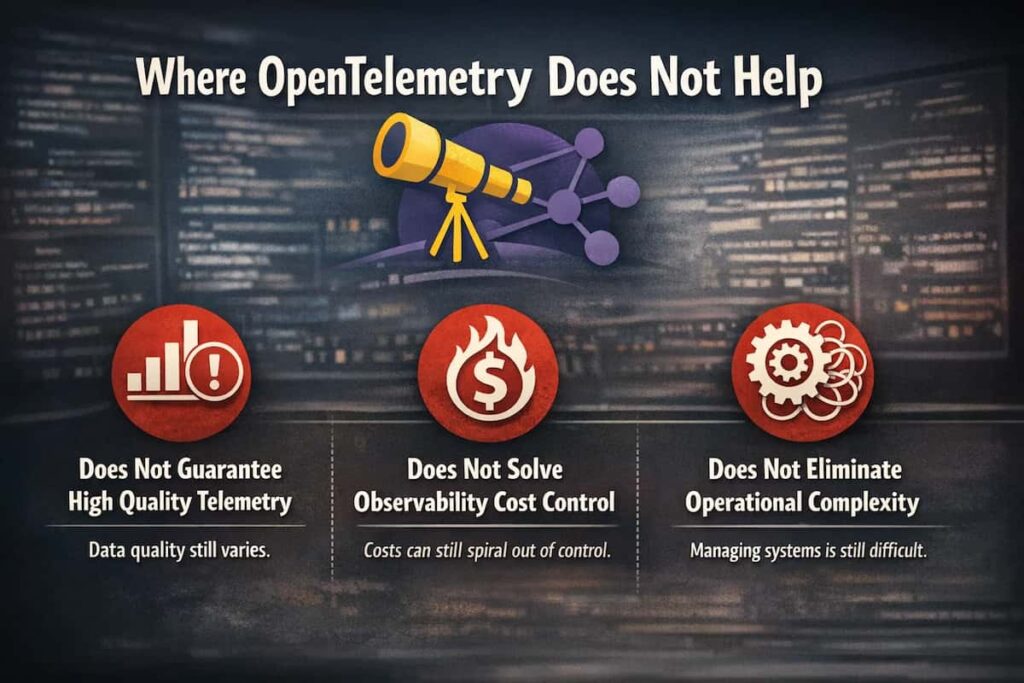

Where OpenTelemetry Does Not Help

OpenTelemetry excels at making data collection consistent, so the same instrumentation works across backends. It does not solve what happens after that. This distinction often causes confusion.

It Does Not Guarantee High-Quality Telemetry

Standardization does not automatically mean quality.

OpenTelemetry provides a common mechanism for collecting telemetry but does not dictate the quality of that telemetry or its utilization.

- Poor span names still hurt: Bad naming, missing attributes, or inconsistent conventions make traces hard to understand.

- Unclear service boundaries: When the architecture of the system is unclear, the telemetry will reflect that.

- No enforced governance: Without agreed attribute standards and disciplined instrumentation, teams will produce siloed, non-standardized data that undermines the goal of a unified observability stack.

Good telemetry is a people and process problem and not something tooling can fix.

It Does Not Solve Observability Cost Control

More visibility often comes with a hidden price.

OpenTelemetry makes it easy to collect more data, but it does not decide what data is worth keeping.

- The Lifecycle Management Gap: It is a common misconception that the framework handles the “afterlife” of data. OpenTelemetry remains agnostic toward data lifecycles; it is purely a collection and transport mechanism that offers no native functionality for retention policies, tiered storage, or automated aging-out of stale signals.

- Policies are required: Cost control comes from governance decisions such as what to collect, what to filter, and how backends handle the data.

Without clear rules, observability costs tend to grow faster than expected.
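
A back-of-the-envelope estimate shows how quickly volume adds up. The function and every number in it are assumptions chosen for illustration, not measurements from any real system.

```python
def daily_ingest_gb(requests_per_second, spans_per_request, bytes_per_span, sample_rate=1.0):
    """Estimate daily trace ingestion in gigabytes (1 GB = 1e9 bytes)."""
    seconds_per_day = 86_400
    bytes_per_second = requests_per_second * spans_per_request * bytes_per_span * sample_rate
    return bytes_per_second * seconds_per_day / 1e9


# Assumed workload: 2,000 requests/s, 8 spans per request, 500 bytes per span.
unsampled = daily_ingest_gb(2_000, 8, 500)                 # about 691.2 GB per day
sampled = daily_ingest_gb(2_000, 8, 500, sample_rate=0.1)  # about 69.12 GB per day
```

The instrumentation is identical in both cases. The tenfold difference comes entirely from a policy decision, which is the point of the section above.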

It Does Not Automatically Deliver Effective Sampling

Sampling looks easy in a steady state. It breaks down during traffic spikes, partial outages, and failures.

While OpenTelemetry supports sampling mechanisms, it does not tell you when to sample, what to prioritize, or how to adapt sampling during incidents.

- Head-based limits: A head-based sampling decision is made at the start of a request, before the full request context, such as errors or latency, is known.

- Lack of system awareness: Instrumentation alone cannot determine which traces are most important.

- Incident blind spots: Significant traces are commonly lost when an outage occurs.

Effective sampling takes system-level intelligence, not just SDK settings.
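
The head-based limitation is easy to see in a sketch. The function below makes a deterministic keep-or-drop decision from the trace ID alone, in the spirit of ratio-based samplers; it is not the exact algorithm of any SDK, and `head_sample` and `surviving_errors` are hypothetical names.

```python
def head_sample(trace_id_hex: str, ratio: float) -> bool:
    """Deterministic head decision from the trace ID alone.

    Every service reaches the same verdict for the same trace ID, but the
    decision cannot depend on how the request ends.
    """
    threshold = int(ratio * (1 << 64))
    return int(trace_id_hex[-16:], 16) < threshold


def surviving_errors(traces, ratio):
    """Count error traces that survive head sampling.

    `traces` is a list of (trace_id_hex, had_error) pairs; had_error is only
    known after the request finishes, so head_sample never sees it.
    """
    return sum(1 for trace_id, had_error in traces if had_error and head_sample(trace_id, ratio))
```

Because `had_error` never enters the decision, failing requests are dropped at the same rate as healthy ones, which is exactly the incident blind spot described above.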

It Does Not Replace an Observability Backend

This is one of the most common misconceptions.

OpenTelemetry collects and exports data, but everything after that still needs a backend.

- OTel is not storage: The framework remains entirely agnostic toward data persistence, providing no native functionality for long-term telemetry storage or the execution of complex analytical queries.

- No dashboards or alerts: Visualization and alerting logic exist outside the OTel scope, requiring that dashboards and incident response workflows be managed within the observability platform.

- Must be paired: A backend platform is required to make telemetry usable.

It Does Not Eliminate Operational Complexity

Integrating a standardized instrumentation layer often introduces new architectural variables that require disciplined oversight to prevent the emergence of fresh technical debt.

- Collector management: Deployments, upgrades, and configurations require care.

- Pipeline scaling: Telemetry pipelines must be reliable under load.

- More moving parts: The observability stack grows, even if it becomes more flexible.

Complexity doesn’t disappear; it shifts.

It Does Not Simplify Metrics Semantics

Metrics are easy to collect but difficult to design correctly. Even with OpenTelemetry, teams still have to make deliberate choices about aggregation, temporality, and label structure.

- Temporality confusion: Different aggregation models can lead to unexpected results.

- Cardinality risks: Uncontrolled labels can overwhelm backends.

- Design still matters: Choosing what to measure, how to aggregate it, and how it will be interpreted remains a design responsibility.
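
Temporality confusion is easiest to see with numbers. The sketch below converts cumulative counter readings into per-interval deltas, assuming the first reading counts from zero and that any drop in value means the counter was reset. The function name and sample values are illustrative.

```python
def cumulative_to_delta(points):
    """Convert cumulative counter readings to per-interval deltas.

    Assumptions: the first reading counts from zero, and a drop in value
    means the counter was reset (for example, a process restart).
    """
    deltas, previous = [], None
    for value in points:
        if previous is None or value < previous:
            deltas.append(value)  # first reading or reset: the value is the delta
        else:
            deltas.append(value - previous)
        previous = value
    return deltas


readings = [10, 25, 40, 5, 12]            # cumulative, with a restart after 40
deltas = cumulative_to_delta(readings)    # [10, 15, 15, 5, 7]
true_total = sum(deltas)                  # 52 requests actually served
naive_total = sum(readings)               # 92: cumulative values wrongly summed as deltas
```

The same five data points yield 52 or 92 depending on which temporality the consumer assumes, so producer and backend need to agree on it.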

Expectations vs Reality With OpenTelemetry

OpenTelemetry has emerged as the default open-source standard for application instrumentation. It promises vendor-neutral, unified collection of traces, metrics, and logs, all through a single, consistent approach. On the surface, it sounds like the answer to years of fragmented tooling and lock-in.

However, when teams move past demos into actual production systems, the situation changes. OpenTelemetry frequently improves consistency at the instrumentation layer without addressing more fundamental observability issues. The collection and export of telemetry become uniform, but cost growth remains a challenge, and operational complexity increases as Collector pipelines grow.

To understand this gap, it helps to compare expectations with reality once systems are deployed and scaled:

- What Teams Expect When Adopting OpenTelemetry: Most teams have high expectations. They believe that OpenTelemetry will simplify observability and remove pain points that have accumulated over the years.

- What Actually Changes in Production Environments: When implemented at scale, OpenTelemetry delivers solid improvements in consistency and portability. However, it does not resolve issues related to cost growth, data prioritization, or operational overhead.

- Why OpenTelemetry Improves Foundations, Not Outcomes: OpenTelemetry standardizes how telemetry data is produced and transported. It does not determine how that data is sampled, stored, queried, or acted upon. Observability outcomes continue to depend on downstream platforms, architectural decisions, and operational practices.

Common OpenTelemetry Failures in Production

OpenTelemetry often looks clean and simple in diagrams, but weaknesses are exposed in production. When things go wrong, they usually go wrong in familiar patterns.

Most OpenTelemetry failures in production don’t come from the OpenTelemetry specification itself. They come from misconfiguration, under-sizing, and treating telemetry as an afterthought. Resource exhaustion, unstable Collectors, degraded application performance, and missing or low-quality data are all common symptoms.

Here are the failure modes teams run into most often once OpenTelemetry is running at scale.

- Broken Traces From Inconsistent Context Propagation: When trace context breaks, visibility breaks with it. This usually happens when services use mixed propagation formats or skip instrumentation in key paths.

- Cardinality Explosions From Unbounded Attributes: High-cardinality data is one of the fastest ways to overwhelm an observability system. It often starts with good intentions and ends with runaway costs.

- Collector Bottlenecks From Under-Sizing or Misconfiguration: The OpenTelemetry Collector is powerful, but it’s also easy to underestimate the problems it can cause. When misconfigured, it becomes a single point of failure.

- Collecting Everything Without Prioritizing What Matters: More data does not automatically mean better observability.
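
The cardinality failure mode above is simple arithmetic: the worst-case number of time series for one metric is the product of the distinct values of each attribute. The attribute names and counts below are assumptions chosen for illustration.

```python
from math import prod


def max_series(label_cardinalities: dict[str, int]) -> int:
    """Worst-case number of time series for one metric."""
    return prod(label_cardinalities.values())


bounded = {"http.method": 5, "http.route": 40, "status_code": 6}
print(max_series(bounded))                           # 1200
print(max_series({**bounded, "user.id": 100_000}))   # 120000000
```

A single unbounded attribute, added with good intentions, turns a manageable metric into one that can overwhelm a backend.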

A Practical OpenTelemetry Adoption Plan

A successful OpenTelemetry rollout focuses on standards, control, and governance from the start. These three areas help teams avoid chaos while still getting the flexibility OpenTelemetry promises.

Establish Instrumentation and Naming Standards

Consistency is the foundation everything else depends on. If teams lack shared standards, observability tools can be working perfectly while the data still feels confusing and unreliable.

- Consistent service naming and environment identifiers: Reliable cross-service correlation depends on a stable identity scheme. Every microservice should follow a standardized naming convention and carry an explicit environment identifier; without this metadata hygiene, isolating anomalies within distributed traces during an incident becomes extremely difficult.

- Uniform attribute conventions across teams: Teams should use the same attribute names and meanings to avoid fragmented or misleading telemetry.

- One propagation strategy enforced everywhere: A single context propagation format prevents broken traces and partial visibility.

Good standards remove ambiguity before it becomes a problem.
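
One way to keep such standards from drifting is to check them automatically, for example in CI. The sketch below assumes a team policy of lowercase kebab-case service names and three allowed environments. The policy, the helper name `resource_violations`, and the attribute keys are illustrative, so check them against the semantic conventions version you target.

```python
import re

# Assumed team policy, not something OpenTelemetry enforces.
REQUIRED_KEYS = ("service.name", "deployment.environment")
ALLOWED_ENVIRONMENTS = {"dev", "staging", "prod"}
SERVICE_NAME_PATTERN = re.compile(r"^[a-z][a-z0-9]*(-[a-z0-9]+)*$")


def resource_violations(resource: dict) -> list[str]:
    """Return human-readable policy violations for one service's resource attributes."""
    problems = [f"missing {key}" for key in REQUIRED_KEYS if not resource.get(key)]
    name = resource.get("service.name")
    if name and not SERVICE_NAME_PATTERN.match(name):
        problems.append(f"service.name {name!r} is not lowercase kebab-case")
    environment = resource.get("deployment.environment")
    if environment and environment not in ALLOWED_ENVIRONMENTS:
        problems.append(f"unknown environment {environment!r}")
    return problems
```

A check like this catches `prod` versus `production` before the mismatch fragments dashboards and queries.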

Build a Controlled Telemetry Pipeline

Telemetry should be treated like production traffic. Here, the OpenTelemetry Collector takes center stage and must not be an afterthought.

- Deploying the Collector as a first-class system component: Size it, monitor it, and plan for its failure like any other production service.

- Filtering low-value telemetry early: Drop noisy or unused data as close to the source as possible, before it reaches the backend.

- Routing critical signals differently from baseline data: High-priority telemetry must survive spikes and outages, which is when it matters most.

Introduce Governance for Sampling, Retention, and Cost

Observability only stays useful when it’s sustainable.

Governance turns OpenTelemetry from a data firehose into a decision-making tool.

- Defining what telemetry must be kept and why: Retention should have a purpose, and data utility should be the primary driver. Move away from “hoarding by default” toward a model where every retained signal serves a specific purpose.

- Aligning retention with reliability and compliance needs: Retention policies need to reflect real risk. That includes operational needs such as post-incident analysis, as well as regulatory requirements. This should shape how long data is kept.

- Reviewing telemetry policies as systems evolve: Telemetry needs change as architectures change. Signals that are useful today may lose relevance as traffic patterns, dependencies, or failure modes shift.
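
A retention policy is easier to review when it is written down as data. In this sketch every rule must state its purpose, and anything without a rule is treated as expired. The signal classes, durations, and names are illustrative assumptions; in practice the backend enforces retention, not OpenTelemetry.

```python
from dataclasses import dataclass
from datetime import date, timedelta


@dataclass(frozen=True)
class RetentionRule:
    signal: str    # hypothetical signal class, e.g. "error_traces"
    days: int      # how long to keep it
    purpose: str   # why it is kept; a rule without a purpose is rejected

    def __post_init__(self):
        if not self.purpose.strip():
            raise ValueError(f"retention rule for {self.signal!r} needs a purpose")


# Illustrative values only; real numbers come from reliability and compliance needs.
RULES = {
    rule.signal: rule
    for rule in (
        RetentionRule("error_traces", 30, "post-incident analysis"),
        RetentionRule("audit_logs", 365, "regulatory requirement"),
        RetentionRule("baseline_traces", 3, "recent-regression debugging"),
    )
}


def is_expired(signal: str, recorded_on: date, today: date) -> bool:
    """Data with no matching rule is treated as expired: no purpose, no retention."""
    rule = RULES.get(signal)
    if rule is None:
        return True
    return today - recorded_on > timedelta(days=rule.days)
```

Reviewing the policy as systems evolve then becomes a matter of reviewing a short table instead of auditing backend settings.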

What to Look for in an OpenTelemetry-Compatible Backend

OpenTelemetry defines how telemetry is produced and exported. In practice, the quality of observability depends less on how data is collected and more on how it is stored, processed, and explored.

When choosing an OpenTelemetry-compatible backend, teams should look beyond basic compatibility. Native OTLP support, strong handling of all three signals (logs, metrics, and traces), and reliable correlation between them all matter.

The following capabilities tend to matter most once OpenTelemetry is deployed at scale.

- Native OTLP support: Built-in OTLP ingestion avoids the added latency, complexity, and potential data loss of translation layers.

- Strong signal correlation: Logs, metrics, and traces must be linked naturally, so that investigations are faster and more intuitive.

- Predictable costs: As data volume grows, pricing should grow predictably, in a way teams can understand and control.

- Access and retention controls: Fine-grained access and retention policies become essential, particularly in regulated or multi-team settings.

How These Gaps Are Addressed in Practice

Understanding the boundaries of OpenTelemetry is only useful when teams also know how to work around them. In practice, effective teams do not use OpenTelemetry alone. They combine it with systems and mechanisms that provide control, context, and decision-making on top of raw telemetry.

The common pattern is simple: limit data early, prioritize what matters during incidents, and align observability costs with business value.

A Practical Example: Re-thinking the Delta Air Lines Outage

This example is illustrative and based on publicly reported incident details combined with common observability failure patterns, not an internal postmortem.

In January 2017, Delta Air Lines experienced a major system outage that led to the cancellation of more than 170 flights and an estimated $8.5 million in losses. It was both a data failure and an observability failure. The outage revealed a disconnect between system architecture and observability, where highly integrated service dependencies masked the failure points and slowed recovery efforts.

OpenTelemetry plays a supporting role. It ensures consistent instrumentation across services and systems, making telemetry available. The critical decisions happen elsewhere: which traces are retained during failure modes, how sampling adapts under load, and whether cost and retention policies prevent the observability system itself from becoming a bottleneck.

Had Delta instrumented its booking, scheduling, crew management, and operational services with OpenTelemetry, it could have had uniform traces, metrics, and logs across them, rather than fragmented logs and delayed alerts.

- Early detection through correlated signals: Latency spikes, error rates, and failed transactions across services could have been correlated in near real time, making the root cause visible sooner.

- Controlled telemetry pipelines: A Collector layer could have prioritized important operational signals over low-value data during the incident.

- Focused sampling during failure: Standard sampling often fails when it is needed most. Here, “failure-first” sampling policies would increase the retention of traces for degraded services and failing request paths, so that the evidence required for root-cause analysis isn’t dropped by generic rate limiting or load shedding.

- Faster root cause analysis: Traces, metrics, and logs tied together would have helped engineers find the root cause earlier and shorten recovery.

The result would not have been perfect prevention; failures still occur. However, better observability could have reduced detection and recovery time, limited cascading failures, and reduced the overall business impact.
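
A "failure-first" policy can be sketched as a decision made after a trace completes, when its outcome is known. The trace summary fields, the `SLOW_MS` threshold, and the set of degraded services are assumptions for illustration; this is not how any specific Collector processor or backend is configured.

```python
SLOW_MS = 2_000  # assumed latency threshold for "interesting" traces


def keep_trace(trace: dict, degraded_services: set, baseline_ratio: float) -> bool:
    """Decide after the trace has completed, so the outcome is known.

    `trace` is a hypothetical summary with trace_id (32 hex chars), services
    (names the request touched), has_error, and duration_ms.
    """
    if trace["has_error"] or trace["duration_ms"] >= SLOW_MS:
        return True  # always keep evidence of failure or slowness
    if degraded_services & set(trace["services"]):
        return True  # keep everything that touched a degraded service
    # Healthy traffic: keep a deterministic fraction based on the trace ID.
    return int(trace["trace_id"][-16:], 16) < int(baseline_ratio * (1 << 64))
```

During an incident, adding a service name to `degraded_services` raises retention for exactly the request paths under investigation while healthy traffic stays at the baseline rate.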

What OpenTelemetry Changes and What It Never Will

OpenTelemetry fundamentally changes how telemetry is produced and transported, but it does not change the economics or operational realities of observability. It removes vendor lock-in at the instrumentation layer while leaving decisions about cost, retention, prioritization, and investigation firmly in the hands of platform teams. Organizations that mistake standardization for outcomes often struggle; those that treat OpenTelemetry as infrastructure, governed like any other critical system, extract its real value.

Conclusion: OpenTelemetry Is a Foundation, Not a Full Solution

OpenTelemetry’s real strength lies in standardization. It gives teams a shared, vendor-neutral way to collect traces, metrics, and logs across increasingly complex systems.

However, improved data collection does not automatically result in positive outcomes. What teams ultimately experience depends on how that data is governed, where it is stored, how it is sampled, and how effectively it is analyzed. Without clear standards, cost controls, and operational discipline, OpenTelemetry can just as easily amplify noise as it can enable insight.

Successful teams use OpenTelemetry to standardize telemetry production, not to define observability outcomes. When paired with systems designed for cost predictability, strong signal correlation, and operational clarity, it becomes a powerful enabler of real observability. Used on its own, it’s only the beginning.

Disclaimer: The information in this article reflects the latest details available at the time of publication and may change as technologies and products evolve.

FAQs

1. Does OpenTelemetry replace an observability platform?

No. OpenTelemetry standardizes how telemetry is produced and exported, but it does not provide storage, querying, alerting, dashboards, or investigation workflows. An observability backend is still required to turn telemetry into operational insight.

2. Does OpenTelemetry replace Prometheus or logging systems?

No. It complements them. OpenTelemetry can standardize how metrics and logs are produced, but systems like Prometheus, logging backends, and observability platforms are still needed for storage, querying, and alerting.

3. Can OpenTelemetry control observability costs on its own?

No. In fact, OpenTelemetry makes it easier to generate telemetry, which can sharply drive up ingestion costs if left ungoverned. Cost control is a layer built on top of the framework; it requires deliberate sampling strategies, intelligent filtering at the Collector level, and rigorous data retention policies.

4. When is OpenTelemetry the right choice, and when is it not enough?

OpenTelemetry is the right choice when teams need consistent, vendor-neutral instrumentation across services. It is not sufficient when teams expect it to deliver cost control, prioritization, or investigative workflows without additional systems and operational discipline.

5. Does OpenTelemetry solve sampling problems in production incidents?

Not by itself. While OpenTelemetry supports sampling mechanisms, it does not decide what should be kept during incidents. Effective incident-time sampling requires system-level context and backend intelligence beyond SDK-level configuration.