Monitoring your Kubernetes Ingress Controller is no longer optional. Clusters now power critical systems (your apps, APIs, and customer-facing services), and the Ingress Controller handles their TLS termination and external traffic management. An estimated 43% of cloud environments were found vulnerable to remote code execution (RCE) flaws in the Ingress-NGINX admission controller, putting publicly exposed clusters at severe risk.

Many teams struggle with the same ingress problems: 502/503 error spikes that go undetected during high traffic, p99 latency creeping above SLA targets, TLS certificates expiring unexpectedly, misrouted requests, and costly debugging cycles. Without unified visibility, the root cause is often scattered across logs, metrics, and traces that live in different tools.

CubeAPM is the best solution for monitoring the Kubernetes Ingress Controller. It brings together high-cardinality metrics, access & error logs, distributed tracing, and real-time alerting under one dashboard. With features like Smart Sampling, anomaly detection, and unlimited error tracking, it can catch 4xx/5xx surges, routing errors, TLS failures, and latency outliers.

In this article, we’ll explore what Kubernetes Ingress Controllers are, why monitoring them is important, which key metrics to track, and how to monitor them with CubeAPM.

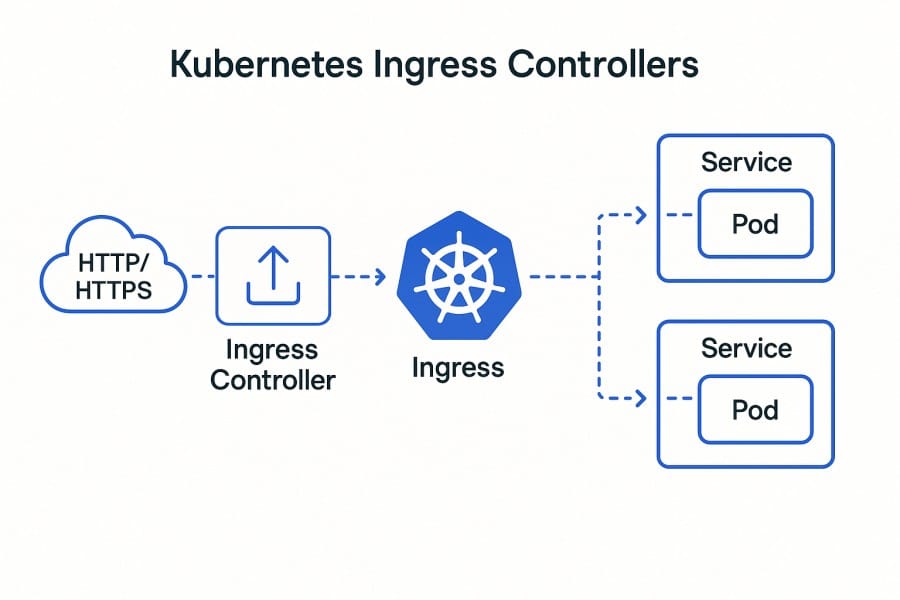

What are Kubernetes Ingress Controllers?

A Kubernetes Ingress Controller is the component responsible for implementing the routing rules defined in a cluster’s Ingress resources. It acts as a smart reverse proxy—managing HTTP and HTTPS traffic, terminating TLS/SSL connections, and forwarding requests to the right backend services. Popular implementations include NGINX Ingress, HAProxy, Traefik, and Istio gateways, each extending core Kubernetes networking with advanced load balancing and routing features.

For businesses, Ingress Controllers are not just about connectivity—they are about scalability, reliability, and cost-efficiency. By consolidating external access into a single entry point, they:

- Simplify SSL certificate management across multiple services.

- Improve user experience with intelligent routing and low-latency load balancing.

- Provide a security layer by filtering traffic before it hits workloads.

- Reduce infrastructure costs by eliminating the need for per-service LoadBalancers.

Example: Using Ingress Controllers for Order Processing

Imagine a large e-commerce platform handling thousands of concurrent checkouts. Instead of exposing separate LoadBalancers for checkout, payment, and shipping services, a single Ingress Controller routes requests based on paths (/checkout, /pay, /ship). With TLS termination at the edge and load balancing to healthy pods, the business delivers a seamless, secure user experience while keeping operational costs in check.
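To make the path-based routing concrete, here is a simplified Python sketch of how a request path can be matched against Ingress rules that use the Prefix path type: paths are compared segment by segment, and the longest matching prefix wins. The service names are hypothetical, and real controllers also match on host, support Exact paths, and apply their own implementation-specific rules.

```python
from __future__ import annotations

# Simplified sketch of Ingress-style routing with the Prefix path type.
# Service names are hypothetical; host matching and Exact paths are omitted.


def _segments(path: str) -> list[str]:
    """Split a URL path into non-empty segments: '/pay/card' -> ['pay', 'card']."""
    return [seg for seg in path.split("/") if seg]


def route(request_path: str, rules: dict[str, str]) -> str | None:
    """Return the backend of the longest matching prefix rule, or None if none match."""
    request_segs = _segments(request_path)
    best_backend, best_len = None, -1
    for prefix, backend in rules.items():
        prefix_segs = _segments(prefix)
        # Element-wise match: '/pay' matches '/pay/card' but not '/payment'.
        if request_segs[: len(prefix_segs)] == prefix_segs and len(prefix_segs) > best_len:
            best_backend, best_len = backend, len(prefix_segs)
    return best_backend


RULES = {
    "/checkout": "checkout-svc:80",
    "/pay": "payment-svc:80",
    "/ship": "shipping-svc:80",
}

print(route("/pay/card", RULES))  # payment-svc:80
print(route("/payment", RULES))   # None
```

Segment-wise matching is why a request for /payment does not accidentally land on the /pay backend, a subtle source of misrouted requests when rules are written as raw string prefixes or regexes.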

Why Monitoring Kubernetes Ingress Controllers is Critical

Flaw at the edge gateway

Your Ingress Controller is the doorman to every app behind Kubernetes—if it falters, users hit errors before workloads even get a chance to respond. Recent Ingress-NGINX vulnerabilities (e.g., CVE-2025-1974) showed how a single flaw at the edge can expose secrets or enable remote code execution, forcing emergency patches across fleets. That risk surface makes proactive, continuous monitoring at the controller layer non-negotiable.

Security exposure requires real-time visibility

Ingress enforces who can talk to what, over TLS, and under which routes. When admission webhooks or config templates are exploitable, attackers can pivot quickly. Multiple 2025 advisories recommended tight log/metric scrutiny and anomaly detection specifically at ingress to spot exploitation attempts early—such as unexpected config reloads, surges in 4xx/5xx from suspicious IP ranges, or webhook errors.

TLS/SSL lifecycles are shrinking, and outages are rising

Certificate validity windows are getting shorter. The CA/Browser Forum timeline sets max validity at 200 days by March 2026, 100 days by March 2027, and just 47 days by March 2029. Miss one renewal, and your ingress endpoints slam shut with browser warnings. Monitoring requires expiry dashboards, handshake error alerts, and chain/cipher checks directly at the controller.

Configuration changes break routing more than you think

Many large outages are triggered by bad configuration pushes rather than hardware failures, and industry outage reviews from 2024–2025 highlight misconfiguration as a leading cause of downtime. For ingress, a single faulty host rule, regex, or backend mapping can cause an instant storm of 502/503 errors. Monitoring should therefore correlate config reload events with latency and error spikes to cut MTTR.
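As a rough illustration of that correlation, the Python sketch below flags any config reload that is followed within five minutes by a 5xx ratio above 1%. The input shapes (reload timestamps and `(timestamp, ratio)` samples) are assumptions; in practice you would pull them from your metrics or events backend.

```python
# Flag config reloads that are followed by a 5xx spike.
# Inputs are hypothetical: reload timestamps (epoch seconds) and
# (timestamp, 5xx_ratio) samples exported from your metrics backend.


def reloads_followed_by_errors(reloads, error_ratio_samples, window_s=300, threshold=0.01):
    """Return reload timestamps where the 5xx ratio exceeded `threshold`
    at any sample within `window_s` seconds after the reload."""
    suspicious = []
    for reload_ts in reloads:
        for ts, ratio in error_ratio_samples:
            if reload_ts <= ts <= reload_ts + window_s and ratio > threshold:
                suspicious.append(reload_ts)
                break  # one breaching sample is enough for this reload
    return suspicious


reloads = [1_000, 5_000]
samples = [(1_060, 0.002), (1_120, 0.08), (5_060, 0.003), (5_120, 0.004)]
print(reloads_followed_by_errors(reloads, samples))  # [1000]
```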

Latency and saturation start at the edge

Before pods or services saturate, your Ingress pods often do: CPU throttling, worker exhaustion, and queue back-pressure show up first as rising p95/p99 latency at the controller. Watching RPS, concurrency, upstream connect times, and worker status at ingress helps teams autoscale earlier and avoid downstream cascades.

Multi-tenant, multi-path complexity magnifies impact

One controller frequently fronts dozens of domains and hundreds of routes (gRPC, WebSockets, REST) with unique timeouts, limits, and rewrites. Without granular per-host/per-path metrics and error slicing, teams chase ghosts across microservices when the real issue is a path-specific ingress rule or policy.

The business cost of ingress-layer downtime is massive

When your edge breaks, revenue suffers first: checkout, auth, and APIs all sit behind ingress. Commonly cited industry estimates put the average cost of downtime at roughly $2,300 to $9,000 per minute. Monitoring ingress specifically, where user sessions first terminate, protects those critical minutes.

Compliance and audit readiness live in ingress logs

Ingress access/error logs are often the only canonical record of who accessed which resource, from where, and over what cipher. For regulated environments, dashboards and retention around TLS posture, geo/IP patterns, and 4xx authentication failures at ingress help satisfy audit trails and support breach investigations.

Key Metrics to Monitor for Kubernetes Ingress Controller

Monitoring Kubernetes Ingress Controllers effectively means keeping an eye on traffic, performance, errors, resource usage, and security signals. Below are the most critical categories and metrics you should track.

Traffic & Request Metrics

These metrics show how much load your Ingress Controller is handling and whether traffic is distributed smoothly.

- Request Rate (RPS): Measures the number of incoming HTTP/HTTPS requests per second. A sudden spike can indicate a surge in user activity or a potential DDoS attack. Threshold: Watch for >2x your baseline traffic within 5 minutes.

- Request Distribution: Tracks how requests are balanced across backend services and pods. Imbalances may reveal routing misconfigurations or uneven pod scaling. Threshold: A single backend should not consistently exceed 60–70% of total requests.
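A minimal Python sketch of both traffic checks, assuming you already have counter samples and per-backend request counts for the window (for example, derived from `nginx_ingress_controller_requests`). The `rps` helper ignores counter resets, which Prometheus's `rate()` handles for you; the numbers are illustrative.

```python
# Two traffic checks: RPS spike vs. baseline, and backend request share.


def rps(count_start: float, count_end: float, seconds: float) -> float:
    """Per-second rate between two samples of a monotonically increasing counter."""
    return (count_end - count_start) / seconds


def is_traffic_spike(current_rps: float, baseline_rps: float, factor: float = 2.0) -> bool:
    """True when current traffic exceeds `factor` times the baseline."""
    return current_rps > factor * baseline_rps


def dominant_backends(requests_by_backend: dict, max_share: float = 0.7) -> list:
    """Backends whose share of total requests exceeds `max_share`."""
    total = sum(requests_by_backend.values())
    if total == 0:
        return []
    return [b for b, n in requests_by_backend.items() if n / total > max_share]


current = rps(120_000, 300_000, 300)  # counter samples 5 minutes apart
print(current, is_traffic_spike(current, baseline_rps=250))  # 600.0 True
print(dominant_backends({"checkout": 800, "payment": 150, "shipping": 50}))  # ['checkout']
```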

Latency & Performance Metrics

Latency at ingress directly impacts user experience. These metrics highlight whether traffic is flowing smoothly.

- Response Time (p50, p95, p99): Percentile latencies help identify slow paths that affect only a fraction of users but can still hurt SLAs. Threshold: p95 latency >500 ms sustained for 10 minutes should trigger alerts.

- Upstream Connection Time: Tracks how long it takes for the Ingress Controller to connect to backend pods. Rising values suggest network congestion or backend pod saturation. Threshold: Alert if >200 ms at p95 over 5 minutes.
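Percentiles at the ingress layer are usually estimated from histogram buckets rather than computed from raw samples. The sketch below reproduces the linear interpolation that PromQL's `histogram_quantile()` applies to cumulative buckets; the bucket bounds and counts are made-up values.

```python
from __future__ import annotations

import math

# Estimate a percentile from cumulative histogram buckets using the same linear
# interpolation as PromQL's histogram_quantile(). Example values are made up.
BUCKETS = [  # (upper bound in seconds, cumulative request count)
    (0.1, 600),
    (0.25, 900),
    (0.5, 970),
    (1.0, 995),
    (math.inf, 1000),
]


def histogram_quantile(q: float, buckets: list[tuple[float, float]]) -> float:
    if not 0 < q < 1:
        raise ValueError("q must be between 0 and 1 (exclusive)")
    total = buckets[-1][1]
    if total == 0:
        return math.nan
    rank = q * total
    lower_bound, lower_count = 0.0, 0.0
    for upper_bound, count in buckets:
        if count >= rank:
            if math.isinf(upper_bound):
                # Rank lands in the +Inf bucket: report the highest finite bound.
                return lower_bound
            # Interpolate linearly within the bucket that contains the rank.
            fraction = (rank - lower_count) / (count - lower_count)
            return lower_bound + (upper_bound - lower_bound) * fraction
        lower_bound, lower_count = upper_bound, count
    return lower_bound


for q in (0.5, 0.95, 0.99):
    print(f"p{round(q * 100)}: {histogram_quantile(q, BUCKETS):.3f} s")
```

With these example buckets, p95 comes out at about 0.43 s, just under the 500 ms alert threshold. Because values are interpolated, the estimate is only as precise as your bucket boundaries.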

Error & Reliability Metrics

Ingress should surface routing and backend errors immediately. Monitoring these ensures issues don’t go unnoticed.

- HTTP 4xx Error Rate: Captures client-side errors (e.g., invalid routes, unauthorized requests). A sudden increase often signals misconfigured ingress rules or auth failures. Threshold: >5% of total requests over 5 minutes.

- HTTP 5xx Error Rate: Represents server-side issues like 502/503 from failing backends. Persistent spikes degrade availability. Threshold: >1% of total requests sustained for 5 minutes.

- Ingress Config Reloads: Each reload reflects changes in ingress rules. Unexpected reload frequency may correlate with errors or downtime. Threshold: More than 3 reloads within 30 minutes is unusual.
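The same thresholds expressed as a small Python sketch, using illustrative status counts for one 5-minute window and a list of reload timestamps. How you obtain these inputs (metrics queries or log aggregation) is left open.

```python
from __future__ import annotations

# Evaluate the error thresholds above over one 5-minute window.
# status_counts maps HTTP status -> request count (illustrative numbers).


def error_ratios(status_counts: dict[int, int]) -> tuple[float, float]:
    """Return (4xx ratio, 5xx ratio) of all requests in the window."""
    total = sum(status_counts.values())
    if total == 0:
        return 0.0, 0.0
    client = sum(n for code, n in status_counts.items() if 400 <= code < 500)
    server = sum(n for code, n in status_counts.items() if 500 <= code < 600)
    return client / total, server / total


def too_many_reloads(reload_times: list[float], now: float,
                     window_s: float = 1800, max_reloads: int = 3) -> bool:
    """True when more than `max_reloads` reloads happened in the last `window_s` seconds."""
    recent = [t for t in reload_times if now - window_s <= t <= now]
    return len(recent) > max_reloads


counts = {200: 9_300, 404: 400, 401: 150, 502: 90, 503: 60}
ratio_4xx, ratio_5xx = error_ratios(counts)
print(f"4xx {ratio_4xx:.1%} (alert: {ratio_4xx > 0.05})")
print(f"5xx {ratio_5xx:.1%} (alert: {ratio_5xx > 0.01})")
print("reload alert:", too_many_reloads([100, 900, 1500, 1700], now=1800))
```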

Resource Utilization Metrics

Ingress Controllers are pods themselves; they need enough compute and memory to handle peak load.

- CPU Usage: High CPU consumption slows request processing, leading to latency spikes. Threshold: >80% sustained usage for 10 minutes.

- Memory Usage: Memory leaks or excessive caching in Ingress can cause OOM restarts. Threshold: >85% of pod memory allocation for 15 minutes.

- Pod Restarts: Frequent restarts signal instability, often tied to resource starvation or crashes. Threshold: More than 2 restarts per hour per pod.
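Sustained thresholds avoid paging on brief bursts. The sketch below checks whether every sample in the trailing window breaches the limit, similar in spirit to a Prometheus `for:` clause, plus a simple restarts-per-hour count. The 60-second sample interval and the values are assumptions.

```python
# "Sustained" threshold checks over evenly spaced samples, similar in spirit to a
# Prometheus `for:` clause. The 60 s interval and sample values are illustrative.


def sustained_breach(samples, threshold, duration_s, interval_s):
    """True if every sample in the trailing `duration_s` window exceeds `threshold`."""
    needed = duration_s // interval_s
    if needed == 0 or len(samples) < needed:
        return False  # not enough history to call the breach "sustained"
    return all(value > threshold for value in samples[-needed:])


def restarts_per_hour_exceeded(restart_times, now, max_restarts=2):
    """True if more than `max_restarts` restarts happened in the hour before `now`."""
    return sum(1 for t in restart_times if now - 3600 <= t <= now) > max_restarts


cpu = [0.55, 0.62, 0.83, 0.85, 0.88, 0.91, 0.86, 0.84, 0.90, 0.87, 0.82, 0.89]
print(sustained_breach(cpu, threshold=0.80, duration_s=600, interval_s=60))  # True
print(restarts_per_hour_exceeded([100, 2000, 3500], now=3600))  # True
```

The same helper covers the memory rule by passing `threshold=0.85` and `duration_s=900`.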

TLS & Security Metrics

Since Ingress often handles TLS termination, monitoring certs and handshake health is critical for security and uptime.

- TLS Handshake Errors: Failures here indicate misconfigured certs or unsupported cipher suites. Threshold: >1% of total TLS requests over 10 minutes.

- Certificate Expiry: Monitoring expiry prevents outages caused by lapsed certs. Threshold: Alert when expiry is within 14 days.

- Unauthorized Access Attempts: Tracks repeated 401/403 errors that may point to brute-force or bot traffic. Threshold: More than 50 failed attempts per minute from a single IP.
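Both kinds of check are straightforward to script. This sketch uses Python's `ssl.cert_time_to_seconds()` to turn a certificate's `notAfter` string (in the format returned by `getpeercert()`) into days remaining, and counts 401/403 responses per client IP over one minute of log entries. The `(client_ip, status)` tuple shape is a hypothetical log representation.

```python
import ssl
from collections import Counter

# Two TLS/security checks. The notAfter string follows the format returned by
# ssl.SSLSocket.getpeercert(); the (client_ip, status) log tuples are hypothetical.


def days_until_expiry(not_after: str, now: float) -> float:
    """Days from `now` (epoch seconds) until the certificate's notAfter time."""
    return (ssl.cert_time_to_seconds(not_after) - now) / 86400


def noisy_ips(events, max_failures=50):
    """Return IPs with more than `max_failures` 401/403 responses in one minute of events."""
    failures = Counter(ip for ip, status in events if status in (401, 403))
    return sorted(ip for ip, n in failures.items() if n > max_failures)


# A fixed `now` keeps the example deterministic; in production use time.time().
now = ssl.cert_time_to_seconds("May 25 00:00:00 2030 GMT")
days_left = days_until_expiry("Jun  1 00:00:00 2030 GMT", now)
print(days_left, "-> alert" if days_left < 14 else "-> ok")  # 7.0 -> alert

events = [("203.0.113.7", 401)] * 60 + [("198.51.100.2", 403)] * 10 + [("198.51.100.2", 200)] * 500
print(noisy_ips(events))  # ['203.0.113.7']
```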

Telemetry Collection, Error Tracking & Alerting with CubeAPM for Kubernetes Ingress Controller

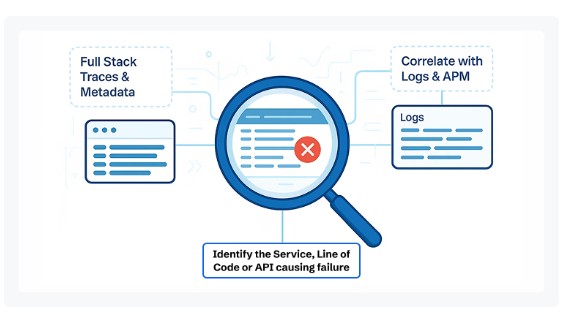

Monitoring Kubernetes Ingress Controllers requires pulling together signals from logs, metrics, and traces into one place. Traditional tools scatter this data across silos, slowing down root cause analysis. CubeAPM solves this by providing unified telemetry, deep error tracking, and intelligent alerting tailored for Ingress workloads.

Unified Telemetry with Logs, Metrics & Traces

CubeAPM ingests Ingress access logs, error logs, Prometheus-style metrics, and distributed traces into a single observability pipeline. For example, you can see in one dashboard how a 503 error in the NGINX Ingress Controller correlates with backend pod latency. Because CubeAPM is OpenTelemetry-native, you can deploy lightweight collectors to scrape metrics such as nginx_ingress_controller_requests and nginx_ingress_controller_request_duration_seconds, alongside structured access logs. This eliminates context-switching and enables faster triage.
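CubeAPM's collectors handle the scraping, but it helps to know what the raw data looks like. The sketch below parses a few lines in the Prometheus text exposition format and totals `nginx_ingress_controller_requests` by status. The sample is illustrative and abbreviated (real series carry more labels), and the regex-based parser deliberately skips details such as escaped label values and timestamps.

```python
import re
from collections import defaultdict

# Illustrative, abbreviated scrape output; real series carry more labels.
SAMPLE = '''
# TYPE nginx_ingress_controller_requests counter
nginx_ingress_controller_requests{host="shop.example.com",path="/checkout",status="200"} 9500
nginx_ingress_controller_requests{host="shop.example.com",path="/checkout",status="503"} 120
nginx_ingress_controller_requests{host="shop.example.com",path="/pay",status="502"} 30
'''

LINE = re.compile(r'^(?P<name>[a-zA-Z_:][a-zA-Z0-9_:]*)\{(?P<labels>[^}]*)\}\s+(?P<value>\S+)$')
LABEL = re.compile(r'(\w+)="([^"]*)"')


def requests_by_status(text: str) -> dict:
    """Sum nginx_ingress_controller_requests samples by their `status` label."""
    totals = defaultdict(float)
    for line in text.splitlines():
        match = LINE.match(line.strip())
        if not match or match["name"] != "nginx_ingress_controller_requests":
            continue  # skip comments, blank lines, and other metrics
        labels = dict(LABEL.findall(match["labels"]))
        totals[labels.get("status", "unknown")] += float(match["value"])
    return dict(totals)


print(requests_by_status(SAMPLE))
```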

Real-Time Error Tracking

Ingress-related issues often appear as sudden spikes in 502/503 errors, invalid route 404s, or failed TLS handshakes. With CubeAPM’s Error Tracking inbox, engineers get a live feed of these incidents grouped by severity, source, and route. Instead of drowning in noisy logs, CubeAPM applies Smart Sampling to prioritize errors tied to real latency or user impact. That means teams can immediately trace “503 Service Unavailable” back to a specific ingress rule or unhealthy pod, cutting MTTR drastically.
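To illustrate what grouping buys you, this Python sketch collapses individual error events into one entry per (severity, status, host, path) and ranks them by count. It is a generic illustration, not CubeAPM's grouping logic, and the event fields are hypothetical.

```python
from collections import Counter

# Generic illustration of error grouping (not CubeAPM's algorithm).
# The event fields (status, host, path) are hypothetical.


def severity(status: int) -> str:
    return "critical" if status >= 500 else "warning"


def group_errors(events):
    """Return [(group_key, count), ...] for 4xx/5xx events, most frequent first."""
    groups = Counter(
        (severity(e["status"]), e["status"], e["host"], e["path"])
        for e in events
        if e["status"] >= 400
    )
    return groups.most_common()


events = (
    [{"status": 503, "host": "shop.example.com", "path": "/checkout"}] * 40
    + [{"status": 404, "host": "shop.example.com", "path": "/old-cart"}] * 3
    + [{"status": 200, "host": "shop.example.com", "path": "/checkout"}] * 900
)
for key, count in group_errors(events):
    print(count, key)
```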

Intelligent Alerting & Anomaly Detection

CubeAPM goes beyond static thresholds with anomaly-based alerts. For Ingress Controllers, it can automatically baseline normal RPS, p95 latency, error ratios, and TLS certificate health, then trigger alerts when deviations occur. This includes:

- Detecting a surge in 5xx errors tied to a misconfigured backend.

- Flagging TLS expiry within 14 days to prevent unexpected outages.

- Spotting latency spikes above 500ms at p95, even before customers complain.

Alerts are delivered instantly to Slack, Teams, or WhatsApp, with full context (error logs + traces + dashboards) attached—so teams don’t waste time piecing the story together.
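CubeAPM's anomaly models aren't described here, so as a stand-in, this sketch shows the simplest form of baselining: flag a value that sits more than `k` standard deviations above the mean of recent history. The z-score rule and the latency values are assumptions for illustration.

```python
import statistics

# Simple z-score baseline (a stand-in, not CubeAPM's anomaly model).


def is_anomalous(history, value, k=3.0, min_points=10):
    """True if `value` is more than `k` standard deviations above the mean of `history`."""
    if len(history) < min_points:
        return False  # too little data for a stable baseline
    mean = statistics.mean(history)
    stdev = statistics.pstdev(history)
    if stdev == 0:
        return value > mean  # flat baseline: any increase is a deviation
    return (value - mean) / stdev > k


p95_ms = [210, 220, 205, 215, 225, 212, 218, 208, 222, 215]  # recent p95 samples
print(is_anomalous(p95_ms, 240))  # True
print(is_anomalous(p95_ms, 230))  # False
```

Unlike the fixed 500 ms rule, a baseline catches a jump from 215 ms to 240 ms on a normally stable route, which can be an early sign of saturation.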

How to Monitor Kubernetes Ingress Controller with CubeAPM

Here are the steps to monitor Kubernetes Ingress Controllers end-to-end with logs, metrics, tracing, and alerting, along with the relevant CubeAPM documentation for each.

Step 1: Install CubeAPM on Kubernetes

Start by deploying CubeAPM into your Kubernetes cluster. Use the official installation method described in the Kubernetes Installation Guide. This involves:

- Adding the CubeAPM Helm repository

- Customizing the values.yaml for your cluster’s specifics (e.g., storage, resource requests/limits, ingress to CubeAPM itself)

- Installing the chart so that the CubeAPM backend, collectors, and required agents are running

This sets the foundation to collect data from your Ingress Controller.

Step 2: Configure CubeAPM Settings

Once installed, you’ll want to adjust settings for how CubeAPM ingests, stores, and alerts on data. The Configure Guide lets you:

- Set up retention periods for logs and metrics

- Configure sampling rules to avoid overwhelming storage (especially important for high-throughput ingress traffic)

- Enable TLS and authentication for dashboard access

- Define global thresholds that affect error monitoring and logging
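For high-throughput ingress traffic, a common approach is to keep every failed or slow request and sample the rest. The sketch below does that, hashing the trace ID so all spans of a trace get the same decision. It is a generic illustration rather than CubeAPM's Smart Sampling, and the 500 ms and 10% defaults are assumptions to tune for your traffic.

```python
import hashlib

# Error- and latency-aware sampling (generic illustration, not CubeAPM's
# Smart Sampling). Thresholds are assumptions.


def keep_trace(trace_id: str, status: int, duration_ms: float,
               slow_ms: float = 500, base_rate: float = 0.10) -> bool:
    if status >= 500 or duration_ms >= slow_ms:
        return True  # never drop the requests you will want to debug
    # Map the trace ID to a stable value in [0, 1) so the decision is consistent.
    bucket = int(hashlib.sha256(trace_id.encode()).hexdigest()[:8], 16) / 2**32
    return bucket < base_rate


print(keep_trace("trace-1", 503, 12.0))   # True (error)
print(keep_trace("trace-1", 200, 900.0))  # True (slow)
```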

Step 3: Instrument OpenTelemetry for Ingress Traffic & Tracing

To get metric and trace data from your Ingress Controller, use OpenTelemetry instrumentation. The OpenTelemetry Instrumentation Docs explain how to:

- Deploy the OpenTelemetry Collector (as a sidecar, DaemonSet, or standalone service)

- Scrape ingress metrics such as nginx_ingress_controller_requests and nginx_ingress_controller_request_duration_seconds

- Trace request paths from ingress to backend pods to pinpoint latency issues per route
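When tracing is enabled on the controller, trace context typically travels from ingress to backends in the W3C `traceparent` header. A quick way to confirm propagation is to check that backends receive a valid header; the sketch below parses version-00 headers as defined by the W3C Trace Context specification.

```python
import re

# Parse a W3C Trace Context `traceparent` header (version 00 only).
TRACEPARENT = re.compile(r"^00-([0-9a-f]{32})-([0-9a-f]{16})-([0-9a-f]{2})$")


def parse_traceparent(header: str):
    """Return (trace_id, parent_span_id, sampled) or None if the header is invalid."""
    match = TRACEPARENT.match(header.strip())
    if not match:
        return None
    trace_id, span_id, flags = match.groups()
    if trace_id == "0" * 32 or span_id == "0" * 16:
        return None  # all-zero IDs are invalid per the spec
    return trace_id, span_id, bool(int(flags, 16) & 0x01)


print(parse_traceparent("00-4bf92f3577b34da6a3ce929d0e0e4736-00f067aa0ba902b7-01"))
```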

Step 4: Stream Logs from Ingress Controllers

Metrics and traces tell part of the story; logs fill in the gaps. Use the Logs Setup Guide to:

- Collect access logs (who is requesting what path, status codes, response size)

- Collect error logs (backend failures, TLS handshake issues, routing problems)

- Ensure logs are structured (JSON preferred) so CubeAPM dashboards can filter by host, route, or error code

- Correlate logs with metrics and traces to identify root causes quickly
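Once logs are JSON, filtering by host, route, or status is simple. This sketch parses access-log lines, skips anything that isn't valid JSON, and counts (path, status) pairs for one host. The field names are hypothetical; they depend on the JSON log format you configure on your controller (ingress-nginx, for instance, lets you customize it through the `log-format-upstream` ConfigMap key).

```python
import json
from collections import Counter

# Field names (host, path, status, request_time) are hypothetical.
LOG_LINES = [
    '{"host": "shop.example.com", "path": "/checkout", "status": 200, "request_time": 0.041}',
    '{"host": "shop.example.com", "path": "/checkout", "status": 503, "request_time": 0.002}',
    '{"host": "api.example.com", "path": "/v1/orders", "status": 200, "request_time": 0.120}',
    'not json: skipped',
]


def status_by_path(lines, host):
    """Count (path, status) pairs for JSON log entries matching `host`."""
    counts = Counter()
    for line in lines:
        try:
            entry = json.loads(line)
        except json.JSONDecodeError:
            continue  # unstructured lines cannot be filtered reliably
        if isinstance(entry, dict) and entry.get("host") == host:
            counts[(entry.get("path"), entry.get("status"))] += 1
    return counts


print(status_by_path(LOG_LINES, "shop.example.com"))
```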

Step 5: Set Up Alerting Channels and Rules

Proactive notifications help prevent downtime. The Alerting via Email Configuration Guide explains how to:

- Define rules for 5xx error spikes, latency breaches, or TLS expiry

- Configure alert channels like email, Slack, or Teams

- Deliver full context in each alert (error logs + traces + dashboards) for faster resolution

Step 6: Monitor Infrastructure & Use Dashboards

With logs, metrics, and alerts in place, CubeAPM’s Infrastructure Monitoring Docs show you how to:

- Visualize ingress dashboards for traffic volume, latency by host/path, and error breakdowns

- Monitor resource usage of Ingress Controller pods (CPU, memory, restarts)

- Track TLS health, certificate expiry, and handshake success rates

- Use insights to tune autoscaling, resource allocation, and ingress rules for reliability

Real-World Example: Improving Ingress Reliability for an E-commerce Platform

The Challenge

A leading e-commerce company struggled with sudden 503 errors during flash sales and seasonal traffic peaks. Despite having monitoring in place, the engineering team only saw raw error counts without clarity on why requests were failing. High p99 latency during checkout hurt conversions, and troubleshooting took hours as logs, metrics, and traces were spread across different tools.

The Solution

The company adopted CubeAPM for monitoring Kubernetes Ingress Controllers. With unified logs, metrics, and distributed traces, the team could view ingress access logs, error rates, and backend pod health all in one dashboard. CubeAPM’s Error Tracking inbox grouped ingress-related failures, while Smart Sampling preserved high-value traces for checkout transactions.

The Fixes

- Configured OpenTelemetry collectors to scrape nginx_ingress_controller_requests and latency histograms

- Set up alerting rules for 5xx errors exceeding 2% of requests and TLS expiry within 14 days

- Streamed structured ingress access and error logs into CubeAPM for correlation with backend pod restarts

- Tuned HPA scaling policies for ingress pods after CubeAPM dashboards highlighted CPU saturation

The Result

With CubeAPM, the company reduced Mean Time to Resolution (MTTR) by 70%. Checkout uptime improved to 99.99% during peak traffic, and incidents that previously took hours to resolve were narrowed down in minutes. By proactively catching TLS expiry and ingress bottlenecks, the team eliminated critical outages and preserved customer trust during high-stakes sales events.

Verification Checklist & Example Alert Rules with CubeAPM

Before rolling CubeAPM into production for ingress monitoring, it’s important to verify that your dashboards, alerts, and log pipelines are capturing the right data. Below is a practical checklist and sample alerting rules to help you get started.

Verification Checklist

- Ingress Metrics Dashboards: Confirm that panels for request rate, latency, and error codes (nginx_ingress_controller_requests, nginx_ingress_controller_request_duration_seconds) are visible and updating in real time.

- TLS Monitoring: Ensure certificate expiry and handshake success/failure are tracked, with alerts configured for upcoming expiry.

- Logs Pipeline: Verify that both access and error logs from ingress pods are streaming into CubeAPM, structured in JSON, and filterable by host/path.

- Trace Propagation: Test that traces pass through ingress to backend services, so you can see end-to-end latency waterfalls.

- Alert Testing: Simulate errors or high latency (e.g., load tests) to confirm alerts are triggered in Slack, email, or other configured channels.

- Autoscaling Readiness: Validate that HPA thresholds for ingress pods match observed RPS and latency metrics.

Example Alert Rules

Below are sample Prometheus-style alert rules that can be configured in CubeAPM. Each rule focuses on a specific ingress reliability signal.

High Error Rate Alert

```yaml
- alert: IngressHigh5xxErrorRate
  expr: |
    sum(rate(nginx_ingress_controller_requests{status=~"5.."}[5m]))
      / sum(rate(nginx_ingress_controller_requests[5m])) > 0.01
  for: 5m
  labels:
    severity: critical
  annotations:
    summary: "High 5xx error rate on Ingress"
    description: "More than 1% of requests are failing with 5xx status for the last 5 minutes."
```

Latency Spike Alert

```yaml
- alert: IngressHighLatency
  expr: |
    histogram_quantile(0.95,
      sum(rate(nginx_ingress_controller_request_duration_seconds_bucket[5m])) by (le)
    ) > 0.5
  for: 10m
  labels:
    severity: warning
  annotations:
    summary: "Ingress latency p95 too high"
    description: "p95 latency exceeded 500ms for more than 10 minutes."
```

TLS Certificate Expiry Alert

```yaml
- alert: IngressTLSCertExpiringSoon
  # 1209600 seconds = 14 days
  expr: (nginx_ingress_controller_ssl_expire_time_seconds - time()) < 1209600
  labels:
    severity: warning
  annotations:
    summary: "Ingress TLS certificate expiring soon"
    description: "TLS certificate will expire within the next 14 days."
```

Pod Restart Alert

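```yaml
- alert: IngressPodRestarts
  # The official ingress-nginx manifests and Helm chart name the container "controller"
  # and deploy it to the "ingress-nginx" namespace; adjust both labels to match your setup.
  expr: increase(kube_pod_container_status_restarts_total{namespace="ingress-nginx", container="controller"}[15m]) > 3
  labels:
    severity: critical
  annotations:
    summary: "Ingress pod restarts too frequently"
    description: "Ingress Controller pod has restarted more than 3 times in the last 15 minutes."
```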

Conclusion

Monitoring Kubernetes Ingress Controllers is essential for keeping applications secure, reliable, and performant. As the entry point to your cluster, the ingress layer directly impacts user experience—whether through latency, routing errors, or expired TLS certificates. Without the right visibility, even small issues can quickly cascade into major outages.

CubeAPM simplifies this challenge by unifying metrics, logs, and traces in one platform. With real-time error tracking, anomaly detection, and smart alerting, teams can catch 5xx spikes, latency bottlenecks, and certificate risks before customers notice.

By adopting CubeAPM, organizations reduce MTTR, improve uptime, and cut monitoring costs significantly. Now is the time to secure your ingress layer—get started with CubeAPM today and ensure your Kubernetes applications remain always-on.

Disclaimer: The information in this article reflects the latest details available at the time of publication and may change as technologies and products evolve.

FAQs

1. How do Kubernetes Ingress Controllers differ from a standard LoadBalancer?

A Service of type LoadBalancer exposes a single application to external traffic, and cloud providers typically provision a separate load balancer for each such Service. An Ingress Controller, on the other hand, centralizes traffic management for multiple services and routes behind one entry point, reducing cost and complexity while adding features like path-based routing and TLS termination.

2. Can I use Prometheus alone to monitor Kubernetes Ingress Controllers?

Prometheus can scrape ingress metrics (like request rates and latency), but it doesn’t provide full correlation with logs and traces. For complete visibility, tools like CubeAPM integrate Prometheus metrics with structured logs and distributed tracing in one platform.

3. What’s the best way to debug 502/503 errors in an Ingress Controller?

Start by checking ingress error logs for backend connection failures, then confirm pod health and autoscaling events. Tools like CubeAPM let you correlate these logs with request traces and error spikes, so you can pinpoint whether the issue lies in ingress rules, TLS, or backend pods.

4. How does ingress monitoring improve security posture?

Ingress logs and metrics surface brute-force attempts, unusual traffic patterns, and TLS handshake errors. Continuous monitoring ensures suspicious traffic is flagged early, and compliance teams can use ingress logs as an audit trail for access patterns and encryption ciphers.

5. Can I monitor multiple Ingress Controllers in the same cluster?

Yes. Many teams run multiple ingress controllers (e.g., NGINX for HTTP apps and Traefik for APIs). Each controller exposes separate metrics endpoints. CubeAPM can ingest data from all of them into unified dashboards, so you get visibility across environments without fragmentation.