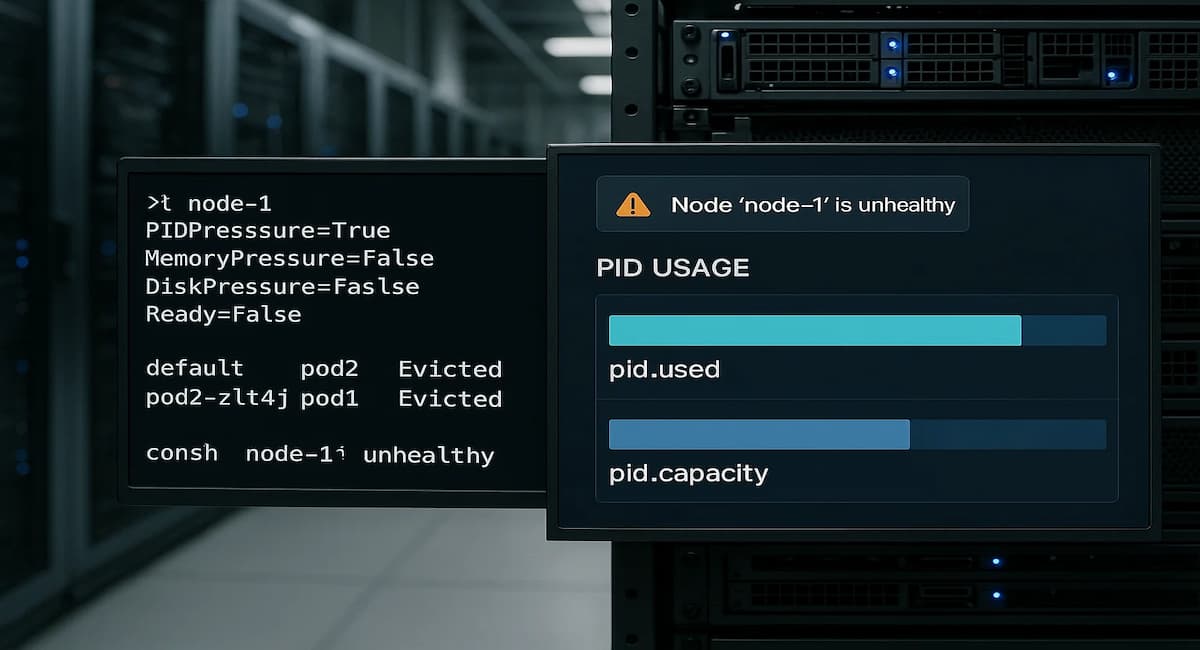

The Kubernetes PID Pressure error occurs when a node runs out of available process IDs (PIDs), preventing new containers from starting and causing workload disruptions. This state often triggers pod evictions, frequent restarts, and degraded cluster performance, especially in high-density or microservice-heavy deployments.

CubeAPM provides deep visibility into Kubernetes PID pressure errors by correlating kernel-level metrics such as pid.capacity and pid.used with kubelet events, eviction logs, and pod lifecycle traces. It enables DevOps teams to identify nodes nearing PID exhaustion, isolate noisy processes, and visualize eviction chains across namespaces, which helps prevent cascading failures and keeps node health consistent.

In this guide, we’ll explain what the Kubernetes PID Pressure Error means, why it happens, how to fix it, and how to monitor it effectively with CubeAPM.

What is Kubernetes PID Pressure Error

In Kubernetes, PID Pressure indicates that a node is running low on available process IDs (PIDs) — the unique identifiers the Linux kernel assigns to every running process. Each container, sidecar, and system service consumes PIDs, and when this pool nears exhaustion, the kubelet marks the node as being under PID pressure.

Once this condition is triggered, Kubernetes takes defensive action to protect node stability. The scheduler stops assigning new pods to the affected node, and the kubelet may start evicting running pods to free up PIDs. This prevents the entire node from freezing but can interrupt active workloads or critical services.
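As a minimal sketch of how this condition can be checked programmatically, the snippet below scans the JSON produced by `kubectl get nodes -o json` for nodes whose PIDPressure condition is True. The function name and the sample data are illustrative assumptions, not part of any Kubernetes client library.

```python
import json
from typing import List


def nodes_under_pid_pressure(node_list: dict) -> List[str]:
    """Return the names of nodes whose PIDPressure condition is "True".

    `node_list` is the parsed output of `kubectl get nodes -o json`.
    """
    flagged = []
    for node in node_list.get("items", []):
        conditions = node.get("status", {}).get("conditions", [])
        for cond in conditions:
            if cond.get("type") == "PIDPressure" and cond.get("status") == "True":
                flagged.append(node["metadata"]["name"])
                break
    return flagged


if __name__ == "__main__":
    # Hypothetical sample; in practice, pipe in real `kubectl get nodes -o json` output.
    sample = json.loads("""
    {"items": [
      {"metadata": {"name": "node-a"},
       "status": {"conditions": [{"type": "PIDPressure", "status": "True"}]}},
      {"metadata": {"name": "node-b"},
       "status": {"conditions": [{"type": "PIDPressure", "status": "False"}]}}
    ]}
    """)
    print(nodes_under_pid_pressure(sample))
```

The same check can be done by eye with `kubectl describe node <node-name>`, which lists PIDPressure among the node conditions.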

PID pressure is often subtle at first but quickly escalates in environments where:

- Large numbers of short-lived containers are spawned.

- Processes fail to terminate properly, leaving zombie processes behind.

- Applications fork too many child processes without cleanup.

Because PID exhaustion occurs at the kernel level, traditional monitoring tools often miss the early warning signals — making proactive detection essential for maintaining healthy Kubernetes nodes.

Why Kubernetes PID Pressure Error Happens

1. Excessive process forking inside containers

Applications that spawn too many child processes without cleanup quickly consume available PIDs on the node. This is common with servers using fork() or exec() calls in tight loops, or misconfigured background jobs.

Quick check:

kubectl exec -it <pod-name> -- ps -eLf | wc -l

If the process count per container is abnormally high, the node may be nearing PID exhaustion.

2. Zombie or orphaned processes not being reaped

When child processes terminate but their parent processes fail to reap them, they become zombies and continue to occupy PIDs. Over time, these accumulate and trigger PID pressure.

Quick check:

kubectl exec -it <pod-name> -- ps aux | grep 'Z'

Zombie processes will appear with a “Z” status; many such entries indicate poor process management within containers.
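Because a plain grep for "Z" also matches any line that merely contains that letter, a stricter check can help. The sketch below assumes the container's ps supports `ps -eo pid,ppid,stat,comm` (minimal images with BusyBox may not) and groups zombies by parent PID, so the process that fails to reap its children stands out. The function name and sample output are hypothetical.

```python
from collections import Counter


def zombies_by_parent(ps_output: str) -> Counter:
    """Count zombie processes per parent PID.

    Expects the text output of `ps -eo pid,ppid,stat,comm`.
    Header lines and malformed rows are skipped.
    """
    counts = Counter()
    for line in ps_output.splitlines():
        fields = line.split(None, 3)
        if len(fields) < 3 or not fields[0].isdigit() or not fields[1].isdigit():
            continue  # header or unexpected row
        stat = fields[2]
        if stat.startswith("Z"):  # the STAT column starts with Z for zombies
            counts[int(fields[1])] += 1
    return counts


if __name__ == "__main__":
    # Hypothetical sample output for illustration only.
    sample = """\
  PID  PPID STAT COMMAND
    1     0 Ss   node
   42     1 Z    worker <defunct>
   43     1 Z+   worker <defunct>
   50     1 Sl   helper
"""
    for ppid, n in zombies_by_parent(sample).most_common():
        print(f"parent {ppid} has {n} zombie children")
```

A parent with a steadily growing zombie count is usually the place to add proper child reaping or an init process.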

3. High container churn or crash loops

Frequent container restarts, image pulls, or job completions create short-lived processes that inflate PID usage. Each crash or restart temporarily consumes additional PIDs before cleanup.

Quick check:

kubectl get pods -A | grep -E 'CrashLoopBackOff|Error'

Multiple pods in CrashLoopBackOff or Error states suggest recurring process creation spikes.
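To rank the noisiest workloads rather than just list them, the restartCount field in each pod's container statuses can be summed. This is a small sketch that assumes the input is the parsed output of `kubectl get pods -A -o json`; the function name and the threshold of 5 restarts are illustrative choices.

```python
from typing import List, Tuple


def restart_heavy_pods(pod_list: dict, min_restarts: int = 5) -> List[Tuple[str, str, int]]:
    """Return (namespace, pod, total restarts) for pods at or above min_restarts.

    Results are sorted with the most frequently restarted pod first.
    """
    results = []
    for pod in pod_list.get("items", []):
        statuses = pod.get("status", {}).get("containerStatuses") or []
        total = sum(cs.get("restartCount", 0) for cs in statuses)
        if total >= min_restarts:
            meta = pod["metadata"]
            results.append((meta.get("namespace", "default"), meta["name"], total))
    return sorted(results, key=lambda r: r[2], reverse=True)


if __name__ == "__main__":
    # Hypothetical sample data for illustration only.
    sample = {"items": [
        {"metadata": {"namespace": "shop", "name": "cart-1"},
         "status": {"containerStatuses": [{"restartCount": 12}]}},
        {"metadata": {"namespace": "shop", "name": "web-1"},
         "status": {"containerStatuses": [{"restartCount": 0}]}},
    ]}
    print(restart_heavy_pods(sample))
```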

4. Overloaded system daemons or runtime shims

Processes from system components like containerd, kubelet, or runtime shims (runc) may accumulate if garbage collection lags behind workload turnover.

Quick check:

ps -ef | grep containerd-shim | wc -l

A large number of shim processes indicates the node runtime is not cleaning up properly.

5. Misconfigured PID cgroup limits

Kubernetes enforces PID limits through the pids.max setting of the Linux pids cgroup controller. When the limit is set too low, even normal workloads can exhaust it prematurely.

Quick check:

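cat /sys/fs/cgroup/pids/pids.max

Compare this value with the total active PIDs. A small limit relative to workload size can cause early PIDPressure conditions. The exact path depends on the node's cgroup version and driver; on cgroup v2 hosts the file sits under the unified /sys/fs/cgroup hierarchy.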
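The comparison described above can be sketched as a small helper that reads pids.current and pids.max from a cgroup directory and returns the usage ratio. The function names are hypothetical, and the directory to pass in is an assumption that varies with the node's cgroup layout. The literal value `max` is what the kernel reports for an unlimited cgroup.

```python
import os
from typing import Optional


def parse_pids_usage(current_text: str, max_text: str) -> Optional[float]:
    """Return pids.current / pids.max, or None when the cgroup is unlimited."""
    current = int(current_text.strip())
    raw_max = max_text.strip()
    if raw_max == "max":  # no limit set on this cgroup
        return None
    limit = int(raw_max)
    if limit == 0:
        return 1.0  # a zero limit is treated as fully used
    return current / limit


def read_pids_usage(cgroup_dir: str) -> Optional[float]:
    """Read both files from a cgroup directory and compute the ratio."""
    with open(os.path.join(cgroup_dir, "pids.current")) as f:
        current_text = f.read()
    with open(os.path.join(cgroup_dir, "pids.max")) as f:
        max_text = f.read()
    return parse_pids_usage(current_text, max_text)


if __name__ == "__main__":
    print(parse_pids_usage("250\n", "1000\n"))  # 0.25
    print(parse_pids_usage("250\n", "max\n"))   # None
```

A ratio that stays above roughly 0.8 for a pod or for the kubepods cgroup is a reasonable point at which to look closer, although that cutoff is a judgment call rather than a Kubernetes default.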

6. Node-level resource leaks or kernel bugs

Certain kernel versions or runtime bugs can cause PID leaks where processes remain in the table even after termination. Over time, these invisible leaks reduce available PIDs.

Quick check:

cat /proc/sys/kernel/pid_max

cat /proc/sys/kernel/ns_last_pid

If ns_last_pid consistently sits close to pid_max while the active process count stays low, it may point to a kernel PID leak.
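For the "active process count" side of that comparison, note that threads draw from the same ID space as processes, so counting tasks gives a more faithful number than counting processes. The following is a rough sketch that walks a procfs tree; the function name and the `proc_root` parameter (handy for testing) are assumptions made for illustration.

```python
import os


def count_tasks(proc_root: str = "/proc") -> int:
    """Count all tasks (threads) by listing <proc_root>/<pid>/task for each PID."""
    total = 0
    for entry in os.listdir(proc_root):
        if not entry.isdigit():
            continue  # skip non-process entries such as "self" or "sys"
        task_dir = os.path.join(proc_root, entry, "task")
        try:
            total += len(os.listdir(task_dir))
        except OSError:
            # The process exited between the two listings, or access was denied.
            continue
    return total


if __name__ == "__main__":
    pid_max_path = "/proc/sys/kernel/pid_max"
    if os.path.exists(pid_max_path):  # only meaningful on a Linux host
        with open(pid_max_path) as f:
            pid_max = int(f.read())
        tasks = count_tasks()
        print(f"{tasks} tasks in use out of pid_max={pid_max} ({tasks / pid_max:.1%})")
```

Run on the node itself (or in a pod with hostPID enabled), this gives a number that can be compared directly with pid_max.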

How to Fix Kubernetes PID Pressure Error

Fixing this issue requires validating each possible failure point. Here’s a step-by-step approach with real fixes and code snippets.

1. Remove zombie or orphaned processes

Zombie processes keep PIDs allocated even after they’ve stopped running, causing PID exhaustion.

Quick check:

kubectl exec -it <pod-name> -- ps aux | grep 'Z'

Fix: Restart affected pods to release stuck PIDs and ensure your applications properly reap child processes.

kubectl delete pod <pod-name> --grace-period=0 --force

2. Limit excessive process forking

Applications that spawn unbounded child processes (e.g., workers or cron jobs) rapidly deplete available PIDs.

Quick check:

kubectl exec -it <pod-name> -- ps -eLf | wc -l

Fix: Update application code or configuration to limit forking, or scale horizontally instead of vertically. Restart the workload after adjustment.

kubectl rollout restart deployment <deployment-name> -n <namespace>

3. Increase PID allocation on the node

If legitimate workloads need more processes, increase the kernel PID limit.

Fix:

sudo sysctl -w kernel.pid_max=131072

To persist the change:

echo "kernel.pid_max=131072" | sudo tee -a /etc/sysctl.conf && sudo sysctl -p

4. Tune kubelet eviction thresholds

The kubelet can prematurely evict pods when PID availability drops. Adjust the pid.available threshold to give nodes more headroom.

Fix:

Edit the kubelet config file /var/lib/kubelet/config.yaml:

evictionHard:
  pid.available: "1000"
evictionPressureTransitionPeriod: 5m

Then restart kubelet:

sudo systemctl restart kubelet

5. Restart container runtime daemons

Stuck container runtime shims (containerd-shim, runc) can retain PIDs after container exit.

Fix:

sudo systemctl restart containerd

If PID pressure resolves, upgrade to a newer runtime version to prevent recurrence.

6. Enforce per-pod PID limits

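Prevent a single pod from consuming too many PIDs by setting an explicit per-pod limit. Kubernetes does not expose PIDs as a container resource under resources.limits; the limit is configured on the kubelet through the podPidsLimit setting.

Fix:

podPidsLimit: 1000

Add this to the kubelet config file (/var/lib/kubelet/config.yaml) and restart the kubelet. Every pod on that node is then capped at the same number of PIDs, which keeps one workload from starving the rest.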

7. Reboot affected nodes

If PID counts remain abnormally high after cleanup, rebooting resets the kernel’s process table and clears leaked entries.

Fix:

kubectl drain <node-name> --ignore-daemonsets

sudo reboot

kubectl uncordon <node-name>

8. Implement proactive PID monitoring

Once fixed, monitor metrics like pid.used, pid.capacity, and eviction counts to catch early pressure signals. Use alerting thresholds to prevent future disruptions.
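As one possible way to reason about such thresholds, the sketch below classifies a node from its used and total PID counts. The function name and the 80% warning level are assumptions chosen for illustration; the 90% critical level mirrors the alert example later in this guide.

```python
def pid_alert_level(used: int, capacity: int, warn: float = 0.8, crit: float = 0.9) -> str:
    """Classify PID utilization as "ok", "warning", or "critical".

    `warn` and `crit` are fractions of capacity and are illustrative defaults.
    """
    if capacity <= 0:
        raise ValueError("capacity must be positive")
    ratio = used / capacity
    if ratio >= crit:
        return "critical"
    if ratio >= warn:
        return "warning"
    return "ok"


if __name__ == "__main__":
    # Hypothetical readings against a pid_max of 32768.
    for used in (1000, 27000, 29500):
        print(used, pid_alert_level(used, 32768))
```

Keeping a warning tier below the critical one leaves time to find the offending workload before the kubelet starts evicting pods.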

Monitoring Kubernetes PID Pressure Error with CubeAPM

Detecting and diagnosing PID pressure quickly requires correlating kernel signals, kubelet events, and pod lifecycle logs. CubeAPM simplifies this by combining four signal streams — Metrics, Events, Logs, and Rollouts — to identify which node or container is exhausting PIDs. Instead of relying on multiple tools, CubeAPM surfaces metrics like pid.used, pid.capacity, and pod_evictions_total alongside the exact workload or process responsible.

Step 1 — Install CubeAPM using Helm

Deploy CubeAPM in your cluster for full MELT (Metrics, Events, Logs, Traces) observability.

helm repo add cubeapm https://charts.cubeapm.com && helm install cubeapm cubeapm/cubeapm --namespace cubeapm --create-namespace

To upgrade later:

helm upgrade cubeapm cubeapm/cubeapm -n cubeapm

You can customize configuration in values.yaml to adjust exporters, retention, and sampling.

Step 2 — Deploy the OpenTelemetry Collector (DaemonSet + Deployment)

CubeAPM uses the DaemonSet to collect node-level data such as PID metrics, kubelet conditions, and container counts. The Deployment handles centralized log and trace pipelines.

helm install cube-otel cubeapm/opentelemetry-collector --namespace cubeapm

Both collectors work together to forward correlated data to CubeAPM for unified analysis.

Step 3 — Collector Configs Focused on PID Pressure

DaemonSet Config (node-level PID metrics):

receivers:
  hostmetrics:
    collection_interval: 30s
    scrapers:
      processes:
      load:
processors:
  batch:
exporters:
  otlp:
    endpoint: cubeapm.cubeapm.svc.cluster.local:4317
service:
  pipelines:
    metrics:
      receivers: [hostmetrics]
      processors: [batch]
      exporters: [otlp]

- hostmetrics: Collects PID usage (pid.used, pid.capacity) and node load metrics

- batch: Aggregates samples efficiently before export

- otlp: Sends metrics to CubeAPM for visualization

Deployment Config (events and logs):

receivers:
  k8s_events:
  filelog:
    include: ["/var/log/kubelet.log"]
processors:
  attributes:
  batch:
exporters:
  otlp:
    endpoint: cubeapm.cubeapm.svc.cluster.local:4317
service:
  pipelines:
    logs:
      receivers: [filelog, k8s_events]
      processors: [attributes, batch]
      exporters: [otlp]

- k8s_events: Captures eviction and PID pressure events from kubelet

- filelog: Streams kubelet logs indicating PID-related system messages

- attributes: Adds node, namespace, and workload identifiers to logs

Step 4 — Supporting Components

Add Kubernetes state metrics for workload correlation and context.

helm install kube-state-metrics prometheus-community/kube-state-metrics -n cubeapm

This enhances visibility into pod restarts, namespace metadata, and deployment relationships during PID pressure events.

Step 5 — Verification Checklist

After deploying CubeAPM, verify the setup:

- Events: PIDPressure events are visible in the Kubernetes Events view.

- Metrics: pid.used, pid.capacity, and pid.available metrics appear in Node Metrics dashboards.

- Logs: Kubelet eviction logs (e.g., “PIDPressure node condition set”) appear in the log explorer.

- Restarts: Pod restart counts increase alongside PID usage spikes.

- Rollouts: Deployment timelines show which rollout triggered PID pressure.

With these signals aligned, CubeAPM automatically correlates the affected node, namespace, and workloads, giving teams full visibility into PID pressure root causes and propagation.

Example Alert Rules for Kubernetes PID Pressure Error

Alerting on PID usage and eviction trends helps teams react before the cluster enters an unrecoverable state. Below are practical alert examples tailored for detecting PID pressure early.

1. Alert: High PID Usage on Node

This rule triggers when PID usage exceeds 90% of total available process IDs, indicating imminent PID pressure.

alert: HighPIDUsage
expr: (pid_used / pid_capacity) > 0.9
for: 2m
labels:
  severity: warning
annotations:
  summary: "High PID usage on {{ $labels.node }}"
  description: "Node {{ $labels.node }} has reached {{ $value | humanizePercentage }} of its PID capacity. Investigate active processes before eviction occurs."

2. Alert: Node Under PID Pressure

This alert fires when the kubelet reports the PIDPressure condition as true, signaling that the node is no longer schedulable for new pods and that evictions may follow.

alert: NodePIDPressure
expr: kube_node_status_condition{condition="PIDPressure",status="true"} == 1
for: 1m
labels:
  severity: critical
annotations:
  summary: "Node under PID Pressure"
  description: "Kubelet on {{ $labels.node }} reports PIDPressure=True. Pods may be evicted or unschedulable."

3. Alert: Frequent Pod Evictions Due to PID Pressure

This alert detects repeated pod evictions tied to PID exhaustion within a short period.

alert: HighPIDEvictions
expr: increase(kube_pod_container_status_terminated_reason{reason="Evicted"}[5m]) > 3
labels:
  severity: warning
annotations:
  summary: "Frequent PID-related pod evictions"
  description: "Multiple pods have been evicted due to PIDPressure within the last 5 minutes. Review node process usage and system daemons."

Conclusion

The Kubernetes PID Pressure error signals that a node is running out of process IDs, leading to pod evictions and halted workloads. Without early visibility, this can quickly impact cluster reliability.

CubeAPM helps teams detect PID pressure instantly by correlating kernel metrics, kubelet events, and pod lifecycle data in one place. It highlights which node or process is causing exhaustion before it escalates.

Start monitoring Kubernetes PID pressure with CubeAPM today to keep nodes stable, prevent evictions, and maintain smooth cluster operations.

FAQs

1. What does PID pressure mean in Kubernetes?

The Kubernetes PID Pressure Error occurs when a node runs out of available process IDs (PIDs), stopping new pods from starting. CubeAPM helps detect this early by tracking kernel-level PID usage and kubelet events across all nodes.

2. What causes PID pressure on a node?

PID pressure is typically caused by unbounded process forking, zombie processes, or low PID limits (the kernel's pid_max or a cgroup's pids.max). With CubeAPM, you can visualize process spikes and identify which workloads or daemons are exhausting PIDs in real time.

3. How do I prevent PID pressure in Kubernetes?

You can prevent PID pressure by tuning system PID limits, cleaning up zombie processes, and enforcing per-pod PID limits. CubeAPM provides proactive alerts and dashboards to detect rising PID usage before evictions occur.

4. How can CubeAPM help with PID pressure monitoring?

CubeAPM correlates PID metrics, kubelet logs, and pod lifecycle data to reveal the root cause of PID exhaustion. It links metrics like pid.used and pid.capacity to affected workloads, helping teams resolve issues faster.

5. Is PID pressure related to CPU or memory pressure?

No. While CPU and memory pressure deal with compute and memory resources, PID pressure is a kernel-level exhaustion of process IDs. CubeAPM helps monitor all three conditions together for complete node health visibility.