The “Kubernetes Pod Resource Quota Exceeded” error occurs when creating or scaling a workload would push a namespace past the CPU, memory, or storage limits set by its ResourceQuota. It’s a common operational challenge in large clusters where strict quotas control resource usage. Such violations can block new Pod deployments, leave workloads running with fewer replicas than intended, or even halt CI/CD rollouts.

CubeAPM, an OpenTelemetry-native observability platform, helps teams detect and resolve quota breaches instantly. It correlates Events, Metrics, Logs, and Rollouts to show which Pods are violating limits and how those violations affect overall cluster performance. With CubeAPM, teams can visualize resource utilization per namespace and adjust limits before performance degrades.

In this guide, we’ll explore what the Pod Resource Quota Exceeded error means, why it happens, how to fix it, and how to monitor it efficiently with CubeAPM.

What Is the Kubernetes Pod Resource Quota Exceeded Error

The Kubernetes Pod Resource Quota Exceeded error appears when a new Pod or PersistentVolumeClaim would push the namespace's total CPU, memory, or storage requests or limits past the hard limits defined in its ResourceQuota. These quotas are designed to prevent a single team or service from monopolizing cluster resources and to maintain stability across multi-tenant environments.

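When a Pod’s resource request would take the namespace beyond these limits, the API server's ResourceQuota admission check rejects the create request with a “forbidden: exceeded quota” error. Because the Pod object is never created, it does not appear as Pending. Instead, the owning ReplicaSet, StatefulSet, or Job records “FailedCreate” events and the workload stays below its desired replica count. As a result, deployments can fail and automation pipelines may be interrupted until resource quotas or requests are adjusted.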

Key Characteristics of Kubernetes Pod Resource Quota Exceeded Error:

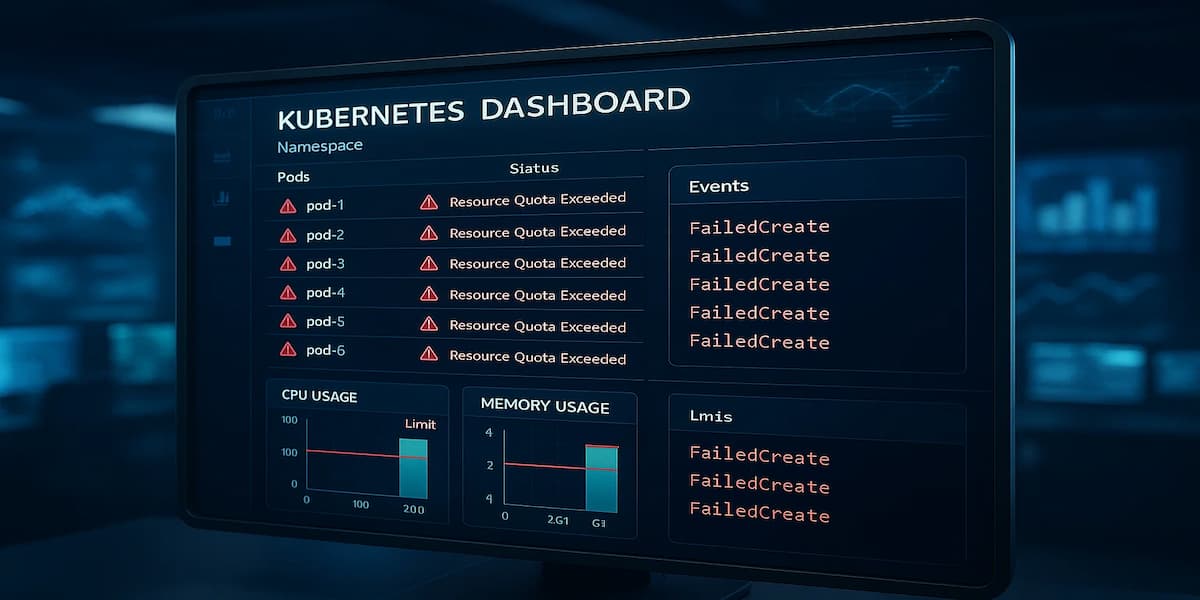

- Pod creation is rejected with an error containing “is forbidden: exceeded quota”, and the owning controller records “FailedCreate” events.

- The namespace's quota usage (“Used”) sits at or near the “Hard” limit for CPU, memory, or storage.

- CI/CD rollouts may stop midway due to blocked Pod creation.

- Quota dashboards show namespace-level requests at the ceiling, even when actual CPU and memory utilization is lower.

- Deployments stay below their desired replica count even though nodes have spare capacity.

Why Kubernetes Pod Resource Quota Exceeded Error Happens

1. Namespace Resource Limits Reached

When a namespace reaches its defined CPU, memory, or storage limits, new Pods that request additional resources cannot be created. This is the most common trigger for the Kubernetes Pod Resource Quota Exceeded error, especially in tightly controlled production environments.

2. Excessive Resource Requests in Pod Specs

Developers may set requests and limits that exceed what the namespace quota allows. For instance, requesting 4 vCPUs in a namespace capped at 2 vCPUs causes immediate rejection from the API server.
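The rejection comes down to simple arithmetic: the quota admits a new Pod only if current usage plus the Pod's request stays within the hard limit. Here is a minimal Python sketch of that check, working in millicores. It deliberately ignores init containers and Pod overhead, and the function names are illustrative, not part of any Kubernetes API.

```python
def pod_cpu_request_m(container_requests_m: list[int]) -> int:
    """Sum per-container CPU requests (millicores) into a Pod total.

    Simplification: init containers and Pod overhead are ignored here.
    """
    return sum(container_requests_m)


def quota_admits(used_m: int, hard_m: int, pod_request_m: int) -> bool:
    """Return True if creating the Pod keeps namespace usage within the hard limit."""
    return used_m + pod_request_m <= hard_m


if __name__ == "__main__":
    hard = 2000  # namespace quota: 2 CPUs, expressed in millicores
    used = 0     # nothing running yet
    pod = pod_cpu_request_m([4000])  # one container requesting 4 CPUs
    print(quota_admits(used, hard, pod))  # False: 0 + 4000 > 2000
```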

3. Persistent Volume or Storage Quota Exhaustion

If the namespace has a storage quota and existing PersistentVolumeClaims (PVCs) already use most of it, new volume claims will fail. This prevents Pods from mounting additional volumes until space is released or quotas are expanded.

4. Aggregate Usage from Multiple Deployments

In shared namespaces, multiple Deployments or StatefulSets can collectively consume resources close to the quota ceiling. Adding new replicas or workloads pushes usage past the threshold, triggering the error for new Pods.
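In a shared namespace, the remaining headroom is whatever the hard limit leaves after adding up replicas × per-Pod request across every workload. The following rough Python sketch estimates how many more replicas of a new workload would still fit. The Deployments and numbers are hypothetical.

```python
from dataclasses import dataclass


@dataclass
class Workload:
    name: str
    replicas: int
    cpu_request_m: int  # per-Pod CPU request in millicores


def namespace_cpu_used_m(workloads: list[Workload]) -> int:
    """Aggregate CPU requests counted against the quota (replicas x per-Pod request)."""
    return sum(w.replicas * w.cpu_request_m for w in workloads)


def replicas_that_fit(hard_m: int, used_m: int, per_pod_m: int) -> int:
    """How many additional Pods of size per_pod_m fit before the quota is hit."""
    if per_pod_m <= 0:
        raise ValueError("per_pod_m must be positive")
    return max(0, (hard_m - used_m) // per_pod_m)


if __name__ == "__main__":
    # Hypothetical namespace with an 8-CPU quota and two existing Deployments.
    existing = [Workload("api", 3, 1000), Workload("worker", 4, 500)]
    used = namespace_cpu_used_m(existing)       # 3000 + 2000 = 5000m
    print(replicas_that_fit(8000, used, 1500))  # 2: a third 1500m replica would exceed the quota
```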

5. Misconfigured ResourceQuota or LimitRange Objects

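Incorrectly defined ResourceQuota or LimitRange objects can impose unrealistic limits. For example, writing a CPU quota as “10m” (10 millicores, or 0.01 CPU) when you meant “10” (10 CPUs) shrinks available capacity a thousandfold and causes quota violations almost immediately. Similarly, a LimitRange that assigns large default requests to containers that don't set their own can use up the quota faster than expected. Conversely, if a quota covers CPU or memory and no LimitRange supplies defaults, Pods that omit requests or limits for those resources are rejected outright.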
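The unit mix-up becomes obvious once both values are converted to a common unit. This small Python helper is a sketch that handles only the two CPU forms discussed here: plain cores such as “10” or “0.5”, and millicores such as “10m”. It is not a full parser for the Kubernetes quantity syntax.

```python
import math
from decimal import Decimal


def cpu_to_millicores(quantity: str) -> int:
    """Convert a CPU quantity like "10", "0.5", or "250m" to millicores.

    Sketch only: other Kubernetes quantity suffixes are not handled, and
    fractions of a millicore are rounded up.
    """
    q = quantity.strip()
    if q.endswith("m"):
        return int(q[:-1])
    return math.ceil(Decimal(q) * 1000)


if __name__ == "__main__":
    intended = cpu_to_millicores("10")   # 10 CPUs
    mistyped = cpu_to_millicores("10m")  # 10 millicores
    print(intended, mistyped, intended // mistyped)  # 10000 10 1000
```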

6. Stale Resource Accounting

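Quota usage is not always updated the instant resources are released. Pods that are still terminating continue to count against the quota for a while after deletion. The resource quota controller in kube-controller-manager also recalculates usage on a periodic resync, controlled by the --resource-quota-sync-period flag (5 minutes by default). Until usage catches up, the namespace can appear to be at its quota even though resources have been freed.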

How to Fix Kubernetes Pod Resource Quota Exceeded Error

Fixing Kubernetes Pod Resource Quota Exceeded error involves inspecting namespace quotas, adjusting Pod configurations, and reclaiming unused resources. Follow these steps to resolve the issue effectively:

1. Identify the Resource Causing the Violation

When a namespace exceeds its resource quota, Kubernetes blocks new Pod creation. The first step is to identify which specific quota (CPU, memory, or storage) is overused.

Quick check:

```bash
kubectl describe resourcequota -n <namespace>
```

Fix: Review the “Used” vs “Hard” values in the output and plan a quota adjustment accordingly.
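When a namespace has several quotas or tracks many resources, it helps to rank them by how close each one is to its limit. The Python sketch below reads the JSON output of kubectl get resourcequota -o json for the namespace, saved to a file, and prints the used/hard ratio for each resource. The quantity parser covers common decimal and binary suffixes only, so treat it as a simplification of the full Kubernetes quantity format. The script name in the usage comment is hypothetical.

```python
import json
import sys
from decimal import Decimal

# Multipliers for common Kubernetes quantity suffixes (simplified subset).
_SUFFIXES = {
    "Ki": Decimal(2) ** 10, "Mi": Decimal(2) ** 20, "Gi": Decimal(2) ** 30,
    "Ti": Decimal(2) ** 40, "Pi": Decimal(2) ** 50, "Ei": Decimal(2) ** 60,
    "m": Decimal("0.001"), "k": Decimal(10) ** 3, "M": Decimal(10) ** 6,
    "G": Decimal(10) ** 9, "T": Decimal(10) ** 12, "P": Decimal(10) ** 15,
    "E": Decimal(10) ** 18,
}


def parse_quantity(q: str) -> Decimal:
    """Convert a quantity such as "1500m", "20Gi", or "10" to a plain Decimal."""
    for suffix, factor in _SUFFIXES.items():
        if q.endswith(suffix):
            return Decimal(q[: -len(suffix)]) * factor
    return Decimal(q)


def quota_ratios(quota_list: dict) -> list[tuple[str, str, float]]:
    """Return (quota, resource, used/hard) tuples, highest ratio first."""
    rows = []
    for item in quota_list.get("items", []):
        name = item["metadata"]["name"]
        status = item.get("status", {})
        used = status.get("used", {})
        for resource, hard_q in status.get("hard", {}).items():
            hard = parse_quantity(hard_q)
            if hard == 0:
                continue  # a zero hard limit blocks the resource entirely; skip to avoid dividing by zero
            ratio = parse_quantity(used.get(resource, "0")) / hard
            rows.append((name, resource, float(ratio)))
    return sorted(rows, key=lambda row: row[2], reverse=True)


if __name__ == "__main__" and len(sys.argv) > 1:
    # Usage: kubectl get resourcequota -n <namespace> -o json > quotas.json
    #        python3 quota_ratios.py quotas.json
    with open(sys.argv[1]) as f:
        for name, resource, ratio in quota_ratios(json.load(f)):
            print(f"{name:<20} {resource:<24} {ratio:7.1%}")
```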

To inspect the full quota definition before changing it:

```bash
kubectl get resourcequota <quota-name> -n <namespace> -o yaml
```

2. Reduce Pod Resource Requests

Pods may request excessive CPU or memory resources, exhausting the namespace quota. Lowering requests and limits for non-critical Pods helps release quota capacity.

Quick check:

```bash
kubectl get pods -n <namespace> -o=jsonpath='{range .items[*]}{.metadata.name}{" => "}{.spec.containers[*].resources.requests}{"\n"}{end}'
```

Fix: Edit your deployment manifests to reduce requests and limits, then apply the changes.
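To estimate how much quota a resize frees up, multiply the per-Pod reduction by the replica count. The Python sketch below uses hypothetical numbers: 3 replicas shrinking from 1000m/1024Mi to the 250m/256Mi values used in the patch command. Keep in mind that the savings only appear once the rollout has replaced the old Pods. With the default RollingUpdate strategy, the first new Pod may need some free quota of its own before any old Pod is removed.

```python
from dataclasses import dataclass


@dataclass
class PodSize:
    cpu_m: int   # per-Pod CPU request, millicores
    mem_mi: int  # per-Pod memory request, MiB


def quota_released(old: PodSize, new: PodSize, replicas: int) -> PodSize:
    """Quota freed once every replica runs with the smaller requests."""
    return PodSize(
        cpu_m=(old.cpu_m - new.cpu_m) * replicas,
        mem_mi=(old.mem_mi - new.mem_mi) * replicas,
    )


if __name__ == "__main__":
    freed = quota_released(PodSize(1000, 1024), PodSize(250, 256), replicas=3)
    print(freed)  # PodSize(cpu_m=2250, mem_mi=2304)
```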

For a quick in-place change, you can patch a single container's requests and limits:

```bash
kubectl patch deployment <name> -n <namespace> -p '{"spec":{"template":{"spec":{"containers":[{"name":"<container>","resources":{"requests":{"cpu":"250m","memory":"256Mi"},"limits":{"cpu":"500m","memory":"512Mi"}}}]}}}}'
```

3. Scale Down Idle or Non-Production Pods

Idle or test workloads can occupy quota space unnecessarily. Scaling them down helps free up CPU and memory for active Pods.

Quick check:

```bash
kubectl top pods -n <namespace>
```

Note that kubectl top relies on metrics-server being installed in the cluster.

Fix: Scale down unused Deployments or StatefulSets temporarily.
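To shortlist scale-down candidates, you can scan the kubectl top output for Pods that use almost no CPU. The Python sketch below parses that table from a string. The 5m threshold is an arbitrary assumption, and a single sample is a weak signal, so confirm that a workload is truly idle and non-critical before scaling it to zero.

```python
def low_cpu_pods(top_output: str, threshold_m: int = 5) -> list[str]:
    """Return Pod names whose CPU(cores) column is below threshold_m millicores.

    Expects the default namespaced table: NAME, CPU(cores), MEMORY(bytes).
    """
    names = []
    for line in top_output.strip().splitlines():
        fields = line.split()
        if len(fields) < 3 or fields[0] == "NAME":
            continue  # skip the header and malformed lines
        cpu = fields[1]
        cpu_m = int(cpu[:-1]) if cpu.endswith("m") else int(float(cpu) * 1000)
        if cpu_m < threshold_m:
            names.append(fields[0])
    return names


if __name__ == "__main__":
    sample = """NAME                      CPU(cores)   MEMORY(bytes)
api-7c9d8f6b5-x2k4p       120m         210Mi
preview-5f4d7c8b9-qq8rt   1m           35Mi
"""
    print(low_cpu_pods(sample))  # ['preview-5f4d7c8b9-qq8rt']
```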

```bash
kubectl scale deployment <deployment-name> --replicas=0 -n <namespace>
```

4. Expand Namespace Resource Quotas

If workloads genuinely require more resources, you can safely expand the namespace quota with admin privileges.
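Before raising a quota, it helps to size the new value from current usage plus planned growth rather than guessing. Here is a minimal Python sketch that assumes a 20% headroom policy and rounds up to whole CPUs. The 20% figure is an arbitrary example, not a Kubernetes default.

```python
def suggested_cpu_hard(used_m: int, planned_m: int, headroom_pct: int = 20) -> int:
    """Suggest a requests.cpu hard limit, in whole CPUs.

    used_m:       current "Used" value from kubectl describe resourcequota (millicores)
    planned_m:    extra requests you expect to add (millicores)
    headroom_pct: safety margin on top of the total (hypothetical policy)
    """
    needed = (used_m + planned_m) * (100 + headroom_pct)  # millicores scaled by 100
    return -(-needed // (100 * 1000))  # ceiling division to whole CPUs


if __name__ == "__main__":
    # 8 CPUs in use, 1.5 CPUs of new workloads planned: 9.5 x 1.2 = 11.4, rounded up to 12
    print(suggested_cpu_hard(8000, 1500))  # 12
```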

Quick check:

```bash
kubectl get resourcequota -n <namespace> -o yaml
```

Fix: Update the ResourceQuota definition and reapply it.

```bash
kubectl apply -f updated-resourcequota.yaml
```

Example snippet inside updated-resourcequota.yaml:

```yaml
apiVersion: v1
kind: ResourceQuota
metadata:
  name: team-quota
  namespace: dev
spec:
  hard:
    requests.cpu: "10"
    requests.memory: 20Gi
    limits.cpu: "15"
    limits.memory: 30Gi
```

5. Clean Up Unused Persistent Volumes

Old or unreferenced PersistentVolumeClaims (PVCs) can quickly consume storage quotas. Removing them frees up space for new workloads.

Quick check:

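```bash
kubectl get pvc -n <namespace>
```

Fix: Delete unused PVCs only after verifying that no Pod mounts them. Depending on the StorageClass reclaim policy, deleting a PVC can also delete the underlying volume and its data, so back up anything you still need first.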
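A PVC that no Pod mounts is a likely cleanup candidate. The Python sketch below compares the JSON output of kubectl get pvc -o json and kubectl get pods -o json for the namespace, each saved to a file. The file and script names in the usage comment are hypothetical. The check only sees Pods that exist right now, so it will also flag a claim used by a StatefulSet scaled to zero or by a CronJob between runs. Every result therefore still needs a manual check.

```python
import json
import sys


def unreferenced_pvcs(pvc_list: dict, pod_list: dict) -> list[str]:
    """Names of PVCs that no current Pod references in spec.volumes."""
    in_use = set()
    for pod in pod_list.get("items", []):
        for volume in pod.get("spec", {}).get("volumes") or []:
            claim = volume.get("persistentVolumeClaim")
            if claim:
                in_use.add(claim["claimName"])
    return sorted(
        pvc["metadata"]["name"]
        for pvc in pvc_list.get("items", [])
        if pvc["metadata"]["name"] not in in_use
    )


if __name__ == "__main__" and len(sys.argv) > 2:
    # Usage: kubectl get pvc -n <namespace> -o json > pvcs.json
    #        kubectl get pods -n <namespace> -o json > pods.json
    #        python3 unreferenced_pvcs.py pvcs.json pods.json
    with open(sys.argv[1]) as f_pvc, open(sys.argv[2]) as f_pods:
        for name in unreferenced_pvcs(json.load(f_pvc), json.load(f_pods)):
            print(name)
```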

Then delete each claim you have confirmed is unused:

```bash
kubectl delete pvc <pvc-name> -n <namespace>
```

6. Wait for Resource Cache Sync

After deleting Pods or PVCs, Kubernetes controllers may take time to update usage metrics. Waiting for the sync helps clear false positives.
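If you script your cleanup steps, you can poll the quota instead of re-running the check by hand. This Python sketch retries until usage plus the request you want to place fits under the hard limit, or gives up after a timeout. fetch_used is a hypothetical callable you would supply, for example one that runs kubectl and parses the “Used” value. The 30-second interval and 10-minute timeout are arbitrary defaults.

```python
import time
from typing import Callable


def wait_for_headroom(
    fetch_used: Callable[[], int],
    hard: int,
    needed: int,
    timeout_s: float = 600.0,
    interval_s: float = 30.0,
) -> bool:
    """Poll until fetch_used() + needed <= hard (same units); return False on timeout."""
    deadline = time.monotonic() + timeout_s
    while True:
        if fetch_used() + needed <= hard:
            return True
        if time.monotonic() >= deadline:
            return False
        time.sleep(interval_s)


if __name__ == "__main__":
    # Simulated readings in millicores: usage drops once the quota controller catches up.
    readings = iter([2000, 2000, 1500])
    print(wait_for_headroom(lambda: next(readings), hard=2000, needed=500, interval_s=0))  # True
```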

Quick check:

Re-run the quota check after a few minutes:

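```bash
kubectl describe resourcequota -n <namespace>
```

Fix: If usage still looks outdated after the resync period, check for Pods stuck in Terminating. These can keep counting against the quota for a while after deletion:

```bash
kubectl get pods -n <namespace>
```

Restarting the controller manager is rarely necessary and often not possible. On kubeadm-based clusters, kube-controller-manager runs as a static Pod defined in /etc/kubernetes/manifests on the control-plane node rather than as a Deployment, so kubectl rollout restart does not apply. On managed services such as EKS, GKE, or AKS, the control plane is not accessible at all.

Monitoring Kubernetes Pod Resource Quota Exceeded Error with CubeAPM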

The fastest way to identify and prevent “Pod Resource Quota Exceeded” issues is by continuously correlating Events, Metrics, Logs, and Rollouts across namespaces.

CubeAPM gives you this correlation out of the box — linking Kubernetes resource metrics with real-time events to show which workloads are breaching limits, which quotas are full, and how these violations impact cluster scheduling.

Step 1 — Install CubeAPM (Helm)

CubeAPM provides a lightweight Helm chart that deploys the core observability stack with default values optimized for Kubernetes workloads.

```bash
helm repo add cubeapm https://helm.cubeapm.com
helm install cubeapm cubeapm/cubeapm --namespace cubeapm --create-namespace
```

To update existing deployments:

```bash
helm upgrade cubeapm cubeapm/cubeapm -n cubeapm
```

You can customize namespace-level resource tracking in values.yaml to match your cluster’s quota configurations.

Step 2 — Deploy the OpenTelemetry Collector (DaemonSet + Deployment)

CubeAPM uses a dual-collector model:

DaemonSet collectors scrape node- and Pod-level metrics (CPU, memory, storage) from the kubelet on each node.

```bash
helm install cube-otel-daemon cubeapm/opentelemetry-collector --set mode=daemonset -n cubeapm
```

A Deployment collector gathers cluster-wide data, such as ResourceQuota metrics and Kubernetes events, and exports it. Run it as a single replica so that the same data is not collected twice.

```bash
helm install cube-otel-main cubeapm/opentelemetry-collector --set mode=deployment -n cubeapm
```

This setup ensures both node metrics and cluster events related to quota usage are captured in real time.

Step 3 — Collector Configs Focused on Pod Resource Quota Exceeded

DaemonSet Config (metrics + kubelet receiver)

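```yaml
receivers:
  kubeletstats:
    collection_interval: 30s
    # Authenticate to the node's kubelet with the collector's service account
    # (requires RBAC "get" on nodes/stats). K8S_NODE_NAME must be injected
    # from spec.nodeName via the Downward API.
    auth_type: serviceAccount
    endpoint: "https://${env:K8S_NODE_NAME}:10250"
    insecure_skip_verify: true  # kubelet serving certs are often self-signed
    metric_groups: [container, pod]

processors:
  batch: {}

exporters:
  otlp:
    endpoint: cubeapm.cubeapm.svc.cluster.local:4317
    # If this endpoint serves plaintext gRPC, also set:
    # tls:
    #   insecure: true

service:
  pipelines:
    metrics:
      receivers: [kubeletstats]
      processors: [batch]
      exporters: [otlp]
```

- kubeletstats receiver captures per-Pod and per-container CPU/memory usage.

- batch processor optimizes data transmission to reduce overhead.

- OTLP exporter forwards the data to CubeAPM.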

Deployment Config (events + state metrics)

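```yaml
receivers:
  # Kubernetes events; omitting "namespaces" watches all namespaces.
  k8s_events: {}
  # Cluster-level metrics, including ResourceQuota hard limits and usage.
  k8s_cluster:
    collection_interval: 60s

processors:
  filter/quota:
    metrics:
      include:
        match_type: strict
        metric_names:
          - k8s.resource_quota.hard_limit
          - k8s.resource_quota.used

exporters:
  otlp:
    endpoint: cubeapm.cubeapm.svc.cluster.local:4317

service:
  pipelines:
    metrics:
      receivers: [k8s_cluster]
      processors: [filter/quota]
      exporters: [otlp]
    logs:
      receivers: [k8s_events]
      exporters: [otlp]
```

- k8s_cluster receiver reports each ResourceQuota's hard limits and current usage per namespace.

- filter processor keeps only the quota-specific metrics.

- k8s_events receiver streams “FailedCreate” events, including those whose message contains “exceeded quota”, to CubeAPM.

Both receivers need cluster-wide RBAC permissions to list and watch the objects they report on.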

Step 4 — Supporting Components (Optional)

For deeper context, deploy kube-state-metrics to enhance visibility into ResourceQuota objects:

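```bash
helm repo add prometheus-community https://prometheus-community.github.io/helm-charts
helm install kube-state-metrics prometheus-community/kube-state-metrics -n cubeapm
```

This exposes the kube_resourcequota metric, labeled by namespace, quota name (resourcequota), resource, and type (“used” or “hard”), so that used vs hard values for each quota can be charted in CubeAPM dashboards.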

Step 5 — Verification (What You Should See in CubeAPM)

Once the setup is complete, verify that CubeAPM is receiving correlated quota data across metrics, events, and logs.

In CubeAPM you should see:

- Events: “Exceeded quota” and “FailedCreate” messages tied to specific namespaces.

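- Metrics: Quota usage and hard limits over time (k8s.resource_quota.used and k8s.resource_quota.hard_limit from the collector, or kube_resourcequota with type “used” or “hard” from kube-state-metrics).

- Logs: Controller messages showing rejected Pod creations.

- Restarts: Drops in available replicas, or replicas that were never created, visible in rollout timelines.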

- Rollouts: Linked context showing which deployments triggered the quota violation.

Example Alert Rules for Kubernetes Pod Resource Quota Exceeded Error

Proactive alerting ensures your team is notified before Pods start failing due to quota exhaustion. Below are three example alert rules you can set up in CubeAPM using PromQL or integrated OpenTelemetry alerting.

1. Alert — Namespace Resource Usage Above 90%

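This alert triggers when CPU or memory quota consumption in a namespace approaches 90% of the defined hard limit. Because quotas count requested and limited amounts rather than live usage, it can fire even when actual utilization is low. It helps teams take preventive action before new Pod deployments are blocked.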

```yaml
alert: NamespaceResourceUsageHigh
expr: |
  kube_resourcequota{type="used", resource=~"limits.cpu|limits.memory"}
    / ignoring(type)
  (kube_resourcequota{type="hard", resource=~"limits.cpu|limits.memory"} > 0)
  > 0.9
for: 2m
labels:
  severity: warning
annotations:
  summary: "Namespace resource usage exceeds 90% of quota"
  description: "Namespace {{ $labels.namespace }} is using over 90% of its {{ $labels.resource }} quota. Consider increasing the limit or scaling down non-critical workloads."
```

2. Alert — Pod Creation Blocked by Resource Quota

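This alert detects events where new Pods cannot be created because a namespace has exceeded its ResourceQuota. It assumes that Kubernetes events reach CubeAPM as a metric. kube_event_reason is not a standard kube-state-metrics series, so adapt the metric name to however your pipeline exposes event counts. Also note that “FailedCreate” covers other failures too, so where possible, filter on event messages that contain “exceeded quota”.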
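Because “FailedCreate” is also emitted for problems unrelated to quotas, such as admission webhook or Pod Security rejections, it helps to check the event message. This Python sketch filters the JSON output of kubectl get events -o json for the namespace, keeping only quota-related creation failures. Matching on the “exceeded quota” text is a heuristic based on the wording of Kubernetes quota errors. The script name in the usage comment is hypothetical.

```python
import json
import sys


def quota_blocked_workloads(event_list: dict) -> list[tuple[str, str, str]]:
    """Return (kind, name, message) for FailedCreate events caused by a quota."""
    hits = []
    for event in event_list.get("items", []):
        message = event.get("message", "")
        if event.get("reason") == "FailedCreate" and "exceeded quota" in message:
            obj = event.get("involvedObject", {})
            hits.append((obj.get("kind", ""), obj.get("name", ""), message))
    return hits


if __name__ == "__main__" and len(sys.argv) > 1:
    # Usage: kubectl get events -n <namespace> -o json > events.json
    #        python3 quota_events.py events.json
    with open(sys.argv[1]) as f:
        for kind, name, message in quota_blocked_workloads(json.load(f)):
            print(f"{kind}/{name}: {message}")
```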

```yaml
alert: PodCreationBlockedByQuota
expr: count_over_time(kube_event_reason{reason="FailedCreate"}[5m]) > 0
for: 1m
labels:
  severity: critical
annotations:
  summary: "Pod creation blocked due to ResourceQuota limit"
  description: "Pod creation failed in namespace {{ $labels.namespace }} because the ResourceQuota limit was exceeded. Review resource usage and adjust quotas."
```

3. Alert — Persistent Storage Quota Near Exhaustion

This alert triggers when PersistentVolumeClaims (PVCs) are close to exhausting the allocated storage quota. It gives you time to act before new PVCs or volume expansions are rejected, which would prevent StatefulSets and databases from scaling out or growing their storage.

```yaml
alert: NamespaceStorageUsageHigh
expr: |
  kube_resourcequota{type="used", resource="requests.storage"}
    / ignoring(type)
  (kube_resourcequota{type="hard", resource="requests.storage"} > 0)
  > 0.85
for: 3m
labels:
  severity: warning
annotations:
  summary: "Persistent storage usage exceeds 85% of quota"
  description: "Namespace {{ $labels.namespace }} is using more than 85% of its storage quota. Consider cleaning up unused PVCs or raising the storage quota."
```

Conclusion

The Kubernetes Pod Resource Quota Exceeded error often signals that workloads are consuming more CPU, memory, or storage than allowed by namespace limits. If ignored, it can block new deployments, delay automation pipelines, and affect service uptime — especially in shared or production clusters.

CubeAPM helps teams stay ahead of such quota issues with unified visibility across Events, Metrics, Logs, and Rollouts. Its OpenTelemetry-native monitoring detects quota saturation in real time, correlates affected workloads, and visualizes resource usage trends to help teams adjust limits proactively.

Take control of your Kubernetes performance before quotas slow you down. Start using CubeAPM today to monitor resource utilization, prevent quota bottlenecks, and keep deployments running smoothly.

FAQs

1. What does “Pod Resource Quota Exceeded” mean in Kubernetes?

The “Pod Resource Quota Exceeded” error occurs when a workload tries to request more CPU, memory, or storage than the namespace limit allows. CubeAPM helps detect these violations instantly by correlating events and usage metrics in real time.

2. How can I check if a namespace has exceeded its resource quota?

Run kubectl describe resourcequota in the namespace and compare “Used” with “Hard”, then look for “FailedCreate” events that mention “exceeded quota”. CubeAPM provides a live view of quota consumption across namespaces, highlighting those nearing or breaching limits.

3. Why does this error affect CI/CD pipelines?

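CI/CD pipelines typically apply manifests and then wait for the rollout to finish. When a quota blocks Pod creation, the new ReplicaSet never reaches its desired replica count. The Deployment eventually exceeds its progress deadline, and steps such as kubectl rollout status fail or time out, stalling the pipeline. CubeAPM's rollout context links these stalled rollouts to the quota events behind them.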

4. How does CubeAPM help detect Resource Quota Exceeded errors faster?

CubeAPM continuously monitors CPU, memory, and storage usage against namespace quotas. It aggregates Events, Metrics, and Logs to identify which workloads are violating quotas and automatically triggers alerts before downtime occurs.

5. Can I monitor namespace quotas and usage trends with CubeAPM?

Yes. CubeAPM tracks quota usage trends over time, displaying real-time dashboards for CPU, memory, and storage. This helps DevOps teams forecast capacity, optimize resources, and maintain stable Kubernetes operations.