A Kubernetes Node NotReady error means a cluster node stops reporting as healthy to the control plane. It’s often triggered by kubelet failures, resource pressure, or network issues, and can quickly cascade—Pods get evicted, Deployments stall, and services degrade. The CNCF Annual Survey 2023 shows that over 84% of organizations cite node instability as a top cause of downtime.

CubeAPM pinpoints Node NotReady incidents by correlating Events, Metrics, Logs, and Rollouts into a single view. It highlights whether the root cause is kubelet failure, resource saturation, or network loss, helping teams restore cluster health before workloads are impacted.

In this guide, we’ll cover what the Node NotReady error means, why it happens, how to fix it, and how to monitor it effectively with CubeAPM.

What is Kubernetes Node NotReady Error

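A Node NotReady error occurs when the control plane no longer considers a node healthy: the node's Ready condition is False, or Unknown because the kubelet has stopped sending heartbeats. The scheduler then stops placing new Pods there. Kubernetes also taints the node (node.kubernetes.io/not-ready or node.kubernetes.io/unreachable), and Pods already running on it are evicted once their toleration for that taint expires (300 seconds by default). Their replacements may stay Pending if no other node has capacity.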

This condition is not just a warning—it’s Kubernetes signaling that the node is unavailable for scheduling and may already be impacting running workloads. Typical side effects include:

- Evicted Pods failing to restart on other nodes due to resource constraints

- Services degrading as fewer Pods remain available

- Deployment rollouts stalling because the desired replica count cannot be met

In short, Node NotReady reflects a critical cluster health issue, and detecting it quickly is essential for maintaining availability.
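As a minimal sketch of how that status is derived, the snippet below reads the Ready condition from a Node object. It assumes the JSON shape returned by `kubectl get nodes -o json`; the function name `ready_state` and the sample node are hypothetical. Note that kubectl displays both False and Unknown as NotReady.

```python
from typing import Any, Dict


def ready_state(node: Dict[str, Any]) -> str:
    """Return 'Ready', 'NotReady', or 'Unknown' based on the node's Ready condition."""
    for cond in node.get("status", {}).get("conditions", []):
        if cond.get("type") == "Ready":
            status = cond.get("status")
            if status == "True":
                return "Ready"
            if status == "False":
                return "NotReady"
            return "Unknown"  # the control plane has lost contact with the kubelet
    return "Unknown"  # no Ready condition reported at all


# Hypothetical sample, shaped like one item from `kubectl get nodes -o json`.
sample_node = {
    "metadata": {"name": "worker-1"},
    "status": {
        "conditions": [
            {"type": "MemoryPressure", "status": "False"},
            {"type": "Ready", "status": "Unknown", "reason": "NodeStatusUnknown"},
        ]
    },
}

print(sample_node["metadata"]["name"], ready_state(sample_node))
```

Keeping False and Unknown distinct is useful in triage: False usually means the kubelet is alive but reporting a problem, while Unknown points at a dead kubelet or a network partition.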

Why Kubernetes Node NotReady Error Happens

1. Kubelet Failure

The kubelet is responsible for reporting node health to the control plane. If it crashes, hangs, or is misconfigured, its heartbeats stop, and once they have been missing for the node monitor grace period, the node controller marks the node NotReady. Kubelet logs often show errors like failed to get node info.
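To illustrate the heartbeat check, here is a small sketch that measures how stale a node's Lease is. It assumes the timestamp comes from `spec.renewTime` of the node's Lease in the kube-node-lease namespace, and it uses 40 seconds as an assumed grace period; the real threshold is whatever `--node-monitor-grace-period` is set to on your controller manager. The function names are hypothetical.

```python
from datetime import datetime, timedelta, timezone


def heartbeat_age(renew_time: str, now: datetime) -> timedelta:
    """Age of the last lease renewal, given an RFC 3339 UTC timestamp ending in 'Z'."""
    renewed = datetime.fromisoformat(renew_time.replace("Z", "+00:00"))
    return now - renewed


def is_stale(renew_time: str, now: datetime,
             grace: timedelta = timedelta(seconds=40)) -> bool:
    """True when the kubelet has not renewed its lease within the grace period.

    The 40-second default is an assumption for illustration only.
    """
    return heartbeat_age(renew_time, now) > grace


now = datetime(2024, 1, 1, 12, 1, 0, tzinfo=timezone.utc)
print(is_stale("2024-01-01T12:00:00.000000Z", now))  # renewed 60 seconds ago
print(is_stale("2024-01-01T12:00:50.000000Z", now))  # renewed 10 seconds ago
```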

2. Network Partition

If the node loses connectivity to the control plane, heartbeats stop and Kubernetes assumes the node is unavailable. This can be caused by CNI plugin misconfigurations, DNS failures, or cloud networking glitches.

3. Resource Starvation

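Nodes under heavy CPU, memory, or disk pressure can stop responding. Pressure conditions such as DiskPressure (surfaced as a NodeHasDiskPressure event) or MemoryPressure are reported separately from Ready: on their own they taint the node and trigger Pod evictions. If the kubelet or container runtime is starved badly enough to miss heartbeats, the node status flips to NotReady until resources are reclaimed.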
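A short sketch of how the pressure conditions can be read from a Node object, again assuming the `kubectl get node <node-name> -o json` shape. The helper name and sample data are hypothetical.

```python
from typing import Any, Dict, List

# Pressure conditions the kubelet reports alongside Ready.
PRESSURE_TYPES = ("MemoryPressure", "DiskPressure", "PIDPressure")


def active_pressure(node: Dict[str, Any]) -> List[str]:
    """List the pressure conditions currently reported as True on a node."""
    conditions = node.get("status", {}).get("conditions", [])
    return [
        c["type"]
        for c in conditions
        if c.get("type") in PRESSURE_TYPES and c.get("status") == "True"
    ]


# Hypothetical node that is still Ready but running out of disk.
node_under_pressure = {
    "status": {
        "conditions": [
            {"type": "MemoryPressure", "status": "False"},
            {"type": "DiskPressure", "status": "True"},
            {"type": "PIDPressure", "status": "False"},
            {"type": "Ready", "status": "True"},
        ]
    }
}

print(active_pressure(node_under_pressure))
```

A node in this state is an early-warning case: it is still Ready, so acting on the pressure condition can prevent the NotReady transition.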

4. Node Certificate or Time Drift Issues

Expired TLS certificates or clock skew can prevent kubelet from authenticating to the API server. In this case, the node itself may be healthy, but the cluster rejects its status updates.
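One rough way to spot clock skew is to compare the node's clock with the Date header of any HTTP response from the API server. The sketch below only does the arithmetic; it assumes you have already captured that header (for example from verbose curl output), and the function name is hypothetical.

```python
from datetime import datetime, timezone
from email.utils import parsedate_to_datetime


def clock_skew_seconds(date_header: str, local_now: datetime) -> float:
    """Seconds by which the local clock is ahead of the server's Date header.

    A negative result means the local clock is behind.
    """
    server_time = parsedate_to_datetime(date_header)
    return (local_now - server_time).total_seconds()


# Hypothetical values: the node's clock is 7 seconds ahead of the server.
header = "Mon, 01 Jan 2024 12:00:00 GMT"
local = datetime(2024, 1, 1, 12, 0, 7, tzinfo=timezone.utc)
print(clock_skew_seconds(header, local))
```

The Date header has one-second resolution, so this is only a coarse indicator. It is enough to catch skew of minutes or hours, which is what tends to break certificate validation.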

5. Infrastructure or Cloud Failures

On cloud-managed Kubernetes (EKS, GKE, AKS), VM termination, hypervisor issues, or underlying host maintenance can cause nodes to suddenly drop into NotReady until they are replaced or rejoined.

How to Fix Kubernetes Node NotReady Error

To resolve a Kubernetes Node NotReady error, you need to validate common failure points like kubelet health, node connectivity, and resource pressure.

1. Restart the Kubelet Service

The most common cause of Kubernetes Node NotReady error is kubelet becoming unresponsive. A quick restart usually restores the heartbeat.

Check:

systemctl status kubelet

Fix:

sudo systemctl restart kubelet

2. Verify Node Connectivity

If the node can’t reach the API server, Kubernetes marks it NotReady. Network loss is often the culprit.

Check:

ping <apiserver-ip>

Fix:

kubectl get nodes -o wide

(Validate the node’s network routes or CNI configuration.)
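ICMP is sometimes blocked even when the API server is reachable, so a TCP connection test against the API server port can be a more direct check. The sketch below is one way to do that from the node. Port 6443 is an assumption (a common default); use whatever port your API server listens on. The function name is hypothetical.

```python
import socket


def can_connect(host: str, port: int = 6443, timeout: float = 3.0) -> bool:
    """Return True if a TCP connection to host:port succeeds within the timeout."""
    try:
        with socket.create_connection((host, port), timeout=timeout):
            return True
    except OSError:
        # Covers refused connections, timeouts, and unreachable networks.
        return False


if __name__ == "__main__":
    # Replace the placeholder address with your API server's IP.
    print(can_connect("127.0.0.1", 6443, timeout=1.0))
```

A successful connection only proves the network path is open. Authentication and certificate problems would still need to be checked in the kubelet logs.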

3. Inspect Node Resource Pressure

Memory, disk, or PID pressure taints a node, and severe exhaustion (including CPU starvation of the kubelet) can push it to NotReady.

Check:

kubectl describe node <node-name>

Fix:

Free disk space, kill runaway processes, or increase instance size to relieve pressure.

4. Renew Node Certificates

If kubelet’s certificates expire, it can’t authenticate to the API server.

Check:

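journalctl -u kubelet | grep certificate

Fix:

sudo kubeadm certs renew all

(On kubeadm clusters this renews the control-plane certificates. The kubelet's own client certificate normally rotates automatically, so if it has expired, check that certificate rotation is enabled on the node.)

5. Drain and Rejoin the Node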

For recurring NotReady issues, drain workloads and rejoin the node cleanly.
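Before draining, it can help to know which Pods will actually be evicted. The sketch below splits the Pods on a node into those a drain would evict and those owned by a DaemonSet (which `--ignore-daemonsets` leaves in place). It assumes the JSON shape of `kubectl get pods --all-namespaces -o json`; the function name and sample data are hypothetical.

```python
from typing import Any, Dict, List, Tuple


def pods_on_node(pod_list: Dict[str, Any], node_name: str) -> Tuple[List[str], List[str]]:
    """Return (evictable, daemonset_owned) pod names as 'namespace/name' for one node."""
    evictable: List[str] = []
    daemonset_owned: List[str] = []
    for pod in pod_list.get("items", []):
        if pod.get("spec", {}).get("nodeName") != node_name:
            continue
        meta = pod.get("metadata", {})
        full_name = f'{meta.get("namespace")}/{meta.get("name")}'
        owners = meta.get("ownerReferences", [])
        if any(owner.get("kind") == "DaemonSet" for owner in owners):
            daemonset_owned.append(full_name)
        else:
            evictable.append(full_name)
    return evictable, daemonset_owned


# Hypothetical pod list with two pods on worker-1 and one elsewhere.
sample_pods = {
    "items": [
        {"metadata": {"namespace": "default", "name": "web-1",
                      "ownerReferences": [{"kind": "ReplicaSet"}]},
         "spec": {"nodeName": "worker-1"}},
        {"metadata": {"namespace": "kube-system", "name": "kube-proxy-x",
                      "ownerReferences": [{"kind": "DaemonSet"}]},
         "spec": {"nodeName": "worker-1"}},
        {"metadata": {"namespace": "default", "name": "web-2",
                      "ownerReferences": [{"kind": "ReplicaSet"}]},
         "spec": {"nodeName": "worker-2"}},
    ]
}

print(pods_on_node(sample_pods, "worker-1"))
```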

Check:

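kubectl get pods -o wide --all-namespaces | grep <node-name>

Fix:

kubectl drain <node-name> --ignore-daemonsets --delete-emptydir-data && kubectl delete node <node-name>

Then rejoin the node using your cluster's bootstrap procedure (on kubeadm clusters, run kubeadm reset followed by kubeadm join on the node).

6. Replace the Node (Cloud Environments)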

In cloud-managed clusters, it’s sometimes faster to replace a faulty VM than repair it.

Check:

kubectl get nodes

Fix:

Delete the node and let the autoscaler or node pool recreate it:

kubectl delete node <node-name>

Monitoring Kubernetes Node NotReady Error with CubeAPM

CubeAPM provides the fastest path to diagnosing a Kubernetes Node NotReady error by correlating Events, Metrics, Logs, and Rollouts in one place. For Node NotReady, node-level metrics and kubelet logs confirm the cause, while rollout context shows how workloads are impacted. CubeAPM’s setup uses the OpenTelemetry Collector as both a DaemonSet (node scraping/logs) and a Deployment (central pipelines).

Step 1 — Install CubeAPM (Helm)

Use the official chart and manage config with values.yaml.

Install:

helm repo add cubeapm https://charts.cubeapm.com && helm repo update cubeapm && helm show values cubeapm/cubeapm > values.yaml && helm install cubeapm cubeapm/cubeapm -f values.yaml

Upgrade:

helm upgrade cubeapm cubeapm/cubeapm -f values.yaml

Step 2 — Deploy the OpenTelemetry Collector (DaemonSet + Deployment)

Run both modes:

- DaemonSet = per-node kubelet/host metrics + logs.

- Deployment = centralized processors/exporters.

Add repo:

helm repo add open-telemetry https://open-telemetry.github.io/opentelemetry-helm-charts && helm repo update open-telemetry

Install DaemonSet:

helm install otel-ds open-telemetry/opentelemetry-collector -f otel-collector-daemonset.yaml

Install Deployment:

helm install otel-deploy open-telemetry/opentelemetry-collector -f otel-collector-deployment.yaml

Step 3 — Collector Configs Focused on Node NotReady

- A) DaemonSet config (otel-collector-daemonset.yaml):

mode: daemonset
image:
  repository: otel/opentelemetry-collector-contrib
presets:
  kubernetesAttributes:
    enabled: true
  hostMetrics:
    enabled: true
  kubeletMetrics:
    enabled: true
  logsCollection:
    enabled: true
    storeCheckpoints: true
config:
  processors:
    batch: {}
    resource/node.name:
      attributes:
        - key: k8s.node.name
          value: "${env:K8S_NODE_NAME}"
          action: upsert
  exporters:
    otlphttp/metrics:
      metrics_endpoint: http://<cubeapm_endpoint>:3130/api/metrics/v1/save/otlp
    otlphttp/logs:
      logs_endpoint: http://<cubeapm_endpoint>:3130/api/logs/insert/opentelemetry/v1/logs
      headers:
        Cube-Stream-Fields: k8s.namespace.name,k8s.node.name

- hostMetrics/kubeletMetrics surface node conditions like memory/disk pressure.

- logsCollection ingests kubelet/system logs to pinpoint cert or network issues.

- exporters push data directly into CubeAPM for correlation, once they are listed in the collector's service.pipelines.

- B) Deployment config (otel-collector-deployment.yaml):

mode: deployment
image:
  repository: otel/opentelemetry-collector-contrib
replicaCount: 2
config:
  receivers:
    otlp:
      protocols:
        http:
        grpc:
  processors:
    batch: {}
  exporters:
    otlphttp/metrics:
      metrics_endpoint: http://<cubeapm_endpoint>:3130/api/metrics/v1/save/otlp
    otlphttp/logs:
      logs_endpoint: http://<cubeapm_endpoint>:3130/api/logs/insert/opentelemetry/v1/logs
    otlp/traces:
      endpoint: <cubeapm_endpoint>:4317
      tls:
        insecure: true

- otlp receiver gathers telemetry from DaemonSets and apps.

- batch smooths spikes during node outages.

- exporters unify metrics, logs, and traces in CubeAPM.

Step 4 — Supporting Components (Optional)

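Add-ons like kube-state-metrics enrich node condition data. The kube_node_status_condition and kube_pod_status_phase metrics used in the alert rules in this guide come from it.

helm repo add prometheus-community https://prometheus-community.github.io/helm-charts && helm install kube-state-metrics prometheus-community/kube-state-metrics

Step 5 — Verification (What You Should See in CubeAPM)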

- Events: Node transitions Ready=False with reasons (KubeletNotReady, NodeStatusUnknown).

- Metrics: CPU/memory/disk pressure spikes near the error.

- Logs: Kubelet/system logs showing timeouts, auth failures, or cert issues.

- Restarts: Evicted pods rescheduled onto healthy nodes.

- Rollout context: Deployments showing stalled or unavailable replicas.

Example Alert Rules for Kubernetes Node Not Ready Error

1) Node NotReady (Critical)

Fire when a node’s Ready condition stays false long enough to impact scheduling. This catches the primary symptom and gives you the exact node to investigate first.
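The "stays false long enough" part is what the rule's `for:` clause expresses: the condition must hold continuously across evaluations before the alert fires. As a simplified model of that behavior (not Prometheus's actual implementation), the hypothetical function below walks through timestamped evaluations and resets whenever the condition clears.

```python
from typing import Iterable, Optional, Tuple


def alert_fires(samples: Iterable[Tuple[int, bool]], hold_seconds: int) -> bool:
    """Return True if the condition stays true for at least hold_seconds.

    samples: (timestamp_seconds, condition_is_true) pairs, sorted by time.
    """
    pending_since: Optional[int] = None
    for ts, active in samples:
        if not active:
            pending_since = None  # condition cleared, so the timer starts over
            continue
        if pending_since is None:
            pending_since = ts
        if ts - pending_since >= hold_seconds:
            return True
    return False


# Node NotReady at every one-minute evaluation for five minutes: fires.
steady = [(t, True) for t in range(0, 301, 60)]
# Node recovers briefly at t=120: the timer resets and the alert does not fire.
flapping = [(0, True), (60, True), (120, False), (180, True), (240, True), (300, True)]

print(alert_fires(steady, 300), alert_fires(flapping, 300))
```

This is why a flapping node can evade a long `for:` window. A shorter window catches flaps at the cost of noisier alerts.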

groups:
  - name: node-notready.rules
    rules:
      - alert: NodeNotReady
        # status="true" series is 0 whenever the node is not Ready
        expr: kube_node_status_condition{condition="Ready",status="true"} == 0
        for: 5m
        labels:
          severity: critical
          category: k8s-node
        annotations:
          summary: "Node NotReady: {{ $labels.node }}"
          description: "Node {{ $labels.node }} has been NotReady for >5m. Likely kubelet crash, network loss, or resource pressure."

2) Kubelet Heartbeat Missing (Critical)

If the kubelet on a node stops responding to scrapes, the node will usually flip to NotReady soon after. This rule alerts on kubelet targets that are down so you can act before workloads are evicted from the node.

groups:
  - name: kubelet-heartbeat.rules
    rules:
      - alert: KubeletDown
        # up is 0 when the scrape of the kubelet target fails
        expr: up{job="kubelet"} == 0
        for: 2m
        labels:
          severity: critical
          category: k8s-node
        annotations:
          summary: "Kubelet down: {{ $labels.instance }}"
          description: "Kubelet target {{ $labels.instance }} is down for >2m. Expect Node NotReady and pod evictions if not restored."

3) Node Pressure Predictive (Warning)

Disk, memory, or PID pressure often precedes a NotReady flip. Alert early on pressure to prevent the node from dropping out.

groups:
  - name: node-pressure.rules
    rules:
      - alert: NodeDiskPressure
        expr: max by(node) (kube_node_status_condition{condition="DiskPressure",status="true"} == 1) == 1
        for: 5m
        labels:
          severity: warning
          category: k8s-node
        annotations:
          summary: "Disk pressure on node: {{ $labels.node }}"
          description: "Node {{ $labels.node }} reports DiskPressure for >5m. Free disk or scale up to avoid NotReady."
      - alert: NodeMemoryPressure
        expr: max by(node) (kube_node_status_condition{condition="MemoryPressure",status="true"} == 1) == 1
        for: 5m
        labels:
          severity: warning
          category: k8s-node
        annotations:
          summary: "Memory pressure on node: {{ $labels.node }}"
          description: "Node {{ $labels.node }} reports MemoryPressure for >5m. Reclaim memory or right-size nodes."

4) Pending Pods Spike After Node Drop (Warning)

A NotReady node usually causes pending pods and rollout stalls. This rule flags sudden increases in Pending pods so you can verify capacity and rescheduling.
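The quantity this alert is after is the change in the number of Pending pods over a window, that is, a difference between two counts rather than a counter rate. A minimal sketch of that arithmetic, using two hypothetical snapshots that map pod names to phases:

```python
from typing import Dict


def pending_count(phases: Dict[str, str]) -> int:
    """Number of pods whose phase is Pending in one snapshot."""
    return sum(1 for phase in phases.values() if phase == "Pending")


def pending_delta(before: Dict[str, str], after: Dict[str, str]) -> int:
    """Change in the Pending pod count between two snapshots."""
    return pending_count(after) - pending_count(before)


# Hypothetical snapshots taken five minutes apart, around a node failure.
before = {"web-1": "Running", "web-2": "Running", "job-1": "Pending"}
after = {"web-1": "Pending", "web-2": "Pending", "job-1": "Pending"}

print(pending_delta(before, after))
```

Comparing totals rather than individual pods means pods that were already Pending before the node dropped do not count towards the spike.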

groups:
  - name: pending-pods.rules
    rules:
      - alert: PendingPodsSpike
        # kube_pod_status_phase is a 0/1 gauge per pod, so compare summed counts instead of using increase()
        expr: sum(kube_pod_status_phase{phase="Pending"}) - sum(kube_pod_status_phase{phase="Pending"} offset 5m) > 20
        for: 1m
        labels:
          severity: warning
          category: k8s-scheduling
        annotations:
          summary: "Pending pods spike in cluster"
          description: "Pending pods increased by >20 in 5m. Correlate with NotReady nodes and check scheduler capacity/taints."

Conclusion

The Kubernetes Node NotReady error is one of the most disruptive signals in a cluster, capable of stalling deployments and degrading workloads across multiple services. Left undetected, it often cascades into service outages and degraded customer experience.

With CubeAPM, teams can immediately see the correlation between node events, kubelet logs, metrics, and rollout failures. This unified view helps pinpoint whether the root issue lies in kubelet, resource pressure, or infrastructure instability.

By combining precise diagnostics with affordable pricing and 800+ integrations, CubeAPM ensures that Node NotReady errors are no longer hidden risks but manageable signals in a reliable observability workflow.

FAQs

1. What does Node NotReady mean in Kubernetes?

It means a node has failed Kubernetes health checks and is no longer able to run or schedule Pods until the issue is resolved.

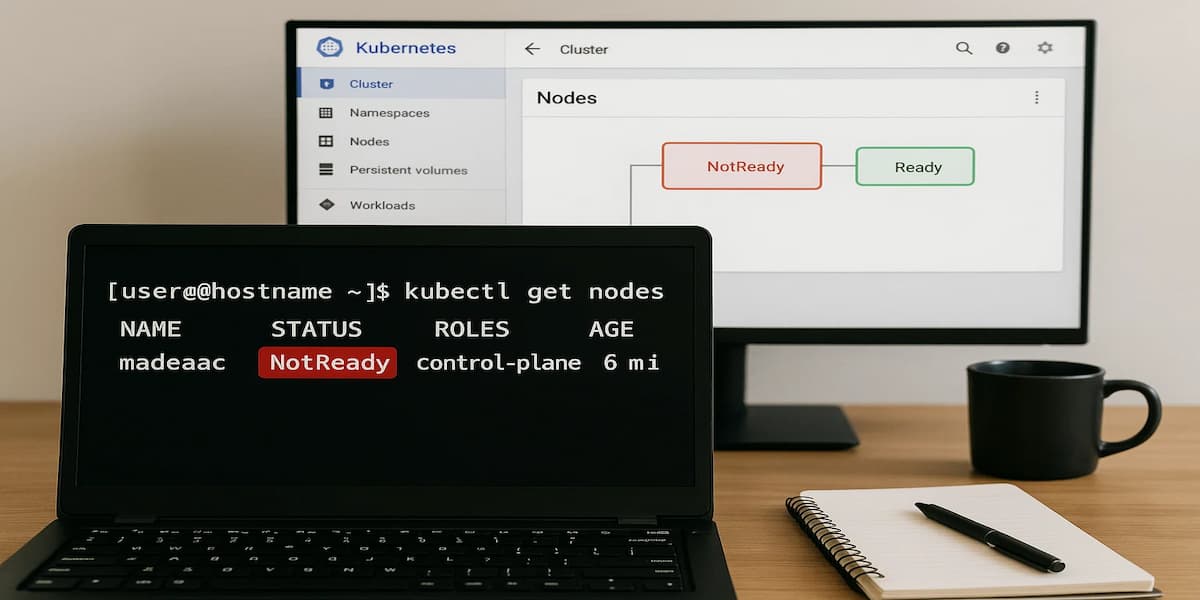

2. How do I quickly check which node is NotReady?

Run kubectl get nodes — any node with a NotReady status indicates a failure in kubelet, resources, or connectivity.

3. Can CubeAPM detect Node NotReady errors automatically?

Yes, CubeAPM ingests Events, Metrics, Logs, and Rollout context to flag NotReady transitions in real time and highlight the root cause.

4. What are common causes of Node NotReady?

Kubelet crashes, resource exhaustion, networking partitions, expired certificates, and cloud infrastructure failures are the most frequent triggers.

5. Why use CubeAPM for monitoring this error instead of basic metrics?

Basic metrics show symptoms, but CubeAPM correlates signals across the cluster — letting you see why the node went NotReady and how it affects deployments, all in one place.