Monitoring Helm Deployments ensures visibility into installs, upgrades, rollbacks, and failures across Kubernetes clusters. With GitOps and microservices adoption surging, observability is critical: according to the Komodor 2025 Enterprise Kubernetes Report, nearly 80% of production outages stem from system changes.

For many teams, monitoring Helm Deployments is painful. A helm upgrade may succeed, yet pods crash, hooks fail, or dependencies misalign. These hidden issues ripple through services, causing downtime and slowing incident resolution.

CubeAPM is the best solution for monitoring Helm deployments. It unifies metrics, logs, and error tracing, correlating them with Helm lifecycle events. With Smart Sampling, custom dashboards, and Helm-aware alerts, CubeAPM delivers cost-efficient, full-stack visibility.

In this article, we’ll explain what Helm Deployments are, why monitoring matters, key metrics, and how CubeAPM simplifies it.

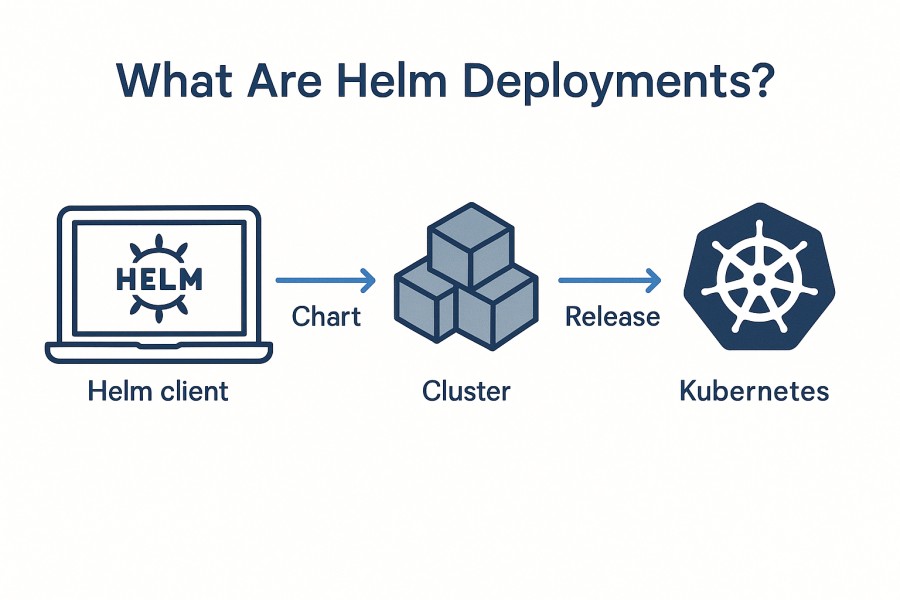

What Are Helm Deployments?

A Helm Deployment is the process of installing, upgrading, or rolling back applications on Kubernetes using Helm charts. Helm works like a package manager for Kubernetes, bundling manifests such as Deployments, Services, ConfigMaps, and Secrets into reusable templates. This allows engineers to deploy complex applications with a single command (helm install) instead of manually managing dozens of YAML files.

For businesses, Helm Deployments are game-changing because they:

- Accelerate delivery: Teams can roll out consistent applications across environments with less manual effort.

- Simplify upgrades: Versioned charts make it easy to roll forward or back with minimal disruption.

- Ensure consistency: Standardized templates reduce configuration drift between dev, staging, and production.

- Support scale: Multi-service apps (like microservices or data pipelines) can be deployed as a single Helm release.

Example: Using Helm to Deploy a Payment Service

A fintech team packages its microservices (API gateway, database, and fraud detection engine) into a single Helm chart. With one helm upgrade, they roll out a new version across clusters. If a config error slips in, helm rollback restores the last stable revision, keeping thousands of daily transactions flowing.
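To make the upgrade and rollback in this example concrete, here is a minimal Python sketch that builds both Helm commands as argument lists. It assumes Helm 3 CLI flags; the release `payments`, chart path `./charts/payments`, and file `values-prod.yaml` are hypothetical. Keeping command construction separate from execution makes the deploy step easy to log and test.

```python
import shlex

def helm_upgrade_cmd(release, chart, namespace, values_file, timeout="5m"):
    """Argv for an upgrade that installs the release if missing and rolls back on failure."""
    return [
        "helm", "upgrade", release, chart,
        "--install",               # create the release on first deploy
        "--namespace", namespace,
        "-f", values_file,         # environment-specific values
        "--atomic",                # Helm 3: roll back on failure (implies --wait)
        "--timeout", timeout,      # how long to wait for resources to become ready
    ]

def helm_rollback_cmd(release, revision, namespace):
    """Argv for restoring an earlier revision: helm rollback RELEASE REVISION."""
    return ["helm", "rollback", release, str(revision), "--namespace", namespace]

if __name__ == "__main__":
    # Print the commands; pass the lists to subprocess.run(..., check=True) to execute them.
    print(shlex.join(helm_upgrade_cmd("payments", "./charts/payments", "prod", "values-prod.yaml")))
    print(shlex.join(helm_rollback_cmd("payments", 41, "prod")))
```

With `--atomic`, Helm rolls back on its own when the upgrade fails; the explicit `helm rollback` form is what you would run to return to a known-good revision later.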

Why Monitoring Helm Deployments Matters

Visibility into Hook Failures and Rollbacks

Helm hooks like pre-install and post-upgrade can make or break a release. If a hook Job fails, the entire deployment may fail, leaving little visibility beyond a generic “release failed” message. Monitoring Helm Deployments at the hook level exposes which Job, script, or policy gate broke, allowing faster recovery and targeted rollbacks.
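A hook Job's failure is recorded on the Job object itself. The sketch below, a hedged example rather than a CubeAPM feature, scans the JSON from `kubectl get jobs -o json` for Jobs carrying the `helm.sh/hook` annotation whose `Failed` condition is `True`. The Job names are made up, and hooks removed by their `helm.sh/hook-delete-policy` will no longer appear once deleted.

```python
import json

def failed_hook_jobs(job_list):
    """Return (job, hook phases, reason) for Helm hook Jobs whose Failed condition is True."""
    failures = []
    for job in job_list.get("items", []):
        meta = job.get("metadata", {})
        hook = (meta.get("annotations") or {}).get("helm.sh/hook")
        if not hook:
            continue  # not created as a Helm hook
        for cond in job.get("status", {}).get("conditions") or []:
            if cond.get("type") == "Failed" and cond.get("status") == "True":
                failures.append((meta.get("name"), hook, cond.get("reason", "")))
    return failures

# Shape of `kubectl get jobs -n prod -o json` output, trimmed to the fields used.
SAMPLE = json.loads("""
{"items": [
  {"metadata": {"name": "payments-db-migrate",
                "annotations": {"helm.sh/hook": "pre-upgrade"}},
   "status": {"failed": 1, "conditions": [
     {"type": "Failed", "status": "True", "reason": "BackoffLimitExceeded"}]}},
  {"metadata": {"name": "nightly-report"}, "status": {"succeeded": 1}}
]}
""")

if __name__ == "__main__":
    for name, hook, reason in failed_hook_jobs(SAMPLE):
        print(f"{name} ({hook}) failed: {reason}")
```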

Change-Related Incidents and Business Impact

Change remains the number one cause of production outages in Kubernetes environments. The Komodor 2025 Enterprise Kubernetes Report found that nearly 80% of production outages are caused by system changes—including Helm upgrades and rollouts. Monitoring ensures you can link each Helm release directly to its runtime effects on pods, jobs, and end-user experience.

Detecting Chart Drift and Outdated Dependencies

Helm deployments often involve multiple charts and sub-charts. Over time, teams may update the application but not the underlying chart version, leading to missed security patches or dependency mismatches. The 2024 Kubernetes Benchmark Report found that 70% of organizations run at least 11% of their workloads on outdated Helm charts, which shows how common this risk is. Monitoring chart versions and dependency freshness helps prevent vulnerable or broken rollouts.
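A rough way to spot chart drift is to compare the CHART column from `helm list -A -o json` against the newest version you have approved for each chart. In this sketch the reference versions are hypothetical (in practice they might come from your chart repository index), versions are assumed to be plain MAJOR.MINOR.PATCH, and pre-release suffixes are ignored.

```python
import re

# "<chart name>-<MAJOR.MINOR.PATCH>[-prerelease|+build]" as shown in the chart field of `helm list`.
CHART_RE = re.compile(r"^(?P<name>.+)-(?P<version>\d+\.\d+\.\d+)(?:[-+].*)?$")

def version_tuple(version):
    return tuple(int(part) for part in version.split("."))

def outdated_releases(releases, latest):
    """Return (namespace, release, chart, deployed, latest) where deployed < latest."""
    outdated = []
    for rel in releases:
        m = CHART_RE.match(rel.get("chart", ""))
        if not m or m["name"] not in latest:
            continue  # unparseable chart string or no reference version
        if version_tuple(m["version"]) < version_tuple(latest[m["name"]]):
            outdated.append((rel["namespace"], rel["name"], m["name"], m["version"], latest[m["name"]]))
    return outdated

releases = [  # trimmed `helm list -A -o json` entries
    {"name": "cache", "namespace": "prod", "chart": "redis-17.3.2"},
    {"name": "edge", "namespace": "prod", "chart": "ingress-nginx-4.10.0"},
]
latest = {"redis": "18.1.0", "ingress-nginx": "4.10.0"}  # hypothetical approved versions

if __name__ == "__main__":
    for row in outdated_releases(releases, latest):
        print("outdated:", row)
```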

Avoiding Silent Failures and Rollout Loops

A helm upgrade may report success while pods quietly fail readiness probes or services remain unavailable. Without Helm-aware monitoring, teams only catch these issues after user complaints. Observability that correlates Helm release events with Kubernetes logs and metrics helps detect rollout loops, partial upgrades, and hidden errors before they escalate into customer-facing downtime.
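Catching a “successful” upgrade that never became healthy means checking the workload, not just the release status (without `--wait` or `--atomic`, Helm 3 marks a release deployed once its manifests are accepted). This sketch roughly follows the checks `kubectl rollout status` applies to a Deployment, using the object's `metadata`, `spec`, and `status` fields; the `payments-api` sample is hypothetical.

```python
def rollout_state(dep):
    """Rough Deployment rollout check; returns "complete", "pending: ...", or "failed: ..."."""
    meta, spec, status = dep.get("metadata", {}), dep.get("spec", {}), dep.get("status", {})
    desired = spec.get("replicas", 1)  # Kubernetes defaults spec.replicas to 1
    for cond in status.get("conditions") or []:
        if cond.get("type") == "Progressing" and cond.get("reason") == "ProgressDeadlineExceeded":
            return "failed: progress deadline exceeded"
    if status.get("observedGeneration", 0) < meta.get("generation", 0):
        return "pending: controller has not observed the new spec"
    updated = status.get("updatedReplicas", 0)
    if updated < desired:
        return f"pending: {updated}/{desired} replicas updated"
    if status.get("replicas", 0) > updated:
        return "pending: old replicas still terminating"
    available = status.get("availableReplicas", 0)
    if available < updated:
        return f"pending: {available}/{updated} updated replicas available"
    return "complete"

# Helm reported the upgrade as deployed, but only one of three new pods is available.
dep = {
    "metadata": {"name": "payments-api", "generation": 7},
    "spec": {"replicas": 3},
    "status": {"observedGeneration": 7, "replicas": 3, "updatedReplicas": 3,
               "readyReplicas": 1, "availableReplicas": 1},
}

if __name__ == "__main__":
    print(rollout_state(dep))
```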

Key Metrics & Events to Track in Helm Deployments

Release Lifecycle Events

Monitoring the lifecycle of Helm releases ensures you know exactly what happened during installs, upgrades, or rollbacks, and how those actions impacted your cluster.

- Install/Upgrade Success Rate: Track whether Helm installs and upgrades complete successfully. Failed upgrades often point to misconfigured values.yaml files or dependency charts. A healthy environment should maintain a success rate above 95%.

- Rollback Frequency: Frequent rollbacks suggest instability in charts or broken pipelines. By monitoring rollback counts, you can identify services that require better pre-deployment testing. Ideally, rollbacks should remain <5% of all Helm upgrades.

- Release Duration: Measure how long Helm deployments take, including hook execution. Longer durations may indicate performance bottlenecks in jobs or readiness probes. Keep release duration under 2–3 minutes for critical apps.
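Success and rollback rates can be approximated from `helm history RELEASE -o json`. The sketch below assumes Helm 3 marks failed revisions with status `failed` and describes rollback revisions as `Rollback to N`; the sample history is invented. Helm keeps only recent revisions (`helm upgrade --history-max`, default 10), so treat these as rates over recent activity. Release duration is not in the history and has to come from your pipeline's timestamps.

```python
def release_stats(history):
    """Success and rollback rates from `helm history RELEASE -o json` entries."""
    total = len(history)
    if total == 0:
        return {"success_rate": 1.0, "rollback_rate": 0.0}
    failed = sum(1 for h in history if h.get("status") == "failed")
    # Helm 3 records a rollback as a new revision described as "Rollback to N".
    rollbacks = sum(1 for h in history if h.get("description", "").startswith("Rollback to"))
    changes = max(total - 1, 1)  # upgrades and rollbacks after the first revision
    return {"success_rate": (total - failed) / total, "rollback_rate": rollbacks / changes}

def within_targets(stats, min_success=0.95, max_rollback=0.05):
    """Targets from the list above: >95% success, <5% rollbacks."""
    return stats["success_rate"] > min_success and stats["rollback_rate"] < max_rollback

history = [
    {"revision": 1, "status": "superseded", "description": "Install complete"},
    {"revision": 2, "status": "superseded", "description": "Upgrade complete"},
    {"revision": 3, "status": "failed", "description": "Upgrade \"payments\" failed: timed out waiting for the condition"},
    {"revision": 4, "status": "deployed", "description": "Rollback to 2"},
]

if __name__ == "__main__":
    stats = release_stats(history)
    print(stats, "OK" if within_targets(stats) else "below target")
```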

Kubernetes Resource Health

Helm deployments ultimately manage Kubernetes resources. Tracking resource states ensures pods, jobs, and services behave as expected after a release.

- Pod Readiness/Availability: Monitor the percentage of pods that transition to Ready after a Helm upgrade. Slow readiness often signals probe misconfigurations or insufficient resources. Aim for >99% pod readiness within 30–60s.

- CrashLoopBackOff/Failed Pods: Count pods stuck in CrashLoopBackOff after deployment. This often results from bad images, memory limits, or failed init containers. Threshold: 0 CrashLoopBackOff pods post-deployment.

- Job Completion Rate: Many Helm charts rely on jobs for migrations or setup tasks. Failed jobs block successful rollouts. Ensure >98% of Helm jobs complete successfully.

- Service/Endpoint Matching: Monitor whether deployed Services map correctly to running pods. A Service without Endpoints means traffic won’t flow. Threshold: 0 unmatched Services per release.
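The readiness, CrashLoopBackOff, and Service/Endpoint checks above can be sketched over `kubectl get pods -o json` and `kubectl get endpoints -o json` output, filtered to the release's pods (for example with a label selector). The sample objects are hypothetical and trimmed to the fields the functions read; the endpoint check can be adapted to EndpointSlices in the same way.

```python
def pod_health(pods):
    """Return (percent of pods Ready, number of pods with a CrashLoopBackOff container)."""
    items = pods.get("items", [])
    ready = crashlooping = 0
    for pod in items:
        status = pod.get("status", {})
        if any(c.get("type") == "Ready" and c.get("status") == "True"
               for c in status.get("conditions") or []):
            ready += 1
        containers = (status.get("initContainerStatuses") or []) + (status.get("containerStatuses") or [])
        if any(((cs.get("state") or {}).get("waiting") or {}).get("reason") == "CrashLoopBackOff"
               for cs in containers):
            crashlooping += 1
    pct = 100.0 * ready / len(items) if items else 100.0
    return pct, crashlooping

def services_without_endpoints(endpoints):
    """Names of Endpoints objects with no ready addresses, i.e. Services that receive no traffic."""
    return [ep["metadata"]["name"] for ep in endpoints.get("items", [])
            if not any(subset.get("addresses") for subset in ep.get("subsets") or [])]

pods = {"items": [
    {"metadata": {"name": "payments-api-7d9f-abc"},
     "status": {"conditions": [{"type": "Ready", "status": "True"}],
                "containerStatuses": [{"name": "api", "state": {"running": {}}}]}},
    {"metadata": {"name": "payments-api-7d9f-def"},
     "status": {"conditions": [{"type": "Ready", "status": "False"}],
                "containerStatuses": [{"name": "api", "state": {"waiting": {"reason": "CrashLoopBackOff"}}}]}},
]}
endpoints = {"items": [
    {"metadata": {"name": "payments-api"}, "subsets": [{"addresses": [{"ip": "10.0.1.12"}]}]},
    {"metadata": {"name": "payments-worker"}, "subsets": []},
]}

if __name__ == "__main__":
    print(pod_health(pods), services_without_endpoints(endpoints))
```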

Chart and Dependency Health

Charts often include sub-charts like databases, ingress controllers, or caches. Monitoring their state avoids cascading failures from missing dependencies.

- Dependency Chart Readiness: Verify that sub-charts (e.g., Redis, NGINX ingress) initialize before the main app starts. Failing dependencies cause pods to stall. Success rate should remain >95% across releases.

- Chart Version Drift: Track chart versions across environments. Using outdated charts leaves workloads vulnerable. Organizations should aim to keep all charts updated within 30 days of a new stable release.

- Configuration Drift: Monitor mismatches between values.yaml files across dev, staging, and production. Drift introduces bugs that only appear in higher environments. Threshold: 0 unapproved config drifts per release.
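Configuration drift is easiest to see as a flat diff of the values each environment actually received, for example from `helm get values RELEASE -o json` run against staging and production. The sketch below flattens nested values into dotted paths and reports every difference not on an approved list; the keys, values, and allowlist are hypothetical.

```python
MISSING = "<missing>"

def flatten(values, prefix=""):
    """Turn nested values into {"a.b.c": leaf} pairs."""
    flat = {}
    for key, value in values.items():
        path = f"{prefix}.{key}" if prefix else str(key)
        if isinstance(value, dict) and value:
            flat.update(flatten(value, path))
        else:
            flat[path] = value
    return flat

def config_drift(env_a, env_b, approved=frozenset()):
    """Return {path: (value_a, value_b)} for differences not on the approved list."""
    a, b = flatten(env_a), flatten(env_b)
    drift = {}
    for path in sorted(a.keys() | b.keys()):
        va, vb = a.get(path, MISSING), b.get(path, MISSING)
        if path not in approved and va != vb:
            drift[path] = (va, vb)
    return drift

staging = {"replicaCount": 2, "resources": {"limits": {"memory": "512Mi"}}, "featureFlags": {"newCheckout": True}}
prod = {"replicaCount": 6, "resources": {"limits": {"memory": "256Mi"}}, "featureFlags": {"newCheckout": True}}

if __name__ == "__main__":
    # replicaCount is expected to differ between environments, so it is approved.
    print(config_drift(staging, prod, approved={"replicaCount"}))
```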

Infrastructure and Performance Metrics

Helm deployments can introduce sudden load, impacting nodes, networking, and cluster performance. Monitoring infrastructure ensures smooth scaling after releases.

- CPU/Memory Utilization: Observe resource usage spikes after Helm upgrades. Misconfigured limits often cause throttling or OOM kills. Threshold: keep CPU <80% and memory <75% utilization post-release.

- Network Errors/Latency: Track packet loss and DNS errors when new services register. Networking misconfigurations can delay pod communication. Threshold: <1% error rate per release.

- API Server Latency: Helm interacts heavily with the Kubernetes API during installs/upgrades. Rising API latencies indicate overloaded control planes. Keep API response latency under 200ms.
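Encoding the targets above as data keeps post-release checks consistent across services. A minimal sketch, assuming you already have post-release readings for each metric; the metric names are hypothetical and the limits are the thresholds listed above.

```python
# Post-release limits from the list above (each value must stay below its limit).
THRESHOLDS = {
    "cpu_utilization_pct": 80.0,
    "memory_utilization_pct": 75.0,
    "network_error_rate_pct": 1.0,
    "api_server_latency_ms": 200.0,
}

def breaches(observed, thresholds=THRESHOLDS):
    """Return the metrics whose observed value meets or exceeds its limit."""
    return {name: value for name, value in observed.items()
            if name in thresholds and value >= thresholds[name]}

observed = {"cpu_utilization_pct": 86.5, "memory_utilization_pct": 61.0,
            "network_error_rate_pct": 0.4, "api_server_latency_ms": 240.0}

if __name__ == "__main__":
    print(breaches(observed))
```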

Common Helm Deployment Issues

- Failed Hooks: Pre- and post-install hooks may fail silently, blocking releases or leaving stale resources. Monitoring hook jobs ensures migration or cleanup errors are caught early, reducing rollout downtime.

- Misconfigured ConfigMaps and Secrets: Errors in values.yaml can break configurations, causing pods to crash or lose access to external systems. Continuous monitoring of applied configs helps detect mismatches before they impact production.

- Image and Registry Issues: Wrong image tags, missing credentials, or registry path errors trigger ImagePullBackOff events. Tracking image pull failures provides early warning so teams can fix references or credentials quickly.

- RBAC and Permission Errors: Missing Roles or RoleBindings stop workloads from accessing resources. By monitoring RBAC denials and service account bindings, you ensure apps run securely with the correct permissions.

- Upgrade and Rollback Failures: Incomplete upgrades or repeated rollback loops leave clusters unstable. Observing Helm release status and rollback events highlights instability early and reduces recovery time.
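Several of these issues surface as container waiting reasons in pod status, which makes them easy to classify automatically. This sketch maps a few well-known reasons onto the categories above; RBAC denials do not appear here and typically show up as `forbidden` errors in application or API server audit logs instead. Pod names are hypothetical.

```python
from collections import defaultdict

# Container waiting reasons that map onto the issue types above.
ISSUE_BY_REASON = {
    "ErrImagePull": "image/registry",
    "ImagePullBackOff": "image/registry",
    "InvalidImageName": "image/registry",
    "CreateContainerConfigError": "configmap/secret",  # e.g. a referenced ConfigMap/Secret key is missing
    "CrashLoopBackOff": "crash after start",
}

def classify_pods(pods):
    """Return {issue category: sorted pod names} from `kubectl get pods -o json` output."""
    found = defaultdict(set)
    for pod in pods.get("items", []):
        status = pod.get("status", {})
        containers = (status.get("initContainerStatuses") or []) + (status.get("containerStatuses") or [])
        for cs in containers:
            reason = ((cs.get("state") or {}).get("waiting") or {}).get("reason")
            if reason in ISSUE_BY_REASON:
                found[ISSUE_BY_REASON[reason]].add(pod["metadata"]["name"])
    return {category: sorted(names) for category, names in found.items()}

pods = {"items": [
    {"metadata": {"name": "checkout-1"},
     "status": {"containerStatuses": [{"state": {"waiting": {"reason": "ImagePullBackOff"}}}]}},
    {"metadata": {"name": "checkout-2"},
     "status": {"containerStatuses": [{"state": {"waiting": {"reason": "CreateContainerConfigError"}}}]}},
    {"metadata": {"name": "checkout-3"},
     "status": {"containerStatuses": [{"state": {"running": {}}}]}},
]}

if __name__ == "__main__":
    print(classify_pods(pods))
```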

How to Monitor Helm Deployments with CubeAPM

Monitoring Helm Deployments isn’t just about collecting logs and metrics — it’s about tying every Helm release event to what’s really happening in your Kubernetes cluster. With CubeAPM, you can map helm install, helm upgrade, or helm rollback directly to infrastructure health, logs, and application traces. Here’s how to set it up step by step.

Step 1: Install CubeAPM in Your Kubernetes Cluster

The first step is to deploy CubeAPM alongside your Helm workloads so it can observe release activity. Using the Kubernetes installation guide, you can install CubeAPM via Helm or manifests. Once running, it automatically starts collecting cluster events, pod states, and system metrics from the same environment where Helm releases are applied.

Step 2: Configure Helm-Aware Labels and Annotations

Each Helm release carries metadata such as release name, namespace, and revision. By setting up label collection in CubeAPM (see the configuration docs), you can tag all telemetry with Helm release details. This makes it easy to filter dashboards or queries by a specific release, for example, “show errors for helm upgrade checkout-service --namespace prod.”
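As a sketch of where release details come from: Helm 3 sets the `app.kubernetes.io/managed-by: Helm` label and the `meta.helm.sh/release-name` and `meta.helm.sh/release-namespace` annotations on resources it creates, while `app.kubernetes.io/instance` and `helm.sh/chart` are conventions from `helm create` that many charts follow. Pods created by a Deployment only carry the labels in its pod template, hence the fallbacks below. The output tag names are hypothetical rather than CubeAPM's schema, and the revision is not labeled by default.

```python
def helm_tags(metadata):
    """Derive release tags from a Kubernetes object's metadata, preferring Helm's own annotations."""
    labels = metadata.get("labels") or {}
    annotations = metadata.get("annotations") or {}
    tags = {
        "release": annotations.get("meta.helm.sh/release-name")
                   or labels.get("app.kubernetes.io/instance"),
        "release_namespace": annotations.get("meta.helm.sh/release-namespace")
                             or metadata.get("namespace"),
        "chart": labels.get("helm.sh/chart"),
        "managed_by_helm": labels.get("app.kubernetes.io/managed-by") == "Helm",
    }
    return {key: value for key, value in tags.items() if value not in (None, "")}

deployment_meta = {
    "name": "checkout-service", "namespace": "prod",
    "labels": {"app.kubernetes.io/managed-by": "Helm",
               "app.kubernetes.io/instance": "checkout-service",
               "helm.sh/chart": "checkout-2.3.0"},
    "annotations": {"meta.helm.sh/release-name": "checkout-service",
                    "meta.helm.sh/release-namespace": "prod"},
}
# A pod only inherits the pod-template labels.
pod_meta = {"name": "checkout-service-5c7b-x2", "namespace": "prod",
            "labels": {"app.kubernetes.io/instance": "checkout-service"}}

if __name__ == "__main__":
    print(helm_tags(deployment_meta))
    print(helm_tags(pod_meta))
```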

Step 3: Instrument Helm-Deployed Applications with OpenTelemetry

Helm charts often package multiple services (APIs, databases, and ingress). Adding OpenTelemetry instrumentation ensures CubeAPM traces requests across all workloads deployed via Helm. This lets you spot if a spike in errors or latency coincides with a particular chart version or values.yaml change.
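One way to attach Helm context to traces is the standard `OTEL_RESOURCE_ATTRIBUTES` environment variable, which OpenTelemetry SDKs read as comma-separated `key=value` pairs with percent-encoded values. `service.name`, `service.version`, and `k8s.namespace.name` are OpenTelemetry semantic conventions; the `helm.*` keys and all values are hypothetical. In a chart you would typically render these from `.Release.Name`, `.Chart.Version`, and `.Release.Revision`, keeping in mind that putting a per-upgrade value like the revision in the pod template triggers a rolling restart on every upgrade.

```python
from urllib.parse import quote

def otel_resource_attributes(attrs):
    """Render attributes for OTEL_RESOURCE_ATTRIBUTES: comma-separated key=value, values percent-encoded."""
    return ",".join(f"{key}={quote(str(value), safe='')}" for key, value in attrs.items())

attrs = {
    "service.name": "checkout-api",
    "service.version": "2.3.0",
    "k8s.namespace.name": "prod",
    "helm.release": "checkout",       # hypothetical custom attribute
    "helm.chart": "checkout-2.3.0",   # hypothetical custom attribute
    "helm.revision": 42,              # hypothetical custom attribute
}

if __name__ == "__main__":
    print("OTEL_RESOURCE_ATTRIBUTES=" + otel_resource_attributes(attrs))
```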

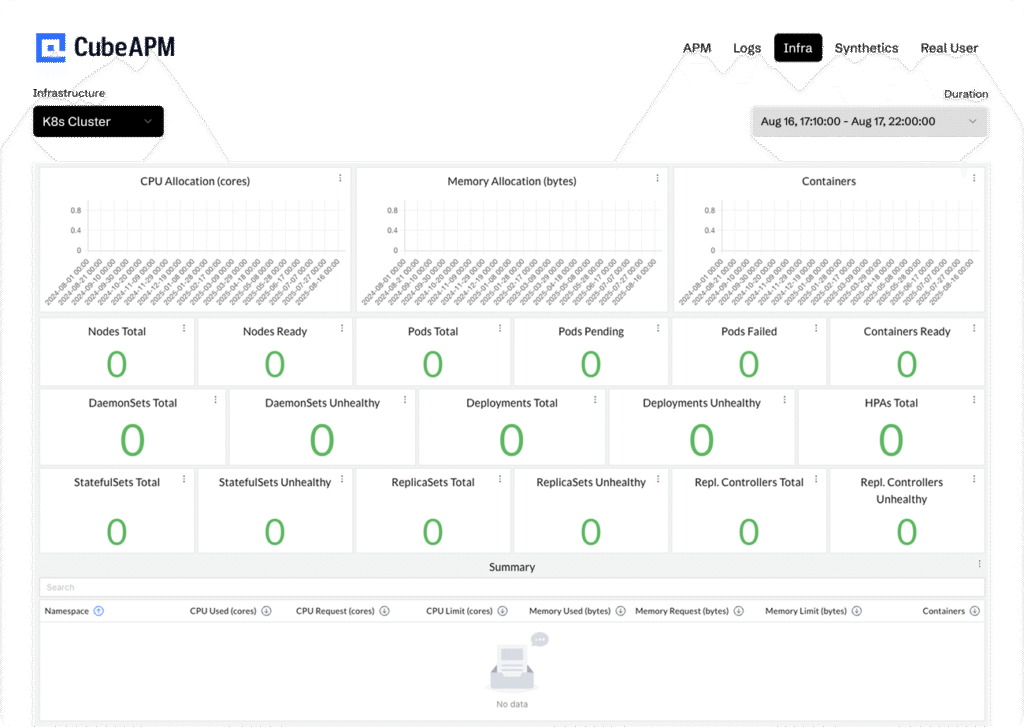

Step 4: Monitor Infrastructure Metrics After Upgrades

Helm upgrades often change pod specs, replicas, or resource allocations. With CubeAPM’s infrastructure monitoring, you can track CPU, memory, and node utilization immediately after each release. A sudden jump in resource usage usually signals a misconfigured values.yaml or dependency misalignment.

Step 5: Ingest and Correlate Helm & Kubernetes Logs

Helm CLI output only tells you if a release succeeded or failed. To go deeper, forward Kubernetes logs into CubeAPM using its log monitoring pipeline. This lets you correlate “helm upgrade failed” messages with pod-level issues like CrashLoopBackOff or failing Jobs, giving full context behind the failure.
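Correlation largely comes down to a shared label and a time window. This sketch keeps log records tagged with a given release that fall inside the upgrade window and match patterns that usually explain a failed upgrade; the record shape, the `release` label, and the patterns are assumptions.

```python
import re
from datetime import datetime, timedelta, timezone

# Patterns worth surfacing next to a "helm upgrade failed" message.
ERROR_PATTERN = re.compile(
    r"CrashLoopBackOff|BackoffLimitExceeded|OOMKilled|hook failed|permission denied",
    re.IGNORECASE,
)

def errors_in_release_window(records, release, start, end):
    """Log records tagged with `release` that fall in [start, end] and match ERROR_PATTERN."""
    return [
        r for r in records
        if r["labels"].get("release") == release
        and start <= r["ts"] <= end
        and ERROR_PATTERN.search(r["message"])
    ]

t0 = datetime(2025, 3, 4, 10, 0, tzinfo=timezone.utc)  # when the upgrade started
records = [
    {"ts": t0 + timedelta(minutes=1), "labels": {"release": "checkout"},
     "message": "Job checkout-db-migrate: BackoffLimitExceeded"},
    {"ts": t0 + timedelta(minutes=2), "labels": {"release": "checkout"},
     "message": "GET /health 200"},
    {"ts": t0 + timedelta(minutes=30), "labels": {"release": "checkout"},
     "message": "Back-off restarting failed container (CrashLoopBackOff)"},
]

if __name__ == "__main__":
    for r in errors_in_release_window(records, "checkout", t0, t0 + timedelta(minutes=10)):
        print(r["ts"].isoformat(), r["message"])
```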

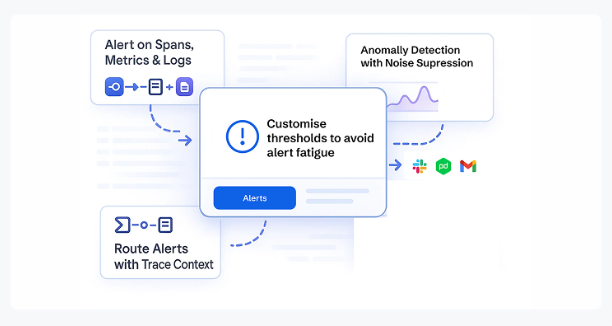

Step 6: Set Up Alerts for Failed Hooks and Rollbacks

A common pain in Helm deployments is silent hook failures or repeated rollback loops. With CubeAPM’s alerting integrations, you can trigger alerts when a release enters FAILED state, rollback loops persist, or pods remain unready beyond a defined threshold. These alerts keep your team ahead of downtime by flagging issues the moment they occur.
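The FAILED-state and rollback-loop conditions can be derived from the same `helm history RELEASE -o json` data. In this sketch the five-revision window and the limit of one rollback are hypothetical tuning values, and rollbacks are recognized by Helm 3's `Rollback to N` revision description.

```python
def rollback_alerts(history, last_n=5, max_rollbacks=1):
    """Return alert reasons derived from `helm history RELEASE -o json` entries."""
    recent = sorted(history, key=lambda h: h["revision"])[-last_n:]
    reasons = []
    if recent and recent[-1].get("status") == "failed":
        reasons.append(f"latest revision {recent[-1]['revision']} is FAILED")
    rollbacks = [h["revision"] for h in recent if h.get("description", "").startswith("Rollback to")]
    if len(rollbacks) > max_rollbacks:
        reasons.append(f"{len(rollbacks)} rollbacks in the last {len(recent)} revisions: {rollbacks}")
    return reasons

history = [
    {"revision": 6, "status": "superseded", "description": "Upgrade complete"},
    {"revision": 7, "status": "failed", "description": "Upgrade \"checkout\" failed: post-upgrade hooks failed"},
    {"revision": 8, "status": "superseded", "description": "Rollback to 6"},
    {"revision": 9, "status": "failed", "description": "Upgrade \"checkout\" failed: post-upgrade hooks failed"},
    {"revision": 10, "status": "deployed", "description": "Rollback to 8"},
]

if __name__ == "__main__":
    for reason in rollback_alerts(history):
        print("ALERT:", reason)
```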

Real-World Example: Monitoring Helm Deployments with CubeAPM

Challenge: Frequent Rollback Loops During Helm Upgrades

A SaaS company managing a multi-tenant platform deployed all its services with Helm. During upgrades, releases were frequently rolled back because of failing post-install hooks and pods stuck in CrashLoopBackOff. The Helm CLI showed only a generic “release failed,” leaving engineers blind to the root cause. This slowed recovery and often caused 20–30 minutes of downtime per release cycle.

Solution: End-to-End Observability with CubeAPM

The team installed CubeAPM in their Kubernetes cluster and configured Helm-aware labels. Every Helm release event was tagged with chart name, version, and namespace, then correlated with Kubernetes metrics, pod logs, and distributed traces. This made it clear which Helm hook or pod crashed immediately after an upgrade.

Fixes: Targeted Remediation with Better Visibility

Using CubeAPM’s log monitoring, engineers spotted that a failing database migration job was causing Helm to roll back. They adjusted the migration script, added resource limits in values.yaml, and used infrastructure monitoring to validate node performance during the next upgrade. Alerts were set up to trigger whenever a Helm release entered FAILED state.

Result: Faster Recovery and More Stable Releases

With CubeAPM in place, failed hooks and pod crashes were detected instantly. Rollback loops dropped by 40%, and release recovery time improved from 20+ minutes to under 5 minutes. The team gained confidence to push updates faster, reducing downtime and improving customer SLA compliance.

Best Practices for Monitoring Helm Deployments

- Enable Structured Logging: Capture Helm release events and Kubernetes logs in structured JSON format. This makes it easy to parse hook failures, rollbacks, or CrashLoopBackOff events in observability tools like CubeAPM.

- Correlate Helm Releases with Kubernetes Metrics: Don’t just stop at Helm’s CLI output. Link each release event to pod readiness, job completions, and service availability. This correlation highlights the real impact of a Helm upgrade on application health.

- Detect and Prevent Config Drift: Monitor for mismatches between values.yaml files across dev, staging, and production. Config drift is a common cause of Helm deployment failures in multi-environment setups.

- Set Granular Alerts Around Releases: Create alerts for failed hooks, repeated rollbacks, or pods stuck unready after Helm upgrades. Granular, release-specific alerts reduce noise compared to broad cluster-wide notifications.

- Use Data Retention Tiers: Not all release data needs to stay hot forever. Store 30 days of Helm logs and metrics in “hot” storage for quick debugging, and push older data into long-term retention for compliance or audits.

- Instrument Dependencies in Helm Charts: Sub-charts like databases, ingress controllers, or caches are often the hidden failure points. Ensure they’re instrumented with OpenTelemetry so you can trace issues back to the correct component.
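For structured logging, a deploy script that wraps `helm` could emit one JSON object per event. This sketch uses Python's standard `logging` module; the logger name and the release fields passed via `extra` are hypothetical.

```python
import json
import logging
from datetime import datetime, timezone

class JsonFormatter(logging.Formatter):
    """One JSON object per line; Helm release fields come from extra={"helm": {...}}."""
    def format(self, record):
        event = {
            "ts": datetime.fromtimestamp(record.created, timezone.utc).isoformat(),
            "level": record.levelname,
            "message": record.getMessage(),
        }
        event.update(getattr(record, "helm", {}))  # release, namespace, revision, ...
        return json.dumps(event, sort_keys=True)

logger = logging.getLogger("helm-deploy")
handler = logging.StreamHandler()
handler.setFormatter(JsonFormatter())
logger.addHandler(handler)
logger.setLevel(logging.INFO)

if __name__ == "__main__":
    logger.info("upgrade finished", extra={"helm": {
        "release": "checkout", "namespace": "prod", "revision": 42,
        "chart": "checkout-2.3.0", "status": "deployed"}})
```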

Verification Checklist & Example Alert Rules for Monitoring Helm Deployments

Use this quick, post-release pass to confirm a Helm upgrade is healthy. Each check maps cleanly to CubeAPM metrics/logs, so you can verify fast and automate alerts.

- Hooks & jobs succeeded: Confirm pre/post hook Jobs report Succeeded without runaway retries; this catches failed migrations and initializers early. Pass if: 100% of release-scoped hook Jobs completed within the deployment window.

- Pods ready & stable: New ReplicaSets reach Ready and stay there (no flapping readiness probes) for a few minutes after rollout. Pass if: >99% ready within 60–120s; zero NotReady after 5m.

- No CrashLoopBackOff / ImagePullBackOff: Scan for crash loops, image pull failures, or init container errors tied to the release labels. Pass if: 0 CrashLoopBackOff/ImagePullBackOff pods in the 5 minutes after deploy.

- Services have live endpoints: Every updated Service points to healthy pods; traffic should not 404/503 due to missing Endpoints. Pass if: 0 Services without Endpoints.

- Rollback/failed release absent: The release didn’t enter FAILED, and no automatic rollback was triggered. Pass if: 0 failed/rollback events for this revision.

- Error rate & latency within SLOs: Compare p95 latency and error rate to the pre-deploy baseline for the same service. Pass if: <5% regression (or your change budget) over a 10m window.

- Logs clean of critical errors: No “hook failed,” “permission denied,” or migration exceptions during the release window. Pass if: 0 critical errors tied to release labels.

- Config/values applied as intended: Values that influence limits, replicas, and feature flags match what was approved for this environment. Pass if: 0 unapproved drift items.

- Chart & image versions recorded: Chart version, app version, and image digests are captured as labels for traceability. Pass if: 100% telemetry tagged with release, chart, revision.
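The quantitative items in this checklist can be evaluated in one pass once the numbers are collected. The sketch below assumes a hypothetical `ReleaseSnapshot` filled from your metrics queries and applies the pass criteria listed above with a 5% regression budget; the log, config, and labeling checks are left out because they depend on how your telemetry is stored.

```python
from dataclasses import dataclass

@dataclass
class ReleaseSnapshot:  # hypothetical container for post-release measurements
    hook_jobs_total: int
    hook_jobs_succeeded: int
    ready_pct: float
    crashloop_or_imagepull_pods: int
    services_without_endpoints: int
    failed_or_rollback_events: int
    baseline_p95_ms: float
    post_p95_ms: float
    baseline_error_rate: float
    post_error_rate: float

def regression_pct(baseline, post):
    """Percent change relative to the pre-deploy baseline."""
    if baseline == 0:
        return 0.0 if post == 0 else float("inf")
    return 100.0 * (post - baseline) / baseline

def failed_checks(s, budget_pct=5.0):
    checks = {
        "hooks & jobs succeeded": s.hook_jobs_succeeded == s.hook_jobs_total,
        "pods ready": s.ready_pct > 99.0,
        "no CrashLoopBackOff/ImagePullBackOff": s.crashloop_or_imagepull_pods == 0,
        "services have endpoints": s.services_without_endpoints == 0,
        "no failed/rollback events": s.failed_or_rollback_events == 0,
        "p95 latency within budget": regression_pct(s.baseline_p95_ms, s.post_p95_ms) < budget_pct,
        "error rate within budget": regression_pct(s.baseline_error_rate, s.post_error_rate) < budget_pct,
    }
    return [name for name, ok in checks.items() if not ok]

snapshot = ReleaseSnapshot(2, 2, 100.0, 0, 0, 0, 200.0, 212.0, 0.5, 0.5)

if __name__ == "__main__":
    print(failed_checks(snapshot) or "release healthy")
```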

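Example Alert 1: Hook/Job Failure (Helm-aware)

```yaml
# Assumes Helm-aware label collection attaches release, revision, and job_type
# labels; stock kube-state-metrics labels kube_job_failed by namespace,
# job_name, and condition. condition="true" keeps only Jobs that actually failed.
alert: HelmHookJobFailed
description: "Helm hook job failed for release {{ $labels.release }} (rev={{ $labels.revision }})"
type: metric
query: sum by (release,namespace,revision) (kube_job_failed{job_type="helm-hook", condition="true"}) > 0
for: 2m
notify:
  - email: "[email protected]"
labels:
  severity: high
```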

Example Alert 2: Pods Not Ready After Upgrade

```yaml
# Assumes pod series carry a release label (for example joined from
# kube_pod_labels); kube_pod_status_ready itself is labeled by namespace,
# pod, and condition.
alert: PostDeployPodsNotReady
description: "Pods not Ready after Helm upgrade for {{ $labels.release }} in {{ $labels.namespace }}"
type: metric
query: sum by (release,namespace) (kube_pod_status_ready{condition="false"}) > 0
for: 3m
notify:
  - email: "[email protected]"
labels:
  severity: high
annotations:
  hint: "Check readinessProbe, resource limits, init containers; correlate with current revision."
```

Example Alert 3: Rollback/Failed Release Detected

```yaml
# Log-based rule; assumes log records are tagged with a release label.
alert: HelmReleaseFailedOrRolledBack
description: "Helm release failed/rolled back for {{ $labels.release }} (rev={{ $labels.revision }})"
type: logs
query: release="{{ $labels.release }}" AND ( "rollback" OR "release failed" OR "hook failed" )
window: 5m
threshold: count > 0
notify:
  - email: "[email protected]"
labels:
  severity: critical
annotations:
  hint: "Open values.yaml diff and hook job logs; validate Services→Endpoints and CrashLoopBackOff."
```

Pro tip: parameterize release, namespace, and revision in dashboards/alerts so you can reuse the same rules across environments with your Helm labels.

Conclusion

Monitoring Helm Deployments is essential for teams running Kubernetes at scale. Helm simplifies installs, upgrades, and rollbacks, but without visibility, even small misconfigurations can cascade into downtime, failed hooks, or unstable rollouts. Businesses need assurance that every release is healthy and traceable.

CubeAPM delivers that assurance with full-stack observability built for Helm. From metrics and logs to error tracing and infrastructure health, CubeAPM ties every Helm release event to real outcomes in your cluster. Its Smart Sampling and flat $0.15/GB pricing keep costs predictable without sacrificing depth.

With CubeAPM, you can monitor Helm Deployments end-to-end, reduce failures, and improve release confidence. Start monitoring smarter today with CubeAPM and keep your Kubernetes deployments always in check.

Disclaimer: The information in this article reflects the latest details available at the time of publication and may change as technologies and products evolve.

FAQs

1. Can I monitor Helm Deployments without installing extra tools?

Yes, you can use helm status, helm get all, and kubectl commands to get basic information. However, these are point-in-time checks and don’t provide continuous visibility. For production-grade monitoring, integrating with observability platforms like CubeAPM gives you logs, metrics, and alerts tied to each release.

2. How do I trace which Helm Deployment caused a specific issue?

You can enable release labels and annotations in your Helm charts. When telemetry is ingested into CubeAPM, you can filter by release, namespace, or revision to trace which deployment introduced the error or performance regression.

3. Is it possible to get real-time alerts for failed Helm Deployments?

Yes, tools like CubeAPM allow you to configure real-time alerts when a Helm release fails, hooks time out, or pods don’t reach readiness. This is more reliable than manually checking logs or running CLI commands after each release.

4. How can I monitor Helm rollbacks effectively?

Helm rollbacks often indicate instability. By monitoring release events and correlating them with pod health, you can see when and why a rollback was triggered. CubeAPM also alerts on repeated rollback loops so you can resolve root causes faster.

5. Can Helm Deployments monitoring integrate with GitOps workflows?

Yes. If you manage Helm charts with GitOps tools like Argo CD or Flux, CubeAPM can ingest deployment events and cluster metrics in real time. This lets you monitor not just the GitOps commit but also the Helm release impact on your workloads.