Telemetry data serves as an indicator of a system’s true health, performance, and behavior in modern cloud-native environments. Logs, metrics, and traces each capture a piece of reality about the system’s runtime behavior under real user load. However, many organizations continue to treat telemetry data as disposable exhaust rather than a monitored, high-value asset.

DevOps teams collect more telemetry, yet sometimes fail to get a clear picture of how things are running. Microservices and distributed systems are making systems more complicated. In fact, IDC estimates global enterprise data will reach nearly 394 zettabytes by 2028, with observability data contributing heavily to that growth, but much of this data adds storage and processing overhead without proportionally improving incident response.

Safely managing the telemetry lifecycle is now essential for maintaining system reliability, as mature observability can reduce resolution times by up to 66%. Beyond performance, rigorous management ensures compliance with global data privacy standards (like GDPR and HIPAA) and prevents spiraling infrastructure costs caused by unmonitored data growth.

From Separate Monitoring to Full Observability

Traditional monitoring tools only looked at one signal at a time. Modern architectures need full observability that links logs, metrics, and traces across serverless functions, temporary workloads, and deployments across multiple clouds. This transition increased data volumes and raised compliance stakes.

As a result, unsafe retention policies can violate regulations, and poor sampling can erase the signals needed during incidents. This high-stakes ecosystem calls for safe telemetry lifecycle management. In modern observability, retention, storage, and sampling are strategic decisions that determine whether your telemetry becomes a competitive advantage or a liability.

Defining “Safe” Management in Observability Telemetry

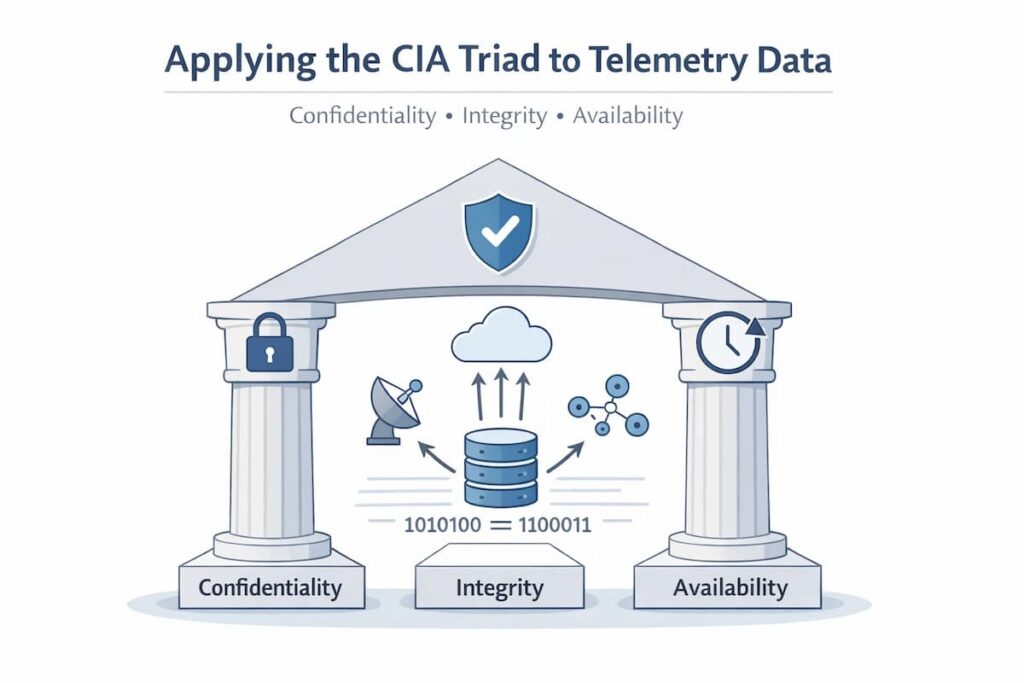

Applying the CIA Triad to Telemetry Data

CubeAPM anchors telemetry safety to the CIA Triad:

- Confidentiality: Sensitive telemetry, such as traces containing IP addresses, user IDs, or payload snippets, must be protected from unauthorized access.

- Integrity: Telemetry needs to be always complete and unaltered. Sampling, indexing, or storage failures that silently drop or bias data compromise trust.

- Availability: Engineers must reliably access telemetry when incidents occur, without data loss from premature purging or storage outages.

Telemetry must meet all three of these pillars to be considered safe.

Safety vs. Security vs. Compliance

While the terms “safety,” “security,” and “compliance” are often conflated, they are not interchangeable.

- Security primarily entails the prevention of unauthorized access or breaches.

- Compliance focuses on ensuring that there is strict adherence to regulatory frameworks.

- Safety, for its part, covers both security and compliance and also includes operational reliability and analytical correctness.

From these definitions, it is apparent that a system can be compliant and still remain unsafe. For instance, encrypting logs that are not being used meets the need for privacy, but it doesn’t stop biased sampling that hides rare failures.

Why Encryption Alone Isn’t Enough

In real environments, teams often assume encryption alone solves telemetry risk until governance, access control, and retention policies are tested during audits or incident response.

Encryption protects data from unauthorized reading, but it falls short on its own for three reasons:

- It doesn’t guarantee integrity. Corrupted indexes or misconfigured pipelines can alter datasets without anyone noticing.

- It doesn’t guarantee availability. Telemetry may be unreachable during incidents if encryption keys are lost or a region goes down.

- It doesn’t prevent sampling bias, which creates blind spots without triggering any alerts.

Because of these limits, safe telemetry management needs more than just cryptography. It needs governance, provenance, and constant validation.

Telemetry as a Governed Asset

Governance gaps usually surface as observability usage spreads across teams, environments, and regions without a single owner accountable for lifecycle decisions.

Modern telemetry carries production truths such as system performance, customer behavior, and failure modes. It should therefore be treated not as tooling exhaust but as a governed asset. As a governed asset, telemetry requires:

- Lifecycle policies

- Classification

- Stewardship

- Ownership

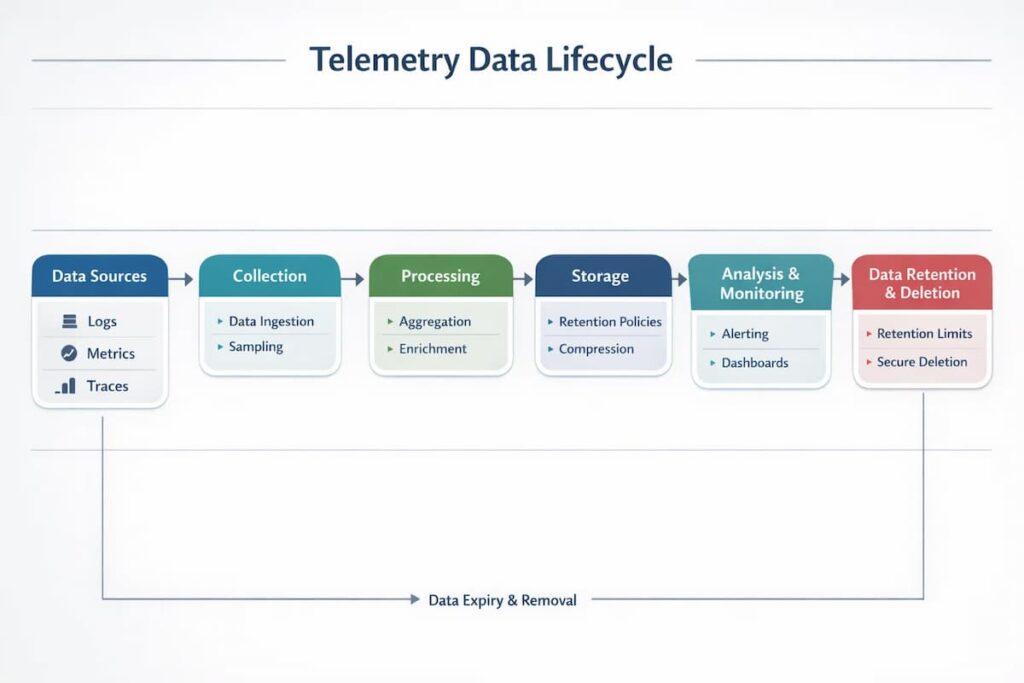

The Telemetry Data Lifecycle: Foundations for Safe Management

Safe telemetry starts with a holistic understanding of its lifecycle: instrumentation, ingestion, processing, retention, and deletion. Each stage carries its own safety risks and failure modes.

Data Collection and Instrumentation

Safety can be undermined as early as the instrumentation stage, where the risks are subtle yet significant. Several best practices help improve telemetry safety at this stage:

Standardization Through OpenTelemetry

Standardizing on OpenTelemetry as the instrumentation layer provides:

- Vendor-neutral signal generation, which prevents lock-in and opaque data handling.

- Semantic conventions that keep metrics, logs, and traces consistent across teams.

- Extensibility that lets you enrich and filter telemetry without having to re-instrument apps.

Therefore, standardization helps organizations avoid the accumulation of fragmented telemetry schemas that undermine correlation, governance, and long-term integrity.

Avoiding Over-Instrumentation and Signal Noise

Teams often discover over-instrumentation only after ingestion pipelines saturate or costs spike during incidents. Specifically, excessive span creation and high-cardinality labels lead to:

- Explosive data volumes that overwhelm ingestion pipelines.

- Higher storage spend for less useful information.

- Greater exposure of sensitive metadata.

Safe instrumentation therefore focuses on intentional signal design, including:

- Capturing spans at service boundaries, not every internal function call

- Avoiding unbounded labels, such as raw user IDs and session tokens.

- Prioritizing telemetry that supports defined SLOs and debugging workflows

Industry benchmarks reveal that an estimated 70% of spans in large environments provide little diagnostic value despite the fact that they consume the majority of observability budgets.
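
One way to catch unbounded labels before they reach production is to count distinct values per label over a sample of emitted telemetry. The sketch below assumes label sets arrive as plain dictionaries; the function name and the `max_unique` threshold are hypothetical and would need tuning for a real system.

```python
from collections import defaultdict


def find_high_cardinality_labels(samples, max_unique=100):
    """Return label names whose distinct-value count exceeds max_unique.

    `samples` is an iterable of dicts mapping label name -> label value.
    The threshold is an illustrative default, not a recommended limit.
    """
    seen = defaultdict(set)
    for labels in samples:
        for name, value in labels.items():
            seen[name].add(value)
    return sorted(name for name, values in seen.items() if len(values) > max_unique)


# Example: a raw user ID used as a label grows without bound, a route does not.
samples = [{"route": "/checkout", "user_id": f"u{i}"} for i in range(500)]
flagged = find_high_cardinality_labels(samples, max_unique=100)
```

A check like this can run in CI against recorded test traffic, so that a new unbounded label is flagged before it drives up cost.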

Explicit Classification of Sensitive Fields

Telemetry frequently contains sensitive data unintentionally. Examples of sensitive telemetry data include request headers, user identifiers, error payloads, or URL parameters. Safe instrumentation requires:

- Explicit tagging of fields likely to contain PII or regulated data

- Early masking, hashing, or tokenization at the source

- Metadata flags that downstream systems can enforce consistently

It is always important to identify sensitive data at collection time because it becomes extremely difficult to enforce confidentiality guarantees later in the lifecycle.
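
As a minimal sketch of classification and masking at the source, the snippet below applies a per-field policy to span or log attributes before they leave the process. The `FIELD_POLICY` map, the attribute names, and the helper names are assumptions made for illustration; a real deployment would load the policy from governed configuration and manage the tokenization key in a secrets store.

```python
import hashlib
import hmac

# Hypothetical classification map: attribute name -> handling rule.
FIELD_POLICY = {
    "user.id": "tokenize",
    "http.request.header.authorization": "drop",
    "client.address": "mask",
}


def tokenize(value: str, key: bytes) -> str:
    """Keyed hash: the same input always yields the same token, without exposing it."""
    return hmac.new(key, value.encode("utf-8"), hashlib.sha256).hexdigest()[:16]


def scrub_attributes(attrs: dict, key: bytes) -> dict:
    """Apply the field policy; attributes without a rule pass through unchanged."""
    cleaned = {}
    for name, value in attrs.items():
        rule = FIELD_POLICY.get(name, "keep")
        if rule == "drop":
            continue
        if rule == "tokenize":
            cleaned[name] = tokenize(str(value), key)
        elif rule == "mask":
            cleaned[name] = "***"
        else:
            cleaned[name] = value
    return cleaned
```

Tokenizing with a keyed hash keeps identifiers correlatable across signals while hiding the raw value from anyone who lacks the key.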

Ingestion and Processing Pipelines: Enforcing Safety at Scale

After the instrumentation stage, ingestion pipelines become a key element in controlling the safety, cost, and reliability of telemetry.

Intelligence at the Edge

Modern observability architectures are moving processing closer to the data source. Intelligence at the edge enables:

- Early filtering that discards low-value telemetry before it reaches centralized systems.

- Backpressure control that keeps traffic spikes from triggering outages.

- Policy enforcement, such as removing fields or skipping collection of signals that are not needed.

Edge-level intelligence reduces both risk and cost. In large-scale systems, filtering at the edge can cut downstream storage requirements.
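
To make early filtering and field removal concrete, here is a small sketch of an edge-side filter for log records. It assumes records are dictionaries with a `severity` key; the severity ordering, the function name, and the `request_body` field are illustrative choices, not part of any specific agent or collector.

```python
# Illustrative severity ranking; unknown severities are treated as INFO.
SEVERITY_ORDER = {"DEBUG": 10, "INFO": 20, "WARN": 30, "ERROR": 40}


def filter_at_edge(records, min_severity="INFO", drop_fields=("request_body",)):
    """Drop records below min_severity and strip fields that should never leave the host."""
    threshold = SEVERITY_ORDER[min_severity]
    kept = []
    for rec in records:
        level = SEVERITY_ORDER.get(rec.get("severity", "INFO"), SEVERITY_ORDER["INFO"])
        if level < threshold:
            continue
        kept.append({k: v for k, v in rec.items() if k not in drop_fields})
    return kept
```

Because the filter runs before data leaves the host, dropped records never consume network, ingestion, or storage capacity.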

Inline Enrichment Without Payload Duplication

Enrichment adds context to telemetry, but unsafe enrichment strategies can duplicate payloads, inflating both data size and exposure.

Safe enrichment practices include:

- Attaching lightweight references instead of copying large objects.

- Validating every record against a schema to stop malformed metadata.

- Using deterministic enrichment to avoid inconsistencies in the analysis.

These practices lower the risk of silent integrity damage, especially when different pipelines apply changes that don’t match up.
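
The following sketch illustrates reference-based, deterministic enrichment combined with a simple schema check. The required fields, the `deployment_ref` attribute, and both function names are hypothetical; the point is only that the record carries an identifier rather than a copy of the deployment object, and that the same input always produces the same output.

```python
# Hypothetical minimal schema: field name -> expected type.
REQUIRED_FIELDS = {"service": str, "region": str}


def enrich(record: dict, deployment_id: str) -> dict:
    """Attach a reference to deployment metadata instead of copying the whole object."""
    enriched = dict(record)  # leave the caller's record untouched
    enriched["deployment_ref"] = deployment_id
    return enriched


def validate(record: dict) -> list:
    """Return the names of required fields that are missing or have the wrong type."""
    return [
        name
        for name, expected_type in REQUIRED_FIELDS.items()
        if not isinstance(record.get(name), expected_type)
    ]
```

Records that fail validation can be routed to a quarantine stream rather than silently written to long-term storage.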

Integrated Anomaly Detection

Adding anomaly detection to ingestion pipelines makes it easier to find:

- Sudden increases in volume, which could indicate faulty instrumentation or an attack.

- Unexpected field values, which could indicate corrupted data.

- Sampling drift that skews which data is retained.

Detecting anomalies early keeps bad data out of long-term datasets, which protects both integrity and availability. As a result, telemetry stays reliable during incidents, when fast access to accurate information matters most.
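
A very simple form of volume anomaly detection compares the current ingestion count against recent history using a z-score. This is a sketch under the assumption that per-interval counts are already available; the function name and the threshold of 3 standard deviations are illustrative, and production systems often need seasonality-aware models instead.

```python
from statistics import mean, stdev


def is_volume_anomaly(history, current, z_threshold=3.0):
    """Flag `current` if it deviates from `history` by more than z_threshold sigmas.

    Works for both spikes and drops, since the absolute deviation is used.
    """
    if len(history) < 2:
        return False  # not enough data to estimate spread
    mu = mean(history)
    sigma = stdev(history)
    if sigma == 0:
        return current != mu
    return abs(current - mu) / sigma > z_threshold
```

Because the check uses the absolute deviation, the same function flags a sudden flood of events and an unexpected drop toward zero.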

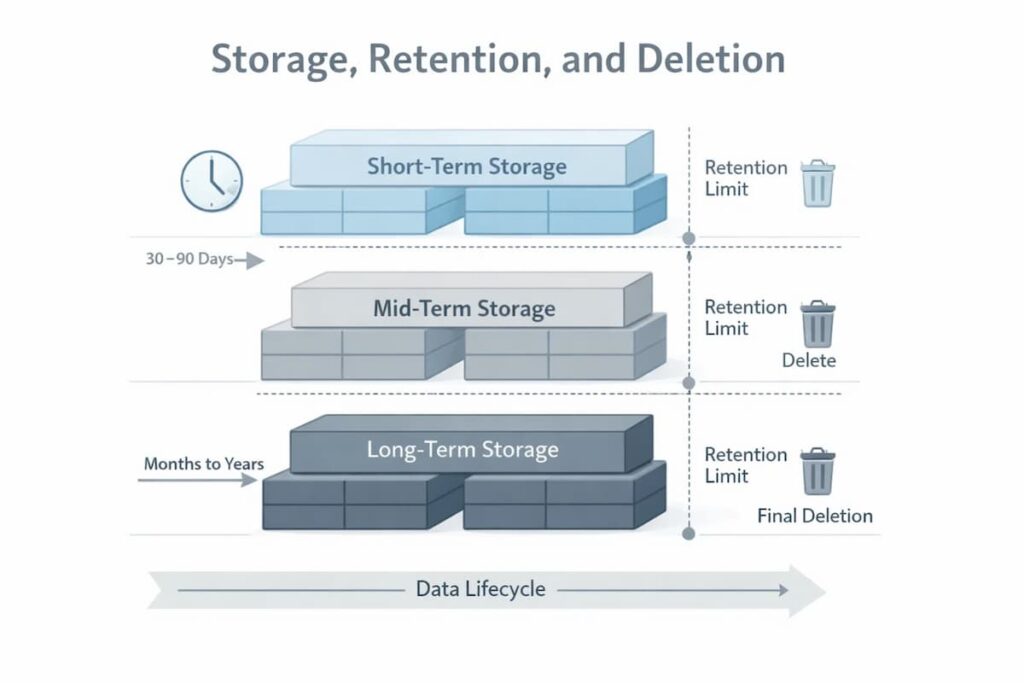

Storage, Retention, and Deletion

To keep data safe, you need more than simply a “delete” button; you need automated, policy-driven workflows. To do lifecycle management well, you need to:

- Set retention windows to the specific type of signal you’re tracking.

- Make sure that deletions are lawful and safe, with no chance of legal or technical problems.

- Build in safeguards to protect against accidental or premature data loss.

Signal-Aware Retention Strategies

Effective retention isn’t one-size-fits-all. It’s about balancing cost with utility. For example:

- High-volume, low-value logs should be kept on a short leash to save space.

- Aggregated metrics deserve longer storage to support meaningful trend analysis.

- Traces linked to incidents or SLO breaches should be locked down for as long as the investigation requires.

Ultimately, your retention policy should be driven by business risk and diagnostic value, not just the monthly storage bill.
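
A signal-aware policy can be expressed as a small lookup plus an expiry check. The retention windows below are made-up values for illustration only, and `incident_linked` is a hypothetical flag standing in for whatever mechanism marks data as tied to an incident or SLO breach.

```python
from datetime import datetime, timedelta, timezone

# Illustrative windows only; real values depend on business risk and compliance needs.
RETENTION = {
    "log": timedelta(days=7),
    "trace": timedelta(days=14),
    "metric": timedelta(days=395),
}


def is_expired(signal_type, created_at, now, incident_linked=False):
    """Return True when a record has outlived the window for its signal type.

    Records linked to an incident are never expired by this check.
    """
    if incident_linked:
        return False
    return now - created_at > RETENTION[signal_type]


now = datetime(2025, 1, 31, tzinfo=timezone.utc)
ten_days_old = now - timedelta(days=10)
log_expired = is_expired("log", ten_days_old, now)
trace_expired = is_expired("trace", ten_days_old, now)
```

Keeping the windows in one structure makes it straightforward to review them alongside other configuration.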

Retention decisions often change after outages, audits, or compliance reviews, when teams temporarily extend storage windows and later discover the long-term cost impact.

Guarding Against Silent Data Loss

Silent data loss is a dangerous failure mode in observability. This occurs when telemetry disappears without alerts. To avoid this, systems implement:

- Retention health monitoring.

- Alerts on unexpected drops in data volume.

- Validation checks to confirm policy execution.

Silent data loss is rarely visible at ingestion time and is often discovered only during post-incident analysis, when expected telemetry is missing or incomplete.

These safeguards help teams discover gaps early, before the missing data is needed.
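
One way to validate policy execution is to compare the age of the oldest stored record for each signal against the configured window. The sketch below assumes the system has been running for longer than every retention window and that some other job reports the oldest-record age in days; the function name, messages, and tolerance are hypothetical.

```python
def retention_violations(oldest_age_days, policy_days, tolerance_days=1):
    """Compare observed oldest-record age per signal with the retention policy.

    Returns a dict of signal -> finding for signals that look wrong.
    """
    findings = {}
    for signal, limit in policy_days.items():
        observed = oldest_age_days.get(signal)
        if observed is None:
            findings[signal] = "no data found"
        elif observed > limit + tolerance_days:
            findings[signal] = "purge overdue"
        elif observed < limit - tolerance_days:
            findings[signal] = "data missing before window end"
    return findings
```

A finding of "data missing before window end" is the signature of silent loss: the policy promises more history than the store actually holds.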

Transparency Gaps in Tooling

Lack of transparency is a major obstacle to safe telemetry management. In many proprietary observability tools:

- Sampling decisions are hidden, so teams cannot see why certain traces or logs were kept while others were dropped.

- Retention rules are described at a high level, but teams have no clear way to verify when data is deleted or how long it is actually stored.

- There is little to no visibility into data that has been discarded, modified, or downsampled, making it hard to trust what remains.

Because of this lack of transparency, teams have to assume safety instead of checking it.

This is often the point where teams begin reassessing their observability platforms and exploring alternatives that provide clearer visibility into sampling, retention, and data handling decisions.

On the other hand, open systems and unified observability platforms offer:

- End-to-end visibility across logs, metrics, and traces, so teams can see how data flows through the entire system.

- Clear, verifiable reporting on what data is retained, what is dropped, and how sampling is applied in practice.

- Continuous ability to inspect, validate, and audit telemetry lifecycle behavior instead of relying on assumptions.

Observability: Access Control, Auditing, and Governance

Zero-Trust Access Control

Safe observability platforms require:

- Role-Based Access Control (RBAC) scoped to teams and environments.

- Least-privilege defaults.

- No implicit trust, even for internal users.
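
A deny-by-default access check scoped to team and environment can be sketched in a few lines. The role names and the `ROLE_GRANTS` table are invented for this example; a real platform would back this with its identity provider and policy engine.

```python
# Hypothetical grants: role -> set of (team, environment) scopes it may query.
ROLE_GRANTS = {
    "payments-sre": {("payments", "prod"), ("payments", "staging")},
    "search-dev": {("search", "staging")},
}


def can_query(role: str, team: str, environment: str) -> bool:
    """Allow access only when an explicit grant exists; unknown roles get nothing."""
    return (team, environment) in ROLE_GRANTS.get(role, set())
```

The important property is the default: a role that is missing from the table, or a scope that is not listed, results in a denial rather than an implicit allow.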

Forensics and Auditing

Immutable audit trails are essential, and they should meet the following requirements:

- Every query, export, and policy change is recorded.

- Audit logs integrate with SIEM systems.

- Tamper resistance is non-negotiable.
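
One common building block for audit trails is a hash chain, in which each entry commits to the hash of the previous one. The sketch below is an illustration of that idea rather than any particular product’s implementation; note that it makes tampering detectable, and would still need write-once storage or external anchoring to make tampering difficult. The entry fields and function names are assumptions.

```python
import hashlib
import json

GENESIS = "0" * 64  # placeholder "previous hash" for the first entry


def _digest(body: dict) -> str:
    """Hash a canonical JSON encoding of the entry body."""
    return hashlib.sha256(json.dumps(body, sort_keys=True).encode("utf-8")).hexdigest()


def append_entry(chain: list, actor: str, action: str) -> None:
    """Append an audit entry that commits to the previous entry's hash."""
    prev_hash = chain[-1]["hash"] if chain else GENESIS
    body = {"actor": actor, "action": action, "prev": prev_hash}
    chain.append({**body, "hash": _digest(body)})


def verify_chain(chain: list) -> bool:
    """Recompute every hash and link; any edit to an earlier entry breaks the chain."""
    prev_hash = GENESIS
    for entry in chain:
        body = {"actor": entry["actor"], "action": entry["action"], "prev": entry["prev"]}
        if entry["prev"] != prev_hash or entry["hash"] != _digest(body):
            return False
        prev_hash = entry["hash"]
    return True
```

Periodically exporting the latest hash to a separate system, such as a SIEM, gives auditors an independent reference point for verification.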

Data Classification and Anonymization

Telemetry often contains sensitive information unintentionally. Safe systems support:

- Tokenization of identifiers.

- Field-level redaction.

- Context-aware anonymization.

Telemetry governance directly supports confidentiality and integrity across the CIA triad.

Fundamentals of Data Retention in Observability Tools

Retention is where safety, cost, and compliance all come together.

Retention Policy Models

Common models include:

- Time-based retention: fixed windows such as 7, 30, or 90 days.

- Event-based retention: data tied to incidents, errors, or service level agreement (SLA) violations is kept for as long as it is needed.

Granular policies work best, because metrics, logs, and traces usually need to be kept for different lengths of time.

Compliance and Automated Purging

Safe systems provide:

- Retention limits enforced by design

- Automated purging to keep storage from growing unchecked

- Legal-hold tooling for investigations
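
The interaction between automated purging and legal holds can be sketched as a selection step that runs before any deletion. The record shape, the `legal_hold_ids` set, and the function name are assumptions for illustration; the key behavior is that a hold always wins over the age check.

```python
from datetime import datetime, timedelta, timezone


def select_for_purge(records, now, max_age, legal_hold_ids):
    """Return IDs of records older than max_age, skipping anything under legal hold."""
    purge_ids = []
    for rec in records:
        if rec["id"] in legal_hold_ids:
            continue  # a legal hold overrides the normal retention window
        if now - rec["created_at"] > max_age:
            purge_ids.append(rec["id"])
    return purge_ids


now = datetime(2025, 6, 1, tzinfo=timezone.utc)
records = [
    {"id": "a", "created_at": now - timedelta(days=45)},
    {"id": "b", "created_at": now - timedelta(days=45)},
    {"id": "c", "created_at": now - timedelta(days=5)},
]
to_purge = select_for_purge(records, now, timedelta(days=30), legal_hold_ids={"b"})
```

Separating selection from deletion also leaves room to log or review the purge list before anything is removed.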

Best Practices

- Tiered storage across hot, warm, and cold tiers.

- Retention defined as configuration-as-code.

- Regular audits of policy effectiveness.

We’ve seen teams retain logs indefinitely “just in case,” only to later discover compliance violations or spiraling storage costs that forced emergency purges—often during active incidents.

Secure Storage Architectures for Observability Data

Storage Models

At scale, safe observability relies on:

- Distributed object stores that are S3-compatible.

- Columnar databases for high-cardinality traces

Security Protocols

- AES-256 encryption at rest.

- TLS in transit.

- Immutability features that prevent stored data from being altered.

These protections come with some trade-offs that need to be carefully managed instead of being ignored:

Immutability can make it harder to delete data when strict retention rules or erasure requests require it. We solve this problem by combining immutability with policy-driven retention windows and scoped delete mechanisms.

Encryption adds key-management risk. Telemetry may be unavailable during incidents if encryption keys are misconfigured, rotated, or lost. We deal with this problem by using controlled rotation policies, continuously checking key accessibility, and integrating centralized key management across all storage tiers.

Scaling Safely

- Compression and deduplication lower costs.

- Partitioning keeps sensitive data separate.

- Self-observing storage keeps an eye on its own health.

Encryption, immutability and zero-trust access ensure holistic telemetry safety.

Advanced Sampling Strategies and Their Safe Implementation

Sampling determines which parts of reality the system shows and which it hides.

Sampling Types

- Head-based sampling involves making a decision the moment a request starts. This is the easiest way to do it, and it keeps resource use low, but it could miss rare or complicated problems that don’t show up until later in the request’s life.

- Tail-based sampling waits until a request is fully complete before deciding whether to keep the data. This is much more effective for troubleshooting because it allows you to specifically target and save traces for errors or slow performance.

- Adaptive sampling adjusts collection rates automatically based on how busy the system is. This gives you high-resolution data when traffic is low and keeps storage from filling up when traffic is high.

- Intelligent sampling uses context-aware, rule-based logic to decide what matters. Rather than picking a random sample, it gives more weight to data tied to your business goals or key service-level objectives.
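
The difference between head-based and tail-based decisions is easiest to see side by side. The sketch below is a simplified illustration: the span dictionaries, field names, latency threshold, and baseline rate are all assumptions, and a real tail sampler also has to buffer spans until a trace is complete.

```python
import hashlib


def head_sample(trace_id: str, rate: float) -> bool:
    """Decide at request start from the trace ID alone, so every service agrees."""
    bucket = int(hashlib.sha256(trace_id.encode("utf-8")).hexdigest()[:8], 16)
    return bucket / 0x100000000 < rate  # bucket is mapped into [0, 1)


def tail_sample(spans, latency_threshold_ms=1000.0, baseline_rate=0.05):
    """Decide after the trace completes: always keep errors and slow traces.

    `spans` is assumed to be a non-empty list of dicts with `trace_id`,
    `duration_ms`, and an optional `error` flag.
    """
    if any(span.get("error") for span in spans):
        return True
    if max(span["duration_ms"] for span in spans) >= latency_threshold_ms:
        return True
    # Healthy traces fall back to a small deterministic baseline sample.
    return head_sample(spans[0]["trace_id"], baseline_rate)
```

The head-based function cannot know whether a request will fail, which is why it can miss rare errors; the tail-based function sees the outcome first and pays for that with buffering cost.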

Safety Measures

Safe sampling requires:

- Statistical bias detection.

- Guaranteed capture of rare or high-impact events.

- Incident-triggered full-fidelity modes.

Poorly chosen sampling, such as naive head-based sampling, can cause systems to miss serious issues such as outages. In contrast, probabilistic and tail-based strategies preserve insight while reducing volume by 70–90%.
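
A basic bias check compares how much of each outcome class survives sampling. This sketch assumes that pre-sampling and post-sampling counts per class are available as dictionaries; the class names, the function name, and the `min_keep_ratio` floor are hypothetical.

```python
def underrepresented_classes(total_by_class, kept_by_class, min_keep_ratio=0.01):
    """Return classes whose kept/total ratio falls below min_keep_ratio.

    A class that was observed but never kept has a ratio of 0 and is always flagged
    when min_keep_ratio is positive.
    """
    flagged = []
    for cls, total in total_by_class.items():
        if total == 0:
            continue  # nothing observed, nothing to lose
        keep_ratio = kept_by_class.get(cls, 0) / total
        if keep_ratio < min_keep_ratio:
            flagged.append(cls)
    return sorted(flagged)


observed = {"ok": 10000, "error": 50, "timeout": 5}
kept = {"ok": 500, "error": 50}
blind_spots = underrepresented_classes(observed, kept)
```

In this example the rare `timeout` class was observed but never retained, which is exactly the kind of blind spot that should raise an alert.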

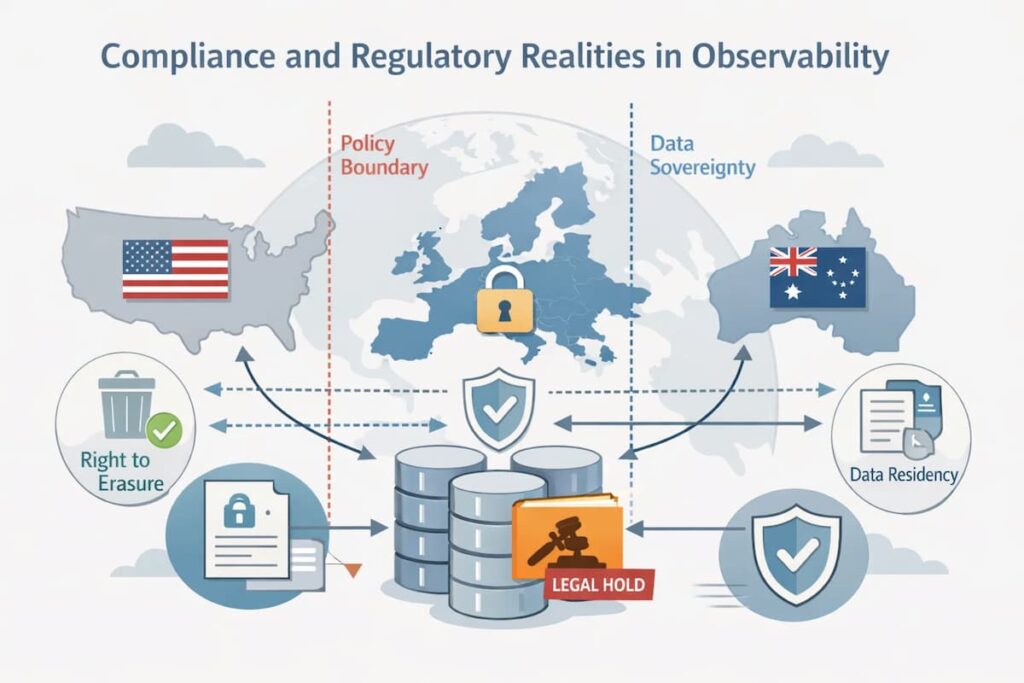

Compliance and Regulatory Realities in Observability

Compliance is dynamic and is influenced by a number of realities, including:

- Data residency and sovereignty requirements.

- Right-to-erasure workflows.

- Legal holds that override normal retention.

Cost Control Without Compromising Safety

The need to cut costs should never get in the way of getting good information.

Main Cost Levers

- Smart sampling

- Tiered retention

Safety Guardrails

- Protected forensic datasets

- Alerts on drop rates and coverage gaps

- Cost modeling aligned with SLOs

Companies that align observability spending with MTTR and SLOs consistently do better than those that only look at costs.

Dealing with Major Problems in Safe Management

Explosion of Data Volume

- Predictive scaling

- Edge processing

- Anomaly-driven extensions

Integrity and Privacy

- Provenance trails

- Corruption recovery

- PII anonymization

Interoperability

For safety in heterogeneous stacks, tools like Prometheus, Loki, and Jaeger need to follow consistent rules.

Best Ways to Manage Observability Data in a Safe and Complete Way

A tested framework:

- Classify telemetry data

- Write policies as code

- Apply the same approach across all signals

- Audit continuously

Success Metrics

- Data freshness

- Query latency

- Coverage ratios

- Retention and sampling health dashboards

Culture Matters

Safe observability requires a data stewardship mindset across DevOps and SRE teams, in addition to tooling changes.

At this stage, teams evaluating telemetry lifecycle strategies are usually balancing rapid system growth, longer retention needs, and increasing pressure to keep observability costs predictable and governed.

Applying Telemetry Lifecycle Principles in Practice (One Implementation Example)

Telemetry lifecycle principles such as controlled ingestion, intentional sampling, and retention aligned to operational needs can be implemented in different ways depending on architecture and governance requirements. One implementation example is how CubeAPM applies these concepts.

In this approach, telemetry is governed early in the pipeline rather than treated as unlimited downstream data. Key characteristics include:

- Sampling and filtering at ingestion, helping control volume while preserving high-value signals

- Retention shaped by investigative and compliance needs, rather than default long-term storage

- Clear separation between signal value and raw data volume, reducing over-instrumentation risk

Instead of retaining all telemetry indefinitely, retention windows can be adjusted when needed, such as during incidents or audits, without permanently increasing baseline storage costs.

It’s important to note that this is one way to apply telemetry lifecycle management. Teams with different operational models, compliance constraints, or preferences for fully managed SaaS platforms may implement these principles differently. The broader takeaway is that effective observability depends on treating telemetry as a governed asset with an explicit lifecycle, regardless of tooling choice.

If you want to see how different approaches affect cost, control, and long-term scalability, explore Comparing observability cost and control models and evaluate which model aligns best with your engineering and governance goals.

Conclusion

As cloud-native systems scale, telemetry becomes a critical source of operational truth rather than incidental exhaust. Logs, metrics, and traces increasingly influence how teams detect incidents, diagnose failures, and meet reliability and compliance requirements.

In practice, cost overruns, blind spots, and missing data tend to surface gradually as observability moves from early debugging into core production operations. These issues are rarely caused by tooling alone, but by how telemetry is generated, sampled, stored, and governed over time.

Effective telemetry lifecycle management requires deliberate trade-offs, clear ownership, and ongoing reassessment as systems evolve. Treating observability data as a governed asset rather than an unlimited stream helps teams balance visibility, cost, and trust at scale.

FAQ

1. What is telemetry lifecycle management?

Telemetry lifecycle management refers to how logs, metrics, and traces are generated, sampled, stored, retained, and eventually discarded over time. It treats observability data as a governed asset rather than unlimited exhaust, helping teams balance visibility, cost, reliability, and compliance.

2. When do teams usually need to think about telemetry lifecycle management?

Most teams begin addressing telemetry lifecycle challenges once systems reach sustained production scale, for example after adopting microservices, autoscaling, or always-on instrumentation. At this stage, telemetry volume often grows faster than application traffic, exposing cost, noise, and governance issues.

3. How does sampling affect observability reliability?

Sampling reduces telemetry volume but introduces trade-offs. While it helps control cost and storage, poorly designed sampling can create blind spots, especially during rare or high-impact incidents. Effective lifecycle management balances sampling strategies with the need to preserve critical signals during failures.

4. Why is retention an operational decision, not just a storage choice?

Retention affects more than storage costs. Longer retention can be essential during audits, investigations, or compliance reviews, while excessive default retention can increase costs without improving outcomes. Teams often adjust retention dynamically based on investigative value, compliance needs, and operational risk.

5. Does better tooling alone solve telemetry lifecycle problems?

No. While observability platforms can support lifecycle controls, long-term success depends on clear ownership, governance, and disciplined practices. Telemetry lifecycle management is ultimately an operational and organizational discipline, not just a tooling feature.