Distributed tracing tools are essential for microservices architectures, where a single request can traverse dozens of APIs, databases, and containerized services. The global distributed tracing software market is projected to grow at a 20% CAGR, reaching US$2.5 billion by 2032.

But traditional tracing platforms, designed for monoliths, struggle with incomplete context propagation, weak real-time visibility, and expensive data retention. Modern teams need tracing that captures trace IDs and spans across distributed systems, exposing latency, errors, and dependencies that logs or metrics alone cannot resolve.

CubeAPM is one of the best distributed tracing tools for microservices. It provides deep visibility into all your services, cross-layer correlation, high-granularity spans, SLAs, service map visualization, and more. It also offers OpenTelemetry-native support, smart sampling, and on-prem deployment.

In this article, we’ll explore the top distributed tracing tools for microservices—their features, pricing, and best use cases.

Top 8 Distributed Tracing Tools for Microservices

- CubeAPM

- Dynatrace

- New Relic

- Datadog

- Jaeger

- Zipkin

- Grafana Tempo

- Honeycomb

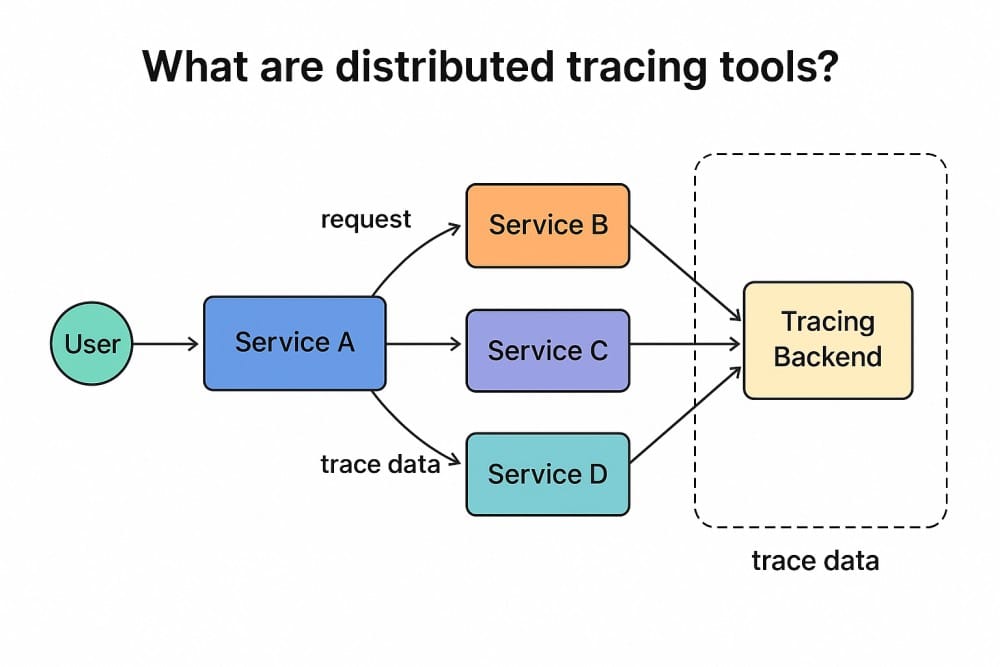

What Are Distributed Tracing Tools for Microservices?

Distributed tracing is the process of tracking user requests as they flow through multiple services in a system. Each request is assigned a trace ID, and every step along its journey is recorded as a span. These spans capture timing, metadata, and success or failure signals, which are then stitched together into a complete trace. This gives engineering teams an end-to-end timeline of how requests behave, far beyond what logs or metrics alone can reveal.
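As a rough illustration of the stitching step described above, the sketch below groups spans by trace ID and orders them by start time. The `Span` fields and the sample data are assumptions made for this example, not any vendor's schema.

```python
from collections import defaultdict
from dataclasses import dataclass
from typing import Optional


@dataclass
class Span:
    trace_id: str             # shared by every span in one request
    span_id: str              # unique per step
    parent_id: Optional[str]  # None for the root span
    service: str
    start_ms: int
    duration_ms: int
    error: bool = False


def group_by_trace(spans):
    """Stitch spans into traces: one list per trace ID, ordered by start time."""
    grouped = defaultdict(list)
    for span in spans:
        grouped[span.trace_id].append(span)
    for trace in grouped.values():
        trace.sort(key=lambda s: s.start_ms)
    return dict(grouped)


# Hypothetical spans from two interleaved requests, arriving out of order.
spans = [
    Span("t1", "b", "a", "pricing", 5, 20),
    Span("t2", "x", None, "cart", 0, 15),
    Span("t1", "a", None, "cart", 0, 60),
    Span("t1", "c", "a", "payment", 30, 25, error=True),
]

traces = group_by_trace(spans)
for trace_id, trace in traces.items():
    print(trace_id, [(s.service, s.start_ms, s.duration_ms) for s in trace])
```

Real backends do the same grouping at much larger scale and also use the parent IDs to rebuild the call tree.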

In monolithic systems, debugging is simpler because all logic lives in a single codebase. But in microservices, a single action can span dozens of independently deployed services, often managed by different teams. Without distributed tracing, diagnosing issues like cascading failures, bottlenecks, or latency spikes becomes extremely difficult. Distributed tracing fills this gap by mapping dependencies across services and showing exactly where things break down.
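One way to picture dependency mapping: every child span whose parent belongs to a different service represents a service-to-service call. The sketch below counts those edges. The dictionary keys (`span_id`, `parent_id`, `service`) and the service names are hypothetical.

```python
from collections import Counter


def dependency_edges(spans):
    """Count caller -> callee service edges from parent/child span links."""
    service_of = {s["span_id"]: s["service"] for s in spans}
    edges = Counter()
    for s in spans:
        parent_service = service_of.get(s["parent_id"])
        # Skip root spans and calls that stay inside one service.
        if parent_service is not None and parent_service != s["service"]:
            edges[(parent_service, s["service"])] += 1
    return edges


# Hypothetical spans from a single request.
example_spans = [
    {"span_id": "1", "parent_id": None, "service": "checkout"},
    {"span_id": "2", "parent_id": "1", "service": "cart"},
    {"span_id": "3", "parent_id": "1", "service": "payment"},
    {"span_id": "4", "parent_id": "3", "service": "bank-gateway"},
    {"span_id": "5", "parent_id": "3", "service": "payment"},  # internal step
]

edges = dependency_edges(example_spans)
for (caller, callee), count in sorted(edges.items()):
    print(f"{caller} -> {callee}: {count}")
```

Aggregating these edges over many traces is, in spirit, how a service map can be derived from trace data.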

Example: Trace the Entire Checkout Flow with CubeAPM

In a microservices-based e-commerce app, a single checkout request may pass through Cart, Pricing, Inventory, Payment, and Shipping services. When the checkout slows down, it’s often unclear which service is to blame.

With CubeAPM’s distributed tracing, every call is automatically tagged with trace and span IDs, letting teams see the full journey in one timeline. Intelligent sampling ensures that error-prone or high-latency spans (e.g., a slow Payment API) are always retained, while keeping storage costs predictable. Engineers can instantly pinpoint the bottleneck, correlate it with logs and metrics, and resolve the issue before it impacts more customers.
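To make the idea of pinpointing the bottleneck concrete, here is a minimal sketch that computes each span's self time (its duration minus the time spent in its direct children) for a made-up checkout trace. It is not CubeAPM's implementation. The durations are invented, and the calculation assumes child calls run one after another without overlap.

```python
def self_times(spans):
    """Return {service: self_time_ms}: span duration minus its direct children.

    Assumes sequential (non-overlapping) child calls and one span per service.
    """
    child_total = {}
    for s in spans:
        parent = s["parent_id"]
        if parent is not None:
            child_total[parent] = child_total.get(parent, 0) + s["duration_ms"]
    return {
        s["service"]: s["duration_ms"] - child_total.get(s["span_id"], 0)
        for s in spans
    }


# Hypothetical checkout trace; all numbers are illustrative.
checkout_trace = [
    {"span_id": "1", "parent_id": None, "service": "Checkout", "duration_ms": 1250},
    {"span_id": "2", "parent_id": "1", "service": "Cart", "duration_ms": 40},
    {"span_id": "3", "parent_id": "1", "service": "Pricing", "duration_ms": 60},
    {"span_id": "4", "parent_id": "1", "service": "Inventory", "duration_ms": 80},
    {"span_id": "5", "parent_id": "1", "service": "Payment", "duration_ms": 950},
    {"span_id": "6", "parent_id": "1", "service": "Shipping", "duration_ms": 70},
]

times = self_times(checkout_trace)
bottleneck = max(times, key=times.get)
print(bottleneck, times[bottleneck])
```

In this made-up trace the Checkout span is slow overall, but almost all of that time is spent waiting on Payment, which is the kind of conclusion a trace waterfall makes visible at a glance.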

Why It Matters

Did you know that intelligent sampling can reduce trace storage needs by up to 80% while still preserving almost all critical error and latency insights?

For developers and SREs, distributed tracing reduces mean time to resolution (MTTR) by pinpointing issues in complex microservice chains. For non-technical stakeholders, trace visualizations clearly show how dependencies affect customer experience, for example by explaining why a “slow payment API” might stall the entire checkout process.

Why Teams Choose Different Distributed Tracing Tools for Microservices

Cost Model vs. Traffic Shape

Microservices often generate high volumes of short-lived requests, which makes costs hard to predict under host-based or tiered per-GB pricing. Teams look for tools that offer transparent, predictable pricing, such as a flat ingestion rate, or OSS backends with cheap storage. This ensures observability scales with the business instead of punishing teams as services multiply.

Tail-Based and Dynamic Sampling

Because microservices produce massive amounts of spans, storing every trace is impractical. Teams prefer solutions with tail-based or dynamic sampling, which retain only slow, error-prone, or high-value traces while dropping routine noise. This keeps costs under control without sacrificing the traces needed for effective debugging.
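The decision logic behind tail-based sampling can be sketched in a few lines: wait until the whole trace has been collected, then keep it if it contains an error or a slow span, and otherwise keep only a small random share. The threshold, the baseline rate, and the span keys below are illustrative assumptions, not defaults from any particular tool.

```python
import random


def keep_trace(spans, latency_threshold_ms=500, baseline_rate=0.05, rng=random):
    """Decide, after the trace has completed, whether to retain it.

    spans: non-empty list of dicts with hypothetical keys
    "duration_ms" and "error".
    """
    if any(s["error"] for s in spans):
        return True  # always keep traces that contain an error
    if max(s["duration_ms"] for s in spans) >= latency_threshold_ms:
        return True  # always keep slow traces
    # Keep a small random share of routine traffic as a baseline.
    return rng.random() < baseline_rate


routine = [{"duration_ms": 12, "error": False}, {"duration_ms": 30, "error": False}]
slow = [{"duration_ms": 12, "error": False}, {"duration_ms": 900, "error": False}]
failed = [{"duration_ms": 12, "error": True}]

print(keep_trace(slow), keep_trace(failed))
```

The trade-off is that the sampler has to buffer every span of a trace until the trace finishes, which is why tail-based sampling usually runs in a collector tier rather than inside each service.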

OpenTelemetry Maturity

OpenTelemetry (OTel) has become the standard for microservices instrumentation. The depth of OTel support (collectors, semantic conventions, SDKs) directly influences tool adoption, since teams want vendor flexibility and consistent instrumentation across services. Tools with strong OTel maturity reduce vendor lock-in and speed up onboarding of new microservices.

Kubernetes and Service Mesh Awareness

Modern microservices run on Kubernetes and service meshes like Istio or Linkerd. Engineers choose tracing tools that automatically discover workloads, visualize pod-to-pod traffic, and map service-to-service dependencies without manual setup. This helps teams quickly diagnose cross-service latency inside dynamic, ephemeral environments.

Query Power and Usability for Large Traces

When debugging across dozens of microservices, engineers need fast, expressive query capabilities and intuitive UIs. Features like Grafana’s TraceQL or Honeycomb’s BubbleUp help teams drill into high-cardinality data and spot bottlenecks quickly. Without these capabilities, teams waste valuable time hunting for the right trace among millions.

Correlation Across MELT (Metrics, Events, Logs, Traces)

Distributed tracing alone is not enough. Teams value tools that correlate spans with logs, metrics, and events, enabling a full-stack view of incidents and helping reduce mean time to resolution (MTTR). This correlation accelerates incident response by showing both the what (traces) and the why (logs/metrics).

Data Residency, Retention, and Compliance

In industries like finance or healthcare, compliance is non-negotiable. Teams often select tracing tools that support regional data storage, long retention policies, or on-prem deployment, ensuring regulatory alignment without inflated costs. This also prevents sensitive microservice traces from leaving controlled environments.

Auto-Instrumentation vs. Engineering Effort

With dozens of services to manage, auto-instrumentation can save enormous developer time. Tools like Dynatrace’s OneAgent automate discovery and tracing, while OSS options like Jaeger require more manual setup but provide greater control. The choice often depends on whether teams prefer speed of deployment or long-term customization.

High-Cardinality and Scale Handling

Microservices generate traces tagged with attributes like tenant ID, region, and request IDs. Not all tools handle this well. Teams gravitate toward platforms that can manage high-cardinality data at scale without slowing down queries or dashboards. This capability is crucial for multi-tenant SaaS platforms or globally distributed services.

Top 8 Distributed Tracing Tools for Microservices

1. CubeAPM

Overview

CubeAPM is an OpenTelemetry-native application monitoring platform that caters to hybrid and cloud-native microservices. It unifies traces, logs, metrics, RUM, and synthetics, runs on-prem for full data control, and is positioned as a cost-efficient, privacy-first alternative to legacy suites. The platform advertises 800+ integrations across cloud, DevOps, and incident tooling, so it plugs cleanly into existing stacks.

Key Advantage

Real-time, end-to-end visibility for microservices powered by smart (AI-driven) sampling and interactive service graphs. You can run full-fidelity (zero-sampling) tracing for critical paths when you need every span, then switch to tail-aware sampling to retain error/slow traces at scale—keeping storage and costs predictable without losing signal. Combined with RED/SLO views and contextual alerts, this shortens MTTR in busy, multi-team environments.

Key Features

- End-to-end distributed tracing across services: See end-to-end latency across services, APIs, and databases.

- Cross-layer correlation: Jump from a trace to related logs, infrastructure data, and metrics to debug faster.

- High granularity spans: Drill deeper into your spans with full metadata, contextual attributes, and stack traces.

- Root Cause Identification: Spot bottlenecks instantly, whether it’s a slow DB query, API timeout, or code regression.

- Service-level intelligence: Track RED metrics per service/endpoint, drill into SLO violations and error hotspots, and visualize service graphs with live latency/error overlays.

- Infrastructure-aware insights: Span-level DB latency breakdowns, external API monitoring, and runtime stats (memory, GC, threads) tied to traces.

- Context-rich alerting: Alert on any span/metric/log combo, anomaly detection with noise suppression, route to Slack/PagerDuty/email with trace context.
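For readers unfamiliar with RED metrics (Rate, Errors, Duration), the sketch below shows one plausible way to derive them per service from span data. It is a generic illustration, not CubeAPM's implementation. The span keys, the sample values, and the nearest-rank p95 method are assumptions chosen for brevity.

```python
from collections import defaultdict


def red_metrics(spans, window_seconds):
    """Compute Rate, Errors, and Duration (p95) per service for one window."""
    by_service = defaultdict(list)
    for s in spans:
        by_service[s["service"]].append(s)

    result = {}
    for service, items in by_service.items():
        durations = sorted(s["duration_ms"] for s in items)
        # Nearest-rank p95: rank = ceil(0.95 * n), computed with integers.
        p95_index = -(-95 * len(durations) // 100) - 1
        result[service] = {
            "rate_per_s": len(items) / window_seconds,
            "error_ratio": sum(s["error"] for s in items) / len(items),
            "p95_ms": durations[p95_index],
        }
    return result


# Hypothetical spans observed in a 2-second window.
window = [
    {"service": "payment", "duration_ms": 100, "error": False},
    {"service": "payment", "duration_ms": 120, "error": False},
    {"service": "payment", "duration_ms": 110, "error": False},
    {"service": "payment", "duration_ms": 900, "error": True},
]

metrics = red_metrics(window, window_seconds=2)
print(metrics["payment"])
```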

Pros

- Transparent ingestion pricing with predictable scale

- 800+ integrations; easy fit with cloud, DevOps, and incident tools

- OpenTelemetry-first architecture; quick lift-and-shift from other agents

- Scalable solution at lower cost; saves 60-80% compared with traditional SaaS solutions

- Low-latency on-prem processing; 4x faster trace visualization

- Deployment flexibility on cloud, hybrid, or on-prem for data residency and compliance

Cons

- May not suit businesses focusing on SaaS-only platforms

- No cloud security management capabilities available

CubeAPM Pricing at Scale

CubeAPM uses a transparent pricing model of $0.15 per GB ingested. For a mid-sized business generating 45 TB (~45,000 GB) of data per month, the monthly cost would be ~$7,200/month.

*All pricing comparisons are calculated using standardized Small/Medium/Large team profiles defined in our internal benchmarking sheet, based on fixed log, metrics, trace, and retention assumptions. Actual pricing may vary by usage, region, and plan structure. Please confirm current pricing with each vendor.
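As a sanity check on flat per-GB pricing, the arithmetic can be reproduced directly. The sketch below multiplies volume by the quoted rate and nothing else. A straight multiplication of 45,000 GB at $0.15 gives about $6,750, so the ~$7,200 figure above presumably reflects additional assumptions from the benchmarking profile that are not broken out here.

```python
def flat_ingest_cost(gb_per_month, price_per_gb):
    """Monthly cost under a flat per-GB ingestion rate, with no other charges."""
    return gb_per_month * price_per_gb


monthly_gb = 45_000   # 45 TB expressed in decimal GB
rate_per_gb = 0.15    # quoted flat ingestion rate in USD

cost = flat_ingest_cost(monthly_gb, rate_per_gb)
print(f"${cost:,.0f}/month")
```

The same function can be reused to compare any flat-rate quote. Models with per-host, per-user, retention, or query charges need those terms added separately.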

Tech Fit

Strong for Kubernetes, containers, and serverless running Java, Go, Python, Node.js, and .NET via OpenTelemetry. Plays well alongside Prometheus/Grafana and common incident channels (Slack, PagerDuty), and can be self-hosted to satisfy compliance and data-localization requirements.

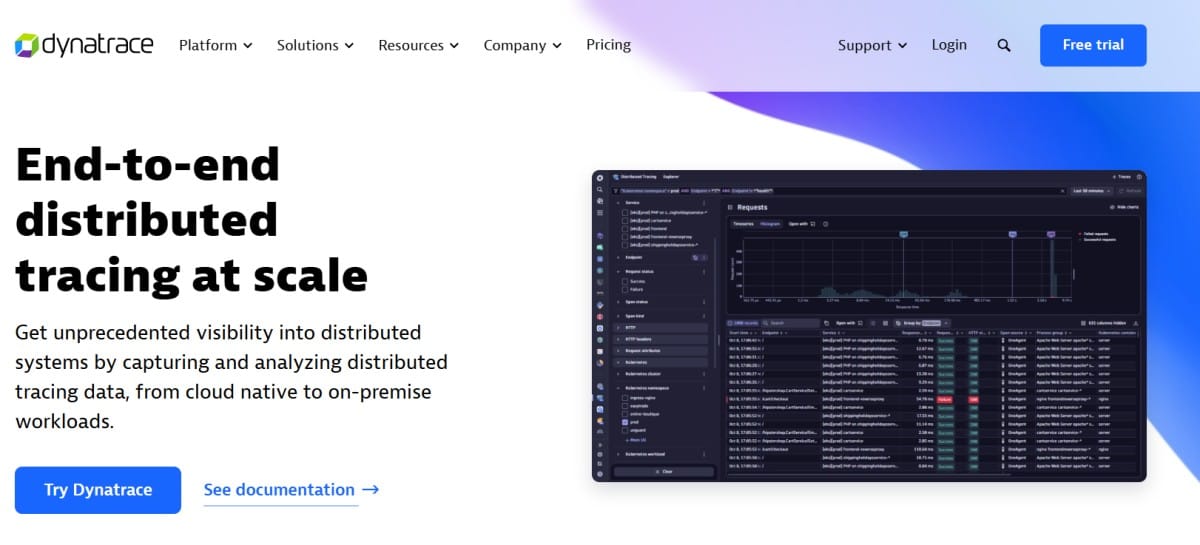

2. Dynatrace

Overview

Dynatrace is an enterprise observability platform known for automatic instrumentation (OneAgent), end-to-end distributed tracing (PurePath), and AI-assisted root cause analysis (Davis AI) at large microservices scale. It links traces with logs, metrics, topology (Smartscape), RUM, and security, and it natively ingests OpenTelemetry alongside its own agent, backed by Grail®, a causational data lakehouse for long-term trace retention and powerful querying.

Key Advantage

Hands-off, at-scale microservices tracing with AI assistance. Dynatrace excels when you want automatic discovery and tracing across fast-changing Kubernetes and serverless estates. Its Davis AI engine cuts through monitoring noise to surface the precise root cause across services, pods, and dependencies. With Grail, teams can query raw trace data in near real-time and retain it for years, making it particularly strong for enterprises managing sprawling service architectures.

Key Features

- End-to-end distributed tracing with code-level visibility and deep analysis.

- OpenTelemetry ingest plus OneAgent for seamless auto-instrumentation.

- Davis AI performs automated baselining and RCA on microservices.

- Smartscape topology mapping to visualize service and infrastructure dependencies.

- Trace retention in Grail with flexible retention and powerful querying options.

Pros

- Auto-instrumentation reduces onboarding time in Kubernetes/serverless setups

- Strong AI-based RCA from Davis AI, informed by service topology

- Unified observability across traces, logs, metrics, RUM, and security

- Large integration ecosystem and full OpenTelemetry compatibility

Cons

- Expensive for smaller teams

- Learning curve

Dynatrace Pricing at Scale (45 TB/month example)

Dynatrace pricing is based on a transparent but multi-dimensional model. For distributed tracing with Grail, ingest costs around $0.20 per GiB, with additional charges for retention and queries.

For a similar 45 TB volume, the cost would be $21,850/month.

Tech Fit

Dynatrace is best for large-scale, dynamic microservices deployed on Kubernetes, containers, and serverless (AWS Lambda, Azure Functions). It’s well-suited for enterprises that want one platform to cover traces, logs, metrics, user experience, and even security. Dynatrace supports a wide range of languages and frameworks, including Java, .NET, Go, Node.js, and Python, through OneAgent and OpenTelemetry.

3. New Relic

Overview

New Relic offers a mature distributed tracing solution for microservices. It supports standard (head-based) sampling through its language agents and tail-based sampling with Infinite Tracing. It also natively ingests OpenTelemetry (OTLP), making it easy to bring in data from diverse microservices environments. Its tracing UI allows teams to search, filter, and analyze spans in detail, making it easier to debug complex service chains.

Key Advantage

Flexible sampling with a polished trace UI. Teams can start with standard tracing and then scale into Infinite Tracing to analyze 100% of spans and retain the most important ones. Combined with span-attribute search and interactive waterfalls, New Relic gives engineering teams the visibility they need to manage noisy, high-volume microservices systems.

Key Features

- Standard and tail-based sampling: Choose between agent-led tracing and Infinite Tracing for large-scale microservices.

- Trace search & filtering: Find traces by service, error type, latency, or custom span attributes.

- OpenTelemetry ingest: Native OTLP endpoint and collector for seamless instrumentation.

- UI with detailed trace info: Offers waterfall and attribute views so you can better understand request journeys across services.

- Architecture guidance: Documentation and tooling to plan consistent tracing across teams.

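Pros

- Flexible sampling: standard (head-based) tracing through agents, plus tail-based sampling with Infinite Tracing

- Native OpenTelemetry (OTLP) ingest for diverse microservices environments

- Polished trace UI with span-attribute search and interactive waterfalls

Cons

- SaaS-only; no self-hosting available

- Complex pricing due to a mix of per-user and ingestion-based charges

- Can be expensive as services scale because of per-GB pricing

- Infinite Tracing adds extra setup and configuration overhead

- Cost forecasting is harder compared to flat-rate models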

New Relic Pricing at Scale (45 TB/month example)

New Relic includes 100 GB/month free, after which ingest is billed at $0.40/GB (with a higher tier at $0.60/GB).

For a business ingesting 45 TB of data per month, the cost would come to around $25,990/month.

Tech Fit

New Relic is best for teams running diverse microservices architectures that want a balance of agent-based tracing and OTel ingest pipelines. It works with languages such as Java, .NET, Python, PHP, and others, and integrates with Kubernetes (K8s), serverless, and hybrid environments.

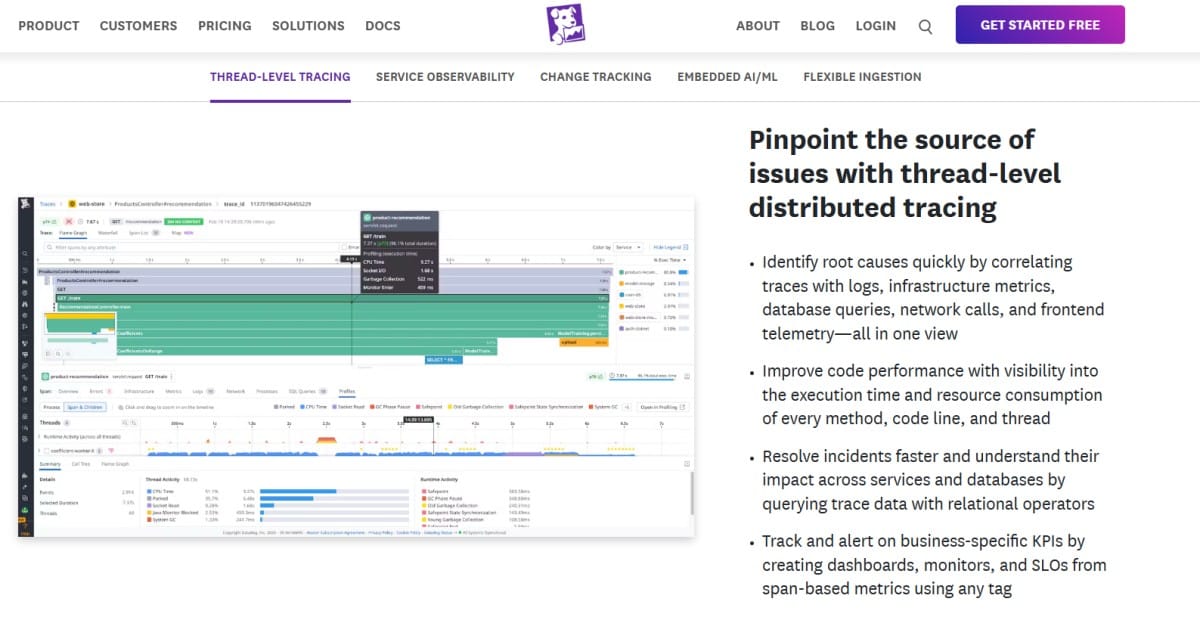

4. Datadog

Overview

Datadog offers end-to-end distributed tracing for your microservices. It auto-discovers services, visualizes call graphs with Service Map, lets teams search millions of spans in Trace Explorer, and correlates traces with logs, metrics, RUM, and synthetics. Datadog supports OpenTelemetry ingestion alongside its own tracers and offers built-in controls to manage trace volume through ingestion sampling and intelligent retention filters.

Key Advantage

Microservices-friendly tracing with powerful volume controls. In fast-moving Kubernetes estates that generate huge span volumes, Datadog’s intelligent retention filters and ingestion controls help teams retain slow/error traces and key business flows while shedding routine traffic. Coupled with Service Map and Trace Explorer, this makes it easier to zero in on the exact hop or dependency causing latency without drowning in data.

Key Features

- Service Map and Trace Explorer: Auto-discover microservices, visualize dependencies, and drill into spans and attributes at scale.

- Ingestion sampling & retention filters: Control cost by sampling at the agent/SDK and index only the spans or traces that matter.

- OpenTelemetry support: Ingest OTLP data or run Datadog tracers; mix and match per service.

- Correlated troubleshooting: Pivot from a problematic span to related logs, metrics, RUM sessions, and synthetic tests.

- Guides for data-volume challenges: Sampling strategies and best practices for distributed systems.

Pros

- Deep microservices visualization and span search at scale

- Robust ingestion and retention controls to curb noisy traffic

- 850+ integrations for cloud-native tech stacks

- Strong workflows to connect traces with logs and metrics, as well as RUM and synthetics

Cons

- Complex pricing across hosts, indexed spans, and add-ons

- SaaS-only deployment

Datadog Pricing at Scale (45 TB/month example)

Datadog uses a modular model. APM is billed per APM host (commonly around $31–$40/host/month) plus usage for ingested and indexed spans; logs, RUM, and synthetics are separate line items.

For a mid-sized business ingesting around 45 TB (~45,000 GB) of data per month, the cost would come to around $27,475/month.

Tech Fit

Best for teams that want a unified SaaS platform with mature microservices maps, span search, and strong ecosystem integrations. Works well across Java, .NET, Go, Node.js, Python, Ruby, and in Kubernetes/serverless environments using either Datadog tracers or OpenTelemetry pipelines.

5. Jaeger

Overview

Jaeger is a CNCF-graduated, open-source distributed tracing system originally built at Uber and widely adopted for microservices. In late 2024, it released Jaeger v2, which re-architects core components on top of the OpenTelemetry Collector and tightens OTEL compatibility—making it easier to plug into modern, vendor-neutral pipelines while scaling out in Kubernetes and hybrid clouds.

Key Advantage

Vendor-neutral, OTEL-first tracing that you can control end-to-end. Jaeger excels when you want maximum flexibility: instrument with OpenTelemetry, route data through the Collector, and choose your own storage (OpenSearch/Elasticsearch or Cassandra) with retention tuned to your microservices’ needs. This gives teams fine-grained control over sampling, retention, and costs, without licensing fees.

Key Features

- OpenTelemetry support: Jaeger v2 is built on the OTEL Collector, which simplifies the instrumentation process for different programming languages and services.

- Multiple storage backends: Supports OpenSearch/Elasticsearch, Cassandra, Kafka as buffer, Badger/memory for dev and small setups.

- Service dependency graphs and trace UI: Visualize cross-service call paths, latency, and error hotspots in microservices.

- Adaptive sampling: Keep high-value traces (errors/slow paths) while reducing noise at scale.

- Kubernetes-friendly deployment: Proven patterns with the Jaeger Operator and OTEL Collector for cluster-wide tracing.

Pros

- No license fees; full control over data and retention

- OTEL-first architecture; easy to standardize instrumentation across services

- Flexible storage choices to balance performance and cost

- Scales horizontally; mature in Kubernetes environments

Cons

- You run and operate the stack (storage clusters, upgrades, backups)

- Occasional driver issues

Jaeger Pricing at Scale (45 TB/month example)

Jaeger is free and open source, which means no license cost. At 45 TB/month of traces, your expense is the infrastructure: cluster compute, storage, and operational overhead. Typical large-scale setups use OpenSearch/Elasticsearch with replication and hot/warm tiers; costs depend on retention goals, compression, and query demands.

Tech Fit

Best for OTEL-standardized microservices that prefer self-hosted control and composability. Works across all major languages via OpenTelemetry SDKs and auto-instrumentation and deploys cleanly to Kubernetes with the Jaeger Operator and OTEL Collector. Choose OpenSearch/Elasticsearch for powerful search or Cassandra for write-heavy, ID-based lookups, depending on workload.

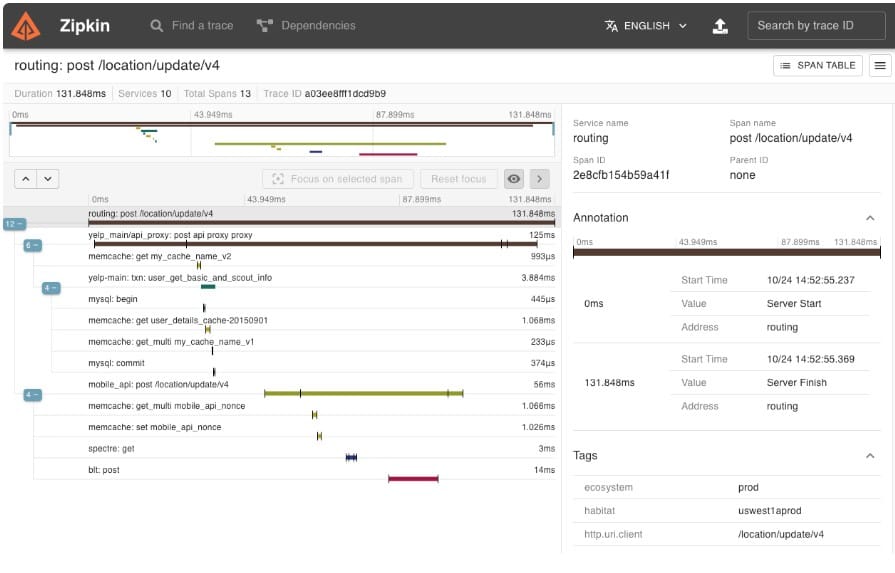

6. Zipkin

Overview

Zipkin is a lightweight, open-source distributed tracing system widely used in microservices, especially in Java/Spring ecosystems. It implements a simple model (traces, spans, annotations) and a clean API that many frameworks and proxies can emit to, making it easy to drop into existing architectures and start following requests across services.

Key Advantage

Straightforward, low-overhead tracing for teams that want quick visibility with minimal ceremony. Zipkin’s simplicity, broad emitter support (e.g., Spring/Micrometer, Brave), and easy deployment make it a practical choice for engineering teams who want to instrument microservices without adopting a heavy, full-suite platform.

Key Features

- Service and dependency graphs: Visualize how services call one another to spot hot paths and fan-out/fan-in patterns.

- Trace and span search: Filter by service, operation, latency, tags, and time windows to find problem requests fast.

- Multiple storage backends: Run dev/test in-memory, or persist production traces in Elasticsearch, Cassandra, or MySQL.

- Flexible ingestion formats: Accepts Zipkin/Brave emitters and Zipkin-compatible exports from gateways, sidecars, and meshes.

- Kubernetes-friendly deployment: Simple Docker/K8s manifests for shipping spans from pods/namespaces to a central collector.

Pros

- Easy to deploy and operate for small to mid-sized microservices estates

- Well supported in Java/Spring via Micrometer/Brave; community clients exist for many languages

- Zipkin API compatibility across proxies/meshes and libraries makes onboarding fast

- No license cost; you control retention and data footprint

Cons

- UI can be improved

- Limited reviews online to gauge the platform

Zipkin Pricing at Scale (45 TB/month example)

Zipkin itself is free; your cost is infrastructure. At 45 TB/month of traces, expect expenses in storage (e.g., Elasticsearch/Cassandra clusters with replication, hot/warm tiers), compute for ingestion/query, and ops time.

Tech Fit

A great fit for Java/Spring Boot microservices and teams standardizing on Micrometer/Brave, with workable paths for Go, Node.js, Python, and .NET via community emitters and Zipkin-compatible exporters. Works well in Kubernetes when you want a small, self-hosted tracer that integrates with gateways, sidecars, and service meshes emitting Zipkin-format spans.

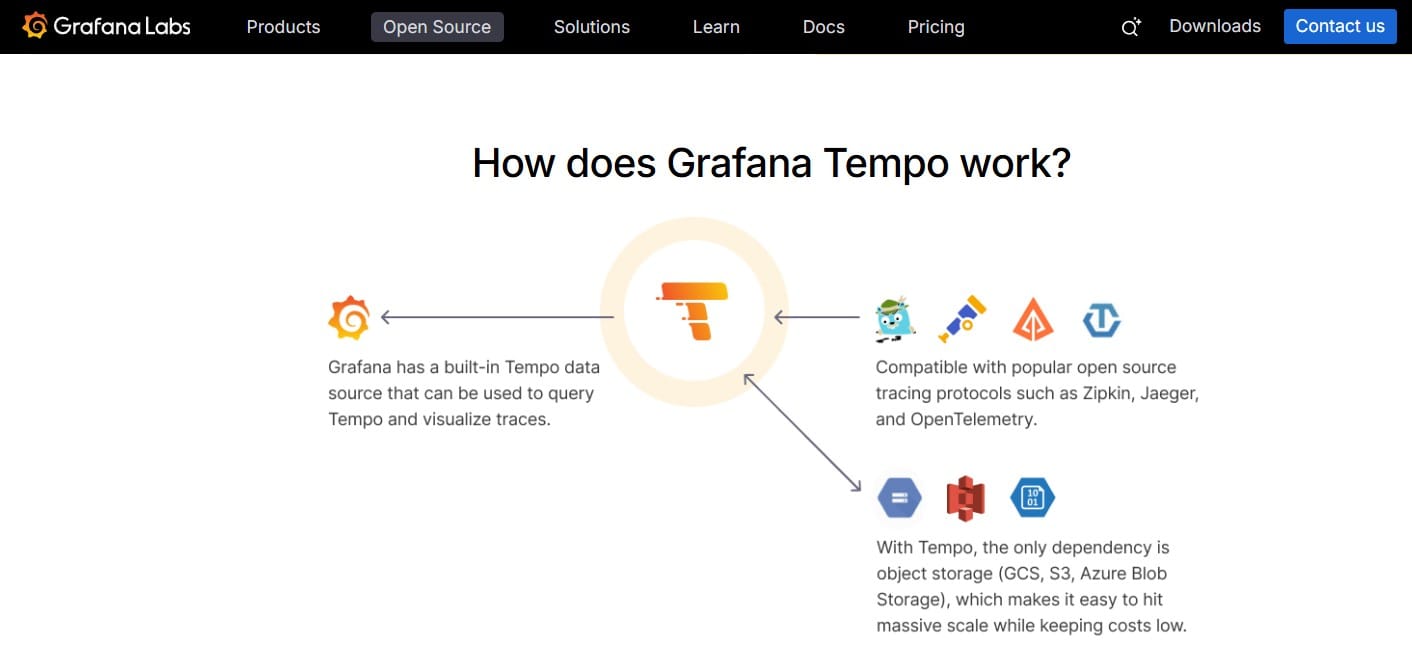

7. Grafana Tempo

Overview

Grafana Tempo is a high-scale, open-source tracing backend built for cloud-native microservices. It ingests spans from OpenTelemetry, Jaeger, or Zipkin, stores them cheaply in object storage (S3, GCS, Azure), and lets you explore traces in Grafana using TraceQL, a query language designed for distributed tracing. Tempo is also available fully managed as Grafana Cloud Traces if you don’t want to operate it yourself.

Key Advantage

Low-cost, scalable trace storage for Kubernetes-heavy microservices. Tempo avoids heavyweight indexing and relies on object storage, which keeps costs down as span volumes grow. When paired with Grafana, teams can drill into traces with TraceQL, link a span to its logs and metrics, and build workflows that accelerate debugging across multi-service environments.

Key Features

- OpenTelemetry native: Makes migration easier with OTLP-based ingestion as well as support for Jaeger and Zipkin formats.

- TraceQL for powerful queries: Filter on span attributes, timing, and relationships; use aggregates to surface bottlenecks.

- Object-storage architecture: Store traces in S3/GCS/Azure for massive scale at lower cost.

- Grafana integration: Explore traces in Grafana, pivot to related logs/metrics, and generate metrics from traces.

- Managed option: Grafana Cloud Traces provides a hosted Tempo with a free tier (50 GB traces, 14-day retention).

Pros

- Scales economically on object storage for high-volume microservices

- Strong OTEL, Jaeger, and Zipkin compatibility for gradual migrations

- TraceQL provides expressive, fast querying at scale

- First-class Grafana experience for linking traces with logs and metrics

Cons

- DIY deployments require SRE effort for upgrades, capacity, and retention policies

- Learning curve

Grafana Tempo Pricing at Scale (45 TB/month example)

With Grafana Cloud, at 45 TB/month, the bill comes to about $11,875/month.

Tech Fit

Tempo is a strong fit for Kubernetes and service-mesh environments that generate large span volumes and want OTel-first pipelines with economical storage. It’s compatible with languages such as Java, Go, Python, and .NET via OTel SDKs. It also pairs with Grafana to help you troubleshoot issues across traces, logs, and metrics.

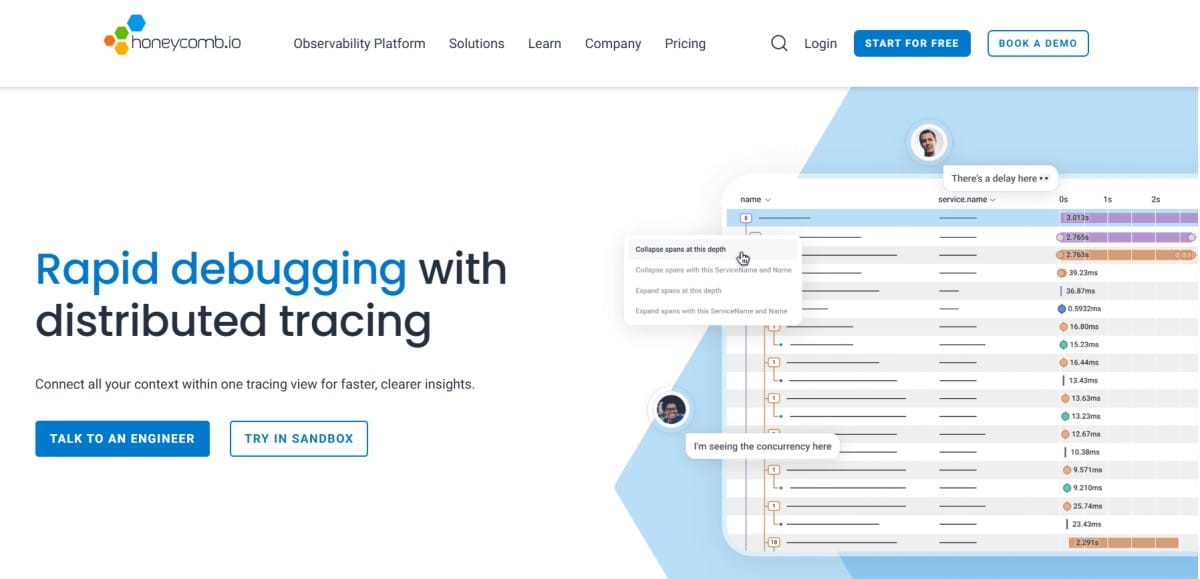

8. Honeycomb

Overview

Honeycomb is a developer-centric observability platform with powerful distributed tracing for microservices. It’s built to explore high-cardinality data quickly, correlate spans with rich attributes, and surface outliers via interactive visual analysis. Honeycomb supports OpenTelemetry pipelines and offers Refinery for tail-based sampling, plus a growing ecosystem around trace-based testing with Tracetest.

Key Advantage

High-signal debugging at microservices scale. Honeycomb’s combination of tail-based sampling (Refinery) and BubbleUp anomaly detection helps teams keep only the most valuable traces (errors, latency spikes, rare paths) and then pinpoint exactly which fields differ from normal traffic—ideal when thousands of spans per second make traditional triage noisy.

Key Features

- Distributed tracing UI and waterfalls: Follow requests across services, drill into spans/attributes, and jump to the slow or failing hop fast.

- BubbleUp anomaly detection: Select outliers on a heatmap to see which attributes explain the difference.

- Tail-based sampling: Refinery keeps “interesting” traces and drops noise to help you manage costs.

- OpenTelemetry ingest & pipelines: Use OTLP/Collector to standardize instrumentation across languages and services.

- Trace-based testing with Tracetest: Turn real traces into pre-prod checks to prevent regressions in complex microservice chains.

Pros

- Excellent at high-cardinality, exploratory debugging for complex microservices

- Tail-based sampling keeps costs predictable without losing critical signal

- Strong docs and workflows for OTel pipelines and trace analysis

- Ecosystem support for trace-based testing and modern DevEx practices

Cons

- Costly for smaller teams

- Learning curve

Honeycomb Pricing at Scale

Honeycomb prices by events, not by GB. The public pricing page shows Pro from $100/month and Enterprise starting with 10 billion events/year, with volume tiers. Translating 10 TB/month of trace data to events depends on your average span size; as a rough illustration, if your average event were ~1 KB, 10 TB ≈ 10 billion events per month, well above the base Enterprise yearly allowance and into negotiated tiers.
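The volume-to-events conversion above is easy to rerun for your own numbers. The sketch below uses decimal units (1 TB = 10^12 bytes) and treats the average event size as an input you would measure yourself; the ~1 KB figure is the illustration from the text, not a measured value.

```python
def events_per_month(tb_per_month, avg_event_bytes):
    """Estimate monthly event count from data volume (1 TB = 10**12 bytes)."""
    return tb_per_month * 10**12 // avg_event_bytes


ten_tb = events_per_month(10, 1_000)         # the 10 TB illustration
forty_five_tb = events_per_month(45, 1_000)  # the 45 TB profile used elsewhere

print(f"{ten_tb:,} events/month")
print(f"{forty_five_tb:,} events/month")
```

At ~1 KB per event, 45 TB/month works out to roughly 45 billion events per month, so the result is very sensitive to how wide your spans actually are.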

Tech Fit

Great for Kubernetes- and service-mesh-heavy microservices that need fast, exploratory debugging across millions of spans. Works smoothly with OpenTelemetry across major languages (Java, Go, Node.js, Python, .NET). Teams that value tail-sampling + interactive analytics often find Honeycomb a strong complement—or alternative—to traditional APMs.

How to Choose the Right Distributed Tracing Tool for Microservices

OpenTelemetry Depth and Vendor Neutrality

Microservices involve many languages and frameworks. A tracing tool must provide strong OpenTelemetry (OTel) support, including OTLP ingest and Collector pipelines, so teams can instrument once and switch backends without lock-in.

Context Propagation Across Services and Meshes

Requests often span HTTP calls, message queues, and service meshes like Istio or Linkerd. The right tool must reliably propagate context across these hops, ensuring traces stay stitched together even in async or retry-heavy workflows.
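Context propagation usually rides on the W3C Trace Context `traceparent` header, which has the form `version-traceid-parentid-flags`. The sketch below builds one and derives the header a service would send on its outbound call. It is a simplified illustration: production code would normally rely on an OpenTelemetry SDK, and this version skips some validation the specification requires (for example, rejecting all-zero IDs).

```python
import re
import secrets

# Version 00: 32 hex chars of trace ID, 16 of parent span ID, 2 of flags.
TRACEPARENT_RE = re.compile(r"^00-([0-9a-f]{32})-([0-9a-f]{16})-([0-9a-f]{2})$")


def new_traceparent(sampled=True):
    """Start a new trace at the edge of the system."""
    trace_id = secrets.token_hex(16)  # 16 random bytes -> 32 hex chars
    span_id = secrets.token_hex(8)    # 8 random bytes -> 16 hex chars
    flags = "01" if sampled else "00"
    return f"00-{trace_id}-{span_id}-{flags}"


def child_traceparent(incoming):
    """Continue an incoming trace: same trace ID, fresh span ID for this hop."""
    match = TRACEPARENT_RE.match(incoming or "")
    if match is None:
        return new_traceparent()  # missing or malformed header: start over
    trace_id, _parent_span_id, flags = match.groups()
    return f"00-{trace_id}-{secrets.token_hex(8)}-{flags}"


incoming_header = new_traceparent()
outgoing_header = child_traceparent(incoming_header)
print(incoming_header)
print(outgoing_header)
```

A trace breaks whenever a hop fails to forward this header, which is why retries, queues, and proxies are the usual places to check first.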

Tail-Based Sampling for High-Volume Traffic

Large microservices estates can generate millions of spans per second. Tools with tail-based or intelligent sampling keep error, latency, and rare traces while discarding routine noise, which is essential for controlling costs and keeping signal quality high.

Cost Model Aligned With Traffic Shape

Pricing surprises are common when every span, pod, or GiB of ingest adds to the bill. Teams should test how a tool’s pricing scales with bursty microservices traffic and high-cardinality workloads. Predictable models win in production.

Query Power for Complex Traces

With dozens of services in a single request, teams need expressive, fast queries. Features like attribute-based search, filters, and aggregates help engineers isolate “service X in region Y failed for tenant Z” scenarios quickly.
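In its simplest form, an attribute-based query is a filter over span attributes. The sketch below shows the "service X in region Y failed for tenant Z" case on in-memory data. The attribute names and values are hypothetical, and real tools run such filters against indexed or columnar storage rather than Python lists.

```python
def find_spans(spans, **attrs):
    """Return spans whose attributes match every key=value filter given."""
    return [s for s in spans if all(s.get(k) == v for k, v in attrs.items())]


# Hypothetical span attributes.
stored_spans = [
    {"service": "payment", "region": "eu-west", "tenant": "acme", "error": True},
    {"service": "payment", "region": "us-east", "tenant": "acme", "error": True},
    {"service": "payment", "region": "eu-west", "tenant": "globex", "error": False},
    {"service": "cart", "region": "eu-west", "tenant": "acme", "error": False},
]

failed_for_tenant = find_spans(
    stored_spans, service="payment", region="eu-west", tenant="acme", error=True
)
print(len(failed_for_tenant))
```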

Kubernetes and Service Mesh Awareness

Most microservices run in Kubernetes, often with sidecar proxies. A tool should auto-discover pods, containers, and services, map their dependencies, and integrate cleanly with service meshes to avoid broken traces.

Correlation With Logs, Metrics, and User Data

Tracing alone shows what failed, but not why. Strong platforms let teams pivot from a hot span to related logs, metrics, or RUM data, reducing mean time to resolution (MTTR) in distributed environments.

Retention, Governance, and Data Residency

Microservices often handle sensitive data. A tracing solution must allow on-prem or regional storage, configurable retention, and fine-grained sampling to stay compliant while managing long-term trace data effectively.

SaaS Convenience vs. DIY Control

Large microservices estates can overwhelm SRE teams if self-hosted. Some organizations prefer SaaS platforms for simplicity, while others need open-source backends for cost control and custom retention policies.

Developer-Centric Workflows

Microservices debugging happens daily. Tools with fast UIs, high-cardinality exploration, and trace-based testing make developers more productive and accelerate root cause analysis across complex service chains.

Conclusion

Choosing the right distributed tracing tool for microservices isn’t easy. Teams face challenges like exploding span volumes, broken traces across service meshes, limited sampling options, and unpredictable pricing that balloons as traffic grows. Debugging latency or errors in requests that touch dozens of services is nearly impossible without the right platform.

This is where CubeAPM makes a difference. It delivers end-to-end distributed tracing, AI-driven smart sampling, root cause analysis, and real-time service maps that clearly show dependencies across microservices. Features like high-granularity spans and cross-layer correlation help teams catch issues early, while OpenTelemetry-native ingest ensures consistent instrumentation across all services.

Book a free demo to explore CubeAPM.

Disclaimer: The information in this article reflects the latest details available at the time of publication and may change as technologies and products evolve.

FAQs

1. Is distributed tracing the same as APM?

No. Application Performance Monitoring (APM) is broader and covers metrics, logs, and uptime, while distributed tracing specifically follows a request across microservices. Modern APM platforms often include tracing, but tracing alone provides deeper request-level visibility that traditional APM may lack.

2. How does distributed tracing work with Kubernetes?

In Kubernetes, distributed tracing tools capture spans from containers, pods, and services. With service meshes like Istio or Linkerd, sidecar proxies can emit spans for each hop automatically, but applications still need to forward the incoming trace headers on their outbound calls so that traces stay intact even as pods scale up, down, or restart.

3. Can distributed tracing handle asynchronous workflows like queues or event-driven microservices?

Yes. Tracing tools that support W3C Trace Context and asynchronous propagation can connect spans across message queues (Kafka, RabbitMQ) and event-driven services. This is essential for modern microservices, where async calls are common.
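For asynchronous hops, the trace context travels in message headers instead of HTTP headers. The sketch below imitates that with an in-process `queue.Queue` standing in for Kafka or RabbitMQ. The message layout and function names are assumptions for illustration only.

```python
import queue
import secrets


def publish(q, payload, trace_id):
    """Producer: attach trace context to the message before enqueueing it."""
    span_id = secrets.token_hex(8)
    q.put({
        "headers": {"traceparent": f"00-{trace_id}-{span_id}-01"},
        "payload": payload,
    })
    return span_id


def consume(q):
    """Consumer: read the context back and start a child span in the same trace."""
    message = q.get()
    _version, trace_id, parent_span_id, _flags = message["headers"]["traceparent"].split("-")
    return {
        "trace_id": trace_id,          # same trace as the producer
        "parent_id": parent_span_id,   # links back to the publishing span
        "span_id": secrets.token_hex(8),
        "name": "process " + message["payload"],
    }


broker = queue.Queue()  # stand-in for a real message broker
trace_id = secrets.token_hex(16)
producer_span_id = publish(broker, "order-created", trace_id)
consumer_span = consume(broker)
print(consumer_span["trace_id"] == trace_id)
```

Because the consumer may run seconds or minutes later, the resulting trace can show a long gap between the producer and consumer spans; that gap is queue wait time, not processing time.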

4. Do I need to store 100% of traces in production?

Not necessarily. Most teams use tail-based sampling to retain only slow, error-prone, or high-value traces. This approach cuts storage costs while keeping the critical data needed for debugging microservices.

5. Can distributed tracing tools be used for compliance and security auditing?

Yes. Traces can provide detailed records of how requests move through services, which is valuable for auditing. However, tools must offer data residency controls, encryption, and retention policies to meet compliance requirements in industries like finance or healthcare.