Amazon DynamoDB is a fully managed NoSQL database powering high-performance workloads for thousands of enterprises. It can handle trillions of requests per day across globally distributed tables. But as usage grows, issues like throttling, latency, uneven partition usage, and cost spikes can easily appear without proper visibility.

CubeAPM addresses this with observability that goes beyond CloudWatch metrics, correlating database performance with application behavior, queries, and traces. With smart sampling, unlimited retention, and predictable pricing, it helps detect throttling, pinpoint latency sources, and reduce monitoring costs.

In this article, we’ll explore why DynamoDB monitoring is important, the key metrics to watch, and how CubeAPM handles it.

What Is DynamoDB Monitoring?

Amazon DynamoDB is a fully managed, serverless NoSQL database designed to deliver consistent single-digit millisecond performance at any scale. It automatically handles data replication, capacity scaling, and fault tolerance, making it ideal for high-throughput, low-latency workloads across industries like e-commerce, gaming, fintech, and IoT.

DynamoDB monitoring refers to the continuous tracking of performance metrics such as read/write capacity, latency, throttled requests, and storage utilization to ensure your tables and indexes operate efficiently. But modern monitoring goes far beyond what Amazon CloudWatch alone provides. It’s about correlating metrics with traces and logs from your application stack, understanding how each DynamoDB query impacts end-user latency, API throughput, and downstream services. When implemented properly, DynamoDB monitoring helps businesses:

- Detect latency spikes early, before they degrade user experience.

- Identify throttled requests or capacity misconfigurations in real time.

- Uncover hot partitions and skewed workloads affecting performance.

- Optimize cost by right-sizing provisioned capacity or switching to on-demand modes.

- Reduce MTTR (Mean Time to Resolution) by linking database metrics directly to traces and logs from your services.

By combining telemetry data from DynamoDB with distributed tracing through a tool like CubeAPM, teams gain full visibility, not just into what went wrong, but why and where it happened.

Example: Monitoring a Real-Time Order Processing System

Imagine an e-commerce company using DynamoDB to store millions of orders every day. During flash sales, write requests suddenly spike, and some get throttled due to capacity limits.

With CubeAPM’s unified dashboards, engineers can instantly see a correlation between the spike in DynamoDB WriteThrottleEvents and rising API response times. They can then trace the root cause — a misconfigured partition key — and scale capacity or rebalance writes before it impacts checkout performance.

Why DynamoDB Monitoring Is Important

Dedicated DynamoDB monitoring is critical for maintaining reliability, performance, and cost control.

Prevent Throttling Before It Becomes User Pain

DynamoDB enforces throughput limits at both the table and partition level. Even if total table capacity looks fine, a single overworked partition can trigger throttling and slow responses. Monitoring metrics like ConsumedRead/WriteCapacityUnits and ThrottledRequests helps teams detect stress before users are affected. With timely alerts, you can auto-scale capacity or redistribute writes long before errors surface.

Managing Hot Partitions and Skew

Adaptive capacity helps absorb uneven workloads, but it’s not magic. Bad key design, monotonically increasing sort keys, or traffic bursts to a single customer ID can still overload partitions. Monitoring partition activity through Contributor Insights or CubeAPM trace correlations reveals which keys or access patterns are generating hotspots. Catching and fixing these early prevents recurring throttling and improves query balance.
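As a rough illustration of spotting skew, the sketch below counts how often each partition key appears in a sample of requests and flags keys whose share of traffic exceeds a threshold. The `find_hot_keys` helper, the sample data, and the 20% threshold are assumptions made for illustration; in practice the key sample would come from Contributor Insights or from trace attributes.

```python
from collections import Counter


def find_hot_keys(partition_keys, share_threshold=0.20):
    """Return (key, share) pairs whose share of sampled requests exceeds the threshold."""
    counts = Counter(partition_keys)
    total = sum(counts.values())
    if total == 0:
        return []
    return [
        (key, count / total)
        for key, count in counts.most_common()
        if count / total > share_threshold
    ]


# Hypothetical sample: one customer ID dominates the traffic.
sample = ["cust-42"] * 70 + ["cust-7"] * 20 + ["cust-1"] * 5 + ["cust-9"] * 5
hot = find_hot_keys(sample)
```

Here only `cust-42` is flagged, since it accounts for 70% of the sampled requests while every other key stays at or below the threshold.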

Maintaining Low Latency at p95/p99

While DynamoDB targets single-digit millisecond latency, tail performance (p95, p99) often degrades first during burst traffic or retries. Tracking latency distributions for GetItem, PutItem, and Query operations reveals these subtle regressions. When correlated with application traces in CubeAPM, engineers can isolate whether the issue stems from throttling, network delays, or inefficient access patterns, long before users notice slow requests.
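To see why tail percentiles matter more than averages, the sketch below computes the mean, p95, and p99 of a set of latency samples using the nearest-rank method. The sample values and the `percentile` helper are hypothetical, and monitoring backends may use a different percentile definition.

```python
import math


def percentile(samples, pct):
    """Nearest-rank percentile: the smallest sample with at least pct% of samples at or below it."""
    if not samples:
        raise ValueError("samples must not be empty")
    ordered = sorted(samples)
    rank = math.ceil(pct * len(ordered) / 100)
    return ordered[max(rank, 1) - 1]


# Hypothetical latencies in ms: 95 fast requests and 5 slow ones.
latencies_ms = [4.0] * 95 + [80.0] * 5
mean_ms = sum(latencies_ms) / len(latencies_ms)
p95_ms = percentile(latencies_ms, 95)
p99_ms = percentile(latencies_ms, 99)
```

With this sample the mean is 7.8 ms and p95 is still 4 ms, while p99 is already 80 ms, which is the kind of regression that only a tail metric exposes.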

Cost Efficiency and Capacity Balancing

Provisioning too high wastes money; too low triggers throttling. Monitoring utilization trends enables teams to right-size capacity, tune auto scaling, and decide when to switch between provisioned and on-demand modes. For read-heavy workloads, caching with DAX (DynamoDB Accelerator) can cut latency to sub-millisecond levels while reducing read costs dramatically.

Ensuring Global Table Replication Health

Global Tables replicate data asynchronously across AWS regions, meaning lag can appear under heavy writes or cross-region latency. Monitoring ReplicationLatency and PendingReplicationCount ensures writes remain consistent and conflicts don’t build up. In high-traffic setups, replication lag can stretch to multiple seconds, so real-time monitoring is essential to maintain data integrity.

Avoiding Downstream Cascades with Trace Correlation

A DynamoDB issue rarely stays isolated. When throttling or high latency occurs, dependent services like Lambda or API Gateway often retry requests, amplifying the load. By correlating DynamoDB metrics with distributed traces in CubeAPM, teams can visualize how database pressure impacts entire transaction paths — preventing cascading slowdowns and costly retry storms across microservices.
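One common way to keep retries from amplifying load is capped exponential backoff with jitter. The AWS SDKs ship their own retry logic, so the sketch below is only an illustration of the idea for custom callers; the function name, base delay, and cap are assumptions, not values taken from DynamoDB or CubeAPM.

```python
import random


def backoff_delays(max_retries=5, base_s=0.05, cap_s=2.0, rng=random.random):
    """Yield one sleep duration per retry: capped exponential backoff with full jitter."""
    for attempt in range(max_retries):
        ceiling = min(cap_s, base_s * (2 ** attempt))
        # Full jitter: pick a random delay between 0 and the current ceiling
        # so that many clients do not retry in lockstep.
        yield rng() * ceiling


# Upper bounds of each retry delay (rng fixed at 1.0 to show the ceilings).
ceilings = list(backoff_delays(rng=lambda: 1.0))
```

Spreading retries randomly under a growing ceiling reduces the chance that throttled clients all return at the same moment.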

Monitoring Streams, Backups, and CDC Pipelines

If you use DynamoDB Streams for change data capture or event pipelines, lag in stream consumers can silently delay downstream analytics or order processing. Monitoring consumer lag, error counts, and throughput ensures your real-time workflows stay reliable. Similarly, keeping an eye on backup and Point-in-Time Recovery (PITR) jobs helps avoid conflicts with peak usage windows.

Key Metrics to Watch in DynamoDB Monitoring

Effective DynamoDB monitoring means keeping an eye on the metrics that best represent its performance, capacity usage, latency, and reliability. AWS exposes dozens of CloudWatch metrics for DynamoDB, but only a subset truly matters for ensuring stability and cost efficiency. Below are the most critical DynamoDB monitoring metrics, grouped by category, with explanations and practical thresholds that DevOps teams can use to set alerts and optimize workloads.

Performance Metrics

These metrics capture the real-time responsiveness of DynamoDB operations, helping you identify latency spikes and bottlenecks before they impact users.

- ReadLatency: The average time taken for successful GetItem and Query requests. An increase often indicates throttling, index inefficiency, or network congestion. Sustained p95 latency above 20–30 ms is usually a warning sign for high-traffic apps.

- WriteLatency: Measures the average duration of PutItem or UpdateItem operations. Consistently high write latency may point to write contention, excessive conditional updates, or hot partitions. Watch for values exceeding 25–40 ms at peak load.

- SuccessfulRequestLatency: Tracks end-to-end latency across all successful operations, including reads, writes, and queries. This metric provides an overall view of DynamoDB responsiveness. Alert if averages rise 20–30% above baseline over multiple intervals.

- SystemErrors: Counts requests that failed due to internal DynamoDB errors (5xx responses). Even though rare, spikes often indicate transient AWS issues or throttling side effects. Set alerts if error rates exceed 1% of total requests for more than 5 minutes.

- ConditionalCheckFailedRequests: Indicates the number of write requests that failed because conditions were not met (e.g., optimistic locking). Occasional failures are expected; frequent spikes suggest flawed concurrency logic or data model issues. Trigger alerts if this exceeds 2–3% of total writes.
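The "rise above baseline over multiple intervals" style of threshold can be sketched as follows. The helper name, the 25% rise, and the three-interval window are illustrative assumptions, not CubeAPM or CloudWatch defaults.

```python
def sustained_regression(values, baseline, rise=0.25, intervals=3):
    """True if each of the last `intervals` values exceeds the baseline by more than `rise`."""
    if len(values) < intervals:
        return False
    limit = baseline * (1 + rise)
    return all(v > limit for v in values[-intervals:])


# Hypothetical average latencies (ms) per interval against an 8 ms baseline.
steady_climb = sustained_regression([8.0, 9.0, 11.0, 12.0, 13.0], baseline=8.0)
single_blip = sustained_regression([8.0, 11.0, 9.0, 12.0], baseline=8.0)
```

Requiring several consecutive intervals above the limit keeps a single noisy data point from paging anyone.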

Capacity Metrics

Capacity metrics show how your tables are consuming provisioned or on-demand throughput. Monitoring them prevents both throttling and over-provisioning.

- ConsumedReadCapacityUnits (RCUs): Reflects the number of read units actually used. A steady upward trend means increasing demand or inefficient queries. If consumption consistently exceeds 80–90% of provisioned capacity, auto-scaling should be adjusted.

- ConsumedWriteCapacityUnits (WCUs): Shows how much write throughput is used. A spike may suggest bulk inserts, heavy updates, or uneven load across partitions. Alerts should trigger when WCUs approach 90% of the table’s write limit.

- ProvisionedReadCapacityUnits / ProvisionedWriteCapacityUnits: Indicates the total configured throughput capacity. Track this alongside consumed units to understand utilization efficiency and scaling needs. Under 30% utilization may imply over-provisioning and wasted cost.

- ThrottledRequests: Counts operations rejected because throughput limits were exceeded. Persistent throttling signals the need to rebalance partitions or increase capacity. Any value above 0.1% of total requests is worth investigating immediately.

- ReturnedItemCount: Shows how many items were fetched by read operations. Unexpected increases can indicate inefficient queries scanning too many items. Alert if average item count per query rises more than 2× baseline over time.
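The utilization thresholds above can be turned into a small calculation. CloudWatch reports consumed capacity as a sum over the period, so it has to be divided by the period length before it is compared with the per-second provisioned value. The function names and the example numbers below are assumptions for illustration.

```python
def utilization(consumed_sum, period_seconds, provisioned_per_second):
    """Fraction of provisioned throughput used, given a consumed-units Sum over one period."""
    if period_seconds <= 0 or provisioned_per_second <= 0:
        raise ValueError("period and provisioned capacity must be positive")
    return (consumed_sum / period_seconds) / provisioned_per_second


def classify(util, high=0.80, low=0.30):
    """Label utilization using the 80% and 30% guide values from the list above."""
    if util >= high:
        return "near-limit"
    if util < low:
        return "over-provisioned"
    return "ok"


# Hypothetical table with 500 provisioned RCUs and one-minute data points.
busy = utilization(27000, 60, 500)
quiet = utilization(6000, 60, 500)
labels = (classify(busy), classify(quiet))
```

In this example the busy minute works out to 90% utilization and the quiet minute to 20%.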

Storage & Table Health Metrics

These metrics reflect data volume, index growth, and underlying table utilization, all of which affect both cost and performance.

- TableSizeBytes: Indicates total data size for the table and all indexes. Growing rapidly? It could mean missing TTL cleanup or overly large attributes. Watch for sudden jumps over 20% within 24 hours.

- ItemCount: The total number of items in the table. Monitoring helps track ingestion trends or data pipeline errors. Alert when count unexpectedly diverges from expected growth patterns by >10%.

- IndexSizeBytes: Reflects the total storage used by global and local secondary indexes (GSIs and LSIs). Large or underused indexes add cost with minimal value. Review when index size grows beyond 30–40% of the base table size.

- ConsumedReadCapacityUnits (Indexes): Monitors read consumption specifically by indexes. Excessive index reads might indicate inefficient query patterns. Watch if index reads consume >40% of total RCUs.

Replication & Global Table Metrics

For global or multi-region deployments, replication lag and pending operations directly affect data consistency and read freshness.

- ReplicationLatency: Measures the time it takes for updates to propagate between AWS regions. In healthy systems, this should stay under 1–2 seconds. Sustained higher values may cause stale reads or version conflicts.

- PendingReplicationCount: Counts items waiting to be replicated to other regions. A rising trend can suggest replication backlogs or network delays. Set alerts when counts persistently exceed 100 pending items per region.

- ConflictRecords: Tracks the number of write conflicts during multi-region replication. While rare, increasing conflicts imply concurrent updates or replication drift. Monitor if conflict count exceeds 10 per hour.

Stream & Change Data Capture Metrics

These metrics matter if you rely on DynamoDB Streams for event-driven pipelines or CDC workflows.

- StreamRecordsProcessed: Number of stream records consumed successfully. Falling rates may indicate consumer lag or processing issues. Alert when processed records drop >30% below ingestion rate.

- IteratorAgeMilliseconds: Represents how far behind your stream consumer is from the head of the stream. Consistent increases mean delayed consumption. Keep this below 60,000 ms (1 minute) for near-real-time processing.

- FailedGetRecords / FailedPutRecords: Tracks failed reads or writes to Kinesis or downstream systems integrated with Streams. Repeated failures risk data loss — trigger alerts if rates exceed 0.5% of total records.

Application & Request-Level Metrics

Application metrics correlated with DynamoDB help visualize how database issues translate to user-facing slowdowns or API bottlenecks.

- RequestCount: Total API calls to DynamoDB, including reads, writes, and scans. Monitoring traffic trends helps forecast capacity and plan scaling. Sudden 2–3× jumps may indicate runaway processes or load-testing events.

- UserErrors: Represents client-side issues like invalid parameters or missing keys. Spikes often reflect application logic bugs or malformed requests. Keep this below 1% of total requests.

- ErrorRate (5xx / 4xx): Combined rate of system and user errors. A growing 5xx count often correlates with throttling or AWS service issues. Alert when total error rate exceeds 2% for more than 5 minutes.

- AverageRequestSizeBytes: Tracks payload size per operation. Larger items increase read/write latency and cost. Investigate when the average size trends toward DynamoDB’s 400 KB per-item limit.

DynamoDB Monitoring Fundamentals (and Why CloudWatch Alone Isn’t Enough)

How DynamoDB Integrates with CloudWatch

Amazon DynamoDB automatically publishes key operational metrics to Amazon CloudWatch, including throughput consumption, throttled requests, read/write latency, and storage usage. These metrics are collected at one-minute intervals and made available through prebuilt dashboards or custom queries.

This native integration offers a solid baseline for performance visibility — letting teams monitor trends in read/write activity, track latency, and set alerts for threshold breaches. For smaller applications or predictable workloads, CloudWatch is a dependable, built-in solution for DynamoDB monitoring.

Limitations of CloudWatch for Modern Workloads

As workloads scale and traffic patterns become more dynamic, CloudWatch’s default capabilities often fall short. Its 1-minute granularity and table-level aggregation make it difficult to detect short-lived anomalies or identify which partitions or tenants are causing bottlenecks.

Moreover, with metric data retained for only 15 months and without native support for trace correlation, teams find it hard to analyze root causes or link metrics to real application behavior.

Why CloudWatch Alone Isn’t Enough

While CloudWatch is useful for high-level visibility, it wasn’t designed for deep, distributed observability. Here’s why relying on it alone limits your ability for DynamoDB monitoring at scale:

- Limited Granularity: Metrics are collected every 60 seconds, which means short-lived throttling or latency spikes (lasting just a few seconds) can easily be averaged out and missed entirely.

- Aggregated Metrics: Data is shown per table rather than per partition or request, hiding the root cause of uneven access patterns or hot keys that trigger throttling.

- No Trace Correlation: CloudWatch provides numerical metrics but lacks context — it can’t link latency or throttling back to the specific API call, user session, or service responsible.

- Retention & Cost Constraints: Historical data retention caps at 15 months, and storing custom metrics for longer periods increases costs, making trend analysis expensive for large-scale teams.

- Fragmented Visibility: CloudWatch dashboards are service-specific. Teams must switch between multiple AWS consoles (Lambda, API Gateway, EC2) to piece together a complete picture, slowing down debugging during incidents.

- Limited Real-Time Insight: Metric aggregation delays and sampling can make CloudWatch feel “laggy” for real-time troubleshooting — a major drawback when diagnosing performance issues during peak traffic events.

Together, these limitations make CloudWatch better suited for basic monitoring, not deep analysis or performance optimization. To achieve full-stack visibility, teams often pair it with external observability platforms that can unify metrics, traces, and logs into a single, high-resolution view.
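A short worked example shows how the granularity limitation plays out when only a one-minute average is charted. The per-second values below are hypothetical.

```python
# Hypothetical per-second latencies (ms) for one minute:
# 55 seconds at 5 ms and a 5-second spike at 250 ms.
per_second_ms = [5.0] * 55 + [250.0] * 5

minute_avg_ms = sum(per_second_ms) / len(per_second_ms)
peak_ms = max(per_second_ms)
```

The one-minute average comes out at roughly 25 ms even though requests during the spike took 250 ms, so an alert keyed to the average alone could miss a tenfold difference.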

Introducing CubeAPM for DynamoDB Monitoring

Modern DynamoDB workloads demand more than metric charts; they need end-to-end visibility across applications, APIs, and infrastructure. That’s where CubeAPM steps in, delivering unified observability that goes far beyond CloudWatch’s one-minute snapshots. Built on OpenTelemetry, CubeAPM connects every DynamoDB metric, trace, and log into one cohesive picture, helping teams monitor, troubleshoot, and optimize with unmatched precision.

Unified DynamoDB Monitoring

CubeAPM provides a single source of truth for DynamoDB performance by combining metrics, logs, and distributed traces in real time. This means when a latency spike occurs, you can instantly trace it back to the exact API request, service, or tenant that caused it — all within seconds.

Whether you’re running DynamoDB behind Lambda, API Gateway, or containerized services, CubeAPM automatically captures and correlates telemetry across the full stack, removing the guesswork from troubleshooting.

High-Resolution Metrics and Smart Sampling

Unlike CloudWatch’s 60-second averages, CubeAPM ingests second-level telemetry with high precision. Its smart sampling engine uses contextual data, such as error type, latency, and payload size, to retain the most valuable traces while keeping costs predictable.

This approach ensures teams don’t lose critical data points during traffic spikes while still saving up to 60–70% in monitoring costs compared to legacy APMs like Datadog or New Relic.

Advanced Dashboards and Drill-Down Analytics

CubeAPM comes with prebuilt DynamoDB dashboards that visualize key metrics such as read/write capacity, throttled requests, and tail latency (p95/p99). Each panel links directly to trace-level data, enabling engineers to move from a high-level view to individual DynamoDB queries in one click.

You can also create custom dashboards by filtering by region, table, or microservice, ideal for multi-tenant SaaS or large-scale e-commerce platforms managing hundreds of DynamoDB tables.

Correlation Across Services and Workloads

Most DynamoDB slowdowns don’t happen in isolation; they often stem from upstream traffic surges, slow Lambdas, or heavy batch writes. CubeAPM automatically correlates DynamoDB metrics with connected services like AWS Lambda, API Gateway, ECS, and EC2, giving you full context for every latency spike or error.

This cross-service correlation dramatically reduces MTTR (Mean Time to Resolution) by letting teams see how database bottlenecks ripple across distributed architectures.

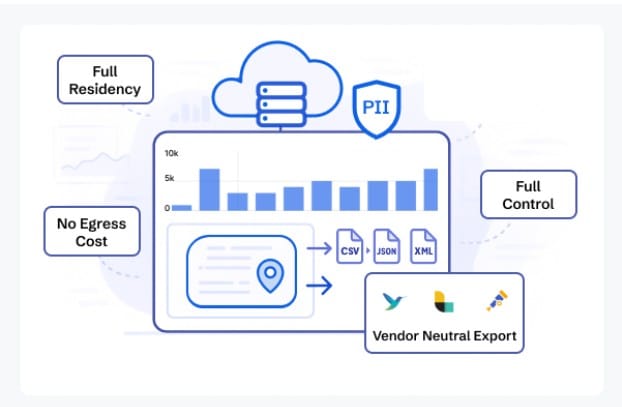

Data Control, Compliance, and Cost Transparency

CubeAPM offers flexible deployment — you can host it on-premises or in your own cloud, ensuring compliance with data localization and privacy regulations. All telemetry data stays under your control, with unlimited retention at $0.15 per GB of ingestion, eliminating hidden user or host-based charges.

This pricing transparency makes CubeAPM particularly attractive for organizations ingesting terabytes of DynamoDB telemetry each month — you always know what you’ll pay, no matter how much data you monitor.

Designed for Cloud-Native Scale

Whether you manage a few tables or thousands, CubeAPM scales effortlessly alongside DynamoDB’s elasticity. Its distributed architecture handles high cardinality data, frequent writes, and global traffic without sampling out critical traces.

For DevOps, SRE, and platform teams, this means complete confidence that no performance anomaly or cost surge goes unnoticed, even in high-throughput environments handling millions of requests per second.

How to Perform DynamoDB Monitoring with CubeAPM (Step-by-Step)

Let’s understand how CubeAPM performs DynamoDB monitoring with the following steps:

Step 1: Install CubeAPM on your platform

Install CubeAPM where you want to run your observability stack. You can use the single-binary install for Linux/macOS, Docker Compose for a quick local cluster, or Helm on Kubernetes. Keep the service reachable from your apps and collectors. See Install CubeAPM (binary), Docker, or Kubernetes (Helm) for exact commands.

Step 2: Apply essential configuration

Set required parameters so CubeAPM can accept and secure telemetry. At minimum, configure token and auth.key.session, and set base-url. You can set values via CLI flags, a config file, or environment variables (prefix CUBE_, and replace "." and "-" with "_"). Order of precedence is: CLI > env > file > defaults. (docs.cubeapm.com)
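The naming rule and precedence order can be modeled in a few lines. This is only a sketch of the behavior described above, not CubeAPM's implementation: the `env_name` and `resolve` helpers are hypothetical, the placeholder values are made up, and upper-casing the variable name is an assumption.

```python
from collections import ChainMap


def env_name(key):
    """Map a config key to an environment variable name (upper-casing is an assumption)."""
    return "CUBE_" + key.replace(".", "_").replace("-", "_").upper()


def resolve(keys, cli, environ, file, defaults):
    """Merge config sources so that CLI > env > file > defaults."""
    env = {k: environ[env_name(k)] for k in keys if env_name(k) in environ}
    # ChainMap looks keys up in the first mapping that contains them.
    return dict(ChainMap(cli, env, file, defaults))


keys = ["token", "base-url", "auth.key.session"]
settings = resolve(
    keys,
    cli={},
    environ={"CUBE_TOKEN": "from-env"},
    file={"token": "from-file", "base-url": "from-file"},
    defaults={"base-url": "from-defaults"},
)
```

Here `token` resolves to the environment value and `base-url` to the file value, because each key is taken from the highest-priority source that defines it.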

Step 3: Stream DynamoDB metrics into CubeAPM via CloudWatch

DynamoDB emits its operational metrics to Amazon CloudWatch. To ingest them, configure AWS CloudWatch Metric Streams to Amazon Data Firehose and point Firehose to CubeAPM (HTTP endpoint) as documented. Metric Streams keep you close to real-time and avoid manual polling; use this method for AWS-native services like DynamoDB.
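As a hedged sketch of the Metric Streams half of this step, the helper below builds the arguments for CloudWatch's PutMetricStream API, filtered to the AWS/DynamoDB namespace. The stream name and ARNs are placeholders, and the `json` output format is an assumption; check the CubeAPM documentation for the format its endpoint expects. The Firehose delivery stream and its HTTP endpoint destination are assumed to exist already.

```python
def metric_stream_params(name, firehose_arn, role_arn, output_format="json"):
    """Build keyword arguments for PutMetricStream, limited to DynamoDB metrics."""
    return {
        "Name": name,
        "FirehoseArn": firehose_arn,
        "RoleArn": role_arn,
        "OutputFormat": output_format,  # assumption: confirm the required format
        "IncludeFilters": [{"Namespace": "AWS/DynamoDB"}],
    }


params = metric_stream_params(
    name="dynamodb-to-cubeapm",  # hypothetical stream name
    firehose_arn="arn:aws:firehose:us-east-1:123456789012:deliverystream/example",
    role_arn="arn:aws:iam::123456789012:role/example-metric-stream-role",
)

# With boto3 installed and credentials configured, the call would look like:
#   boto3.client("cloudwatch").put_metric_stream(**params)
```

Filtering by namespace keeps the stream from forwarding metrics for every AWS service in the account.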

Step 4: (Kubernetes) Deploy the OpenTelemetry Collector for infra and custom scraping

If you run apps or collectors on Kubernetes, deploy the OpenTelemetry Collector with the official Helm chart and forward data to CubeAPM. This gives you node/pod metrics (for context) and also provides a path to scrape Prometheus-style exporters if you add them later.

Step 5: (VM/Bare metal) Install the OpenTelemetry Collector in one command

On VMs, use the recommended one-line installer to set up the OpenTelemetry Collector Contrib with a working config. This is handy for EC2 or on-prem nodes that need to forward metrics/logs to CubeAPM alongside your DynamoDB telemetry.

Step 6: Instrument your application with OpenTelemetry (DynamoDB client visibility)

Add OpenTelemetry to your services so traces/metrics/logs correlate with DynamoDB activity. Use the Instrumentation guide to pick your agent/SDK (Java, Node.js, Python, Go, etc.) and export via OTLP to CubeAPM. Include useful attributes in spans/metrics like db.system=dynamodb, aws.region, dynamodb.table_name, and operation so you can filter by table and operation in dashboards.
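The attribute set mentioned above can be kept consistent across services with a small helper like the one below. The helper name and example values are hypothetical, and the attribute keys are copied from this step rather than from a formal naming convention.

```python
def dynamodb_span_attributes(table_name, region, operation):
    """Return the span attributes suggested in this step for a DynamoDB call."""
    return {
        "db.system": "dynamodb",
        "aws.region": region,
        "dynamodb.table_name": table_name,
        "operation": operation,
    }


attrs = dynamodb_span_attributes("orders", "us-east-1", "PutItem")

# With the OpenTelemetry Python API installed, the dict can be attached to a span:
#   with tracer.start_as_current_span("dynamodb.PutItem", attributes=attrs):
#       ...  # perform the DynamoDB call here
```

Many OpenTelemetry auto-instrumentation agents add similar attributes on their own, so a helper like this is mainly useful for manually created spans.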

Step 7: (Optional but recommended) Centralize logs

Send application and platform logs to CubeAPM using OTel logs, Vector, Fluent/Fluent Bit, or Loki routes. Having request logs next to DynamoDB metrics and traces speeds up RCA (e.g., correlating ConditionalCheckFailedException bursts with throttled writes). See the Logs doc for supported agents and formats.

Step 8: Build DynamoDB-focused dashboards

Create dashboards that track DynamoDB’s critical metrics you’re streaming from CloudWatch (e.g., ConsumedReadCapacityUnits, ConsumedWriteCapacityUnits, ThrottledRequests, SuccessfulRequestLatency, storage) and correlate them with your application traces. Use table/region filters and saved labels to separate prod vs staging or tenant-specific tables. (Start from CubeAPM’s dashboarding flow after install/config.)

Step 9: Wire alerting and notifications

Define alert rules for DynamoDB hotspots (e.g., throttling > baseline, p95 write latency > SLO, replication lag trend up). Connect alert channels — email and webhooks are supported out of the box — so the right responders get paged. This lets you trigger automation or ticketing as needed.

Step 10: Iterate on thresholds, retention, and labels

Tune thresholds once you have a week or two of traffic. Adjust retention windows for metrics/logs/traces in config, and standardize labels/tags (service, env, region, table) so dashboards and alerts remain clear at scale. Revisit config using the Configure CubeAPM guide as your footprint grows.

Verification Checklist & Example Alert Rules for DynamoDB Monitoring with CubeAPM

Before going live, ensure your DynamoDB monitoring setup is fully operational and your alerting strategy is tuned to catch real-world performance issues in time. This section helps validate configuration and demonstrates how to implement practical alert rules in CubeAPM.

Verification Checklist for DynamoDB Monitoring

- CloudWatch integration: Verify that key DynamoDB metrics like ThrottledRequests, ConsumedReadCapacityUnits, and WriteLatency are visible in CubeAPM dashboards.

- OpenTelemetry traces: Check that application spans include DynamoDB attributes (db.system, table_name, aws.region, operation) for precise filtering.

- Latency metrics: Ensure p95/p99 latency panels for read and write operations reflect expected SLOs during normal load.

- Throttling alerts: Validate that alerts for spikes in throttled reads or writes are configured and that they fire during stress tests.

- Replication metrics: For global tables, confirm ReplicationLatency and PendingReplicationCount update correctly across regions.

- Log correlation: Confirm DynamoDB-related logs align with traces in CubeAPM’s timeline for rapid root-cause analysis.

Example Alert Rules for DynamoDB Monitoring

1. Throttled Write Requests Spike

This rule detects when the percentage of throttled write requests exceeds safe limits, signaling capacity pressure or hot partitions.

    alert: DynamoDB_ThrottledWrites_Spike
    expr: (sum by (table) (rate(aws_dynamodb_throttled_requests_write[5m])) / sum by (table) (rate(aws_dynamodb_request_count_write[5m]))) > 0.001
    for: 5m
    labels:
      severity: high
    annotations:
      summary: "Throttled writes >0.1% for 5m on {{ $labels.table }}"
      impact: "User write operations may be delayed or dropped due to table-level capacity saturation."

2. High Write Latency (p99 > 40 ms)

This alert triggers when p99 latency crosses 40 ms for more than 10 minutes, helping detect emerging write bottlenecks before they affect SLAs.

    alert: DynamoDB_WriteLatency_High
    expr: histogram_quantile(0.99, sum by (le,table)(rate(aws_dynamodb_write_latency_bucket[5m]))) > 0.040
    for: 10m
    labels:
      severity: high
    annotations:
      summary: "p99 write latency exceeded 40 ms for {{ $labels.table }}"
      impact: "End-user performance degradation detected; check throttling or hot partitions."

3. Global Table Replication Lag Over 2 Seconds

Use this to track replication delays between global table regions, preventing stale reads or inconsistent data replication.

    alert: DynamoDB_ReplicationLag_High
    expr: avg by (table) (aws_dynamodb_replication_latency_seconds) > 2
    for: 10m
    labels:
      severity: medium
    annotations:
      summary: "Replication lag > 2 s on {{ $labels.table }}"
      impact: "Cross-region reads may be stale; review network health and write distribution."

These alert rules provide early detection of DynamoDB’s most common production issues (throttling, latency regressions, and replication lag) so you can act before they impact real users.
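To make the first rule's condition concrete, the sketch below evaluates a throttled-write ratio against the 0.1% threshold and only fires when the condition has held for five consecutive one-minute samples. It is a simplified model of a threshold-plus-duration rule, not CubeAPM's alert engine, and the sample counts are hypothetical.

```python
def throttle_ratio(throttled, total):
    """Share of requests that were throttled; 0.0 when there was no traffic."""
    return throttled / total if total else 0.0


def should_fire(samples, threshold=0.001, for_minutes=5):
    """samples: (throttled, total) per minute, oldest first. Fire only if the
    ratio exceeded the threshold in each of the last `for_minutes` samples."""
    recent = samples[-for_minutes:]
    if len(recent) < for_minutes:
        return False
    return all(throttle_ratio(t, n) > threshold for t, n in recent)


# Hypothetical minutes: sustained throttling vs. a spike that clears quickly.
sustained = should_fire([(30, 10000)] * 5)
brief = should_fire([(0, 10000)] * 3 + [(30, 10000)] * 2)
```

The sustained case fires because 0.3% of writes were throttled in every one of the last five minutes, while the brief spike does not.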

Conclusion

Monitoring DynamoDB databases is essential for ensuring consistent performance, preventing throttling, and maintaining real-time data availability across global workloads. As applications scale, even minor latency or capacity issues can cascade into costly downtime and degraded user experience.

CubeAPM makes DynamoDB monitoring effortless by unifying metrics, traces, and logs under one observability layer. With OpenTelemetry-native integration, Engineering and SRE teams gain second-level visibility, customizable dashboards, and automated alerts that pinpoint bottlenecks instantly. They can move from reactive firefighting to proactive optimization, maximizing DynamoDB reliability, reducing costs, and maintaining query efficiency.

Start your CubeAPM free trial today and monitor DynamoDB the modern way.

Disclaimer: The information in this article reflects the latest details available at the time of publication and may change as technologies and products evolve.

FAQs

1. What are the key metrics to monitor in DynamoDB?

The most critical metrics include ReadLatency, WriteLatency, ThrottledRequests, ConsumedReadCapacityUnits, and ReplicationLatency. These reflect throughput efficiency, performance stability, and cross-region consistency.

2. How often should DynamoDB metrics be reviewed?

For production workloads, it’s best to monitor metrics in real time or at least every few seconds using a tool like CubeAPM that supports second-level resolution. Weekly trend reviews help fine-tune auto-scaling and cost efficiency.

3. Can DynamoDB monitoring detect partition hot spots?

Yes. By analyzing ThrottledRequests and ConsumedWriteCapacityUnits per partition or key pattern, monitoring tools can reveal hot partitions early—before they cause throttling or latency spikes.

4. How does monitoring help with DynamoDB cost optimization?

Monitoring identifies over-provisioned tables, inefficient queries, and excessive scans. These insights enable teams to adjust capacity units or caching, reducing unnecessary spending without impacting performance.

5. Is DynamoDB monitoring necessary for on-demand mode?

Absolutely. Even in on-demand mode, partitions can become overloaded, replication can lag, and tail latency can rise. Continuous monitoring ensures workloads remain stable and predictable under variable demand.