Contemporary observability platforms handle large amounts of telemetry data, a significant portion of which includes sensitive information such as personally identifiable information (PII). The presence of sensitive data in telemetry pipelines creates a significant security risk for observability tools. According to IBM, 53% of security attacks target PII, including authentication details. This highlights the importance of protecting telemetry data from unauthorized access.

SaaS observability tools simplify adoption, but they push telemetry into vendor-managed environments. This limits control over data residency, retention, and deletion, even when the data is encrypted. As a result, many teams are transitioning to on-premises and VPC-hosted observability solutions, an architecture that keeps telemetry within infrastructure they control.

This article explores how self-hosted observability deployments promote data privacy and the security of telemetry data.

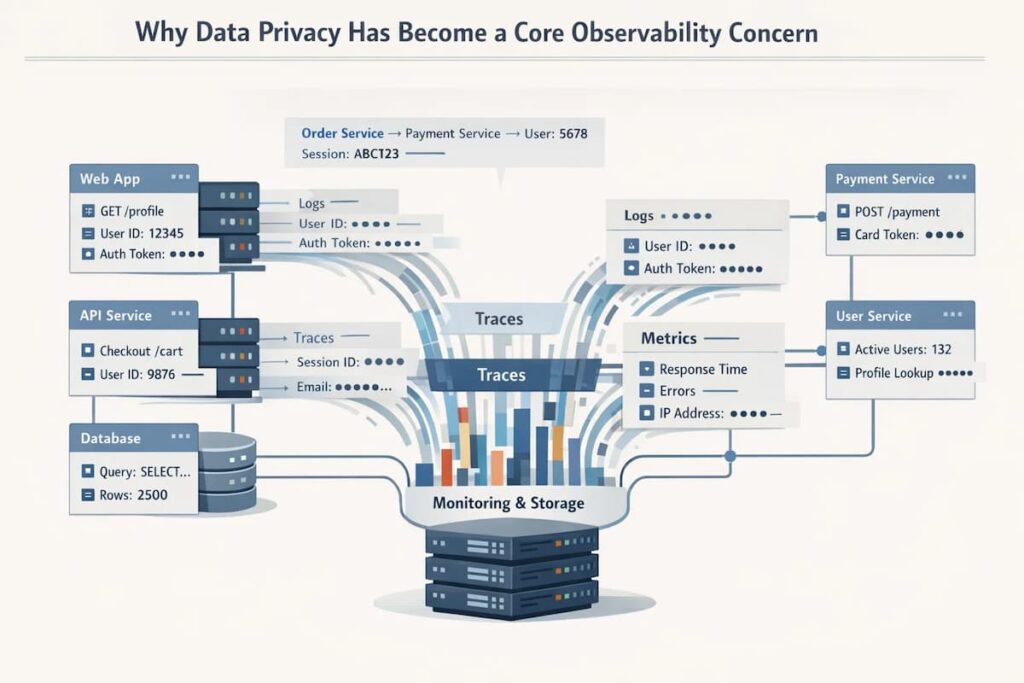

Why Data Privacy Has Become a Core Observability Concern

Observability tools collect and store a significant amount of telemetry data. The key components of observability, including traces, logs, and metrics, contain sensitive details. Telemetry can include personal data. When it does, observability platforms are no longer exempt from data regulations.

Some of the major reasons why privacy is a core observability concern:

- Observability Data Contains Sensitive Information: Telemetry often contains critical information. For example, traces carry requests that can include a user’s API authentication tokens. Observability tools must secure this information and prevent unauthorized access.

- Privacy Regulatory Requirements: Laws such as GDPR, CCPA, and HIPAA regulate how personal data is handled, and they do not exempt telemetry data. Security frameworks such as SOC 2 and ISO 27001 add their own control and audit expectations. Failure to comply can attract heavy penalties and litigation, making data privacy a priority for many enterprises.

- Observability systems have become high-value data stores: Modern observability systems generate large volumes of data. Over time, they become high-value data stores with complete records of how systems function and how users behave. These records include user sessions with sensitive information such as user IDs, email addresses, and authentication credentials. Enterprises are required to handle this data according to specific regional or country regulations.

- Security incidents due to data exposure: SaaS observability platforms send data to third-party vendor servers. Enterprises must account for third-party access, shared infrastructure, and data residency constraints. For security-sensitive teams, this loss of architectural control raises serious privacy concerns.

Data Privacy in an APM Context

Organizations running a SaaS business generate significant telemetry data. The telemetry usually contains critical information, including user names and addresses. Observability tools must secure this vital information and prevent unauthorized access.

To understand what data privacy means in an APM context, it is essential to distinguish the different stages: collection, processing, and control over collected data.

Data Collection

Data collection covers the telemetry gathered at the source. This can be traces, logs, or metrics from applications or infrastructure. At this stage, collecting sensitive information, such as user identifiers, increases security risk. Once the data is collected, safeguarding it against unauthorized access becomes a priority.

Data Processing

Data processing includes everything that happens after the data is collected, such as parsing, sampling, and storage. During processing, observability platforms add metadata such as user context and service names. Without data control measures, this enrichment makes sensitive data easier to search, correlate, and share, which increases privacy exposure.

Data Ownership and Control

Data control concerns which entity is responsible for making decisions about the data. These decisions include where the data is stored, who can access it, which regions it flows to, and how long it is retained. This matters because privacy obligations include limiting internal access, enforcing retention limits, and complying with regional regulations.

Why encryption alone does not solve privacy concerns

Encryption is critical, but it only enforces confidentiality for data in transit and at rest. It does not address the main privacy questions in APM.

Encryption Does Not Enforce Minimization

Privacy laws and governance frameworks expect purpose limitation and data minimization. For example, GDPR requires businesses to collect only the data needed for the purpose for which it is processed.

Global frameworks such as SOC 2 and ISO 27001 also require organizations to define purpose, limit data collection, and demonstrate that only necessary data is processed and retained. The purpose can be for debugging and must be constrained to that operation only.

- Minimization is a policy, not a technical control: Encryption only protects data but does not dictate the data that should be collected. An enterprise can encrypt vast data sets, yet still violate privacy laws.

- Purpose limitation: Data should only be processed for the specific purpose for which it was collected. For example, APM needs data for debugging but might collect personally identifiable information (PII) as part of the logs. Encrypting the logs does not make collecting the PII lawful if it was not necessary for debugging.
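One way to picture minimization at the collection stage is an attribute allowlist applied before telemetry leaves the application. The following is a minimal sketch, not a prescribed method: the `ALLOWED_ATTRIBUTES` set and the `minimize_attributes` helper are hypothetical names, and the attribute keys are illustrative.

```python
# Hypothetical allowlist: attribute keys judged necessary for debugging.
ALLOWED_ATTRIBUTES = {"http.method", "http.route", "http.status_code", "service.name"}


def minimize_attributes(attributes: dict) -> dict:
    """Return a copy that keeps only allowlisted attribute keys."""
    return {key: value for key, value in attributes.items() if key in ALLOWED_ATTRIBUTES}


span_attributes = {
    "http.method": "POST",
    "http.route": "/checkout",
    "http.status_code": 500,
    "user.email": "jane@example.com",  # not needed to debug the failure
}

print(minimize_attributes(span_attributes))
```

An allowlist drops anything not explicitly approved, so a new sensitive field is excluded by default rather than collected until someone notices it.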

Encryption Fails to Prevent Data Exposure

Encryption prevents unauthorized individuals from intercepting data. It does not address issues such as authorized internal access or misuse.

- Internal Access: Encrypted data needs to be decrypted for authorized internal access. An authorized employee can see, use, and copy the data. Encryption doesn’t stop authorized employees from accessing and misusing data.

- Insufficient Audit Trails: Privacy measures require robust access controls and audit trails to monitor data access. Encryption does not provide either. Without an audit system, an authorized employee could access and use decrypted sensitive information without leaving a record.

Encryption Does Not Prevent Over-collection

- Encryption does not prevent logging tokens or personal data: It does not change the fact that data was overcollected. It only focuses on preventing data interception. This can go against regulatory requirements that demand minimization.

- Regulatory requirements: Regional regulations require minimization. Organizations that overcollect data can violate laws like GDPR even if the data is encrypted.

If you log tokens, personal data, or payloads, encryption doesn’t change the fact that you collected them. It only changes who can intercept them on the wire or steal them from disk.
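Since encryption does not undo over-collection, sensitive values have to be removed before they are stored. The sketch below masks email addresses and bearer tokens in a log message. It assumes simple regular expressions are adequate for illustration; the patterns and the `redact` function are hypothetical, and real deployments would need patterns tuned to their own data.

```python
import re

# Illustrative patterns only; they will not catch every email or token format.
EMAIL_PATTERN = re.compile(r"[A-Za-z0-9._%+-]+@[A-Za-z0-9.-]+\.[A-Za-z]{2,}")
BEARER_PATTERN = re.compile(r"bearer\s+[A-Za-z0-9._~+/=-]+", re.IGNORECASE)


def redact(message: str) -> str:
    """Mask bearer tokens and email addresses in a log message."""
    message = BEARER_PATTERN.sub("Bearer [REDACTED]", message)
    return EMAIL_PATTERN.sub("[REDACTED_EMAIL]", message)


line = "login failed for jane@example.com with Authorization: Bearer abc123.def456"
print(redact(line))
# login failed for [REDACTED_EMAIL] with Authorization: Bearer [REDACTED]
```

Pattern-based redaction is a safety net rather than a substitute for not logging the values in the first place.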

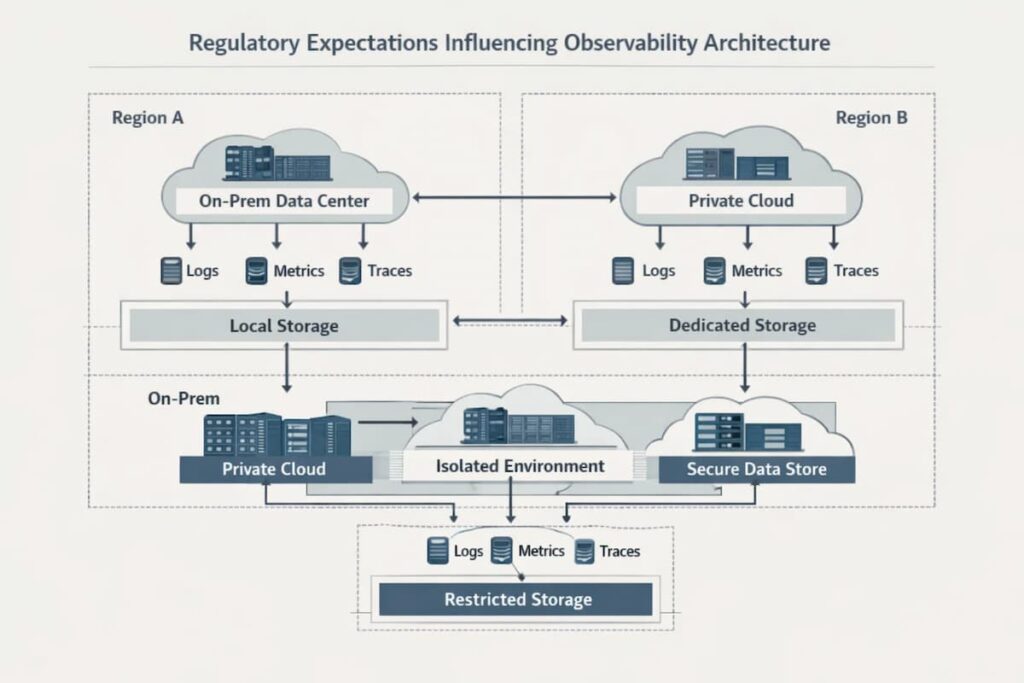

Regulatory Expectations Influencing Observability Architecture

Why compliance requirements increasingly dictate where telemetry can live

Telemetry data is no longer treated as mere operational data. Logs, traces, and metrics now carry sensitive data governed by regulations. Architecture choices now have compliance implications.

- Telemetry data is regulated data: Logs and traces can include sensitive data such as PII, IP addresses, and authentication tokens. Under regulations like the GDPR and HIPAA, this information is classified as sensitive. This makes the location and handling of the data subject to regional laws.

- Data residency concerns: Data residency and sovereignty are now architectural concerns. Regulations such as GDPR restrict transfers of personal data outside a jurisdiction, and some regional laws require data to remain within it. Enterprises therefore choose an observability architecture where telemetry stays in environments or regions they control.

- Industry-Specific Compliance Standards: Highly regulated industries like finance, healthcare, and government impose data retention policies and audit trail requirements. Telemetry often includes sensitive details, and storing it in non-compliant regions might cost enterprises their certifications.

- Supply Chain and Third-Party Risks: Observability tools often integrate with vendors. Regulations like the US’s CMMC (Cybersecurity Maturity Model Certification) or the EU’s NIS2 Directive require vetting data flows through the entire chain. If telemetry traverses unauthorized regions, it could expose organizations to supply chain attacks or legal liabilities.

Limitations of region-based SaaS guarantees

Most SaaS observability vendors offer region-based assurances. However, these assurances do not always satisfy current regional data laws. They can fall short because of:

- Third-party Complexity: SaaS companies often use third-party sub-processors to store and process data. If the third-party vendors fail to comply, the data controller (the customer) can be held responsible.

- Jurisdictional Access Risk: A major limitation is the possibility of a government accessing data stored by a vendor under their jurisdiction, even if physical servers are in a compliant region. These limitations are one reason many security teams reassess SaaS-only observability platforms, and why discussions around Datadog alternatives often arise when data residency and jurisdictional access become audit concerns.

- Limited Global Coverage: Vendors offer hosting in only a limited set of regions, which leaves compliance gaps. For example, a company operating in Southeast Asia might have to store telemetry in Europe, potentially violating local rules.

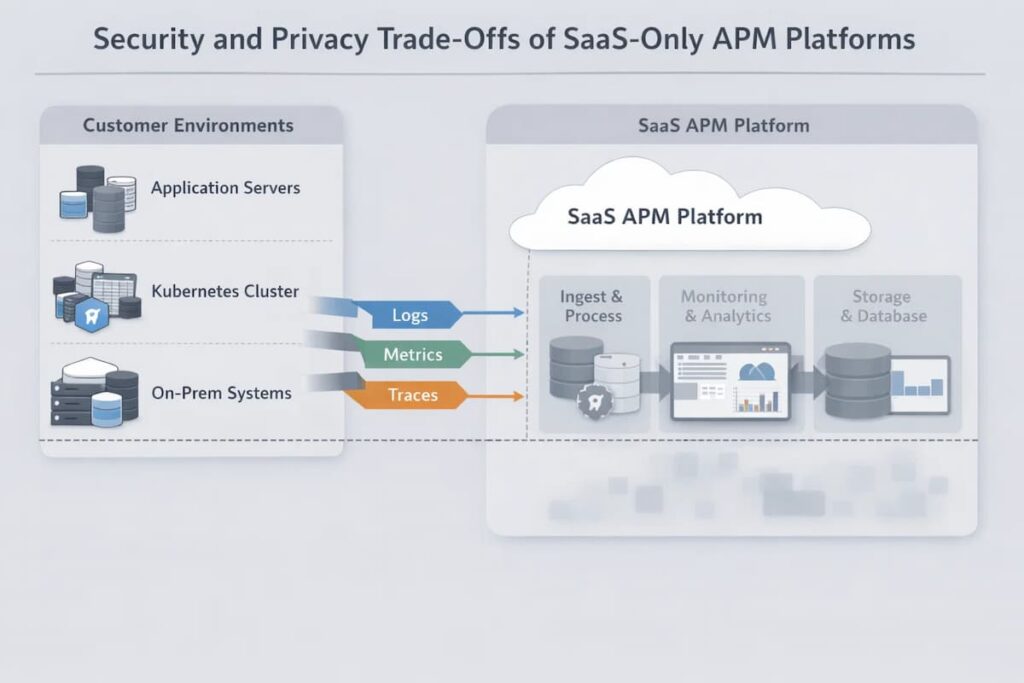

Security and Privacy Trade-Offs of SaaS-Only APM Platforms

Cloud-hosted observability with SaaS-only Application Performance Management (APM) platforms comes with privacy trade-offs, including:

- Shared infrastructure: SaaS observability platforms utilize cloud infrastructure that multiple users share. Even though efforts are being made to keep things separate, a problem in one environment could still affect other customers who share the same hardware or network.

- Vendor-managed access paths: Most SaaS platforms let the vendor control who can access telemetry. Data is transmitted to third parties, and enterprises have little control over it once it arrives. Most enterprises have to trust the provider’s IAM policies to protect data privacy.

- Limited visibility into internal controls: Customers usually see only high-level statements about how vendors handle security and compliance. Day-to-day controls, audits, and incident handling are rarely visible.

As teams evaluating observability platforms dig deeper into how these systems handle data, the discussion often moves beyond dashboards and alerts. When organizations look for alternatives to SaaS-first APM tools, questions around data access, retention, and architectural control tend to surface early.

How proprietary data pipelines increase long-term risk:

- Vendor lock-in through instrumentation: Many SaaS APM platforms depend on proprietary agents and formats. Once applications are instrumented this way, switching tools or moving to self-hosted options is no longer a clean change. It usually involves rework and lost continuity.

- Dependence on vendor security: With proprietary pipelines, security is mostly the vendor’s problem. If something breaks in their ingestion or processing layer, customers can only wait for a fix.

- Limited portability: SaaS vendors that make data hard to move increase long-term risk. If a vendor changes its terms of service or goes out of business, teams may have trouble exporting their historical performance data in a usable form. This has long-term effects on compliance.

On-Prem and Self-Hosted APM as a Privacy-First Model

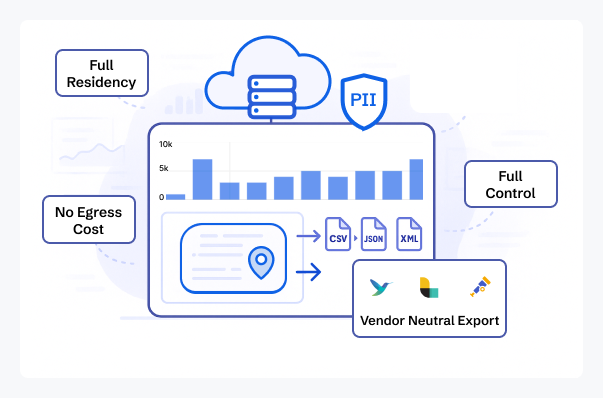

On-prem and self-hosted APM keep sensitive telemetry in a controlled infrastructure. Telemetry is not exported to third-party servers, guaranteeing data control and reducing the risk of a security attack.

How on-prem APM changes the data-security threat model

- Customer-controlled storage: Observability data is ingested and stored inside the customer’s infrastructure. The entire telemetry pipeline runs within the customer’s cloud. Customers have control over the retention period, where data is stored, and how it is processed.

- Control over infrastructure: The organization controls the physical and virtual infrastructure. This gives teams the opportunity to implement strong security measures, including access controls and encryption.

- Reducing third-party risks: Data never leaves the organization’s infrastructure, which reduces reliance on third-party vendors for security. Full control helps the team comply with data localization laws and implement PII safety standards.

- Customization and isolation: Organizations can implement customized security protocols tailored to their environment and security policies.

Benefits of keeping observability inside your infra

On-prem data centers

The on-prem deployment option supports data privacy and security through:

- Data sovereignty: Teams have full control over data. This makes it easier to comply with internal data policies and regional regulations such as the GDPR or CCPA, many of whose provisions focus on maintaining data confidentiality and seeking user consent for the use of data.

- Reduce Attack Surface: Keeping data in-house reduces the security risk that occurs while data is in transit. Potential data breach risks arise when transmitting data to external cloud providers and storing it on a third-party vendor’s server.

- Physical Control: You own the encryption keys. The observability vendor cannot see your data unless you explicitly grant it access to your environment.

Private cloud/VPCs

The private cloud or VPC deployment option supports data privacy and security through:

- Data isolation: The data within the VPC is separated from other public cloud users. It mitigates the risks emanating from a shared infrastructure.

- Access control: Security policies can be set using network Access Control Lists (ACLs), which filter both incoming and outgoing traffic and limit which teams and systems can reach sensitive information.

Understanding Vendor Lock-In in Observability

Vendor lock-in in observability is often discussed as a pricing or tooling concern, but in practice, it runs much deeper. Lock-in affects how telemetry is gathered, stored, accessed, and managed over time in current APM platforms.

What lock-in looks like in APM tools

Proprietary agents

Many APM platforms use vendor-specific agents that tightly couple applications to a single backend. These agents frequently implement proprietary instrumentation APIs, configuration formats, and runtime requirements. Once services are tightly integrated with them, replacing the agents takes a lot of work, especially in distributed systems with microservices.

Concerns around proprietary agents and closed data models are also why teams exploring long-term data governance often review alternatives to SaaS platforms such as Dynatrace, New Relic, or Datadog as part of their observability strategy.

Closed schemas and data models

Observability vendors often have their own schemas for logs, metrics, and traces. This makes it hard to understand or use telemetry data outside the platform where it was created. Over time, companies lose the ability to query, correlate, or move data in other ways, even if the raw information is still there.

Limited data exports

Many tools claim to support data export, but these claims are often overstated: the tools may export only certain types of data, at a limited rate, or without critical context such as high-cardinality attributes. In practice, teams may discover that they cannot fully recover historical observability data from the vendor’s environment.

Why vendor lock-in becomes a data governance problem

As observability data increasingly contains sensitive operational and user context, vendor lock-in affects more than tooling choice. Organizations lose direct control over how that data is governed.

This creates challenges, including:

- Data ownership and retention

- Compliance and audit readiness

- Internal security policies and access reviews

- Consistent data handling across environments and regions

CubeAPM’s Architecture: Privacy by Design

Self-hosted Deployment

CubeAPM is deployed within the customer’s infrastructure. All telemetry data operations are performed within the end user’s environment. Data is not exported to third-party servers. Observability data is therefore subject to the enterprise’s security policies.

VPC and private cloud isolation

CubeAPM also runs in a private cloud environment. Observability data stays inside the private cloud and is subject to the same firewall rules, security groups, and security policies as other workloads. The telemetry network is isolated from external networks.

What this means for data access, retention, and internal controls

CubeAPM runs within customer infrastructure, giving customers full control over observability data. It makes it simple to connect authentication, authorization, and role separation with current identity systems, so there’s no need to depend on access methods managed by the vendor.

CubeAPM allows organizations to define retention policies that align with internal governance requirements. Teams can determine how long telemetry is stored, where it resides, and when it is deleted. Retention decisions are driven by operational value, compliance obligations, and storage policies, rather than fixed defaults imposed by a SaaS provider.
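To make the idea of a team-defined retention policy concrete, here is a minimal sketch of the decision a cleanup job would make. It does not show CubeAPM’s configuration format; the `RETENTION` windows and the `is_expired` helper are hypothetical and exist only to illustrate per-signal retention.

```python
from datetime import datetime, timedelta, timezone

# Hypothetical retention windows per signal type, set by internal policy.
RETENTION = {
    "traces": timedelta(days=7),
    "logs": timedelta(days=30),
    "metrics": timedelta(days=90),
}


def is_expired(signal: str, recorded_at: datetime, now: datetime) -> bool:
    """Return True when a record is older than its signal's retention window."""
    return now - recorded_at > RETENTION[signal]


now = datetime(2025, 1, 31, tzinfo=timezone.utc)
recorded = datetime(2025, 1, 20, tzinfo=timezone.utc)  # 11 days before `now`

print(is_expired("traces", recorded, now))  # True: older than 7 days
print(is_expired("logs", recorded, now))    # False: within 30 days
```

Keeping the windows in one place makes it straightforward to show an auditor what is kept and for how long.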

How CubeAPM promotes enterprise privacy and security requirements

Running observability inside customer-controlled environments allows teams to apply the same policies used for other internal systems. Regulatory requirements such as data residency, audit logging, and incident response are easily applied across observability data.

CubeAPM is OpenTelemetry native. Telemetry collection is based on open, standards-driven instrumentation, allowing organizations to retain portability across tools and environments. CubeAPM allows teams to switch observability backends without re-instrumenting their applications.

On-Prem Security Controls in Practice

When observability runs inside your environment, it falls under the same security model as the rest of the stack. That means the controls are not theoretical. They are the same ones already used for production systems.

Role-based access control (RBAC)

Access to telemetry is tied to internal identity systems. Engineers, SREs, and security teams get different levels of visibility based on role. No shared vendor accounts. No external support access by default. Access decisions follow the same approval and review processes used elsewhere in the organization.
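The role-based model described above can be sketched as a deny-by-default permission lookup. The role names, permission strings, and `is_allowed` function below are hypothetical; in practice the mapping would come from the organization’s identity system.

```python
# Hypothetical roles and permissions; names are illustrative.
ROLE_PERMISSIONS = {
    "engineer": {"read:traces", "read:metrics"},
    "sre": {"read:traces", "read:metrics", "read:logs", "write:alerts"},
    "security": {"read:logs", "read:audit"},
}


def is_allowed(role: str, permission: str) -> bool:
    """Deny by default: unknown roles receive no permissions."""
    return permission in ROLE_PERMISSIONS.get(role, set())


print(is_allowed("sre", "read:logs"))               # True
print(is_allowed("engineer", "read:logs"))          # False
print(is_allowed("vendor-support", "read:traces"))  # False: role is not defined
```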

Network segmentation

Self-hosted APM lives inside existing network boundaries. Ingestion endpoints, storage backends, and query layers can be isolated using VPCs, subnets, firewalls, and private routing. Telemetry traffic does not traverse public networks unless explicitly configured to do so.
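A simple guard can verify that a configured ingestion endpoint sits inside the expected internal ranges before telemetry is sent to it. This sketch uses Python’s standard `ipaddress` module; the `TELEMETRY_NETWORKS` ranges and the `is_internal_endpoint` helper are assumptions made for illustration.

```python
import ipaddress

# Hypothetical internal ranges reserved for the observability subnets.
TELEMETRY_NETWORKS = [
    ipaddress.ip_network("10.20.0.0/16"),
    ipaddress.ip_network("192.168.50.0/24"),
]


def is_internal_endpoint(address: str) -> bool:
    """Return True when the address falls inside an approved telemetry subnet."""
    ip = ipaddress.ip_address(address)
    return any(ip in network for network in TELEMETRY_NETWORKS)


print(is_internal_endpoint("10.20.3.4"))  # True
print(is_internal_endpoint("8.8.8.8"))    # False
```

A check like this complements firewall rules and routing. It does not replace them, but it can catch a misconfigured exporter early.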

Internal audit logging

Access to observability data can be logged the same way as access to application databases or internal services. Queries, configuration changes, and permission updates leave an audit trail. This makes it possible to answer who accessed what, when, and why.
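An audit entry that answers who, what, when, and why can be as simple as one structured JSON line per access. The field names and the `audit_record` function in this sketch are hypothetical; the point is that each entry carries all four answers.

```python
import json
from datetime import datetime, timezone


def audit_record(user: str, action: str, resource: str, reason: str, at: datetime) -> str:
    """Build one JSON audit line answering who, what, when, and why."""
    return json.dumps(
        {
            "timestamp": at.isoformat(),
            "user": user,
            "action": action,
            "resource": resource,
            "reason": reason,
        },
        sort_keys=True,
    )


record = audit_record(
    user="alice",
    action="query",
    resource="traces/checkout-service",
    reason="incident review",  # free-text justification supplied by the user
    at=datetime(2025, 1, 31, 12, 0, tzinfo=timezone.utc),
)
print(record)
```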

Why observability should follow production security controls

Observability systems see deep into how applications work. They capture execution paths, request metadata, and failure context. Treating them differently from production workloads creates a blind spot. When the same controls apply, observability becomes easier to defend during audits and easier to reason about during incident reviews.

Conclusion

Self-hosted deployment is designed to deliver an observability experience that supports data privacy and on-prem security. The underlying architecture keeps data within the customer’s infrastructure. This supports data sovereignty and makes it easier for companies to comply with regional regulations. Moreover, keeping sensitive data on the physical premises or in a VPC removes the need to export it to third-party servers, reducing security risks during transmission.

CubeAPM takes a security-first approach. Deploy CubeAPM in your VPC and stop exporting your sensitive telemetry to third parties. See how our on-prem architecture makes regional compliance effortless.

Disclaimer: The information in this article reflects the latest details available at the time of publication and may change as technologies and products evolve.

FAQs

1. What does data privacy entail for modern observability platforms?

In observability, data privacy concerns who can see telemetry data such as logs, traces, and metrics. Because this data often contains tokens, user IDs, or system information, privacy depends on where the data is held, who can access it, and how long it is retained.

2. Why is privacy critical in the observability context?

Observability tools get raw operational data directly from running systems. Logs and traces can expose private information. When this information is processed via vendor-managed SaaS platforms, companies can’t directly control who can see it, how long it is kept, or how it is deleted.

3. How can on-prem observability improve security?

On-prem observability keeps telemetry in customer-controlled infrastructure. Teams can apply their own network controls, identity policies, and audits to observability data.

4. Why is encryption not enough to keep observability data safe?

Encryption does not govern internal practices such as data collection. Organizations can still overcollect data even when it is encrypted, which can violate regional regulations. Real privacy requires control over the infrastructure, access paths, and retention policies.

5. How do compliance requirements change how observability is designed?

Many rules apply to observability data, as logs and traces may contain restricted information. To meet data residency and audit requirements, teams generally prefer to set up observability on their servers or in a private cloud.