Nginx sits at the core of today’s internet, handling millions of requests per second for APIs, streaming platforms, and e-commerce sites. When it fails, it often fails loudly—502 Bad Gateway responses, upstream timeouts, SSL errors, or worker crashes—all captured inside its error logs.

These logs act as a forensic trail, pinpointing whether issues stem from misconfigurations, backend unavailability, or resource exhaustion. Ignoring them risks service disruptions, frustrated users, and revenue loss. Downtime is expensive: recent estimates put outage costs at $9,000 per minute on average.

Monitoring Nginx error logs continuously enables early detection, faster troubleshooting, and correlation with performance metrics and traces, so teams can resolve issues before customers notice.

This guide shows how to monitor Nginx error logs effectively and how CubeAPM makes it seamless, real-time, and cost-efficient.

What Are Nginx Error Logs?

Nginx error logs are system-generated records that capture abnormal events and failures occurring within the Nginx web server. Unlike access logs—which track every client request—error logs document issues like failed connections, upstream service crashes, misconfigured SSL certificates, permission errors, or resource exhaustion. Each entry typically contains a timestamp, severity level, process ID, and diagnostic message, making them critical for troubleshooting and performance tuning.
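To make the entry layout concrete, here is a minimal Python sketch that splits one error log line into the parts described above. The function name `parse_error_line` and the returned keys are illustrative choices, and the pattern assumes the default error log layout shown in the examples later in this guide.

```python
import re
from datetime import datetime

# Assumed default layout: "YYYY/MM/DD HH:MM:SS [level] pid#tid: *cid message".
# The "*cid" connection counter is optional (startup errors do not have one).
LINE_RE = re.compile(
    r"^(?P<ts>\d{4}/\d{2}/\d{2} \d{2}:\d{2}:\d{2}) "
    r"\[(?P<level>\w+)\] "
    r"(?P<pid>\d+)#(?P<tid>\d+): "
    r"(?:\*(?P<cid>\d+) )?"
    r"(?P<message>.*)$"
)


def parse_error_line(line):
    """Return a dict of fields for one error log line, or None if it does not match."""
    m = LINE_RE.match(line.strip())
    if m is None:
        return None
    return {
        "timestamp": datetime.strptime(m["ts"], "%Y/%m/%d %H:%M:%S"),
        "severity": m["level"],
        "pid": int(m["pid"]),
        "connection": int(m["cid"]) if m["cid"] else None,
        "message": m["message"],
    }
```

Lines that do not follow this layout (for example, continuation lines) return None so the caller can decide whether to skip or keep them as raw text.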

For businesses, monitoring these logs is far more than an operational task—it directly safeguards uptime, user experience, and revenue. By continuously analyzing error logs, teams can:

- Detect outages early: Spot spikes in 502 or 504 errors before they escalate into full downtime.

- Optimize performance: Identify recurring upstream timeouts and tune worker or proxy settings.

- Ensure compliance and security: Track SSL failures or permission issues that may expose vulnerabilities.

- Control costs: Prevent resource misallocation caused by misconfigurations or excessive retries.

Example: Debugging API Gateway Timeouts

A fintech platform using Nginx as an API gateway noticed recurring 504 Gateway Timeout errors during peak traffic. By analyzing error logs, the ops team traced the issue to exhausted worker connections and delayed responses from a payment microservice. Once correlated with system metrics, they increased the worker_connections limit and tuned keepalive settings, reducing timeout errors by 70% during load spikes.

Common Issues Detected in Nginx Error Logs

502 / 504 Gateway Errors

These errors typically occur when Nginx cannot get a timely or valid response from the upstream service it proxies to. Causes include backend crashes, slow databases, or exhausted connections. In large-scale APIs, recurring 502 or 504 spikes often reveal microservice bottlenecks.

Example log entry:

2025/09/29 10:12:43 [error] 23145#0: *174 upstream timed out (110: Connection timed out) while reading response header from upstream, client: 192.168.1.10, server: api.example.com, request: "GET /checkout HTTP/1.1"

Connection Refused / Timeout

When a backend is unreachable—due to downtime, firewall rules, or DNS failures—Nginx records “connection refused” or timeout errors. Monitoring these logs is critical in distributed systems where backend pods or instances may restart frequently.

Example log entry:

2025/09/29 10:15:21 [error] 23145#0: *189 connect() failed (111: Connection refused) while connecting to upstream, client: 203.0.113.24, server: api.example.com, request: "POST /login HTTP/1.1"

Permission Denied / Config Errors

Misconfigured file paths, invalid SSL certificates, or incorrect permissions generate immediate log entries. These errors often block startup, reload, or secure connections, leading to outages if undetected.

Example log entry:

2025/09/29 10:18:06 [crit] 23145#0: *205 SSL_do_handshake() failed (SSL: error:14090086:SSL routines:ssl3_get_server_certificate:certificate verify failed) while SSL handshaking, client: 198.51.100.5, server: secure.example.com

Resource Exhaustion

When Nginx reaches worker or buffer capacity, the error log reveals it with explicit warnings. This is a leading cause of hidden performance degradation—connections stall, retries pile up, and latency spikes.

Example log entry:

2025/09/29 10:20:44 [alert] 23145#0: *217 worker_connections are not enough while connecting to upstream, client: 203.0.113.88, server: app.example.com, request: "GET /dashboard HTTP/1.1"
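The four issue types above can be told apart by simple substring checks on the message. The sketch below is one way to do that in Python; the category names and the `classify` helper are hypothetical, and the substrings are taken from the example entries in this section plus the standard "Permission denied" system error text.

```python
# (substring, category) pairs; the first match wins.
PATTERNS = [
    ("upstream timed out", "upstream_timeout"),
    ("Connection refused", "connection_refused"),
    ("SSL_do_handshake() failed", "ssl_handshake"),
    ("worker_connections are not enough", "resource_exhaustion"),
    ("Permission denied", "permission_denied"),
]


def classify(message):
    """Map an error log message to a coarse issue category."""
    for needle, category in PATTERNS:
        if needle in message:
            return category
    return "other"
```

A lookup list like this is easy to extend as new recurring messages show up in your own logs.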

Why Monitoring Nginx Error Logs Is Important

Catch Upstream Failures and 5xx Spikes Early

Nginx error logs provide the earliest signal of upstream breakdowns (timeouts, bad headers, or connection resets) long before user complaints surface. By monitoring spikes in the error entries behind 502 and 504 responses, teams can isolate failing microservices or overloaded upstreams in real time. Keep in mind that most upstream failures are written at the error level, as in the example entries above, while the higher levels (crit, alert, emerg) flag more severe conditions such as failed handshakes or exhausted worker connections, so alerting should cover both.

Reduce MTTR with Root-Cause Clues

Error logs don’t just record that a request failed—they capture why: handshake errors, permission issues, or socket path misconfigurations. This context drastically reduces mean time to resolution (MTTR) when correlated with system metrics. According to Atlassian’s benchmarks, teams that rely on log-driven root-cause analysis shorten recovery times by over 30% compared to metric-only workflows.

Protect Revenue by Containing Downtime Costs

Every minute a gateway throws 502 errors translates into lost revenue. Error log alerts on critical error levels allow for immediate failover or rollback actions. Industry estimates put the cost of downtime at roughly $9,000 per minute on average.

Guard Against Config Drift and Unsafe Reloads

Nginx error logs instantly capture startup and reload failures—missing includes, syntax errors, or deprecated directives. Without monitoring, these issues can silently degrade performance or block traffic after a reload. Surfacing these entries in dashboards ensures that unsafe changes are caught before they scale out across environments.

Prevent TLS/SSL Outages Before They Break Production

Expired or misconfigured TLS certificates are a leading cause of outages. Nginx logs emit clear messages like SSL_do_handshake() failed whenever there’s a mismatch or expiry. Monitoring these errors helps teams catch issues before end users see downtime. A 2024 Venafi report found that 72% of organizations experienced at least one certificate-related outage in the past year, and 67% reported monthly outages.

Spot Capacity Pressure Before It Cascades

When Nginx workers or buffers hit their limits, errors like “worker_connections are not enough” appear in the error log. Monitoring these signals helps teams quickly distinguish whether traffic bottlenecks are caused by Nginx limits or upstream app slowness. Addressing these early with targeted tuning avoids unnecessary infrastructure scaling and improves efficiency.

Strengthen Security with Actionable Server-Side Signals

Nginx error logs capture critical signals of malicious or malformed requests—protocol mismatches, invalid SSL renegotiations, or denied resource access. Industry surveys confirm that many organizations tie outages and incidents directly to misconfigurations and certificate hygiene. Incorporating these logs into security pipelines provides an additional early-warning system against abuse.

Key Metrics to Track for Monitoring Nginx Error Logs

Monitoring Nginx error logs becomes more powerful when combined with key metrics. These metrics convert raw log entries into measurable signals, helping teams detect, triage, and resolve issues faster.

Availability & Reliability Metrics

These metrics highlight whether upstream services and the Nginx gateway itself are reliably serving requests.

- Error Rate (5xx responses): Tracks the percentage of failed requests logged as server errors. A sudden spike in 502 or 504 logs usually indicates upstream instability. Threshold: alert if the error rate exceeds 2–5% of total requests for more than 1 minute.

- Connection Failures: Measures logs with “connection refused” or “connection reset.” This shows backend downtime or misconfigured firewalls. High connection failures sustained over several minutes indicate serious backend issues. Threshold: >100 connection errors/minute.

- SSL/TLS Handshake Failures: Surfaces log entries with SSL_do_handshake() failed, highlighting expired or mismatched certificates. This is a leading cause of outages in production. Threshold: >10 handshake errors in 5 minutes.
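Thresholds such as ">10 handshake errors in 5 minutes" amount to counting events inside a trailing time window. The following Python sketch shows that logic in isolation; the `WindowCounter` class is a hypothetical helper for illustration and not part of CubeAPM or Nginx.

```python
from collections import deque


class WindowCounter:
    """Counts events inside a trailing time window and compares to a threshold."""

    def __init__(self, window_seconds, threshold):
        self.window = window_seconds
        self.threshold = threshold
        self.events = deque()

    def add(self, ts):
        """Record an event at epoch-second ts; return True if the threshold is exceeded."""
        self.events.append(ts)
        # Drop events that have fallen out of the trailing window.
        while self.events and self.events[0] <= ts - self.window:
            self.events.popleft()
        return len(self.events) > self.threshold
```

For the handshake threshold above you would create `WindowCounter(300, 10)` and call `add()` with the timestamp of every matching log entry; timestamps are assumed to arrive in order.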

Performance & Latency Metrics

Performance metrics show how quickly upstreams respond and how efficiently Nginx handles requests.

- Upstream Response Time: Correlates timeout errors in logs with backend latency. Long response times often precede 504 entries. Threshold: p95 latency above 2 seconds for critical APIs.

- Request Processing Time: Captures how long Nginx workers take to process requests end-to-end. Log analysis here reveals bottlenecks under load. Threshold: >1 second average across multiple workers.

- Retry/Timeout Frequency: Indicates how often Nginx retries upstream connections due to slow or failing services. Frequent retries increase load and amplify outages. Threshold: >50 retries per minute.
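As a rough illustration of the p95 threshold above, the sketch below computes a nearest-rank percentile over a list of response times in seconds. It assumes the samples were collected elsewhere (for example, from the access log variable `$upstream_response_time`); the `percentile` function name is illustrative.

```python
import math


def percentile(values, pct):
    """Nearest-rank percentile of values; pct is in the range (0, 100]."""
    if not values:
        raise ValueError("no samples")
    ordered = sorted(values)
    # Nearest-rank method: the smallest value with at least pct% of samples at or below it.
    rank = math.ceil(pct * len(ordered) / 100)
    return ordered[rank - 1]
```

Comparing `percentile(samples, 95)` against 2.0 gives the p95 check described for critical APIs. Nearest-rank is only one of several percentile definitions, so results may differ slightly from what a monitoring backend reports.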

Resource & Capacity Metrics

Nginx error logs also surface when workers, buffers, or sockets hit their limits, which directly impacts throughput.

- Worker Connections Usage: Logs like “worker_connections are not enough” indicate saturation. Monitoring this metric avoids dropped connections under peak traffic. Threshold: >80% worker connection utilization.

- Buffer Overflows: Errors in logs show when proxy buffers or client buffers are exceeded. These correlate with slow clients or oversized responses. Threshold: >10 buffer overflow entries in 5 minutes.

- Memory & File Descriptor Exhaustion: Captures log events tied to resource exhaustion (e.g., EMFILE errors, logged as "Too many open files"). These failures block new connections. Threshold: memory or FD usage >85%.
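The error log only tells you when worker connections have already run out. To estimate utilization ahead of that, one option (an assumption here, since this guide does not otherwise cover it) is to read the "Active connections" figure from Nginx's stub_status page and compare it with the configured capacity. The helper names below are hypothetical.

```python
import re


def active_connections(stub_status_text):
    """Extract the 'Active connections' count from stub_status output."""
    m = re.search(r"Active connections:\s*(\d+)", stub_status_text)
    if m is None:
        raise ValueError("not a stub_status page")
    return int(m.group(1))


def utilization(active, worker_processes, worker_connections):
    """Approximate share of connection capacity in use (0.0 to 1.0+)."""
    return active / (worker_processes * worker_connections)
```

This is an approximation: when Nginx proxies a request it also holds a connection to the upstream, so real usage can be higher than the client-side count suggests. Treat the 80% threshold as a conservative trigger rather than an exact limit.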

Security & Compliance Metrics

Error logs provide signals of suspicious or insecure behavior that can impact compliance and security posture.

- Unauthorized Access Attempts: Counts "permission denied" entries and denied SSL requests. Tracking these helps surface brute-force attempts or misconfigurations. Threshold: >20 denied requests in 10 minutes.

- Protocol Errors: Errors from unsupported ciphers, TLS versions, or malformed requests. These often surface during attacks or misconfigured clients. Threshold: sustained >5 protocol errors/minute.

- Configuration Warnings: Log entries showing deprecated or unsafe directives after reload. These must be monitored to avoid compliance gaps. Threshold: alert on any critical-level configuration error.

How to Monitor Nginx Error Logs

Step 1: Install and Deploy CubeAPM

Begin by setting up CubeAPM, the observability backend that ingests and analyzes Nginx error logs.

- For standalone environments, follow the Install CubeAPM guide.

- For Kubernetes deployments, use the Helm-based setup in the Kubernetes install guide.

This ensures you have a centralized platform ready to collect error logs from your Nginx servers.

Step 2: Configure CubeAPM for Log Ingestion

Next, configure CubeAPM so it can ingest structured or plain-text Nginx error logs.

- Define environment-specific variables like base-url and cluster.peers using the configuration guide.

- Make sure token and auth.key.session are set so logs are securely received.

This step ensures your Nginx error logs won’t be dropped or rejected.

Step 3: Forward Nginx Error Logs to CubeAPM

Set up log shippers or agents to send Nginx error logs to CubeAPM.

- Configure your OpenTelemetry collector or Fluent Bit to tail /var/log/nginx/error.log.

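- Let the shipper handle structure. The error_log directive accepts only a file path and a minimum severity level (for example, error_log /var/log/nginx/error.log warn;), and its line format is fixed, so Nginx cannot write error logs as JSON. Instead, extract fields such as severity, client, and upstream in the collector so CubeAPM can index them.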

This lets you query by severity, client IP, or upstream server directly in the CubeAPM UI.

Step 4: Parse and Index Error Log Fields

CubeAPM supports parsing structured fields so you can slice error logs by type.

- Map fields like _msg, severity, and upstream using the Log Monitoring guide.

- This makes it possible to separate SSL handshake failures from worker connection alerts.

Parsing ensures that you can search and alert on precise error categories, not just free-text logs.
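To show what such field extraction might look like, here is a small Python sketch that turns one plain-text error log line into a JSON record. The output keys `_msg`, `severity`, and `upstream` mirror the field names mentioned above; the `to_json` function and both regular expressions are illustrative assumptions, and a real collector would normally do this with its own parser configuration.

```python
import json
import re

# Header of a default-format error log line: timestamp, level, pid, then the message.
HEAD_RE = re.compile(r"^(\S+ \S+) \[(\w+)\] (\d+)#\d+: (.*)$")
# Trailing "key: value" context fields that Nginx appends to request-related errors.
FIELD_RE = re.compile(r'\b(client|server|request|upstream|host): ("[^"]*"|[^,]+)')


def to_json(line):
    """Convert one error log line to a JSON string, or return None if it does not match."""
    m = HEAD_RE.match(line.strip())
    if m is None:
        return None
    ts, level, pid, msg = m.groups()
    record = {"timestamp": ts, "severity": level, "pid": int(pid), "_msg": msg}
    for key, value in FIELD_RE.findall(msg):
        record[key] = value.strip('"')
    return json.dumps(record)
```

The full message is kept in `_msg` so free-text search still works alongside the extracted fields.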

Step 5: Create Alerts for Critical Error Patterns

Once logs are flowing, build alert rules that trigger on high-impact errors.

- Use the alerting integration to notify via email or Slack.

- Example patterns: sudden spike in 502/504 errors, >10 SSL handshake failures in 5 minutes, or repeated “worker_connections are not enough.”

This makes Nginx error logs an early-warning system instead of just a forensic tool.

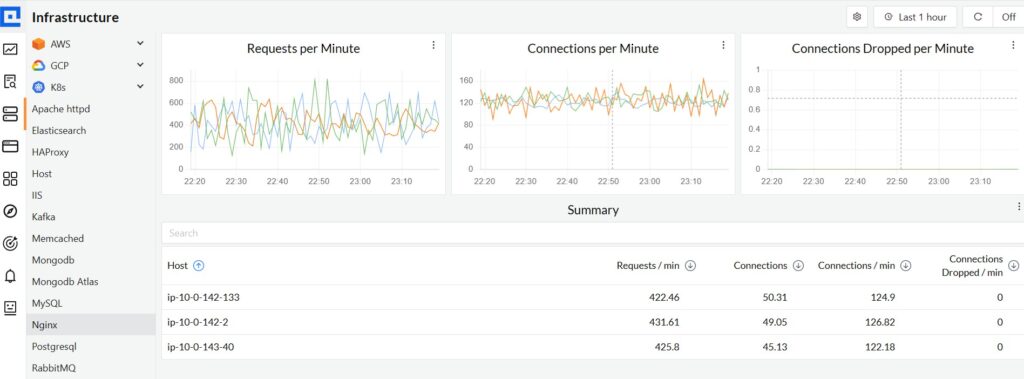

Step 6: Visualize Error Trends in Dashboards

Build dashboards that combine error log data with performance context.

- Chart error counts by type (502, SSL, config).

- Overlay with upstream response times or worker connection usage.

- Use the log query features to drill into specific spikes.

This allows SREs to trace an outage directly from log entry → root cause.
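A chart of error counts by type is, underneath, a per-minute tally. The Python sketch below shows that aggregation on already-categorized entries; the `counts_per_minute` helper and the category labels are hypothetical, and the timestamps are assumed to use the error log layout `YYYY/MM/DD HH:MM:SS`.

```python
from collections import Counter


def counts_per_minute(entries):
    """Tally (timestamp, category) pairs into per-minute buckets.

    Returns a Counter keyed by (minute, category), where minute is 'YYYY/MM/DD HH:MM'.
    """
    buckets = Counter()
    for ts, category in entries:
        minute = ts[:16]  # truncate seconds: 'YYYY/MM/DD HH:MM'
        buckets[(minute, category)] += 1
    return buckets
```

Plotting these buckets over time, one series per category, gives the kind of trend view described in this step.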

Step 7: Validate the Setup with Controlled Failures

Test your monitoring pipeline before relying on it in production.

- Intentionally point Nginx to a dead backend to generate 502 errors.

- Deploy an expired certificate to trigger handshake failures.

- Confirm that CubeAPM ingests the logs, visualizes them in dashboards, and fires alerts.

This validation ensures confidence that Nginx error logs are being monitored correctly.

Real-World Example – Checkout Failures in E-commerce

Challenge: Users Facing 502 Errors During Checkout

An online retailer noticed a sharp increase in 502 Bad Gateway errors during peak sale events. Customers were being dropped at the payment step, leading to abandoned carts and direct revenue loss. The root cause wasn’t obvious from frontend monitoring alone—requests looked fine until they hit the backend gateway.

Solution: Error Logs Ingested into CubeAPM, Correlated with Payment API Latency

By forwarding Nginx error logs into CubeAPM’s Log Monitoring pipeline, the SRE team could correlate spikes in 502 errors with traces showing latency from the payment provider’s API. CubeAPM dashboards overlaid error log counts with upstream response time, confirming that 502s occurred exactly when the API exceeded its 2-second SLA.

Fixes: Optimized Keepalive + Retried Upstream

Armed with this evidence, the team adjusted Nginx keepalive settings to reuse upstream connections efficiently, reducing connection churn during spikes. They also configured conditional retries for transient upstream failures, ensuring payment requests were retried against healthy instances instead of failing immediately.

Result: 40% Fewer Checkout Errors, Higher Conversions

Within two release cycles, the number of failed checkout sessions dropped by 40%, and transaction completion rates improved significantly. By monitoring Nginx error logs through CubeAPM, the retailer turned logs from a passive artifact into a proactive reliability tool, directly boosting conversions and customer satisfaction.

Verification Checklist & Example Alerts

Before relying on CubeAPM for production monitoring, it’s essential to validate that Nginx error logs are being captured, parsed, and acted upon correctly. This checklist ensures your pipeline is healthy and ready.

Verification Checklist

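- Log format: Confirm that the error_log directive points at the expected file and uses a suitable minimum level (for example, warn or error). The error log format itself is fixed and always includes timestamp, severity, and message, so make sure your collector parses those into fields that CubeAPM can use for queries and dashboards.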

- Collector configuration: Verify that your log shipper (OpenTelemetry Collector, Fluent Bit, or Vector) is tailing /var/log/nginx/error.log and forwarding events to CubeAPM’s ingestion endpoint.

- Ingestion health: Check CubeAPM’s Log Monitoring UI for incoming Nginx log entries. Ensure fields like severity, client.ip, and upstream are indexed.

- Alert delivery: Trigger a known error (e.g., point Nginx to a dead upstream) and confirm CubeAPM fires the configured alert via email or Slack.

Example Alerts for Nginx Error Logs in CubeAPM

1. Spike in 502 errors

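Alert when the count of 502 Bad Gateway entries in Nginx error logs exceeds 100 per minute. Error log lines do not carry the HTTP status code themselves, so the status field used here assumes your parsing step derives it or that access logs are ingested alongside.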

condition: count(error_log where status=502) > 100

alert:
  name: "High rate of 502 Bad Gateway errors"
  description: "Triggers when Nginx error log entries with status=502 exceed 100 per minute"
  condition:
    query: 'count(error_log{status="502"}) > 100'
    for: 1m
  severity: high
  notify:
    - email
    - slack

2. Error severity ≥ crit

Trigger an alert whenever a crit, alert, or emerg severity entry is logged.

condition: error_log.severity in ["crit","alert","emerg"]

alert:
  name: "Critical Nginx error log entry detected"
  description: "Fires when severity level is crit, alert, or emerg"
  condition:
    query: 'error_log{severity=~"crit|alert|emerg"} > 0'
    for: 0m
  severity: critical
  notify:
    - email

3. Backend timeout > 5s

Detect upstream timeouts that exceed service-level agreements.

condition: count(error_log where message contains "upstream timed out") > 10

alert:
  name: "Upstream timeout exceeding 5s"
  description: "Triggers when upstream timed out messages appear more than 10 times in 5 minutes"
  condition:
    query: 'count(error_log{message=~"upstream timed out"}) > 10'
    for: 5m
  severity: warning
  notify:
    - slack

These rules make Nginx error logs actionable, ensuring your team gets notified before a customer feels the impact.
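The "severity ≥ crit" rule relies on Nginx's ordering of log levels. As a minimal sketch, the Python snippet below encodes that ordering and checks whether an entry meets a minimum level; the `at_least` helper is a hypothetical name, not a CubeAPM function.

```python
# Nginx error_log levels, from least to most severe.
LEVELS = ["debug", "info", "notice", "warn", "error", "crit", "alert", "emerg"]


def at_least(level, minimum):
    """Return True if level is at or above minimum in Nginx's severity order."""
    return LEVELS.index(level) >= LEVELS.index(minimum)
```

Comparing by position rather than listing levels by hand makes it easy to lower the bar later, for example to `error`, without rewriting the rule.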

Conclusion

Monitoring Nginx error logs is not just an operational task—it’s a frontline defense against outages, degraded performance, and lost revenue. Error logs capture the earliest signals of upstream failures, SSL issues, and resource bottlenecks that directly impact users.

By analyzing these logs continuously, teams can troubleshoot faster, reduce downtime, and prevent costly incidents. When combined with metrics and traces, error logs become a powerful tool for ensuring reliability and compliance in modern digital businesses.

CubeAPM makes this seamless with real-time log ingestion, smart correlation, and cost-efficient monitoring. Start monitoring Nginx error logs with CubeAPM today and deliver uninterrupted user experiences.

Disclaimer: The information in this article reflects the latest details available at the time of publication and may change as technologies and products evolve.

FAQs

1. Where are Nginx error logs stored by default?

On most Linux distributions, Nginx error logs are stored at /var/log/nginx/error.log. If you’ve customized log paths in the Nginx configuration file (nginx.conf), the location may differ. Always check the error_log directive to confirm.

2. Can Nginx error logs be rotated automatically?

Yes. Tools like logrotate can automatically rotate, compress, and archive Nginx error logs. Proper rotation prevents logs from consuming disk space and ensures long-term retention policies are enforced.

3. How can I monitor Nginx error logs in real time?

You can tail the logs directly using tail -f /var/log/nginx/error.log. For production environments, use observability platforms like CubeAPM to stream logs in real time, correlate them with metrics, and trigger alerts on anomalies.
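If you want to see roughly what a shipper does when it follows a file, the Python sketch below reads only the lines appended since the last call. The `read_new_lines` function is a hypothetical helper for illustration; real agents add buffering, checkpointing, and more careful rotation handling.

```python
import os


def read_new_lines(path, offset):
    """Return (lines, new_offset) for complete lines appended after byte offset."""
    if os.path.getsize(path) < offset:
        # The file shrank, so it was rotated or truncated: start from the beginning.
        offset = 0
    with open(path, "rb") as f:
        f.seek(offset)
        chunk = f.read()
    # Consume only complete lines; a partial last line is left for the next call.
    end = chunk.rfind(b"\n") + 1
    lines = chunk[:end].decode("utf-8", errors="replace").splitlines()
    return lines, offset + end
```

Calling this in a loop with a short sleep, and passing the returned offset back in each time, approximates `tail -f` while tolerating log rotation.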

4. Do Nginx error logs include client IP addresses?

Yes. Nginx error logs often include the client IP, request path, and upstream server information alongside the error message. This helps in tracing problematic requests or identifying attack patterns.

5. How long should I retain Nginx error logs?

Retention depends on compliance and business needs. For debugging, 30 days may be sufficient, but regulated industries often require 90–180 days. CubeAPM supports flexible log retention so teams can balance compliance with cost.