Redis monitoring ensures your databases run efficiently and reliably. Redis powers over 70% of real-time caching systems worldwide, yet as systems scale, teams face issues like memory fragmentation, latency spikes, hidden key evictions, and replication lag that degrade performance and are difficult to detect without full visibility.

CubeAPM is the best Redis monitoring solution. It provides OpenTelemetry-native Redis database monitoring that unifies metrics, logs, and error traces under one view, helping teams correlate Redis performance with upstream APIs and services for faster root-cause detection.

In this article, we’ll explore what Redis monitoring is, why it matters, key metrics, and how CubeAPM simplifies it end-to-end.

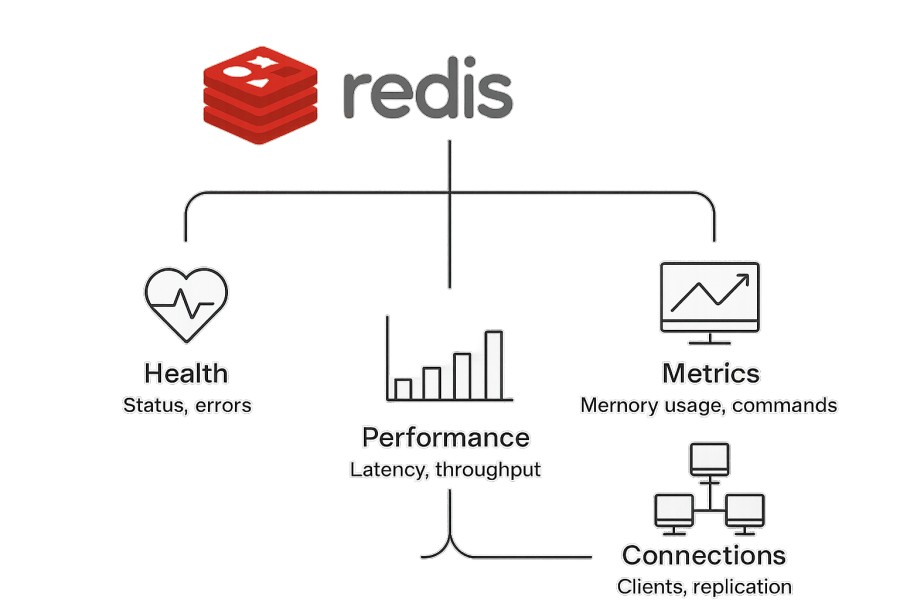

What Do You Mean by Redis Monitoring?

Redis is an open-source, in-memory data store widely used as a cache, message broker, and real-time database. It’s known for its speed and versatility, capable of processing millions of read and write operations per second with sub-millisecond latency.

Redis monitoring is the continuous process of tracking system metrics, logs, and command performance to ensure optimal memory usage, availability, and throughput. It helps detect early warning signs, such as memory fragmentation, key evictions, replication lag, or slow commands, before they impact production. For modern businesses, this means:

- Consistent performance: Identify latency spikes and optimize command execution.

- Operational resilience: Prevent data loss during failovers or replication issues.

- Cost efficiency: Monitor memory growth to optimize infrastructure usage.

- Improved user experience: Ensure fast, reliable access to cached data and sessions.

By actively monitoring Redis, DevOps and SRE teams gain the insight needed to troubleshoot issues faster, scale efficiently, and maintain high service availability, critical for today’s data-driven applications.

Example: Monitoring Redis for E-Commerce Caching

Consider a large e-commerce platform that uses Redis to cache product catalogs and shopping cart data. During a seasonal sale, traffic spikes cause increased cache writes and key evictions. Without Redis monitoring, the operations team might only notice slower checkout responses.

But with real-time monitoring through CubeAPM, metrics such as memory usage, key eviction rates, and command latency reveal the exact bottleneck. The team can quickly tune eviction policies and scale nodes, ensuring smooth transactions and zero downtime during peak load.

Why Monitoring Redis Databases Is Important

Detect latency spikes, including those from persistence operations

Redis’ single-threaded model means any expensive operation (long Lua script, big key scan) or a fork during snapshot/AOF rewrite can halt command execution momentarily. Recent research shows that default fork behavior can inflate tail latency by 80–99% on large instances, making visibility into these events essential.

Prevent memory pressure and thrashing

When memory utilization nears capacity, Redis triggers evictions based on policy. But if fragmentation is high or key usage uneven, eviction churn increases, hit rates drop, and CPU load rises. Monitoring fragmentation ratio, evicted_keys, and real per-key memory usage helps teams detect these inefficiencies early.

Maintain replication safety and cluster resilience

During write surges or network strain, Redis replicas can lag significantly. That opens a window for stale reads or data loss during primary failovers. Observing replication offsets, synchronization backlog, and shard balancing lets you intervene before impact.

Correlate Redis health with business impact

Redis often lies on the hot path—checkout caches, session stores, search indices. According to ITIC’s 2024 survey, over 90% of firms report that one hour of downtime costs more than $300,000, and 41% say the cost is $1M–$5M per hour for large incidents. With proper monitoring, you shrink the blast radius and avoid cascading failures.

Track client behavior and protect connection stability

As traffic surges, Redis may hit maxclients, reject connections, or cause client timeouts. Monitoring client count, connection errors, and blocked clients helps you protect against connection storms—or runaway pipelines.

Optimize cost and scale smartly

Memory is expensive; oversizing wastes budget. With insights into hit rate, usage trends, and eviction behavior, you can right-size your Redis fleet—avoiding surprise infrastructure spend while maintaining headroom.

Key Challenges in Monitoring Redis

Memory fragmentation & eviction turbulence

Redis keeps all data in memory. Over time, insertions, deletions, and object resizing lead to fragmented memory layouts that hurt efficiency. A high fragmentation ratio (e.g. > 1.5) means the OS RSS memory is far larger than Redis’s internal allocation, representing wasted space or allocator inefficiencies.

When memory gets full, Redis triggers evictions based on its policy (LRU, LFU, etc.). But evictions also run on the main thread, slowing down other commands. In extreme cases, eviction activity can saturate the CPU, causing latency amplification and backlog formation.

Observing command latency via Slowlog and commandstats

Redis provides the Slow Log and commandstats via INFO to show which commands are slow and which are heavy in usage. But without continuous monitoring, sudden latency changes (e.g. during a traffic burst) often go unnoticed, especially when average latency seems normal. Monitoring these logs over time is vital to uncover command-level bottlenecks and anomalies.

Replication lag, stale reads & cluster partitioning

In master-replica setups, the replica must keep up with the writes from the primary. During surges or network blips, lag increases, risking stale data reads or an inconsistent state. Because Redis replication is asynchronous, there’s always a potential delay.

Additionally, in clustered setups, node failures or misbalanced shards can fragment the cluster and undermine availability or performance. Monitoring sync offsets, sync backlog, and cluster topology changes is nontrivial.

Network saturation or throughput bottlenecks

As you scale Redis across separate hosts or across data centers, the network becomes a factor. If replication or client traffic saturates bandwidth, latency, retransmissions, or packet drops degrade performance. Monitoring input/output, bandwidth, and network errors is necessary to spot these cross-node bottlenecks.

Visibility limitations at the command/key level

A big challenge is granularity: knowing which keys or operations are causing trouble (e.g. a heavy MGET, or scanning big sets). Aggregated metrics like avg latency mask outliers or microbursts. Without tracing or per-command breakdowns, root cause diagnosis is slow. Many tools don’t support this deep level by default for Redis.

Thin native observability in distributed Redis

Redis itself exports only a basic set of metrics (via INFO) and lacks distributed tracing or built-in dashboards. In distributed or clustered environments, stitching together cross-node context is hard. Many orgs end up relying heavily on external agents, custom instrumentation, or multi-tool stacks.

Core Metrics and Signals to Monitor in Redis

Monitoring Redis effectively means watching the right mix of resource, performance, and replication indicators. Each category below focuses on the key areas that reveal the health, stability, and responsiveness of your Redis infrastructure.

Memory Utilization and Efficiency

Redis is memory-bound, so tracking its usage and allocation efficiency is essential to prevent slowdowns or eviction storms.

- used_memory: Shows the total memory used by Redis, including internal overhead. A continuous rise could indicate memory leaks or unexpired keys. Threshold: Alert when it exceeds 80–85% of total memory.

- maxmemory: Defines the configured memory limit for Redis. If unset, Redis can grow until it consumes all system memory. Threshold: Always configure this to prevent OOM (Out-of-Memory) terminations.

- mem_fragmentation_ratio: Ratio of RSS (Resident Set Size) to used_memory. Values above 1.5 suggest fragmentation and allocator inefficiencies. Threshold: Investigate when > 1.5 persistently.

- evicted_keys: Number of keys removed when Redis runs out of space. A high eviction rate signals that memory is under pressure or TTLs are too short. Threshold: Alert if sustained > 100 evictions/sec.

- expired_keys: Keys that expired due to TTL settings. Sudden spikes might indicate application-level inefficiency in TTL management. Threshold: Investigate when expired_keys jump > 20% week-over-week.
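As a rough illustration of the memory thresholds above, the following sketch parses the text returned by the Redis INFO command and flags high memory usage and fragmentation. The helper names (`parse_info`, `memory_findings`) and the sample values are hypothetical, and the limits simply mirror the suggestions in the list rather than any official recommendation.

```python
def parse_info(text):
    """Parse the 'key:value' lines returned by the Redis INFO command."""
    info = {}
    for line in text.splitlines():
        line = line.strip()
        if not line or line.startswith("#"):
            continue  # skip blank lines and section headers such as "# Memory"
        key, _, value = line.partition(":")
        info[key] = value
    return info


def memory_findings(info, usage_limit=0.85, frag_limit=1.5):
    """Return warnings based on the thresholds suggested in the list above."""
    findings = []
    used = int(info["used_memory"])
    maxmemory = int(info.get("maxmemory", 0))
    if maxmemory == 0:
        findings.append("maxmemory is not set")
    elif used / maxmemory > usage_limit:
        findings.append(f"memory usage at {used / maxmemory:.0%} of maxmemory")
    if float(info["mem_fragmentation_ratio"]) > frag_limit:
        findings.append("fragmentation ratio above limit")
    return findings


# Illustrative sample, not taken from a real instance
SAMPLE = """\
# Memory
used_memory:900000000
used_memory_rss:1500000000
mem_fragmentation_ratio:1.67
maxmemory:1000000000
"""

print(memory_findings(parse_info(SAMPLE)))
```

In practice the INFO text would come from `redis-cli info memory` or a client library; the parsing is kept separate so the threshold logic can be tested without a live server.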

Performance and Latency Metrics

These metrics reveal how fast Redis is serving commands and whether certain operations are slowing down under load.

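- latency_ms: Average time Redis takes to execute commands. Redis INFO has no field with this exact name, so the value is typically derived (for example from usec_per_call in commandstats) or measured from the client side. A growing latency under the same workload often indicates CPU saturation or blocking commands. Threshold: Keep < 2 ms for cache workloads.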

- instantaneous_ops_per_sec: Measures Redis throughput (operations per second). Sudden drops often correlate with network or CPU bottlenecks. Threshold: Investigate if throughput dips > 20% from baseline.

- slowlog_length: Number of commands logged as slow in Redis’s Slow Log. Persistent entries suggest problematic queries (e.g., KEYS, SMEMBERS). Threshold: Alert if > 5 entries persistently.

- blocked_clients: Clients waiting for blocking operations like BLPOP or BRPOP. High numbers can indicate poor workload distribution or unoptimized queries. Threshold: Watch if blocked_clients > 5 % of total connections.

- commandstats: Provides per-command execution count and average runtime, useful for identifying heavy commands like MGET or HGETALL. Threshold: Review top commands weekly for imbalance.
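To make the commandstats review above more concrete, here is a minimal sketch that ranks commands by average runtime. It assumes the `cmdstat_<name>:calls=...,usec=...,usec_per_call=...` line format produced by `INFO commandstats`; the function names and the sample numbers are made up for illustration.

```python
def parse_commandstats(text):
    """Parse 'cmdstat_<name>:calls=..,usec=..,usec_per_call=..' lines."""
    stats = {}
    for line in text.splitlines():
        line = line.strip()
        if not line.startswith("cmdstat_"):
            continue  # ignore section headers and unrelated lines
        name, _, fields = line.partition(":")
        values = dict(pair.split("=", 1) for pair in fields.split(","))
        stats[name[len("cmdstat_"):]] = {
            "calls": int(values["calls"]),
            "usec_per_call": float(values["usec_per_call"]),
        }
    return stats


def slowest_commands(stats, top=3):
    """Return command names ordered by average microseconds per call."""
    ranked = sorted(stats.items(), key=lambda kv: kv[1]["usec_per_call"], reverse=True)
    return [name for name, _ in ranked[:top]]


# Illustrative sample, not taken from a real instance
SAMPLE = """\
# Commandstats
cmdstat_get:calls=120000,usec=240000,usec_per_call=2.00
cmdstat_hgetall:calls=900,usec=405000,usec_per_call=450.00
cmdstat_mget:calls=5000,usec=600000,usec_per_call=120.00
"""

print(slowest_commands(parse_commandstats(SAMPLE), top=2))
```

Ranking by `usec_per_call` highlights expensive commands even when they are called rarely; sorting by total `usec` instead would show where the server spends most of its time.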

Replication and Cluster Health

Replication ensures data durability and high availability. Monitoring replication lag, sync state, and cluster slots is vital to avoid stale reads or data divergence.

- master_repl_offset: Current replication byte offset for the primary. Compare with replica offsets to calculate lag. Threshold: Investigate lag > 10 MB or consistent growth.

- repl_backlog_active: Indicates if the replication backlog is active and sufficient. Inactive or undersized backlog can cause full resyncs after short disconnections. Threshold: Keep backlog at least 1 MB or 1% of DB size.

- slave_repl_offset: Replica’s current replication byte offset. The gap between this value and the primary’s master_repl_offset shows replication delay in bytes. Threshold: A time-based lag above 1000 ms suggests a network or I/O bottleneck.

- sync_full: Counts full resyncs since startup. Frequent resyncs consume bandwidth and block replication temporarily. Threshold: Investigate if > 3 per hour.

- cluster_state: Reports if the cluster is healthy (“ok”) or degraded (“fail”). Threshold: Immediate alert if status != ok.
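The offset comparison described above can be sketched as follows. This assumes the primary's `INFO replication` output lists each replica on a `slaveN:ip=...,port=...,state=...,offset=...,lag=...` line alongside `master_repl_offset`; the function name, addresses, and offsets are illustrative only.

```python
import re


def replica_lag_bytes(info_text):
    """Return {replica address: bytes behind the primary} from INFO replication text."""
    master_offset = None
    replica_offsets = {}
    for line in info_text.splitlines():
        key, _, value = line.strip().partition(":")
        if key == "master_repl_offset":
            master_offset = int(value)
        elif re.fullmatch(r"slave\d+", key):
            fields = dict(pair.split("=", 1) for pair in value.split(","))
            address = f"{fields['ip']}:{fields['port']}"
            replica_offsets[address] = int(fields["offset"])
    if master_offset is None:
        raise ValueError("master_repl_offset not found")
    return {addr: master_offset - off for addr, off in replica_offsets.items()}


# Illustrative sample, not taken from a real instance
SAMPLE = """\
# Replication
role:master
connected_slaves:2
slave0:ip=10.0.0.2,port=6379,state=online,offset=104857600,lag=0
slave1:ip=10.0.0.3,port=6379,state=online,offset=90000000,lag=3
master_repl_offset:104857600
"""

LAG_LIMIT = 10 * 1024 * 1024  # the 10 MB threshold suggested above
lags = replica_lag_bytes(SAMPLE)
print([addr for addr, lag in lags.items() if lag > LAG_LIMIT])
```

A single snapshot can exaggerate lag during a write burst, so it is usually more informative to track this value over time and alert on sustained growth.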

Connections and Network Health

Client and network metrics reveal throughput issues, overload, or connection churn that often signal application-side inefficiencies.

- connected_clients: Number of open client connections. Consistent growth indicates possible connection leaks or missing pooling. Threshold: Investigate if > 90% of maxclients.

- rejected_connections: Connections refused due to reaching the client limit. This often precedes application errors. Threshold: Immediate alert if > 0 per minute.

- network_input_bytes / network_output_bytes: Volume of incoming and outgoing traffic. Sharp asymmetry might indicate unbalanced read/write workloads. Threshold: Review when deviation > 30 % from baseline.

- instantaneous_input_kbps / instantaneous_output_kbps: Real-time network throughput. Dropping throughput despite normal ops/sec often points to I/O contention. Threshold: Investigate if decline > 20 % over 5 min.
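As a small sketch of the connection checks above, the function below compares two successive samples of client counters. It assumes `maxclients` is supplied from the server configuration, and it treats `rejected_connections` as a cumulative counter that resets on restart; the dictionary layout and function name are hypothetical.

```python
def connection_findings(prev, curr, maxclients, saturation=0.90):
    """Flag client saturation and newly rejected connections between two samples."""
    findings = []
    usage = curr["connected_clients"] / maxclients
    if usage > saturation:
        findings.append(f"connected_clients at {usage:.0%} of maxclients")
    rejected = curr["rejected_connections"] - prev["rejected_connections"]
    if rejected < 0:
        # Counter went backwards, most likely because the server restarted
        rejected = curr["rejected_connections"]
    if rejected > 0:
        findings.append(f"{rejected} connections rejected since last sample")
    return findings


# Illustrative samples, one scrape interval apart
previous = {"connected_clients": 9100, "rejected_connections": 12}
current = {"connected_clients": 9600, "rejected_connections": 15}
print(connection_findings(previous, current, maxclients=10000))
```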

Persistence and Durability

If your Redis instance uses RDB or AOF, monitoring persistence behavior is critical to prevent data loss and performance stalls during disk I/O.

- rdb_last_save_time: Timestamp of the last successful RDB snapshot. Delays indicate backup issues or blocked forks. Threshold: Alert if no save in > 1 hour for critical data.

- aof_last_rewrite_time_sec: Duration of the last AOF rewrite. Longer times imply disk contention or large append-only files. Threshold: Investigate if rewrite > 60 seconds.

- aof_pending_rewrite: Shows whether an AOF rewrite is currently queued. Continuous pending rewrites signal disk I/O pressure. Threshold: Alert if pending > 10 minutes.

- rdb_bgsave_in_progress: Flag showing if a background save is ongoing. Overlapping saves can spike latency due to fork overhead. Threshold: Ensure saves aren’t back-to-back within 5 minutes.
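The persistence thresholds above could be checked along these lines. The sketch assumes `rdb_last_save_time` is a Unix timestamp and `aof_last_rewrite_time_sec` a duration in seconds, as reported by `INFO persistence`; the function name, limits, and sample values are illustrative.

```python
import time


def persistence_findings(info, now=None, max_save_age=3600, max_rewrite_sec=60):
    """Flag stale RDB snapshots and slow AOF rewrites."""
    now = time.time() if now is None else now
    findings = []
    save_age = now - info["rdb_last_save_time"]
    if save_age > max_save_age:
        findings.append(f"last RDB save was {int(save_age)}s ago")
    if info["aof_last_rewrite_time_sec"] > max_rewrite_sec:
        findings.append(f"last AOF rewrite took {info['aof_last_rewrite_time_sec']}s")
    return findings


# Illustrative values; 'now' is fixed so the result is reproducible
sample = {"rdb_last_save_time": 1_700_000_000, "aof_last_rewrite_time_sec": 75}
print(persistence_findings(sample, now=1_700_004_000))
```

Passing `now` explicitly keeps the check deterministic in tests; in production it would default to the current time.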

CPU and System Performance

Redis runs single-threaded for commands but uses extra threads for I/O and persistence. CPU monitoring ensures saturation and contention are controlled.

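- used_cpu_sys / used_cpu_user: Cumulative system and user CPU time (in seconds) consumed by the Redis server; compare successive samples to derive a utilization percentage. A spike in sys CPU may reflect heavy context switching or network I/O issues. Threshold: Investigate if CPU > 85 % for > 10 min.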

- used_cpu_sys_children / used_cpu_user_children: CPU used by child processes (forks for AOF/RDB). Sustained high values signal frequent persistence tasks. Threshold: Monitor if child CPU > 20 % of total.

- total_system_memory: Ensures host memory suffices for Redis plus OS overhead. Threshold: Keep 20–25 % buffer unallocated.
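Because used_cpu_sys and used_cpu_user are reported as cumulative CPU seconds rather than percentages, a utilization figure has to be derived from two samples. The sketch below shows one way to do that; the function name and sample values are hypothetical, and the result is relative to a single core.

```python
def cpu_percent(prev, curr, interval_seconds):
    """Convert cumulative CPU seconds from two INFO samples into a percentage."""
    if interval_seconds <= 0:
        raise ValueError("interval_seconds must be positive")
    busy_before = prev["used_cpu_sys"] + prev["used_cpu_user"]
    busy_after = curr["used_cpu_sys"] + curr["used_cpu_user"]
    return 100.0 * (busy_after - busy_before) / interval_seconds


# Illustrative samples taken 10 seconds apart
earlier = {"used_cpu_sys": 100.0, "used_cpu_user": 200.0}
later = {"used_cpu_sys": 103.0, "used_cpu_user": 206.0}

usage = cpu_percent(earlier, later, interval_seconds=10)
print(f"{usage:.0f}% of one core, above 85% limit: {usage > 85}")
```

The same delta approach applies to the `_children` counters when estimating how much CPU persistence forks consume.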

Keyspace and Hit Ratios

These metrics reveal cache health and operational efficiency by tracking how keys are created, expired, or missed.

- keyspace_hits / keyspace_misses: Ratio of cache hits vs misses. A dropping hit rate means inefficient caching or key churn. Threshold: Maintain hit rate > 85 %.

- dbN_keys / dbN_expires: Keys count and expiration stats for each database index (db0, db1, etc.). Rapid growth could indicate TTL misconfigurations or memory overuse. Threshold: Watch for > 10 % key growth/hour.

- avg_ttl: Average TTL for all expiring keys. Very low averages can stress Redis with frequent key churn. Threshold: Review if avg_ttl < 5 min for large datasets.
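The hit-rate and keyspace checks above reduce to a little arithmetic. The following sketch assumes the `dbN:keys=...,expires=...,avg_ttl=...` line format from `INFO keyspace`, with avg_ttl expressed in milliseconds; the function names and numbers are illustrative.

```python
def hit_rate(hits, misses):
    """Fraction of lookups served from the cache, or None if there were none."""
    total = hits + misses
    return hits / total if total else None


def parse_keyspace_line(line):
    """Turn 'db0:keys=1500,expires=300,avg_ttl=120000' into ('db0', {...})."""
    db, _, fields = line.strip().partition(":")
    stats = {}
    for pair in fields.split(","):
        name, _, value = pair.partition("=")
        stats[name] = int(value)
    return db, stats


# Illustrative values, not taken from a real instance
rate = hit_rate(hits=8200, misses=1800)
db, stats = parse_keyspace_line("db0:keys=1500,expires=300,avg_ttl=120000")

print(f"hit rate {rate:.0%}, below 85% target: {rate < 0.85}")
print(f"{db}: {stats['keys']} keys, avg_ttl {stats['avg_ttl'] / 60000:.1f} min")
```

Since keyspace_hits and keyspace_misses are cumulative since startup, computing the rate from the difference between two samples gives a more current picture than the lifetime totals.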

How to Monitor Redis Using CubeAPM

Below is a Redis-specific, production-grade workflow SREs can follow. Each step links to the relevant CubeAPM docs.

Step 1: Install CubeAPM (SaaS or Self-Hosted)

Deploy CubeAPM and note your base URL and auth token (required for alerting and user access). For Kubernetes, use the official Helm chart; for VM/Docker, follow the platform guides. Keep storage and network settings sized for metrics/logs throughput.

Step 2: Prepare the OpenTelemetry Collector close to Redis

Run the OpenTelemetry Collector (contrib) on the same host/VPC/cluster as Redis. This collector will scrape Redis metrics, parse Redis logs (optional), and export everything to CubeAPM over OTLP. Install the collector using the Infra Monitoring guides (Kubernetes or VM) and confirm outbound access to your CubeAPM endpoint.

Step 3: Scrape Redis metrics (Prometheus exporter → OTel → CubeAPM)

For the richest Redis signals (slowlog length, memory, ops, replication, keyspace), deploy the standard Redis Prometheus exporter (e.g., redis_exporter) and scrape its /metrics with the Collector’s prometheus receiver. Then export to CubeAPM. The “Prometheus Metrics” guide shows the pattern—adapt the job/target to your exporter service (redis-exporter:9121).

Example (collector snippet—abbreviated):

    receivers:
      prometheus:
        config:
          scrape_configs:
            - job_name: "redis"
              scrape_interval: 15s
              static_configs:
                - targets: ["redis-exporter:9121"]

    processors:
      resource:
        attributes:
          - key: service.name
            value: redis-cluster
            action: upsert

    exporters:
      otlp:
        # Set to your CubeAPM OTLP endpoint and auth per your deployment
        endpoint: https://<your-cubeapm-base-url>/otlp
        # optional headers/tls as required by your setup

    service:
      pipelines:
        metrics:
          receivers: [prometheus]
          processors: [resource]
          exporters: [otlp]

Step 4: Ingest Redis logs (server log and/or slow events)

Ship Redis server logs (e.g., /var/log/redis/redis-server.log) to CubeAPM for correlation with metrics/traces. You can use the Collector’s filelog receiver or your preferred log agent (Vector/Fluent/Loki) and export to CubeAPM. Ensure logs carry service.name, host, and (if possible) trace_id for MELT correlation. See the Logs section for ingestion formats, field handling, and search.
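To show what structured Redis log records might look like before shipping, here is a minimal parsing sketch. It assumes the default Redis file log layout (`pid:role timestamp level message`, where the level is one of `.`, `-`, `*`, `#`); the function name, the `service.name` default, and the sample line are illustrative, and a real pipeline would more likely do this in the log agent's own parser configuration.

```python
import re

# Assumed default Redis log layout: "<pid>:<role> <dd Mon yyyy hh:mm:ss.mmm> <level> <message>"
LOG_LINE = re.compile(
    r"^(?P<pid>\d+):(?P<role>[XCSM]) "
    r"(?P<timestamp>\d{1,2} \w{3} \d{4} \d{2}:\d{2}:\d{2}\.\d{3}) "
    r"(?P<level>[.\-*#]) (?P<message>.*)$"
)
LEVELS = {".": "debug", "-": "verbose", "*": "notice", "#": "warning"}
ROLES = {"X": "sentinel", "C": "child", "S": "replica", "M": "master"}


def parse_log_line(line, service_name="redis-cluster"):
    """Return a dict of fields for one log line, or None if it does not match."""
    match = LOG_LINE.match(line.strip())
    if match is None:
        return None
    record = match.groupdict()
    record["pid"] = int(record["pid"])
    record["level"] = LEVELS[record["level"]]
    record["role"] = ROLES[record["role"]]
    record["service.name"] = service_name  # attribute used for correlation
    return record


# Illustrative line, not taken from a real server
line = "1234:M 12 Oct 2024 10:15:32.123 * Background saving started by pid 5678"
print(parse_log_line(line))
```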

Step 5: Capture application traces with Redis spans

Instrument the applications that call Redis (Node, Python, Java, .NET, etc.) using OpenTelemetry SDKs and send OTLP directly to CubeAPM. This gives you end-to-end traces where Redis operations (e.g., GET, MGET, HGETALL) appear as spans alongside API/db calls. CubeAPM natively understands OTLP, and the docs list language starters to get you emitting traces quickly.

Step 6: Correlate infra and Redis context

Enable host/container metrics (CPU, RSS, I/O, network) so you can explain Redis symptoms (e.g., fork spikes, RSS growth, NIC saturation). Install the Collector on bare metal/VM or as a DaemonSet on Kubernetes, then add hostmetrics to the same OTLP pipeline so dashboards show Redis and system signals together.

Step 7: Create Redis-specific alerts and connect notifications

In CubeAPM, wire alert delivery (Email is one click; Slack, PagerDuty, Jira, Opsgenie are supported by config). Start with practical Redis thresholds:

- Memory pressure: used memory > 85% of maxmemory for 5m

- Evictions: sustained evicted_keys > baseline + 3σ

- Slowlog growth: slowlog length increasing > N/5m

- Replication lag: replica offset delay > 1000ms

Use the Alerting docs for email setup and the Configure reference for other channels (SMTP, Slack, PagerDuty, Jira/Opsgenie tokens).
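The "baseline + 3σ" eviction threshold above can be illustrated with a short calculation. This is only a sketch: the function names are hypothetical, the baseline is assumed to be a list of per-second eviction rates from a quiet period, and "sustained" is interpreted here as every recent sample exceeding the threshold.

```python
import statistics


def eviction_threshold(baseline_rates, sigmas=3.0):
    """Mean of the baseline plus N population standard deviations."""
    mean = statistics.mean(baseline_rates)
    stdev = statistics.pstdev(baseline_rates)
    return mean + sigmas * stdev


def is_sustained(recent_rates, threshold):
    """True only when there are samples and all of them exceed the threshold."""
    return bool(recent_rates) and all(rate > threshold for rate in recent_rates)


# Illustrative eviction rates (keys per second)
baseline = [10, 12, 8, 10, 10]
threshold = eviction_threshold(baseline)

print(f"threshold: {threshold:.2f} evictions/sec")
print(is_sustained([20, 25, 18], threshold))  # every sample is above the threshold
print(is_sustained([20, 5, 18], threshold))   # one sample dips below it
```

Requiring every sample in the window to breach the threshold plays the same role as a `for:` duration in an alert rule: brief spikes are ignored, and only persistent pressure triggers a notification.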

Step 8: Build focused Redis dashboards (metrics + logs + traces)

Create panels for: memory & fragmentation (used_memory, mem_fragmentation_ratio), keyspace health (hits/misses, expired_keys, evicted_keys), latency (slowlog_length, P99 span latency), replication (master/replica offsets, full sync counts), and network/CPU overlays. With OTLP traces, add service maps and drill-downs from slow API routes to the exact Redis spans and correlated log lines. (Infra/Logs/OTLP capabilities referenced in docs.)

Step 9: Validate the pipeline and load-test hot paths

Smoke-test by generating Redis traffic (e.g., redis-benchmark or a staging replay) and confirm you see metrics in near-real time, logs searchable, and traces with Redis spans in CubeAPM. Verify that alerts fire to your chosen channel and dashboards update under load. Use the Install/Infra docs if you need to tune chart values or resource limits in Kubernetes.

With these steps, you’ll have end-to-end Redis observability in CubeAPM: exporter-backed metrics, searchable logs, and application traces, all correlated to accelerate root-cause analysis and keep latency and evictions in check.

Real-World Example: Redis Monitoring with CubeAPM

Challenge: Intermittent Latency in a High-Traffic Fintech App

A fintech company running microservices on Kubernetes began noticing sporadic API latency spikes during trading hours. Their Redis cluster (used for caching session tokens and market data) was showing inconsistent performance — sometimes under 1 ms latency, and at other times spiking to over 200 ms.

While redis-cli info showed normal memory usage, users experienced slow logins and delayed order updates. The ops team lacked unified visibility into whether the issue stemmed from Redis, the application layer, or the underlying Kubernetes nodes.

Solution: Full Redis Observability with CubeAPM

They deployed the CubeAPM OpenTelemetry Collector on each Redis node following Infra Monitoring for Kubernetes. Using the Redis Prometheus exporter, they scraped metrics such as latency_ms, evicted_keys, and used_memory.

Next, they instrumented their Go-based trading API with CubeAPM’s OpenTelemetry SDK so Redis spans (e.g., MGET, EXPIRE) were automatically captured within traces. Redis server logs were ingested using the Logs ingestion guide and tagged by service name for correlation.

Within CubeAPM’s unified dashboard, the team visualized Redis latency alongside CPU usage, network I/O, and command execution time, enabling real-time MELT correlation (Metrics, Events, Logs, Traces).

Fixes: Optimizing Memory and Network Throughput

Using CubeAPM’s trace explorer, engineers discovered latency spikes aligning with fork() operations during AOF rewrites and replication sync traffic saturating the network. They increased repl-backlog-size, moved the replica to a separate node pool, and scheduled AOF rewrites during off-peak hours.

CubeAPM’s alerting module (set up via Email Alerting Configuration) was configured to trigger warnings if redis_latency_ms > 2 or evicted_keys > 100/sec persisted for over five minutes.

Result: 45% Reduction in P99 Latency and Predictable Performance

After deploying these optimizations, P99 Redis latency dropped by 45%, and API response times stabilized across all trading sessions. With CubeAPM, the company gained a single-pane view of their Redis performance — from cluster metrics to transaction traces — allowing proactive tuning and faster incident resolution.

By unifying Redis observability under CubeAPM, the team not only reduced downtime but also established consistent, data-driven performance baselines for future scaling.

Verification Checklist and Alert Rules for Redis Monitoring with CubeAPM

Verification Checklist for Redis Monitoring

Before you roll Redis monitoring into production, run this checklist to make sure CubeAPM is capturing every key signal and correlation you’ll need for observability. Each verification point ensures that the data pipeline — from metrics to traces — is healthy and complete.

- Exporter connected: Confirm that your Redis metrics exporter is reachable by the OpenTelemetry Collector and that data is flowing into CubeAPM’s Redis dashboard. You should see metrics like used_memory, evicted_keys, and instantaneous_ops_per_sec updating in real time.

- Slowlog captured: Verify that Redis slowlog entries are visible in CubeAPM under latency or trace views. The slowlog_length metric and related traces should reflect command-level execution times.

- Replication health: Ensure replica nodes are reporting master_repl_offset and slave_repl_offset. CubeAPM dashboards should display replication lag and sync offsets without data gaps.

- Alert rules active: Validate that alert rules for memory usage, latency, and evictions are configured and firing correctly. Trigger a test by temporarily reducing maxmemory or simulating load, and confirm alerts appear in your configured channels (email, Slack, or PagerDuty).

- Traces linked: Check that Redis spans are appearing inside distributed traces for your application. In CubeAPM’s service map, Redis should be linked to upstream APIs or microservices, showing latency correlation and query breakdowns across the stack.

Once all these checks pass, you can confidently go live knowing that CubeAPM is providing full-stack Redis observability, unifying metrics, logs, and traces into a single, actionable view.
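Part of the "exporter connected" check can be automated by inspecting the exporter's /metrics output for the series your alerts depend on. The sketch below works on Prometheus text exposition format; the function names are hypothetical, and the expected metric names are taken from the alert rules in this article, so they should be adjusted to whatever your exporter actually emits.

```python
def metric_names(exposition_text):
    """Collect metric names from Prometheus text exposition output."""
    names = set()
    for line in exposition_text.splitlines():
        line = line.strip()
        if not line or line.startswith("#"):
            continue  # skip blank lines plus HELP/TYPE comments
        # A sample line is either 'name value' or 'name{labels} value'
        names.add(line.split("{", 1)[0].split(" ", 1)[0])
    return names


def missing_metrics(exposition_text, expected):
    """Return the expected metric names that do not appear in the output."""
    return sorted(set(expected) - metric_names(exposition_text))


# Illustrative scrape output, not captured from a real exporter
SAMPLE = """\
# HELP redis_memory_used_bytes used_memory
# TYPE redis_memory_used_bytes gauge
redis_memory_used_bytes 900000000
redis_memory_max_bytes 1000000000
redis_db_keys{db="db0"} 1500
"""

EXPECTED = ["redis_memory_used_bytes", "redis_memory_max_bytes", "redis_slowlog_length"]
print(missing_metrics(SAMPLE, EXPECTED))
```

Running a check like this against the exporter endpoint before go-live helps catch alert rules that would never fire because the underlying series is absent.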

Example Alert Rules for Redis Monitoring

1. Memory Utilization Breach

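    alert: RedisMemoryHigh
    # The second condition skips instances where maxmemory is unset (reported as 0)
    expr: redis_memory_used_bytes / redis_memory_max_bytes > 0.85 and redis_memory_max_bytes > 0
    for: 5m
    labels:
      severity: warning
    annotations:
      description: "Redis memory usage exceeded 85%"

2. Slow Command Detection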

    alert: RedisSlowCommands
    # redis_slowlog_length is a gauge, so delta() is used instead of rate()
    expr: delta(redis_slowlog_length[5m]) > 5
    for: 10m
    labels:
      severity: critical
    annotations:
      description: "More than 5 slow commands per 5 minutes"

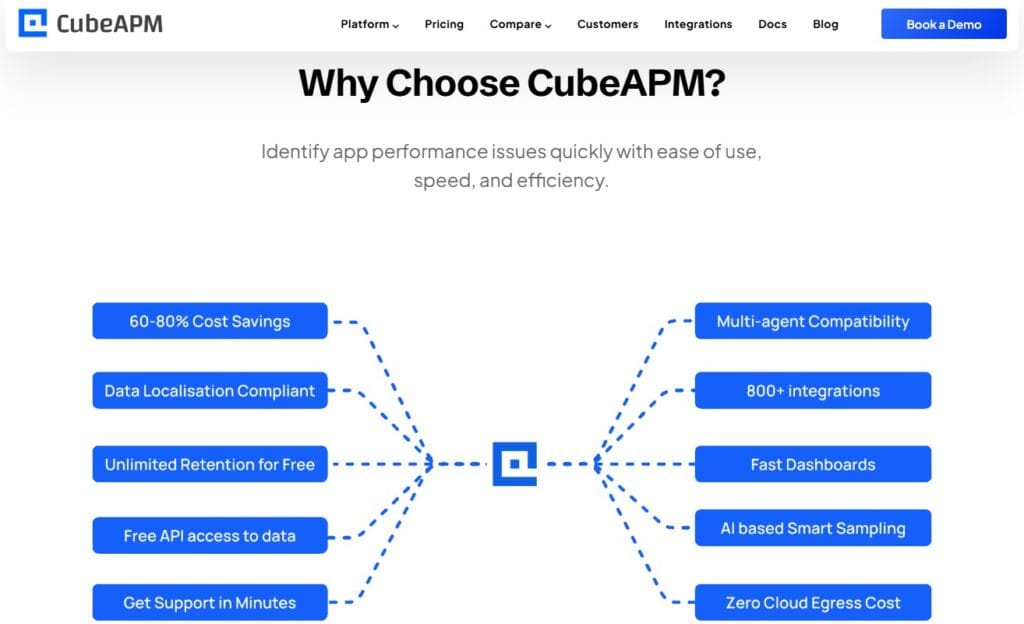

Why Use CubeAPM for Redis Monitoring

Choosing CubeAPM for Redis monitoring gives DevOps and SRE teams a unified, OpenTelemetry-native platform built for performance, compliance, and cost efficiency. Here’s why it stands out in production environments:

- Transparent pricing: CubeAPM follows a pricing model of $0.15/GB of data ingested, with no hidden fees or per-host/container billing. This means even large Redis clusters can be monitored at predictable costs, unlike traditional APMs that charge per node or feature.

- Full MELT observability: CubeAPM captures Metrics, Events, Logs, and Traces from Redis in a single view. You can visualize real-time memory usage, track eviction bursts, correlate slow commands with traces, and analyze event timelines — all within one platform.

- OpenTelemetry-native monitoring: Built entirely around the OpenTelemetry (OTel) standard, CubeAPM integrates seamlessly with Redis exporters and OTel collectors. There’s no proprietary lock-in, allowing Redis metrics and spans to flow directly from your existing OTel pipeline.

- Smart sampling for cost control: During peak traffic or memory spikes, CubeAPM’s adaptive smart sampling ensures you capture statistically significant Redis traces without ballooning data costs. You get deep visibility while maintaining budget predictability.

- Flexible deployment options: CubeAPM can be deployed as a fully managed SaaS for instant scalability or self-hosted within your private cloud or on-premises environment. Both models are HIPAA and GDPR compliant, ensuring secure observability for regulated industries.

- Integrated alerting and collaboration: CubeAPM connects seamlessly with your existing workflows — whether it’s Slack, Microsoft Teams, or WhatsApp — to deliver instant notifications for Redis anomalies like high latency, memory saturation, or replication lag.

With CubeAPM, Redis monitoring moves beyond isolated dashboards. It delivers end-to-end visibility across applications, infrastructure, and databases — empowering teams to detect, diagnose, and resolve Redis performance issues before they impact users.

Conclusion

Monitoring Redis is essential for ensuring consistent application performance, stability, and user experience in modern, data-intensive systems. Without visibility into memory utilization, command latency, and replication health, small inefficiencies can quickly escalate into outages and data inconsistency.

CubeAPM provides the complete observability stack for Redis — combining metrics, logs, and traces through an OpenTelemetry-native architecture. It simplifies Redis performance tracking, detects slow commands, monitors memory fragmentation, and correlates database behavior with upstream API performance.

By choosing CubeAPM, DevOps teams gain real-time insights, transparent pricing, and proactive alerting, helping prevent downtime and optimize Redis clusters at scale. Start monitoring Redis with CubeAPM today and achieve predictable performance with full MELT visibility.

Disclaimer: The information in this article reflects the latest details available at the time of publication and may change as technologies and products evolve.

1. What is Redis monitoring used for?

Redis monitoring helps track metrics such as latency, memory usage, key evictions, and replication lag to maintain database performance, prevent downtime, and optimize caching efficiency.

2. How do I monitor Redis performance effectively?

You can use OpenTelemetry-compatible exporters and dashboards in CubeAPM to visualize Redis health in real time, analyze slowlog trends, and set automated alerts for anomalies.

3. What are the most important Redis metrics to monitor?

Key metrics include used_memory, mem_fragmentation_ratio, evicted_keys, latency_ms, master_repl_offset, and connected_clients, which together reveal performance and stability.

4. How does CubeAPM help monitor Redis clusters?

CubeAPM aggregates Redis metrics, logs, and spans from multiple nodes, showing replication lag, keyspace efficiency, and latency trends — all correlated within a single observability dashboard.

5. Can CubeAPM send alerts for Redis issues?

Yes. CubeAPM’s alerting engine allows you to trigger notifications for high memory usage, slow commands, or connection saturation, delivered instantly via Slack, Email, or PagerDuty integrations.